Reflecting on some of the discussions I was in recently, I can only say that there is no escaping reality. Here are some reference blogs for you.

- April 24th–Windows 2003 Is 10 Years Old

- Failed at dumping XP in a timely fashion? Reassert yourself by doing better with Windows Server 2003!

- Legacy Apps Preventing Your Move From Windows XP to Windows 8.1?

- The Zombie ISV®

You can’t get off Windows 2003, you say? Held hostage by ancient software from a previous century? Sure, I understand your problems and perils. But we do not negotiate with hostage takers. We get rid of them. Be realistic: do you think this is somehow going to get any better with age? What about in 24 months? What about 48? You get the drift. What’s bad now will only be horrible in x amount of time.

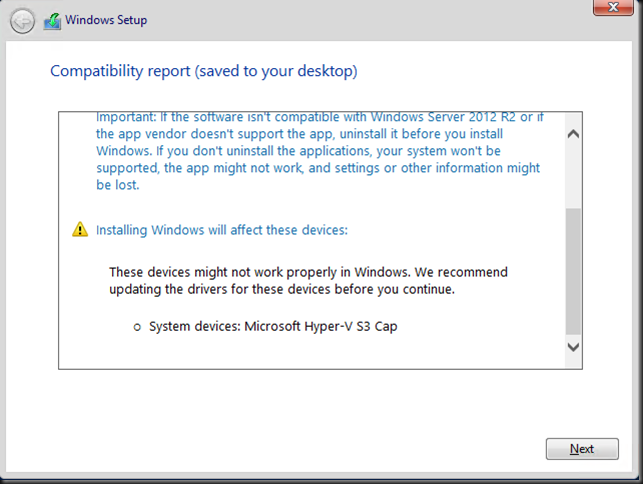

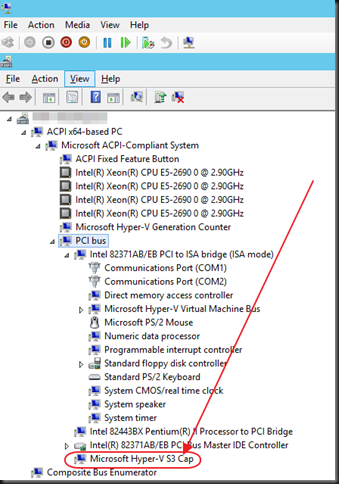

Look at some issues people run into already:

- Windows XP Clients Cannot Execute Logon Scripts against a Windows Server 2012 R2 Domain Controller – Workaround

- It turns out that weird things can happen when you mix Windows Server 2003 and Windows Server 2012 R2 domain controllers

Issues like these are not going to go away, and new ones will pop up. Are you going to keep everything in your infrastructure frozen in time to try to avoid them? That’s not even coping, that’s suffering.

Whatever it is that’s blocking you, tomorrow is when you start planning to deal with it and executing on that plan. Don’t be paralyzed by fear or indecision. By its end of life, Windows 2003 will have been a supported OS for over 12 years. It had a really good run, but now it’s over. Let it go before it hurts you. You see no added value in a more recent version of Windows? Really? We need to talk, seriously.

UPDATE: Inspired by Aidan Finn (@joe_elway), who offered a very good picture to get the message across. Click the picture to get the soundtrack! LET IT GO!