Introduction

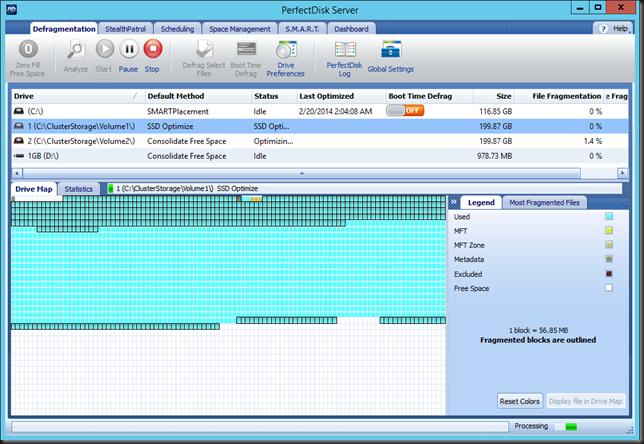

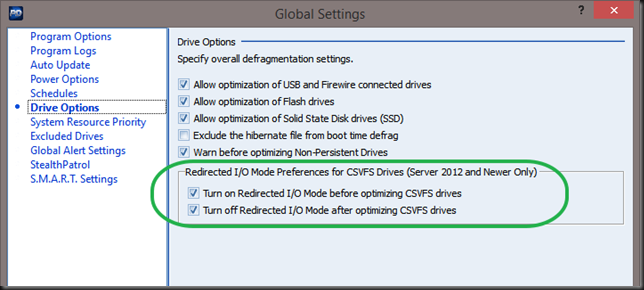

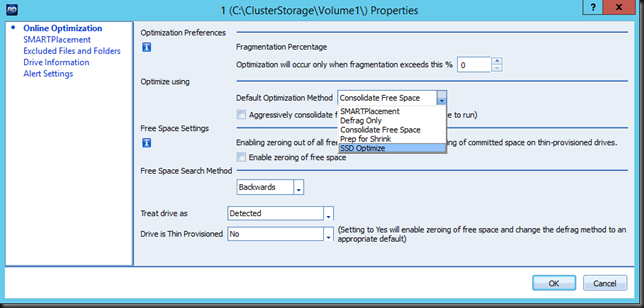

Cluster Shared Volumes (CSVs) will come online without Active Directory if certain conditions are met. Basically, they come online when the cluster can form. That’s what we’ll talk about here. There are a lot of things to consider when virtualizing your Active Directory environment, partially or completely. That’s too much for this blog post alone and much of it has been discussed before. Here I’ll discuss the case where people count on their Cluster Shared Volumes coming online when Active Directory is not available, are disappointed when they don’t, and think that it’s a bug or a broken promise. It is not! Since Windows Server 2012, CSVs coming online does not depend on Active Directory being available (thanks to the local CLIUSR account being used for cluster starts).

Cluster Shared Volumes without Active Directory

So, let’s address getting your Cluster Shared Volumes online when AD is not available. The thing to realize is that not having Active Directory has been taken care of. What can still go wrong is that the cluster doesn’t come online properly, and that means the CSV LUNs won’t be online either, as those are cluster resources. Hyper-V can boot the VMs without the cluster being formed, but the VMs reside on a CSV and those are not available; that’s what’s causing the problem. That means that getting your cluster to come up is the real issue.

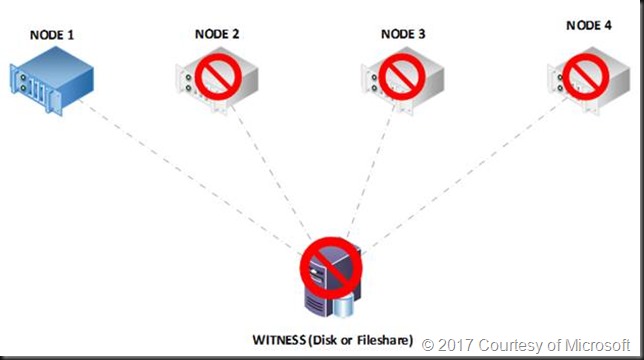

If Cluster Shared Volumes can come up without a domain controller / AD being available since Windows Server 2012 (Failover Clustering and Active Directory Integration), how is it that so many people still have issues with it? How do you make this work for you? You can use a disk witness to protect you in most cases. When you have a file share or cloud witness, you can take some steps to avoid running into this issue.

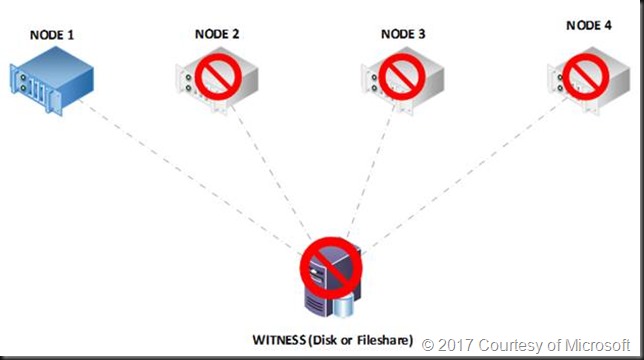

There is a great article on cluster behavior here: Failover Cluster Node Startup Order in Windows Server 2012 R2 (the same rules apply for Windows Server 2016). Read it and you’ll notice that the behavior differs depending on whether you have a witness defined and whether that witness is a disk witness or a file share or cloud witness. The disk witness has a copy of the cluster database. If it is available, it will help you out of any situation where the paxos on the disk witness is more recent than the one on the cluster node, as the node will download the cluster database info from the disk witness. A file share or cloud witness doesn’t have a copy, so it has a small disadvantage in certain scenarios. CSVs that won’t come up after booting the nodes in the wrong order with a file share or cloud witness is one of those scenarios, but not having AD isn’t the root cause of this.
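
To make the difference between the witness types concrete, here is a minimal sketch in Python. It is a toy model of the comparison described above, not the actual cluster service logic. The names `WitnessType`, `Witness` and `first_node_outcome` are made up for this illustration, and the paxos numbers are arbitrary integers.

```python
from dataclasses import dataclass
from enum import Enum


class WitnessType(Enum):
    DISK = "disk"
    FILE_SHARE = "file share"
    CLOUD = "cloud"


@dataclass
class Witness:
    kind: WitnessType
    paxos: int  # most recent paxos number the witness knows about

    @property
    def has_database_copy(self) -> bool:
        # As described in the post: only a disk witness holds a copy
        # of the cluster database.
        return self.kind is WitnessType.DISK


def first_node_outcome(node_paxos: int, witness: Witness) -> str:
    """Toy model: what happens when a single node boots first."""
    if node_paxos >= witness.paxos:
        # The node's local copy is current, so it can be used directly.
        return "forms cluster with local database"
    if witness.has_database_copy:
        # The node is behind, but it can fetch the newer copy from the disk witness.
        return "forms cluster after updating from disk witness"
    # The node is behind and the witness has nothing to download.
    return "cannot form cluster"


if __name__ == "__main__":
    # A node that is behind (paxos 3) boots first against each witness type (paxos 5).
    for kind in WitnessType:
        print(kind.value, "->", first_node_outcome(3, Witness(kind, paxos=5)))
```

In this model a node that is behind only gets stuck when the witness has no database copy, which is exactly the file share or cloud witness case.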

Some people will never notice this issue at all, especially when they have a disk witness, but when they do, it might be at a very inconvenient moment. An example of where this situation can occur is a single-cluster environment where the domain controllers run on CSVs and are highly available in that cluster, the cluster has a file share witness, it was shut down completely and the nodes are started in the wrong order.

Making sure your CSVs come online = clustering being formed

Well, for one, make sure you are using Windows Server 2012 or higher for your cluster. That’s a given.

Beyond that, you basically just need to know what to do in which scenario to get your cluster up and running so you won’t have issues with getting the CSVs to come up. You just have to follow some rules of thumb and you’ll be fine. Also, there’s almost always a way to get out of a pickle, just don’t panic. But also remember that you can make your life easier when you design your solutions with failure in mind and know your options so you can act correctly. In my example I’ll be using a Hyper-V cluster with CSVs, but the same goes for SOFS, SQL Server clusters leveraging CSVs, etc.

Planned downtime

If you’re shutting down a complete cluster, you have two options to make sure things go as smoothly as possible.

Option 1 – A clean cluster shutdown by the book

- Shut down the workload, i.e. the VMs.

- Stop the cluster.

- Shut down the cluster nodes.

- Boot the cluster nodes. You can start with any one you like. The CSVs will come online. You will be able to start the VMs. Do start with the domain controllers and wait for them to come online before starting the others.

During this you’ll see some “collateral” events, errors and warnings due to Active Directory not being available. The cluster name has issues without Active Directory, but that is a management connection point; it doesn’t mean the cluster doesn’t work. Once Active Directory is available, the cluster name will come online automatically when the default failure policy restarts it. You can also manage the cluster via RDP or console by connecting to “.” locally on that node, or use the name of the running node. You can also try to bring the cluster name online manually once Active Directory is up and running.

Option 2 – A clean cluster shutdown as it happens most of the time in real life

This is the one a lot of people use to keep part of the workload running as long as possible.

- Shut down the non-critical workload, i.e. the VMs.

- Pause a node so the critical workloads live migrate to other nodes.

- When the node is paused, shut down the node.

- You rinse and repeat this until the last node is left with only the most critical VMs.

- Finally, you stop the workload on that last cluster node and shut it down as well.

- Now comes the critical part: remember which node you shut down last. You have to start that one first and you’ll see that your CSVs will come online. If you boot another node, you might panic as the CSVs will not come online.

Now I need to correct this a little bit. With a disk witness you are OK whatever node you boot. When the paxos numbers on the first node to boot and the disk witness are compared, the most recent copy will be used. Either the local one on the node is used directly, or it is used after it has been updated with the data from the disk witness. To make things simple for the ops team, I always tell them to note the last node they shut down anyway, no matter what type of witness they have. It’s good info to have.

With a file share or cloud witness the shutdown/startup order really comes into play. The reason is that by shutting down node by node we end up with a one-node cluster (last man standing). When that node is shut down, it is the only node that knows about the last (potential) changes in the cluster, as it holds the most recent copy of the cluster database. Remember that a file share or cloud witness has no copy of the cluster database. That’s why the last node to be shut down has to come online first: when the paxos numbers are compared with the witness, that node can form a cluster. If the node that boots first does not hold the most recent paxos number, it cannot download the cluster database info from the file share or cloud witness. Such a node cannot form a cluster and bring the CSVs online. If the first node to boot was the last one to shut down, it can form a cluster and the CSVs will come online. This is the big difference with option one, where you stop the entire cluster first and only then take the nodes offline.
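
The “last man standing” effect can be sketched with a small Python simulation. This is a simplified model under loudly stated assumptions, not how the cluster service is implemented. It assumes that the paxos number goes up by one every time a node leaves while others keep running, and that the witness always tracks the latest number. The function names and node names are hypothetical.

```python
from typing import Dict, List, Tuple


def rolling_shutdown(shutdown_order: List[str]) -> Tuple[Dict[str, int], int]:
    """Option 2: nodes leave one by one while the cluster keeps running.

    Returns the paxos number each node last saw and the number the witness holds.
    Assumption: every departure is a cluster change that bumps the paxos number
    on whatever is still running.
    """
    paxos = 1
    last_seen: Dict[str, int] = {}
    remaining = len(shutdown_order)
    for node in shutdown_order:
        last_seen[node] = paxos  # the node goes down with what it knew at that time
        remaining -= 1
        if remaining > 0:
            paxos += 1  # the nodes still running record the change
    return last_seen, paxos


def clean_stop(nodes: List[str]) -> Tuple[Dict[str, int], int]:
    """Option 1: the cluster is stopped as a whole, so all nodes agree."""
    return {node: 1 for node in nodes}, 1


def can_boot_first(last_seen: Dict[str, int], witness_paxos: int,
                   witness_has_db_copy: bool) -> List[str]:
    """Nodes that could form the cluster if they were the first to boot."""
    return [node for node, paxos in last_seen.items()
            if paxos >= witness_paxos or witness_has_db_copy]


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]
    seen, witness = rolling_shutdown(nodes)
    print("file share / cloud witness:", can_boot_first(seen, witness, False))
    print("disk witness:", can_boot_first(seen, witness, True))
    seen, witness = clean_stop(nodes)
    print("clean stop, file share witness:", can_boot_first(seen, witness, False))
```

With a rolling shutdown and a witness that has no database copy, only the node that went down last qualifies. With a disk witness, or after stopping the whole cluster first, any node will do.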

You might not know or remember the order. If that’s the case, you still have options, like starting the cluster node with the -fixquorum option (net.exe start clussvc /forcequorum), at the risk of losing some cluster changes that are not in the local cluster database.

No need to immediately go to backups, extract the domain controller VMs from SAN snapshots, or mount a LUN to a different machine to extract the VM files for the DC. Don’t panic!

One or more failed nodes

Well, as long as the cluster survives, your domain controller VMs should fail over. If all your domain controller virtual machines are going to run highly available on a cluster, keep them on separate nodes (anti-affinity), on separate CSV LUNs and, if possible, on separate clusters. The cluster is still functional after all, so no issues here.

Total cluster failure

The cluster nodes all go down due to a “global” BSOD, or are turned off due to a power failure or a storage array crash. This is more the realm of bad dreams, I know, but it does happen. Often things will recover and you’ll be fine, but you can do your part. The same rules apply: get the cluster to form and you’ll have your CSVs online. In a bad case -fixquorum is your friend, but normally it’s not your first option. In the worst case you’ll need to recover the cluster from backup or rebuild it. It’s a very bad day if it comes to that. And cluster recovery is not the subject of this post.

Conclusion

Don’t blame Active Directory and start troubleshooting or fixing the wrong problem. So yes, CSVs will come online when certain conditions are met and you can work yourself out of a pickle if needed. But during a disaster that’s extra work and stress you might not want to deal with if you can avoid it. It’s good to know how to resolve issues around CSVs not coming online when the shit hits the fan, as even the best-laid plans tend to get sidetracked by reality when disaster strikes.

If you cannot guarantee control over all the prerequisites and might not have the skills in place when needed, you might consider other options. Some of these are actually the best practices of the past, when a CSV would indeed not come online without Active Directory in any way. They are great for AD-related issues but not for your offline CSVs; those need the cluster to form properly!

Sure, you can run the domain controller virtual machines on local storage, not made highly available. This could be on one of the cluster nodes (*) or on a stand-alone Hyper-V host. Having a physical domain controller is also a possibility. This helps avoid issues with AD in virtualized environments, as many other services are very dependent on domain controllers and it’s good to have one available all of the time and to get the others back online a.s.a.p.

I’ll leave you with the fact that virtualizing domain controllers can be done, but it pays to study up on how to do it well and test your assumptions in the lab. There is a lot of information on virtualizing domain controllers for a reason. Read it and process what you’ve learned from it. You might find that this CSV thingy is not the most complex subject to deal with.

(*) Please note that some cluster deployments like HCI based on S2D do not support running other (local) storage in addition to the boot OS and the S2D storage pool volumes.