With Exchange 2016 on the horizon (RTM in Q4 2015), I’ve been prepping the lab infrastructure and dusting off parts of the Exchange 2010/2013 lab deployments to make sure I’m ready to start testing a migration to Exchange 2016. While Office 365 offers great value for money, sometimes switching over completely isn’t an option and a hybrid scenario is the way to go. The reasons can be regulations, politics, laws, etc. No matter what, we have to come up with a solution that satisfies all needs as well as possible. Even in 2015 or 2016 this will mean on-premises e-mail. This is not my “default” option when it comes to e-mail in 2015, but it’s still a valid option and choice. With a hybrid deployment, organizations can get the best of both worlds and stay compliant. Is this the least complex solution? No, but it gives them the compliance they need and/or want. It’s not my job to push companies 100% to the cloud. That’s for the CIO to decide and for cloud vendors to market and sell. I’m in the business of helping create the best possible solution to the challenge at hand.

Figure: Exchange 2016 Architecture © Microsoft

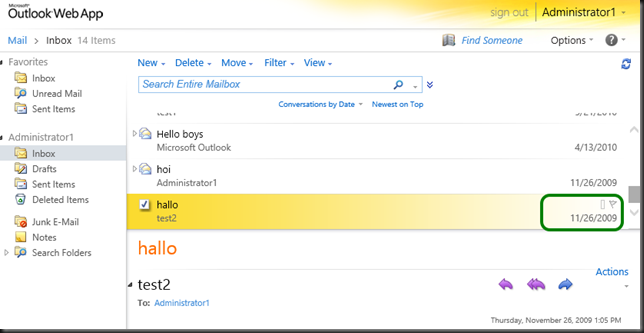

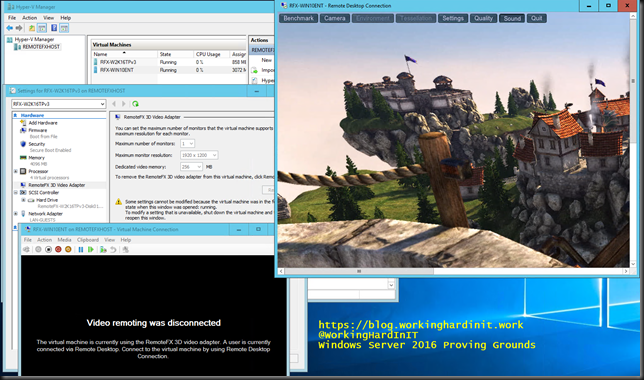

The labs were set up to test and prepare for production deployments. It all runs on Hyper-V, and it has survived upgrades of the hypervisor (half of the VMs are even running on Windows Server 2016 hosts) and the conversion from VHD to VHDX. These labs have been kept around for testing and troubleshooting. They are fully up to date. It’s fun to see the old 2009 test mails still sitting in some mailboxes.

Both Windows NLB and Kemp Technologies LoadMasters are used. Going forward we’ll certainly keep using the hardware load balancing solution. Oh, and when it comes to load balancing, there is only the best possible solution for your needs in your environment. Those needs determine which of the various options you’ll use. Exchange 2016 will be very different from Exchange 2010 with regard to session affinity: affinity has no longer been needed since Exchange 2013.
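To make the affinity point concrete, here is a minimal Python sketch (not a LoadMaster or NLB configuration) contrasting two ways a load balancer can pick a server. Exchange 2010 needed something like the sticky variant so a client kept hitting the Client Access server holding its session; since Exchange 2013 the Client Access role is a stateless proxy, so a plain round-robin pick is fine. The server names and the CRC32-based hash are assumptions made purely for illustration.

```python
import itertools
import zlib
from typing import Callable, List

# Hypothetical Exchange server names, for illustration only.
SERVERS: List[str] = ["EX01", "EX02", "EX03"]

Picker = Callable[[str], str]


def round_robin(servers: List[str]) -> Picker:
    """No affinity: every new connection goes to the next server in turn,
    regardless of which client opened it."""
    cycle = itertools.cycle(servers)

    def pick(client_ip: str) -> str:
        # client_ip is deliberately ignored; any server may take any request.
        return next(cycle)

    return pick


def source_ip_affinity(servers: List[str]) -> Picker:
    """Source-IP affinity ("sticky"): the same client IP always maps to
    the same server, so per-session state on that server stays reachable."""

    def pick(client_ip: str) -> str:
        # zlib.crc32 is stable across runs, unlike Python's salted str hash().
        return servers[zlib.crc32(client_ip.encode()) % len(servers)]

    return pick


if __name__ == "__main__":
    rr = round_robin(SERVERS)
    print([rr("10.0.0.5") for _ in range(4)])  # ['EX01', 'EX02', 'EX03', 'EX01']

    sticky = source_ip_affinity(SERVERS)
    print({sticky("10.0.0.5") for _ in range(4)})  # a single server name
```

The round-robin picker is the simpler of the two, and it only works because no server holds state that the client’s next request depends on. That is the property Exchange 2013 and later provide, which is why the affinity requirement went away.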

In case you’re wondering which LoadMaster you need, take a look at their sizing guides:

- https://kemptechnologies.com/load-balancer-sizing-exchange-2010

- https://kemptechnologies.com/load-balancer-sizing-exchange-2013

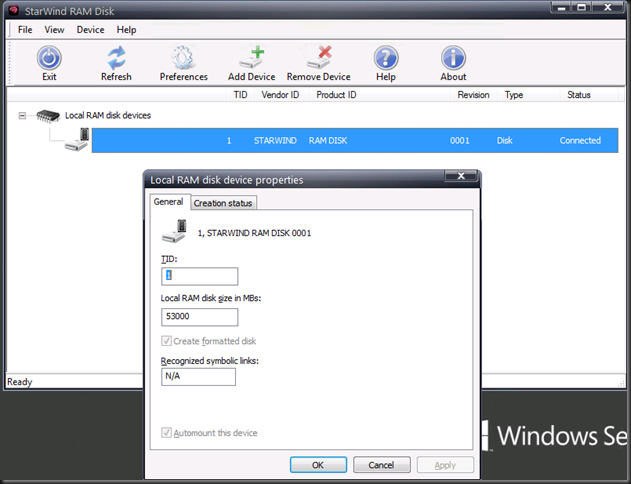

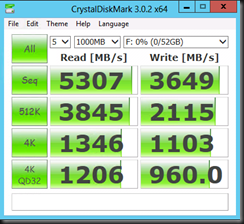

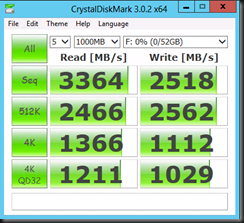

Another major change will be the networking. On Windows Server 2012 R2 we’ll go with teamed 10Gbps NICs for all workloads, which simplifies the setup. Storage-wise, one change will be the use of ReFS, especially if we can do this on Storage Spaces. The data protection you get from that combination is just awesome. Disk-wise, the IOPS requirements have dropped even a little more, so that should be OK. Now, being a geek, I’m still tempted to leverage cheap, larger SSDs for blazing performance. If at all possible I’d like to make it a self-contained solution, so no external storage from any type of SAN or centralized storage. Just local disks. I’m eyeing the Dell R730xd for that purpose: ample storage, the ability to have 2 controllers, and my experience with these has been outstanding.

So no virtualization? Sure, where and when it makes sense and it fits the needs, wants and requirements of the business. You can virtualize Exchange and it is supported. It goes without saying (serious bragging alert here) that I can make Hyper-V scale and perform like a boss.