Cloud & Datacenter Conference Germany 2018

The Cloud & Datacenter Conference Germany 2018 is a shining beacon of light in a sea of marketing-driven IT events. It is organized by Carsten Rachfahl via his company Rachfahl IT Solutions. Carsten is a Microsoft MVP and Regional Director whose commitment to excellence has shown for many years in his community engagements. I think his integrity and style are a driving force behind this conference's ability to attract such high-quality attendees, speakers and sponsors.

Cloud & Datacenter welcomes top expert speakers from the community and the industry. They deliver high-quality sessions and share their combined experience and knowledge with attendees who are truly interested in working with those technologies. That combination delivers high-value interactions and knowledge sharing. The sessions, combined with the interaction between everyone there, work very well due to the size of the conference. It's big enough to have the breadth of topics needed in today's IT landscape, while it is small enough to allow people to dive in deeper and discuss architecture, design, implementation and visions.

Some extra information

The Cloud & Datacenter Conference Germany 2018 is being held on May 15-16, 2018 in the Congress Park Hanau, Schlossplatz 1, 63450 Hanau, Germany. That’s close to Frankfurt and as such has good travel connections. Topics of interest will be Azure, Azure Stack, Hybrid Cloud, Private Cloud, Software Defined Datacenter, System Center & O365. The conference is a real-life technology event, so no one is pretending that the esoteric future is already here. We are working on that future by building it in our daily jobs and helping organizations move forward in an efficient and effective manner.

This is a great conference by technologists, for technologists. The opportunities to learn, network and exchange information are great. The speakers are approachable, and all of them are there both to share and to learn themselves. In my past experience the organization was outstanding, and so was the feedback from attendees.

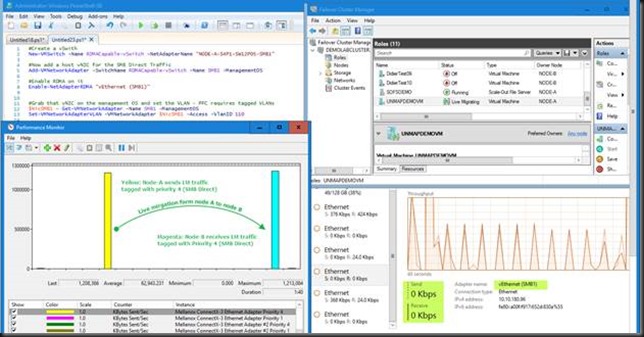

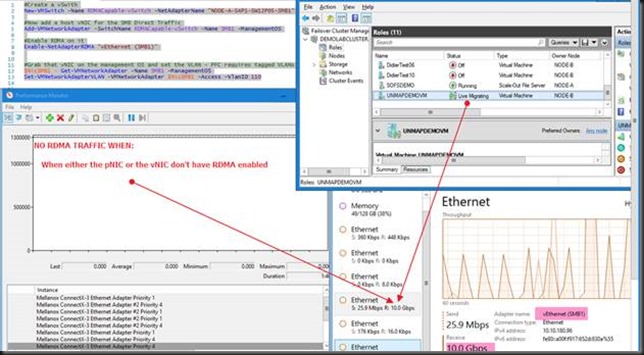

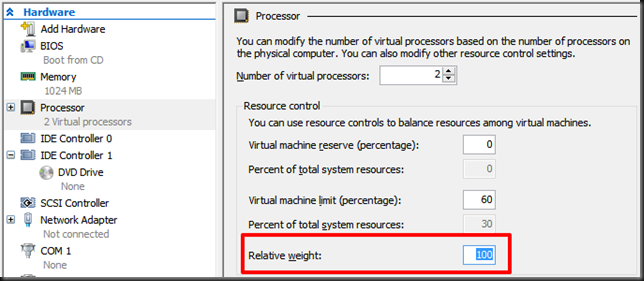

I’ll be speaking on RDMA to give a roadmap on this ever more important technology. On top of that I’ll be around to discuss high availability, clustering and data protection, both on-premises and in (hybrid) cloud scenarios.

Do yourself a favor and register for the Cloud & Datacenter Conference Germany in 2018.

All I can say is that you should really consider attending. It’s most definitely worthwhile. The quality of the attendees, the speakers and the absolute top-notch organization of the conference have been proven in previous years. The Cloud & Datacenter Conference is a testimony to the professionalism, integrity and quality that my fellow MVP and friend Carsten Rachfahl delivers to his customers on a daily basis with his company Rachfahl IT Solutions. So, help yourself out in your career and register right here. I hope to see you there.

Note: The CDC is a German-language conference, but as some speakers come from around the globe you’ll have to listen to some of them speaking in English. If you’ve ever heard my German, I’m sure you’ll prefer me speaking English anyway.

![clip_image002[4] clip_image002[4]](https://blog.workinghardinit.work/wp-content/uploads/2018/02/clip_image0024.jpg)