Introduction

Hyper-V offers three ways of managing or tweaking the CPU scheduler to provide the best possible configuration for certain scenarios and use cases. The defaults normally work fine, but under certain conditions you might want to tweak them for the best possible outcome. The CPU resource controls at your disposal are:

- Virtual machine reserve – Think of this as the minimum CPU “QoS”

- Virtual machine limit – Think of this as the maximum CPU “QoS”

- Relative weight – Think of this as the scale defining which VM is more important.

Note that you should understand what these settings are and what they can do. Treat them like spices: select the ones you need and don’t overdo it. They’re there to help you, and if needed you can leverage all three, but it’s highly unlikely you’ll need to. Using one or two will serve you best if and when you need them.

In this blog post we’ll look at the virtual machine limit.

Virtual Machine Limit

The virtual machine limit is a setting on the vCPU configuration of a virtual machine that limits the percentage of CPU resources the virtual machine can grab from the host. It caps each vCPU, preventing it from using more than the defined maximum percentage. The default is 100%.

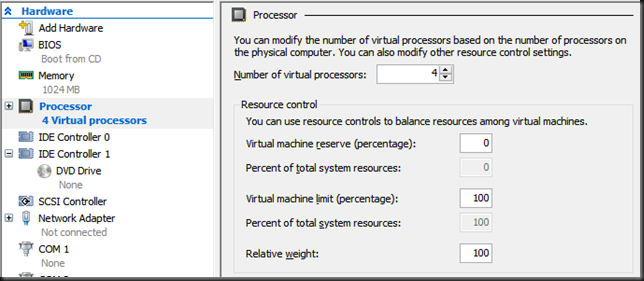

Let’s look at some examples below based on a simple 4 core host with a VM.

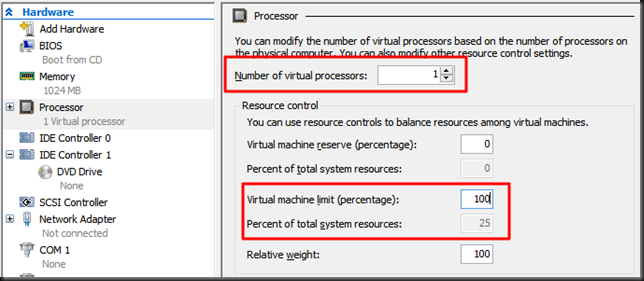

On a 1 vCPU virtual machine, a virtual machine limit of 100% means it can grab at most the equivalent of the compute time slices of 1 CPU on the host, which is 25% of the total system CPU resources on a 4 core host.

So on a home lab PC that has 4 cores, giving the virtual machine 4 vCPUs and setting the virtual machine limit to 100% means it’s limited to 100% of the total system CPU resources.

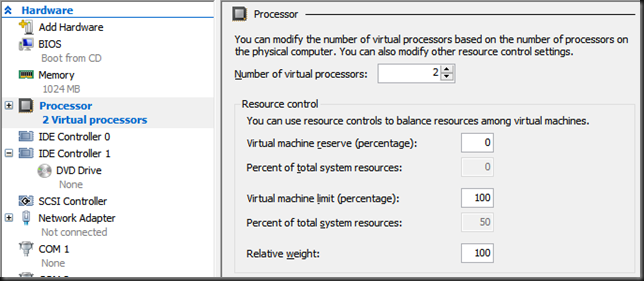

When I set the number of vCPUs to 2 this drops to 50% of the total system CPU resources.

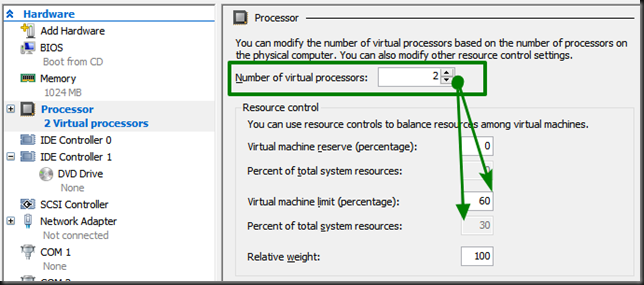

Now, by playing with the virtual machine limit, you can configure the maximum share of the total system CPU resources a VM can grab. A 2 vCPU VM with a virtual machine limit of 60% gets at most 30% of the total system CPU resources on a 4 core host.
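The arithmetic behind these examples can be sketched in a few lines of Python. This is only an illustration, not something Hyper-V exposes: the function name `max_host_share` and its parameters are made up for this post, and the sketch assumes the percentage is calculated against the number of logical CPUs on the host.

```python
def max_host_share(vcpus: int, limit_percent: float, host_logical_cpus: int) -> float:
    """Return the upper bound, in percent, of total host CPU a VM can use.

    Assumption: each vCPU can use at most `limit_percent` of one logical CPU,
    and the host total is `host_logical_cpus` logical CPUs.
    """
    if vcpus < 1 or host_logical_cpus < 1:
        raise ValueError("vcpus and host_logical_cpus must be at least 1")
    if not 0 <= limit_percent <= 100:
        raise ValueError("limit_percent must be between 0 and 100")
    return vcpus * limit_percent / host_logical_cpus


if __name__ == "__main__":
    # The three examples from this post, all on a 4 core host.
    for vcpus, limit in [(1, 100), (2, 100), (2, 60)]:
        share = max_host_share(vcpus, limit, 4)
        print(f"{vcpus} vCPU at a {limit}% limit: {share:g}% of total system CPU")
```

Run against the 4 core host, this reproduces the 25%, 50% and 30% figures from the examples above.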

Use cases

This might be a tool to use when you’re worried about runaway virtual machines, caused either by misbehaving applications or by developers who tend to run CPU stress tools in the guest. It lets you cap them at a certain limit without reducing the number of vCPUs, which they might need for testing parallelism or NUMA awareness.

Limitations

This setting is always enforced. Even if a host has 32 cores and is only using 40% of them, so that a virtual machine could consume more CPU cycles without causing issues, it won’t be able to. It’s a hard cap.

The setting is enforced per vCPU. This means that when a single-threaded app in a 4 vCPU virtual machine hits the virtual machine limit on its vCPU, it is capped even when the 3 other vCPUs are totally idle. So a limit of 30% is not 30% of all vCPUs combined; it is a maximum of 30% per vCPU.
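To make the per-vCPU behavior concrete, here is a small Python sketch. It is a simplified model under my own assumptions, not how the Hyper-V scheduler is implemented: the hypothetical helper `apply_vm_limit` simply caps each vCPU’s demand independently, so idle vCPUs never donate their unused headroom.

```python
def apply_vm_limit(vcpu_demand_percent: list[float], limit_percent: float) -> list[float]:
    """Cap every vCPU's demand at the limit, independently of the other vCPUs."""
    return [min(demand, limit_percent) for demand in vcpu_demand_percent]


if __name__ == "__main__":
    # A single-threaded app saturates one vCPU of a 4 vCPU VM; the rest are idle.
    demand = [100.0, 0.0, 0.0, 0.0]
    granted = apply_vm_limit(demand, 30.0)

    # The busy vCPU is held to the limit although three vCPUs sit idle.
    print("granted per vCPU:", granted)

    # Averaged over all vCPUs, the VM uses far less than the limit suggests.
    print("average across the VM:", sum(granted) / len(granted))
```

In this model the busy vCPU is held at 30% while the other three stay at 0%, so the VM as a whole averages only 7.5% of its 4 vCPUs.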

Conclusion

It’s a tool you have at your disposal, but it’s probably the least used one. It has limited use cases due to its limitations. For most scenarios you’re better off leveraging the virtual machine reserve or the relative weight. These are more flexible and are only enforced when needed, providing a smarter use of resources.

I think you should lay out your hypothetical host configuration at the top of the article and explain everything in relation to that. Currently you say “Which is 25% of the total system CPU resources” but you haven’t yet explained what the host setup is. Later you mention a 4 core PC, so I’m assuming that’s what you’re referring to the entire time, but I’m not sure. Also, can you talk about hyper-threading’s role in the total system resources calculation? For example, I have a 16 core host with hyper-threading enabled, and if I assign 4 vCPUs to a guest, it says I have 12% total system resources, when it really should be 25%. Lastly, a little typo with “VM can crab”. Thanks. P.S. You don’t need to post this message to the public.

You’re right, the examples are of a 4 core host with a VM. It’s now clarified in the post. With regard to hyperthreading, the VM is unaware of it, as is an application on the parent partition. The calculation of CPU time slices is done by the CPU scheduler, which normally benefits from efficiency improvements through hyperthreading. I don’t take it into consideration for our calculation of percentages, as long as we realize hyperthreading does not magically double the CPU capacity. We know it’s either (in the case of a 4 core host) 4 logical CPUs without hyperthreading or 8 logical CPUs with hyperthreading, with the percentages (and CPU time slices) calculated accordingly. What I do avoid is creating VMs with more vCPUs than we have real cores. Don’t bank on them for extra cycles, only for more efficiency at best. In general the Hyper-V team states that Hyper-V is hyperthreading aware and that it either helps or is neutral to the performance of a workload. Exceptions do exist. So if it proves to be bad for performance, disable it. But I cannot tell in advance if it will. What I did notice in the 2012 (R2) era is that it does help to disable hyperthreading to get the most consistent possible behavior and results from vRSS/VMQ, if that is of great importance to your workloads. Hope this helps. The spelling mistake is corrected.

At least on some hosts, using this feature apparently comes with significant overhead.

We tried to use this feature on an 8-core Hyper-V 2012 R2 host (4 physical cores with 2-way hyperthreading) with two 8-core guests, one for testing and the other one for production. Normally, we limit the testing VM to 20% to prevent slowing down the production VM, but today we had to test something CPU-intensive, in preparation for planned deployment on the production VM, so I bumped the limit to 80%.

I noticed that the test proceeded extremely slowly, and started looking for answers. I found that a heavy single-threaded load only resulted in ~4% total CPU load in the Hyper-V manager, instead of the expected 10% (which equals 80% of one core out of 8). I reset the limit to the default 100% (no limit), and load suddenly went up to the expected 12%. Even more interestingly, the _production_ VM (which has been unlimited the whole time) also became noticeably faster. Setting the limit to 99% on the testing VM dropped the load on that VM to ~6%, and caused both VMs to slow down again. We settled for managing loads between the two VMs entirely using reserve and relative weight, which don’t appear to have this issue.

Moral of the story: Before using this feature, test to make sure your host handles it correctly! On some hosts, merely starting a VM with a non-100% CPU limit can slow down all VMs on that host, including ones with no CPU limit.