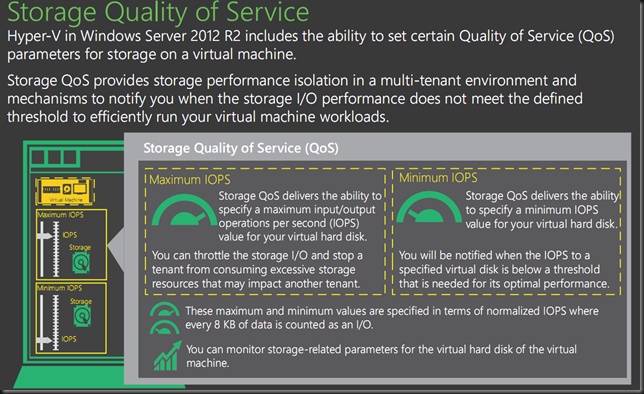

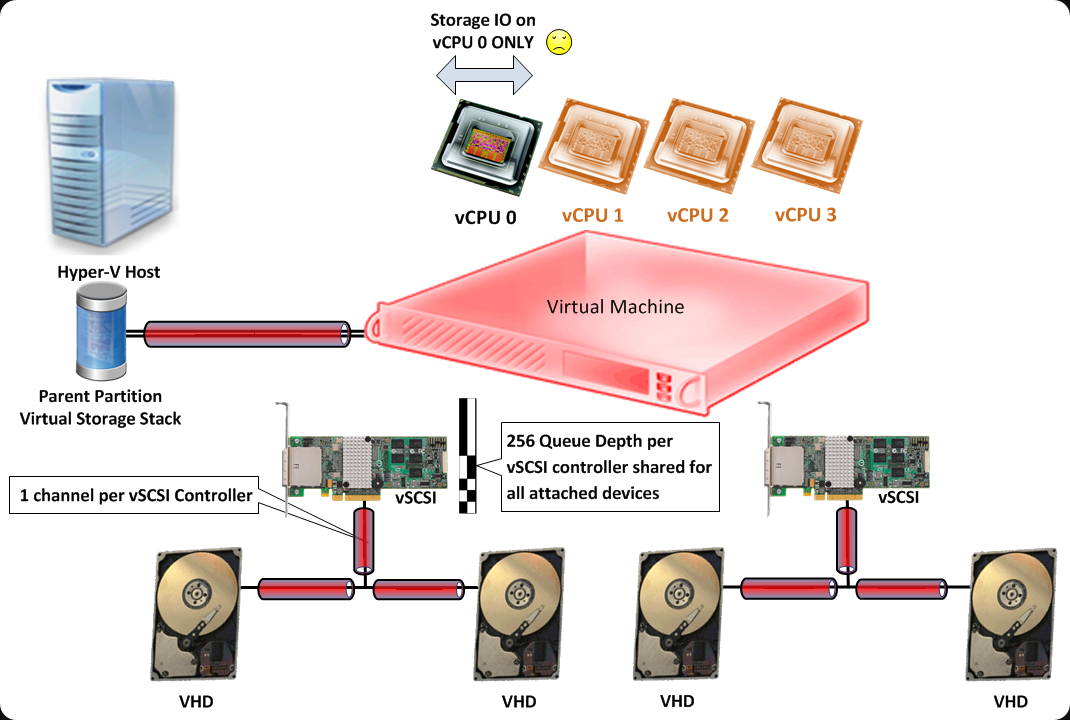

In Windows Server 2012 R2 Hyper-V we have the ability to set quality-of-service (QoS) options for a virtual machine at the virtual disk level. There is no QoS (yet) for shared VHDX, so for now it’s a setting per individual virtual machine, per virtual hard disk associated with that virtual machine.

What can we do?

- Limit – Maximum IOPS

- Reserve – Minimum IOPS threshold alerts

- Measure – New Storage attributes in VM Metrics

Limit

Storage QoS allows you to specify a maximum input/output operations per second (IOPS) value for a virtual hard disk associated with a virtual machine. This puts a limit on what a virtual disk can use, which means that one or more VMs cannot steal away all IOPS from the others (perhaps even belonging to separate customers). So this is an automatic hard cap.

Reserve

We can also set a minimum IOPS value. This is often referred to as the reserve. This is not a hard minimum. Here’s a word of warning: unless you hopelessly overprovision your physical storage capabilities (ruling out disk, controller issues, HBA problems & other risks that impact deliverable IOPS) and dedicate it to a single Hyper-V host with a single VM (ruling out the unknown), you cannot ever guarantee IOPS. It’s best effort. It might fail, but then events will alert you that things are going south. We will be notified when the IOPS to a specified virtual hard disk drops below the reserve you specified as needed for its optimal performance. We’ll talk more about this in another blog post.
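To make the "soft reserve" idea concrete, here is a minimal sketch of the comparison behind such an alert. It is not how Hyper-V implements its events; the disk names, the sample numbers and the `disks_below_reserve` helper are all made up for illustration.

```python
# Illustration only: flag virtual disks whose observed normalized IOPS
# dropped below the configured reserve. All names and values are hypothetical.

def disks_below_reserve(samples):
    """samples maps a disk name to (reserve_iops, observed_iops).

    A reserve of 0 is treated as "no reserve configured" and never flagged.
    """
    return sorted(
        name
        for name, (reserve, observed) in samples.items()
        if reserve > 0 and observed < reserve
    )

if __name__ == "__main__":
    samples = {
        "critical-app.vhdx": (2000, 1500),  # below its reserve: worth an alert
        "database.vhdx": (2000, 2600),      # reserve met
        "scratch.vhdx": (0, 40),            # no reserve configured
    }
    for name in disks_below_reserve(samples):
        print(f"Reserve not met for {name}")
```

Note that nothing in this check hands out more IOPS. Just like the real thing, it can only tell you that the reserve was not met.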

Measure

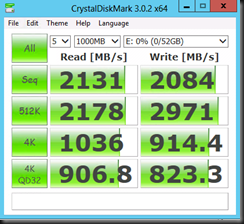

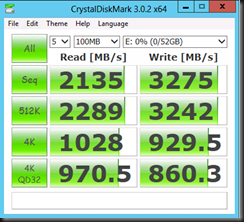

The virtual machine metrics infrastructure has been extended with storage-related attributes so we can monitor the performance (and so do chargeback or showback). To do this they use what they call “normalized IOPS”, where every 8K of data is counted as one I/O. This is how the values are measured and set, so the normalization is just for that purpose alone.

- One 4K I/O = 1 Normalized I/O

- One 8K I/O = 1 Normalized I/O

- One 10K I/O = 2 Normalized I/Os

- One 16K I/O = 2 Normalized I/Os

- One 20K I/O = 3 Normalized I/Os
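The list above boils down to rounding the I/O size up to the next multiple of 8K. A minimal sketch of that arithmetic, where the function name `normalized_ios` is just my own label and not anything from Hyper-V:

```python
# Normalized I/O count: every started 8K of data counts as one I/O.
NORMALIZATION_UNIT_BYTES = 8 * 1024

def normalized_ios(io_size_bytes):
    """Return how many normalized I/Os a single I/O of this size counts as."""
    if io_size_bytes <= 0:
        raise ValueError("I/O size must be positive")
    # Integer ceiling division, so there is no floating-point rounding involved.
    return (io_size_bytes + NORMALIZATION_UNIT_BYTES - 1) // NORMALIZATION_UNIT_BYTES

if __name__ == "__main__":
    for size_kb in (4, 8, 10, 16, 20):
        count = normalized_ios(size_kb * 1024)
        print(f"One {size_kb}K I/O = {count} normalized I/O(s)")
```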

A Little Scenario

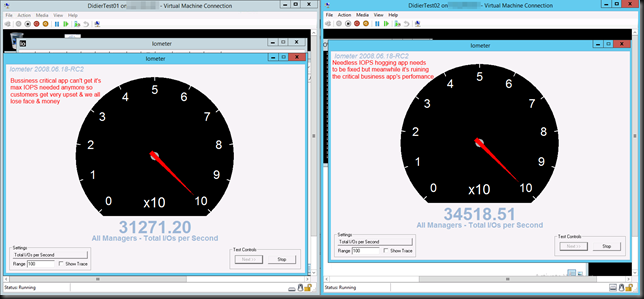

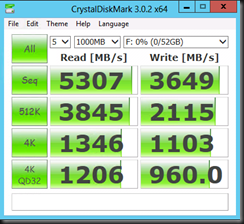

We take IO Meter and we put it inside 2 virtual machines. These virtual machines reside on a Hyper-V cluster that is leveraging shared storage on a SAN. Let’s say you have a VM that requires 45000 IOPS at times, and as long as it can get that when needed all is well.

All is well until one day a project goes into production that has not been designed or written with storage IOPS (real needs & effects) in mind. So while they have no issue themselves, the application behaves like a scrounging hog, eating a humongous share of the IOPS the storage can deliver.

Now, you do some investigation (pays to be buddies with a good developer and own the entire infrastructure stack) and notice that they don’t need those IOPS as they:

- Can do more intelligent data retrieval, slashing IOPS in half.

- Waste 75% of the time in several suboptimal algorithms for sorting & parsing data anyway.

- Don’t have that many users, and the impact of reducing storage IOPS is nonexistent due to the previous point.

All valid excuses to take the IOPS away … You think: let’s ask the PM to deal with this. They might, they might not, and if they do it might take time. But while that remains to be seen, you have a critical solution that serves many customers who’re losing real money because that drop in IOPS has become an issue for the application. So what’s the easiest thing to do? Cap that IOPS hog! Here’s the video on Vimeo showing how you deal with this: http://vimeo.com/82728497

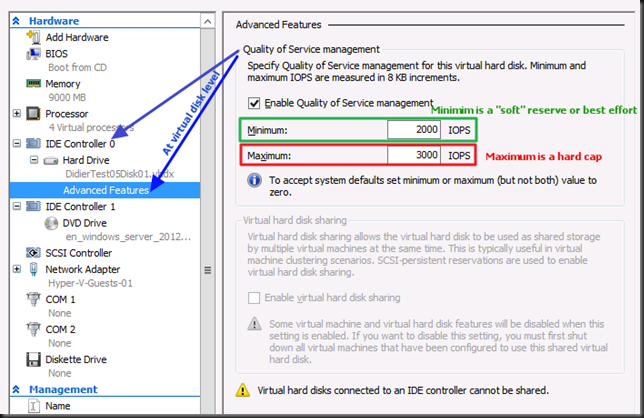

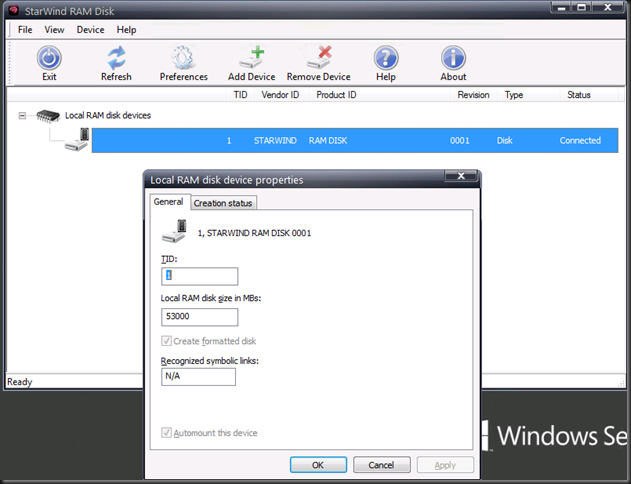

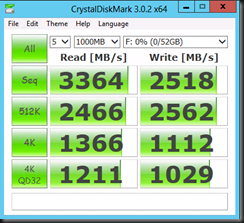

Now let’s enable QoS as in the screenshot below. We give it a best effort 2000 IOPS minimum and a hard maximum of 3000 IOPS.

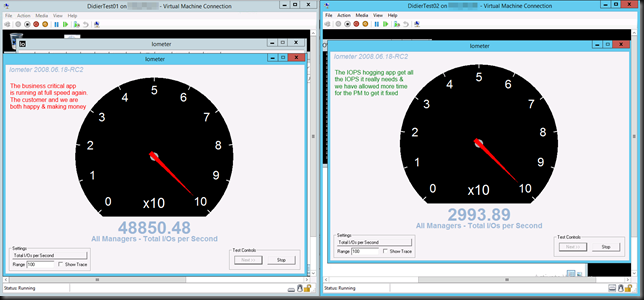

The moment you click “Apply” it kicks in! You can do this live; no service interruption or system downtime is needed.

I actually have a hard cap of 50000 on the business critical app as well just to make sure the other VMs don’t get starved. Remember that minimum is a soft reserve. You get warned but it can’t give what potentially isn’t available. After all, as always, it’s technology, not magic.
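One thing to keep in mind when picking a cap: the value is counted in normalized (8K) IOPS, so a workload issuing larger requests hits the limit at fewer actual I/Os per second. A rough sketch of that arithmetic, assuming every request has the same size and using helper names of my own:

```python
# How many real I/Os per second fit under a cap expressed in normalized IOPS.
UNIT_BYTES = 8 * 1024  # every started 8K counts as one normalized I/O

def normalized_cost(io_size_bytes):
    """Normalized I/Os charged for one request of the given size."""
    if io_size_bytes <= 0:
        raise ValueError("I/O size must be positive")
    return (io_size_bytes + UNIT_BYTES - 1) // UNIT_BYTES

def actual_iops_under_cap(cap_normalized_iops, io_size_bytes):
    """Upper bound on actual I/Os per second for a uniform request size."""
    return cap_normalized_iops / normalized_cost(io_size_bytes)

if __name__ == "__main__":
    cap = 3000  # the maximum used for the IOPS hog in this post
    for size_kb in (4, 8, 64):
        iops = actual_iops_under_cap(cap, size_kb * 1024)
        mib_per_s = iops * size_kb / 1024
        print(f"{size_kb}K requests: up to {iops:.0f} I/Os per second, "
              f"about {mib_per_s:.1f} MiB/s")
```

With 64K requests, for example, the 3000 cap works out to 375 actual I/Os per second.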

In a next blog post we’ll discuss QoS a bit more: what’s in play with storage I/O management in Hyper-V and what the limitations are, which gives us an idea of what Microsoft should pay attention to in vNext.

Remarks

Well, doing this for a 24-node Hyper-V cluster with 500 VMs could be a bit of a challenge.
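At that scale you would want to script it rather than click through the GUI. As a rough sketch, the snippet below turns an inventory into one `Set-VMHardDiskDrive` command line per virtual disk, using that cmdlet's `-MinimumIOPS` and `-MaximumIOPS` parameters. The host names, VM names, controller positions and the `DiskQos` structure are all hypothetical, and the generated lines still have to be reviewed and run in PowerShell.

```python
# Generate Set-VMHardDiskDrive command lines from an inventory.
# The inventory entries are made-up examples, not a real environment.
from dataclasses import dataclass

@dataclass(frozen=True)
class DiskQos:
    host: str
    vm_name: str
    controller_type: str      # "SCSI" or "IDE"
    controller_number: int
    controller_location: int
    min_iops: int             # 0 means no reserve
    max_iops: int             # 0 means no cap

def ps_quote(value):
    """Single-quote a string for PowerShell, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"

def to_command(disk):
    """Build the command line that applies the QoS values to one disk."""
    if disk.max_iops and disk.min_iops > disk.max_iops:
        raise ValueError(f"{disk.vm_name}: minimum exceeds maximum")
    return (
        f"Set-VMHardDiskDrive -ComputerName {ps_quote(disk.host)} "
        f"-VMName {ps_quote(disk.vm_name)} "
        f"-ControllerType {disk.controller_type} "
        f"-ControllerNumber {disk.controller_number} "
        f"-ControllerLocation {disk.controller_location} "
        f"-MinimumIOPS {disk.min_iops} -MaximumIOPS {disk.max_iops}"
    )

if __name__ == "__main__":
    inventory = [
        DiskQos("HV-NODE01", "IOPS-Hog", "SCSI", 0, 0, 2000, 3000),
        DiskQos("HV-NODE02", "Critical-App", "SCSI", 0, 0, 0, 50000),
    ]
    for disk in inventory:
        print(to_command(disk))
```

Generating the commands as text first keeps a reviewable record of what will be applied to which disk before anything touches the cluster.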