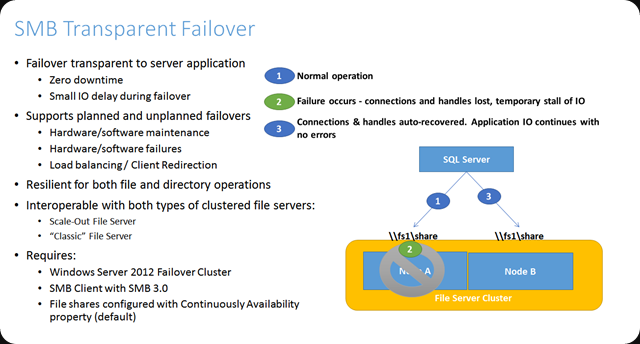

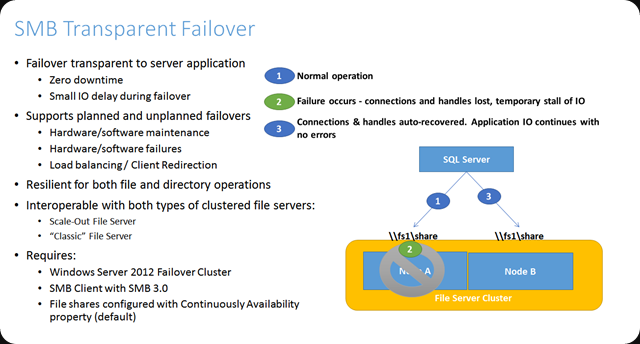

SMB 3 for Transparent Failover File Shares

SMB 3 gives us lots of goodies and one of them is Transparent Failover which allows us to make file shares continuously available on a cluster. I have talked about this before in Transparent Failover & Node Fault Tolerance With SMB 2.2 Tested (yes, that was with the developer preview bits after BUILD 2011, I was hooked fast and early) and here Continuously Available File Shares Don’t Support Short File Names – "The request is not supported" & “CA failure – Failed to set continuously available property on a new or existing file share as Resume Key filter is not started.”

This is an awesome capability to have. This also made me decide to deploy Windows 8 and now 8.1 as the default client OS. The fact that maintenance (it the Resume Key filter that makes this possible) can now happen during day time and patches can be done via Cluster Aware Updating is such a win-win for everyone it’s a no brainer. Just do it. Even better, it’s continuous availability thanks to the Witness service!

When the node running the file share crashes, the clients will experience a somewhat long delay in responsiveness but after 10 seconds the continue where they left off when the role has resumed on the other node. Awesome! Learn more bout this here Continuously Available File Server: Under the Hood and SMB Transparent Failover – making file shares continuously available.

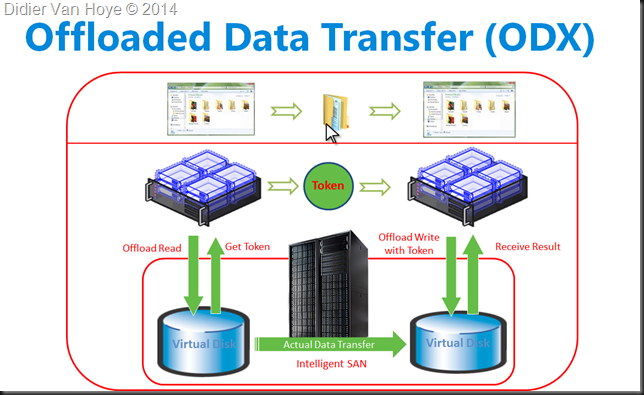

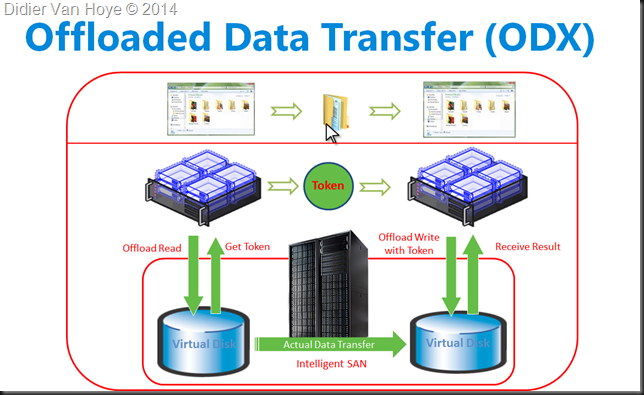

Windows Clients also benefits from ODX

But there is more it’s SMB 3 & ODX that brings us even more goodness. The offloading of read & write to the SAN saving CPU cycles and bandwidth. Especially in the case of branch offices this rocks. SMB 3 clients who copy data between files shares on Windows Server 2012 (R2) that has storage an a ODX capable SAN get the benefit that the transfer request is translated to ODX by the server who gets a token that represents the data. This token is used by Windows to do the copying and is delivered to the storage array who internally does all the heavy lifting and tell the client the job is done. No more reading data form disk, translating it into TCP/IP, moving it across the wire to reassemble them on the other side and write them to disk.

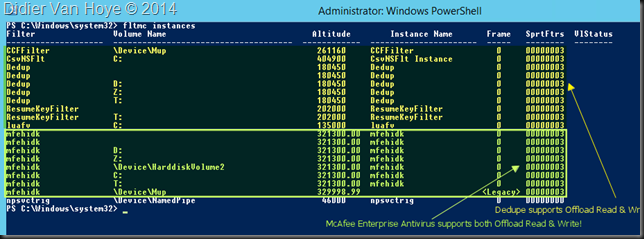

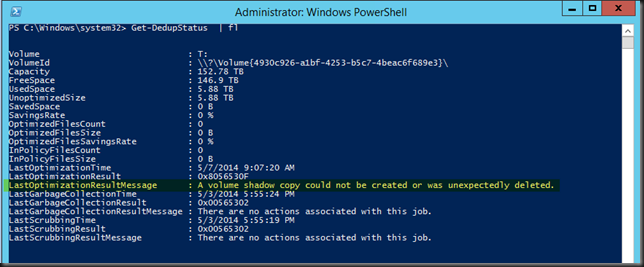

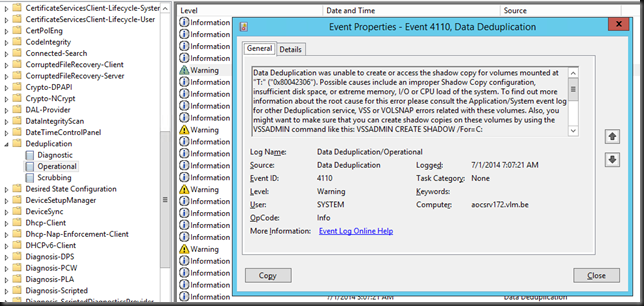

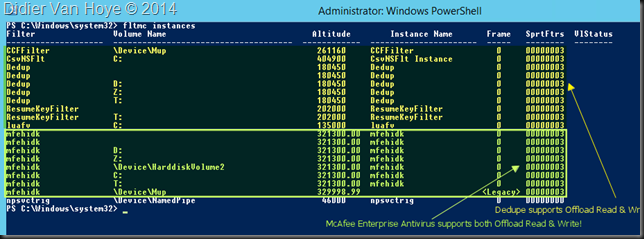

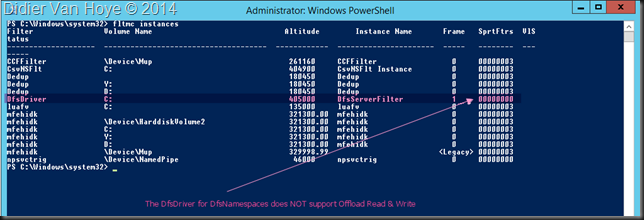

To make ODX happen we need a decent SAN that supports this well. A DELL Compellent shines here. Next to that you can’t have any filter drives on the volumes that don’t support offloaded read and write. This means that we need to make sure that features like data deduplication support this but also that 3rd party vendors for anti-virus and backup don’t ruin the party.

In the screenshot above you can see that Windows data deduplication supports ODX. And if you run antivirus on the host you have to make sure that the filter driver supports ODX. In our case McAfee Enterprise does. So we’re good. Do make sure to exclude the cluster related folders & subfolders from on access scans and schedules scans.

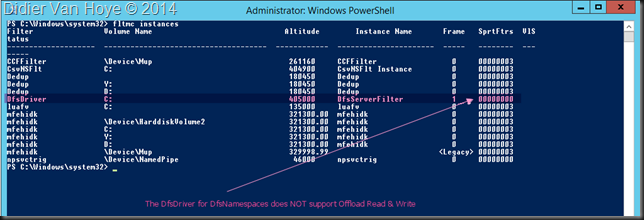

Do not run DFS Namespace servers on the cluster nodes. The DfsDriver does not support ODX!

The solution is easy, run your DFS Namespaces servers separate from your cluster hosts, somewhere else. That’s not a show stopper.

The user experience

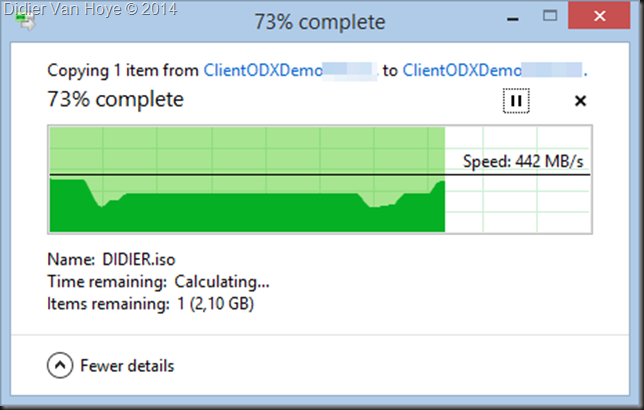

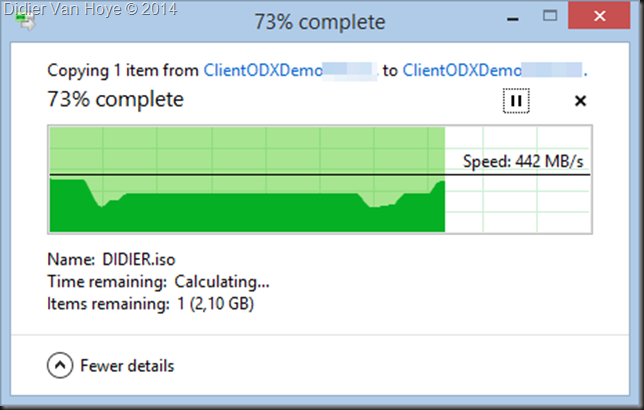

What it looks like to a user? Totally normal except for the speed at which the file copies happen.

Here’s me copying an ISO file from a file share on server A to a file share on server B from my Windows 8.1 workstation at the branch office in another city, 65 KM away from our data center and connected via a 200Mbps pipe (MPLS).

On average we get about 300 MB/s or 2.4 Gbps, which “over” a 200Mbps WAN is a kind of magic. I assure you that they’re not complaining and get used to this quite (too) fast  .

.

The IT Pro experience

Leveraging SMB 3 and ODX means we avoid that people consume tons of bandwidth over the WAN and make copying large data sets a lot faster. On top of that the CPU cycles and bandwidth on the server are conserved for other needs as well. All this while we can failover the cluster nodes without our business users being impacted. Continuous to high availability, speed, less bandwidth & CPU cycles needed. What’s not to like?

Pretty cool huh! These improvements help out a lot and we’ve paid for them via software assurance so why not leverage them? Light up your IT infrastructure and make it shine.

What’s stopping you?

So what are your plans to leverage your software assurance benefits? What’s stopping you? When I asked that I got a couple of answers:

- I don’t have money for new hardware. Well my SAN is also pré Windows 2012 (DELL Compellent SC40 controllers. I just chose based on my own research not on what VARs like to sell to get maximal kickbacks

. The servers I used are almost 4 years old but fully up to date DELL PowerEdge R710’s, recuperated from their duty as Hyper-V hosts. These server easily last us 6 years and over time we collected some spare servers for parts or replacement after the support expires. DELL doesn’t take away your access to firmware &drivers like some do and their servers aren’t artificially crippled in feature set.

. The servers I used are almost 4 years old but fully up to date DELL PowerEdge R710’s, recuperated from their duty as Hyper-V hosts. These server easily last us 6 years and over time we collected some spare servers for parts or replacement after the support expires. DELL doesn’t take away your access to firmware &drivers like some do and their servers aren’t artificially crippled in feature set.

- Skills? Study, learn, test! I mean it, no excuse!

- Bad support from ISV an OEMs for recent Windows versions are holding you back? Buy other brands, vote with your money and do not accept their excuses. You pay them to deliver.

As IT professionals we must and we can deliver. This is only possible as the result of sustained effort & planning. All the labs, testing, studying helps out when I’m designing and deploying solutions. As I take the entire stack into account in designs and we do our due diligence, I know it will work. The fact that being active in the community also helps me know early on what vendors & products have issues and makes that we can avoid the “marchitecture” solutions that don’t deliver when deployed. You can achieve this as well, you just have to make it happen. That’s not too expensive or time consuming, at least a lot less than being stuck after you spent your money.