Creating a bootable VHD or VHDX from an existing one is a great capability to have. There are a couple of reasons why one might need or want to do this. In Windows Server 2012 (R2) it is even part of normal live migration operations: storage live migration, for example, is nothing but the live streaming of the data of your in-use virtual hard disk into a new VHD/VHDX. You have multiple options for creating a bootable VHD/VHDX from an existing one, and each serves its own purposes, which might or might not overlap.

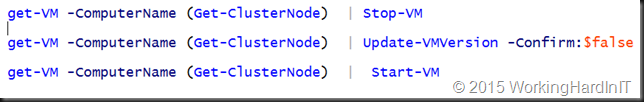

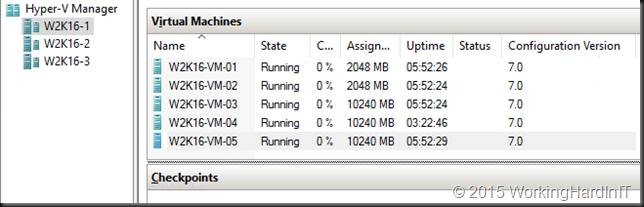

This is great for migrations, reorganizing storage, defragmenting the internal structure of a dynamic VHDX, etc. But you're not limited to those options. When you want to convert from VHD to VHDX, you'll leverage Convert-VHD. You can also create a new VHDX with an old one as the source with New-VHD. That's great for all kinds of operations, including offline migration, updates, and testing on exact copies of the original disk. You might think it's better to just copy the disk, but a file copy can't convert the format, and it won't deal with internal fragmentation either. That can matter for performance testing when you're migrating to new storage, a new cluster, a new Hyper-V version and such.
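A minimal sketch of the VHD-to-VHDX conversion, assuming the Hyper-V PowerShell module is installed and the VM that uses the disk is shut down. `Convert-VHD` and `Get-VHD` are the real Hyper-V cmdlets; the wrapper function `Convert-ToVhdx` and the example paths are hypothetical.

```powershell
# Hypothetical helper: convert an existing (bootable) VHD into a new VHDX.
# Assumes the Hyper-V module is available and the source disk is not in use.
function Convert-ToVhdx {
    param(
        [Parameter(Mandatory)] [string] $SourcePath,
        [Parameter(Mandatory)] [string] $DestinationPath,
        [ValidateSet('Dynamic', 'Fixed')] [string] $VHDType = 'Dynamic'
    )
    # Convert-VHD picks the output format from the destination file extension,
    # so insist on .vhdx to make sure we really get a VHDX.
    if ([System.IO.Path]::GetExtension($DestinationPath) -ne '.vhdx') {
        throw "Destination must have a .vhdx extension: $DestinationPath"
    }
    if (-not (Test-Path -LiteralPath $SourcePath)) {
        throw "Source disk not found: $SourcePath"
    }
    # Writes a new disk; the source is kept unless -DeleteSource is passed.
    Convert-VHD -Path $SourcePath -DestinationPath $DestinationPath -VHDType $VHDType
    # Show format and type of the result so it can be checked before use.
    Get-VHD -Path $DestinationPath | Select-Object Path, VhdFormat, VhdType, FileSize, Size
}
```

Usage, with hypothetical paths: `Convert-ToVhdx -SourcePath 'D:\VMs\web01.vhd' -DestinationPath 'E:\VMs\web01.vhdx'`. The original VHD stays untouched, so you can always fall back to it.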

Recently, people asked me whether this works with their OS disk, the virtual disk the VM boots from. Yes, it does. Both New-VHD and Convert-VHD will create a fully bootable new virtual disk if the source virtual disk was bootable to begin with. They have to, if you think about it: using Convert-VHD to move from VHD to VHDX, and even change the cluster sizes of the disk, would be no good if the VM no longer booted. Likewise with New-VHD.
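A simple way to confirm that a new disk really boots is to start a throwaway VM from it before cutting over. A minimal sketch, assuming the Hyper-V module; the function name `Start-BootTest`, the VM name and the 2 GB of startup memory are hypothetical choices. The generation should match the source VM: a generation 2 VM only accepts VHDX, and a disk taken from a generation 1 VM will not simply boot as generation 2.

```powershell
# Hypothetical helper: boot a throwaway test VM from a new or converted disk.
# Assumes the Hyper-V module; VM name and memory size are illustrative only.
function Start-BootTest {
    param(
        [Parameter(Mandatory)] [string] $VhdPath,
        [string] $VMName = 'BootTest',
        [ValidateRange(1, 2)] [int] $Generation = 1
    )
    # Generation 2 VMs only support VHDX disks.
    if ($Generation -eq 2 -and [System.IO.Path]::GetExtension($VhdPath) -ne '.vhdx') {
        throw "Generation 2 VMs require a VHDX disk: $VhdPath"
    }
    if (-not (Test-Path -LiteralPath $VhdPath)) {
        throw "Disk not found: $VhdPath"
    }
    # No -SwitchName is given, so the test VM is not connected to a virtual switch
    # and cannot clash on the network with the original machine.
    New-VM -Name $VMName -Generation $Generation -MemoryStartupBytes 2GB -VHDPath $VhdPath | Out-Null
    Start-VM -Name $VMName
    Get-VM -Name $VMName | Select-Object Name, State, Generation
}
```

Once the guest reaches its login screen, `Stop-VM -Name BootTest` followed by `Remove-VM -Name BootTest -Force` cleans up; Remove-VM leaves the virtual disk file in place.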

The only thing that needs some real tender loving care is converting a VM from generation 1 to generation 2. The script John Howard (MSFT) provided to do that uses fully supported technologies. The script itself is not a supported product, but you're not doing anything unsupported with it.

So, to everyone who needs to convert, defrag or move VMs to new virtual hard disks: do a few tests to verify your assumptions and go forward. Step into that bright new future you've been missing out on for the past 3 years.