Introduction

I was working on a project combining a hardware refresh, consolidation and an upgrade to Windows Server 2019. This mainly boils down to cluster operating system rolling upgrades from Windows Server 2016 to Windows Server 2019, with new servers replacing the old ones. Pretty straightforward. So what does this have to do with shared nothing live migration with a virtual switch change and VLAN ID configuration?

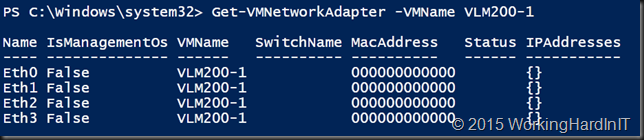

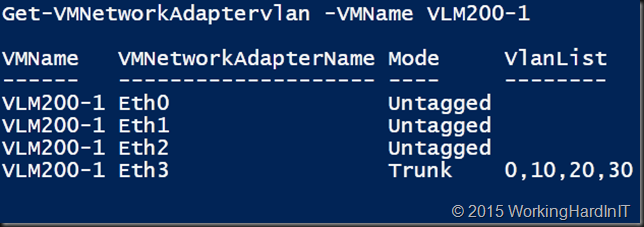

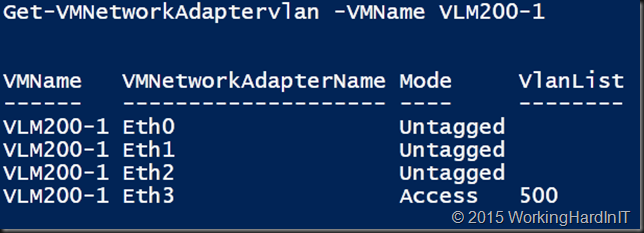

Due to the consolidation aspect, we also had to move virtual machines from some older clusters to the new clusters. The old cluster nodes have multiple virtual switches, which connect to different VLANs. Some of the virtual machines have only one virtual network adapter that connects to one of those virtual switches. Many of them, however, are multihomed: the number of virtual NICs per virtual machine ranged from 1 to 3. This meant we had to do a shared nothing live migration with a virtual switch change and VLAN ID configuration. All this without downtime.
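To find out up front how many virtual NICs each virtual machine has and which virtual switches they use, an inventory along these lines can help. This is a sketch: `Get-VMSwitchInventory` is a hypothetical helper, and it assumes the Hyper-V PowerShell module and remote access to the old host.

```powershell
# Hypothetical helper: one row per VM on a host, with its number of virtual NICs
# and the virtual switches those NICs are connected to.
function Get-VMSwitchInventory {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $ComputerName
    )

    # All virtual network adapters of all VMs on the host
    $adapters = Get-VM -ComputerName $ComputerName | Get-VMNetworkAdapter

    $adapters | Group-Object -Property VMName | ForEach-Object {
        [pscustomobject]@{
            VMName       = $_.Name
            AdapterCount = $_.Count
            # Skip disconnected adapters (empty SwitchName) and list each switch once
            Switches     = ($_.Group.SwitchName | Where-Object { $_ } | Sort-Object -Unique) -join ', '
        }
    }
}
```

For example, `Get-VMSwitchInventory -ComputerName 'NODE-A' | Sort-Object AdapterCount -Descending` lists the multihomed virtual machines first.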

Meeting the challenge

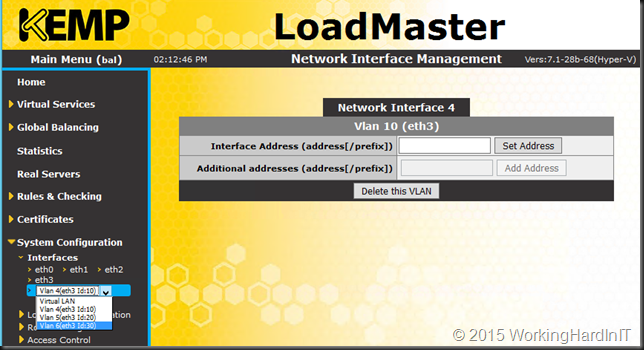

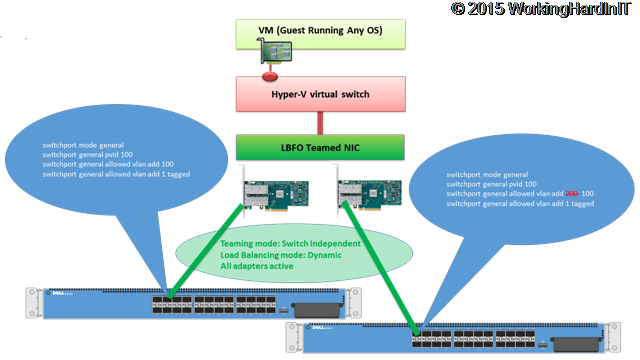

In the new cluster, there is only one converged virtual switch. This virtual switch attaches to trunked network ports carrying all the required VLANs. As there is only this one virtual switch on the new Hyper-V cluster nodes, its name differs from the names of the virtual switches on the old Hyper-V cluster nodes. This prevents live migration, because the virtual network adapters of the virtual machines reference virtual switches that do not exist on the target. Fixing this is our challenge.
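A quick way to see which virtual switch names are missing on a new host is to compare the switch names of both hosts. This is a sketch: `Get-MissingVMSwitch` is a hypothetical helper, and `NODE-A` and `ZULU` are the example host names from the sample script.

```powershell
# Hypothetical helper: returns the names of virtual switches that exist on the
# source host but not on the target host.
function Get-MissingVMSwitch {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $SourceHost,
        [Parameter(Mandatory)] [string] $TargetHost
    )

    # @() guarantees an array, even when the target has a single switch
    $targetNames = @(Get-VMSwitch -ComputerName $TargetHost | Select-Object -ExpandProperty Name)

    Get-VMSwitch -ComputerName $SourceHost |
        Where-Object { $targetNames -notcontains $_.Name } |
        Select-Object -ExpandProperty Name
}
```

In the example environment of this post, `Get-MissingVMSwitch -SourceHost 'NODE-A' -TargetHost 'ZULU'` would be expected to return `vSwitch-VLAN500` and `vSwitch-VLAN600`.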

First of all, Compare-VM is your friend for finding the incompatibilities that block a migration between the source and the target nodes. You can read about that in many places, so here we focus on our specific challenge.
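A pre-flight check could look like the sketch below. It wraps `Compare-VM`, which runs the same checks as `Move-VM` without moving anything and returns a report whose `Incompatibilities` list is empty when the move can proceed. The function name `Get-VMMigrationBlocker` is hypothetical.

```powershell
# Hypothetical helper: lists what would block a shared nothing live migration of one VM.
function Get-VMMigrationBlocker {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $VMName,
        [Parameter(Mandatory)] [string] $SourceHost,
        [Parameter(Mandatory)] [string] $DestinationHost,
        [Parameter(Mandatory)] [string] $DestinationStoragePath
    )

    # Same parameters as the Move-VM call in the sample script
    $report = Compare-VM -Name $VMName -ComputerName $SourceHost `
        -DestinationHost $DestinationHost -IncludeStorage `
        -DestinationStoragePath $DestinationStoragePath

    # Each incompatibility carries a MessageId and a readable Message
    foreach ($issue in $report.Incompatibilities) {
        [pscustomobject]@{
            VMName    = $VMName
            MessageId = $issue.MessageId
            Message   = $issue.Message
        }
    }
}
```

Usage, with `VM01` as a placeholder name: `Get-VMMigrationBlocker -VMName 'VM01' -SourceHost 'NODE-A' -DestinationHost 'ZULU' -DestinationStoragePath 'C:\ClusterStorage\Volume1\VM01' | Format-Table`. With only the converged switch on the target, you would expect entries about the missing virtual switches; once matching switch names exist on the target, those should disappear.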

Making shared nothing migration work

The first step is to make sure shared nothing migration works. We can achieve this in several ways.

Option 1

We can disconnect the virtual machine network adapters from their virtual switch. While this allows you to migrate the virtual machines, it also leads to connectivity loss. This is not acceptable.

Option 2

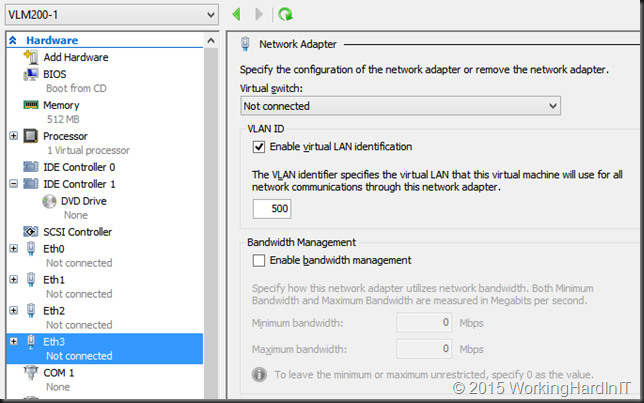

We can preemptively connect the virtual machine network adapters to a virtual switch on the old hosts that has the same name as the one on the target, and set the VLAN ID. This means you have to create such a virtual switch on the old hosts, and you need NICs to do so. Unless you configure and connect those NICs to the network just like on the new Hyper-V hosts, this also leads to connectivity loss. That was not possible in this case, so this option is unacceptable as well.

Option 3

What I did was create dummy virtual switches on the target hosts. For this purpose, I used some spare LOM NICs. I did not configure them otherwise. As a matter of fact, I did not even connect them. The mere fact that virtual switches exist with the same names as on the old Hyper-V hosts is sufficient to make shared nothing migration possible. This is a good moment to point out that we don't even need spare NICs: dummy private virtual switches that are not attached to any NIC will do just as well.
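Creating the dummy private virtual switches could be sketched as follows. `New-DummyVMSwitch` is a hypothetical helper; the switch names are the example names from the sample script.

```powershell
# Hypothetical helper: creates private "dummy" virtual switches on a target host,
# named like the virtual switches on the old hosts. Private switches need no NIC.
function New-DummyVMSwitch {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string] $ComputerName,
        [Parameter(Mandatory)] [string[]] $SwitchName
    )

    foreach ($name in $SwitchName) {
        # Leave switches that already exist alone
        if (Get-VMSwitch -ComputerName $ComputerName -Name $name -ErrorAction SilentlyContinue) {
            Write-Verbose "$name already exists on $ComputerName"
            continue
        }
        if ($PSCmdlet.ShouldProcess($ComputerName, "Create private virtual switch $name")) {
            New-VMSwitch -ComputerName $ComputerName -Name $name -SwitchType Private
        }
    }
}
```

Usage: `New-DummyVMSwitch -ComputerName 'ZULU' -SwitchName 'vSwitch-VLAN500', 'vSwitch-VLAN600'`. If you prefer the spare NIC route, `New-VMSwitch -Name <name> -NetAdapterName <NIC> -AllowManagementOS $false` creates an external switch bound to that NIC instead.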

After we have finished the migrations, we just delete the dummy virtual switches. That's all there is to do if you used private ones. If you used spare NICs, disable those again as well. Now everything is as it was, and as it should be, on the new cluster nodes.
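Before deleting the dummy switches, it is worth checking that no virtual machine is still attached to them, for example one whose reconnect step failed. A minimal sketch, with `Remove-DummyVMSwitch` being a hypothetical helper:

```powershell
# Hypothetical helper: removes dummy virtual switches, but only when no VM
# network adapter on the host is still connected to them.
function Remove-DummyVMSwitch {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string] $ComputerName,
        [Parameter(Mandatory)] [string[]] $SwitchName
    )

    # All VM network adapters on the host, used for the "still in use" check
    $adapters = @(Get-VM -ComputerName $ComputerName | Get-VMNetworkAdapter)

    foreach ($name in $SwitchName) {
        if (-not (Get-VMSwitch -ComputerName $ComputerName -Name $name -ErrorAction SilentlyContinue)) {
            continue
        }
        $inUse = @($adapters | Where-Object SwitchName -eq $name)
        if ($inUse.Count -gt 0) {
            $vmNames = ($inUse.VMName | Sort-Object -Unique) -join ', '
            Write-Warning "$name on $ComputerName is still used by: $vmNames"
            continue
        }
        if ($PSCmdlet.ShouldProcess($ComputerName, "Remove virtual switch $name")) {
            Remove-VMSwitch -ComputerName $ComputerName -Name $name -Force
        }
    }
}
```

Usage: `Remove-DummyVMSwitch -ComputerName 'ZULU' -SwitchName 'vSwitch-VLAN500', 'vSwitch-VLAN600'`. For spare NICs, something like `Disable-NetAdapter -Name 'LOM3' -CimSession 'ZULU' -Confirm:$false` disables them again (the adapter name `LOM3` is hypothetical).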

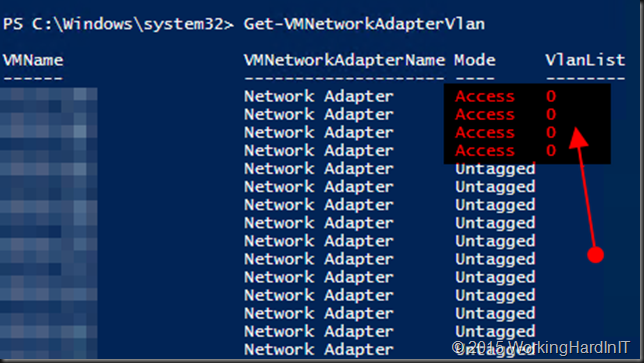

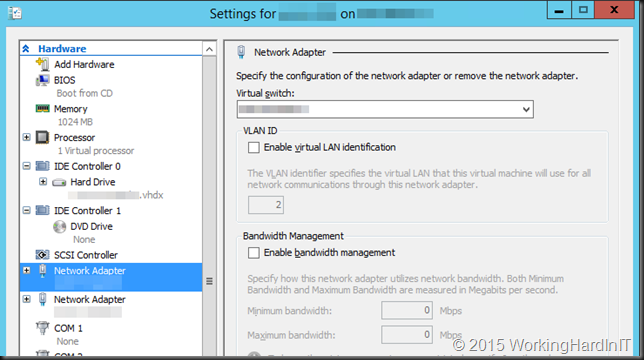

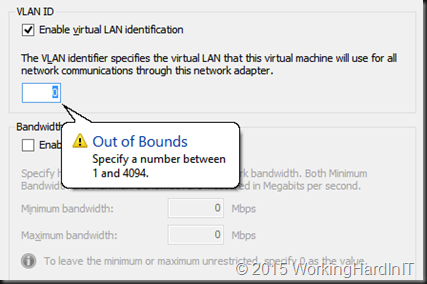

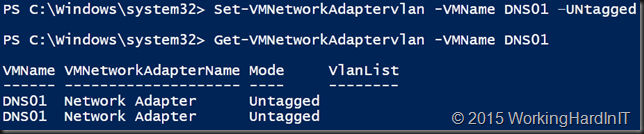

Turning shared nothing migration into shared nothing live migration

Remember, we need zero downtime. As long as the shared nothing live migration is running, all is well: we still have connectivity to the original virtual machines on the old cluster nodes. As soon as the migration finishes, however, the virtual machines run on the new host, connected to dummy virtual switches without network connectivity. At that moment we do 2 things. First, we connect the virtual network adapters of the virtual machines to the new converged virtual switch. Second, we set the VLAN ID. To achieve this, we script it out in PowerShell. This is so fast that we drop only 1 or 2 pings, just like with a standard live migration.
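The sample script handles two hard-coded switches. As a variation, the same reconnect step can be driven by a table that maps each old virtual switch name to its VLAN ID, so an extra switch is one extra line. `Set-ConvergedSwitchVlan` and `$SwitchVlanMap` are hypothetical names; the switch names and VLAN IDs are the example values from the sample script.

```powershell
# Hypothetical mapping: old (dummy) virtual switch name -> access VLAN ID on the converged switch
$SwitchVlanMap = @{
    'vSwitch-VLAN500' = 500
    'vSwitch-VLAN600' = 600
}

# Hypothetical helper: moves a migrated VM's adapters from the dummy switches
# to the converged switch and sets the matching access VLAN ID.
function Set-ConvergedSwitchVlan {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string] $ComputerName,
        [Parameter(Mandatory)] [string] $VMName,
        [Parameter(Mandatory)] [hashtable] $SwitchVlanMap,
        [string] $NewSwitchName = 'ConvergedVirtualSwitch'
    )

    $adapters = Get-VMNetworkAdapter -ComputerName $ComputerName -VMName $VMName

    foreach ($adapter in $adapters) {
        # Skip disconnected adapters and adapters that are not on a mapped switch
        if (-not $adapter.SwitchName -or -not $SwitchVlanMap.ContainsKey($adapter.SwitchName)) {
            continue
        }
        $vlanId = $SwitchVlanMap[$adapter.SwitchName]

        if ($PSCmdlet.ShouldProcess("$VMName ($($adapter.Name))", "Connect to $NewSwitchName with VLAN $vlanId")) {
            # Same order as the sample script: connect first, then set the access VLAN
            Connect-VMNetworkAdapter -VMNetworkAdapter $adapter -SwitchName $NewSwitchName
            Set-VMNetworkAdapterVlan -VMNetworkAdapter $adapter -Access -VlanId $vlanId
        }
    }
}
```

You would call it right after `Move-VM` returns, for example `Set-ConvergedSwitchVlan -ComputerName 'ZULU' -VMName $VMName -SwitchVlanMap $SwitchVlanMap`. Because it only touches adapters still attached to a mapped switch, running it a second time does nothing.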

Below you can find a conceptual script you can adapt for your own purposes. For real migrations, add logging and error handling. Please note that to leverage shared nothing live migration, you need to be aware of the security requirements. Credential Security Support Provider (CredSSP) is the default authentication protocol. If you want or need to use Kerberos, you must configure constrained delegation in Active Directory.

I chose to use CredSSP as we would decommission the old hosts soon afterward anyway. It also meant we did not need any Active Directory work done, which can be handy if getting such changes made is not straightforward in the environment you are in. We started the script on every source Hyper-V host (with CredSSP you need to be signed in to the source host), migrating a bunch of VMs to a new Hyper-V host. This worked very well for us. Hope this helps.
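If the hosts are not yet set up for this, the host side of the configuration can be applied with `Enable-VMMigration` and `Set-VMHost`. The sketch below is a hypothetical helper (`Set-MigrationAuthentication` is not a built-in cmdlet). It does not configure Kerberos constrained delegation in Active Directory; if you pick Kerberos, you still need to do that separately.

```powershell
# Hypothetical helper: enables live migration on each host and sets the
# authentication protocol used for (shared nothing) live migration.
function Set-MigrationAuthentication {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string[]] $ComputerName,
        [ValidateSet('CredSSP', 'Kerberos')] [string] $Protocol = 'CredSSP'
    )

    foreach ($computer in $ComputerName) {
        if ($PSCmdlet.ShouldProcess($computer, "Enable live migration with $Protocol authentication")) {
            # Turns on incoming and outgoing live migrations on the host
            Enable-VMMigration -ComputerName $computer
            # CredSSP: run Move-VM while signed in to the source host.
            # Kerberos: also requires constrained delegation in Active Directory (not done here).
            Set-VMHost -ComputerName $computer -VirtualMachineMigrationAuthenticationType $Protocol
        }
    }
}
```

Usage with the example host names: `Set-MigrationAuthentication -ComputerName 'NODE-A', 'ZULU' -Protocol CredSSP`. Add `-WhatIf` first to see what it would change.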

Sample Script

```powershell
# Run this while signed in to the source Hyper-V host (CredSSP).

# The source Hyper-V host
$SourceNode = 'NODE-A'

# The CSV (LUN) you want to storage migrate your VMs away from (wildcard pattern for -like)
$SourceRootPath = 'C:\ClusterStorage\Volume1*'

# The target Hyper-V host
$TargetNode = 'ZULU'

# The storage path you want to storage migrate your VMs to
$TargetRootPath = 'C:\ClusterStorage\Volume1'

# Virtual switch names on the old hosts (and of the dummy switches on the new hosts)
$OldVirtualSwitch01 = 'vSwitch-VLAN500'
$OldVirtualSwitch02 = 'vSwitch-VLAN600'

# The converged virtual switch on the new hosts
$NewVirtualSwitch = 'ConvergedVirtualSwitch'

# The VLAN IDs that correspond to the old virtual switches
$VlanId01 = 500
$VlanId02 = 600

# Grab all the VMs that have virtual disks on the source CSV.
# WARNING: for W2K12 you'll need to loop through all cluster nodes.
$AllVMsOnRootPath = Get-VM -ComputerName $SourceNode |
    Where-Object { $_.HardDrives.Path -like $SourceRootPath }

# Loop through all VMs we find on our $SourceRootPath
foreach ($VM in $AllVMsOnRootPath) {
    # Grab the VM name and generate the final VM destination path
    $VMName = $VM.Name
    $TargetVMPath = $TargetRootPath + '\' + $VMName.ToUpper()
    Write-Host "Processing $VMName ($($VM.VMId))"

    if ($VM.IsClustered) {
        Write-Host -ForegroundColor Magenta "$VMName is clustered and is being removed from the cluster"
        Remove-ClusterGroup -VMId $VM.VMId -Force -RemoveResources
        # $VM is a snapshot that never updates itself, so re-query until the VM is no longer clustered
        do { Start-Sleep -Seconds 1 } while ((Get-VM -ComputerName $SourceNode -Id $VM.VMId).IsClustered)
        Write-Host -ForegroundColor Yellow "$VMName has been removed from the cluster"
    }

    # Do the actual shared nothing live migration. $TargetVMPath gets the default subfolder
    # structure for the VM configuration, checkpoints, smart paging and virtual hard disk files.
    Move-VM -Name $VMName -ComputerName $VM.ComputerName -IncludeStorage `
        -DestinationStoragePath $TargetVMPath -DestinationHost $TargetNode

    # Adapters that landed on dummy switch 01: connect them to the converged switch and set the VLAN
    $OldvSwitch01 = Get-VMNetworkAdapter -ComputerName $TargetNode -VMName $VMName |
        Where-Object SwitchName -eq $OldVirtualSwitch01
    foreach ($VMNetworkAdapter in $OldvSwitch01) {
        Write-Host 'Moving to correct vSwitch'
        Connect-VMNetworkAdapter -VMNetworkAdapter $VMNetworkAdapter -SwitchName $NewVirtualSwitch
        Write-Host "Setting VLAN $VlanId01"
        Set-VMNetworkAdapterVlan -VMNetworkAdapter $VMNetworkAdapter -Access -VlanId $VlanId01
    }

    # Same for the adapters that landed on dummy switch 02
    $OldvSwitch02 = Get-VMNetworkAdapter -ComputerName $TargetNode -VMName $VMName |
        Where-Object SwitchName -eq $OldVirtualSwitch02
    foreach ($VMNetworkAdapter in $OldvSwitch02) {
        Write-Host 'Moving to correct vSwitch'
        Connect-VMNetworkAdapter -VMNetworkAdapter $VMNetworkAdapter -SwitchName $NewVirtualSwitch
        Write-Host "Setting VLAN $VlanId02"
        Set-VMNetworkAdapterVlan -VMNetworkAdapter $VMNetworkAdapter -Access -VlanId $VlanId02
    }
}
```