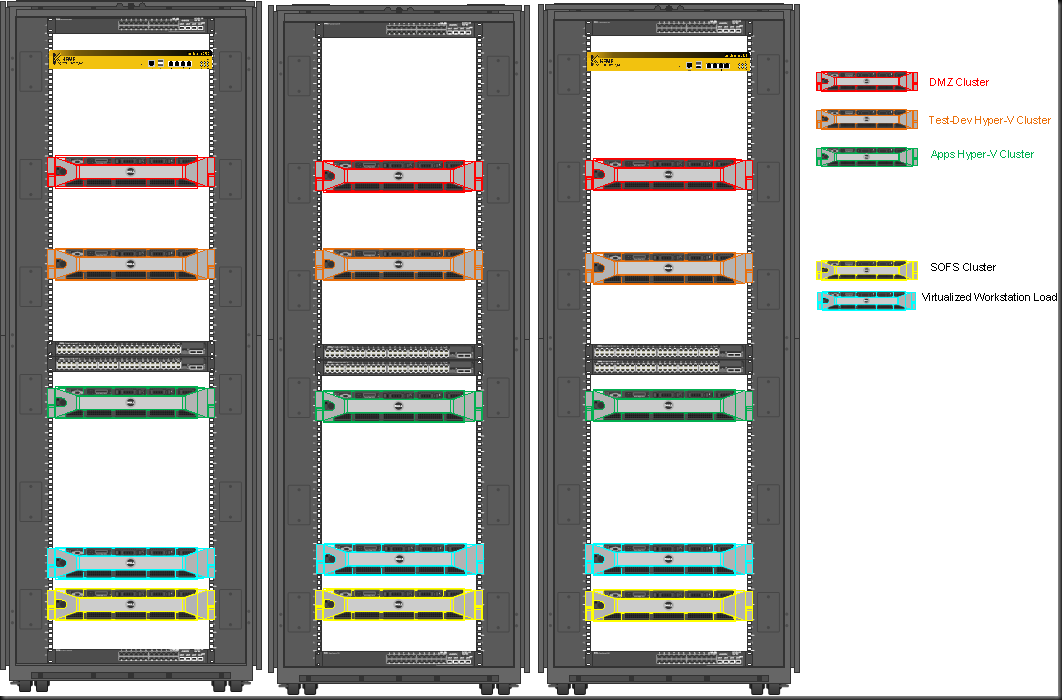

I was writing some small PowerShell scripts to pause and resume Hyper-V cluster hosts, and I wanted to monitor the progress of draining the virtual machines off the node when pausing it. I found this nice blog post, Draining Nodes for Planned Maintenance with Windows Server 2012, which discusses this subject and provides the properties to do just that.

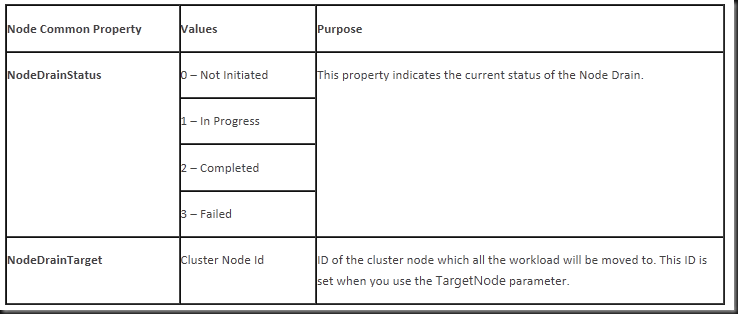

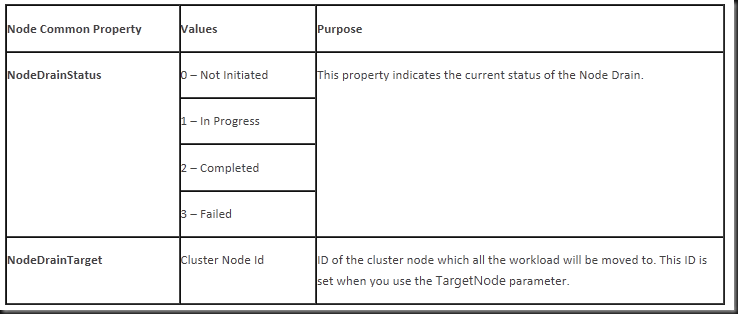

It seems we have two common properties at our disposal: NodeDrainStatus and NodeDrainTarget.

So I set to work, but I just couldn’t get those properties to read. It was as if they didn’t exist. So I pinged Jeff Wouters, who happens to use PowerShell for just about anything, and asked him if it was me being stupid and missing the obvious. Well, I was missing the obvious for sure: those properties do not exist. Jeff told me to double check using:

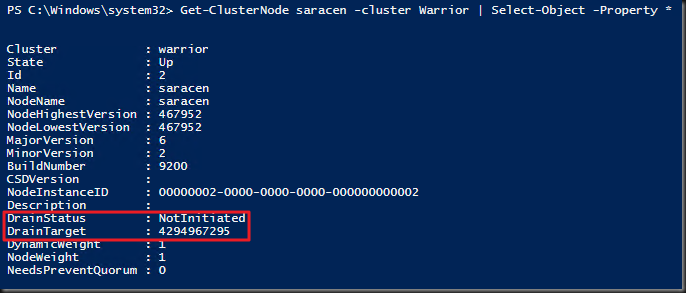

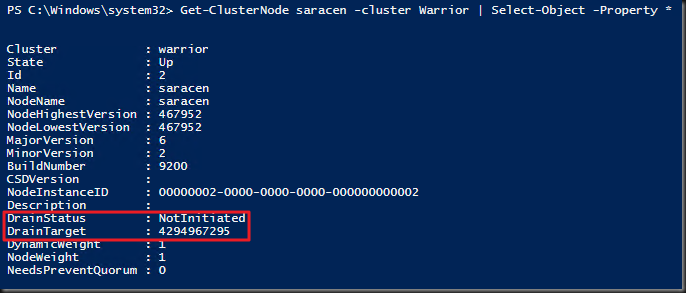

Get-ClusterNode MyNode -cluster MyCluster | Select-Object -Property *

Guess what, it’s not NodeDrainStatus and NodeDrainTarget but DrainStatus and DrainTarget.
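If you want to check this yourself, something along these lines lists whatever drain-related properties the node object actually exposes. It's a minimal sketch that reuses the MyNode and MyCluster placeholder names from the command above and assumes the FailoverClusters module is installed on the machine you run it from.

```powershell
# MyNode and MyCluster are placeholders; replace them with your own node and cluster.
$node = Get-ClusterNode -Name MyNode -Cluster MyCluster

# List every property whose name contains "Drain" (-Name accepts wildcards).
$node | Get-Member -MemberType Properties -Name *Drain*

# Show the drain state next to the node name and state.
$node | Select-Object -Property Name, State, DrainStatus, DrainTarget
```

Filtering with Get-Member keeps the output short, which makes a renamed property much easier to spot than scrolling through the full Select-Object dump.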

What put me off here was the following example in the same blog post:

Get-ClusterResourceType "Virtual Machine" | Get-ClusterParameter NodeDrainMoveTypeThreshold

That should have been a dead giveaway, as we’ve been using MoveTypeThreshold a lot in recent months and there is no NodeDrain in that name either. But it just didn’t register. By the way, you don’t need to create the property either; it already exists. I guess this code was valid with some earlier version (beta?) but not anymore. You can just get and set the property like this:

Get-ClusterResourceType "Virtual Machine" -Cluster MyCluster | Get-ClusterParameter MoveTypeThreshold

Get-ClusterResourceType "Virtual Machine" -Cluster MyCluster | Set-ClusterParameter MoveTypeThreshold 2000
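If you'd rather not write the value blindly, the same two cmdlets can be combined into a small read-then-set step. This is only a sketch: MyCluster is the placeholder from above, the $desired variable is something I made up for illustration, and 2000 is simply the value used in the example, not a recommendation.

```powershell
# Hypothetical target value, taken from the example above.
$desired = 2000

# MyCluster is a placeholder; replace it with your own cluster name.
$resourceType = Get-ClusterResourceType -Name "Virtual Machine" -Cluster MyCluster

# Read the current value of the parameter.
$current = ($resourceType | Get-ClusterParameter -Name MoveTypeThreshold).Value
Write-Host "Current MoveTypeThreshold: $current"

# Only write when the value actually differs.
if ($current -ne $desired)
{
    $resourceType | Set-ClusterParameter -Name MoveTypeThreshold -Value $desired
    Write-Host "MoveTypeThreshold set to $desired"
}
```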

So, lessons learned: trust but verify. Don’t forget that a lot of things in IT have a time-limited value. Make sure to look at the date of what you’re reading and at which pre-RTM version of the product the information applies to.


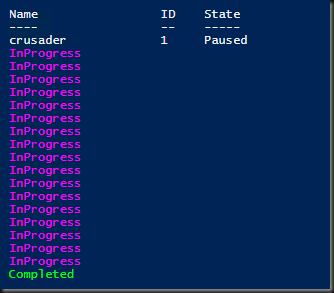

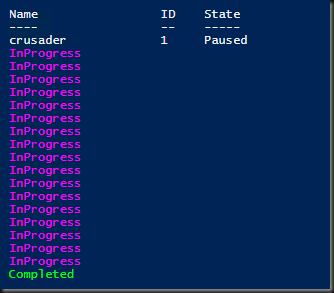

To conclude, here’s the PowerShell snippet I used to monitor the draining process.

Suspend-ClusterNode -Name crusader -Cluster warrior -Drain

Do

{

Write-Host (Get-ClusterNode -Name "crusader" -Cluster warrior).DrainStatus -ForegroundColor Magenta

Start-Sleep -Seconds 1

}

Until ((Get-ClusterNode -Name "crusader" -Cluster warrior).DrainStatus -ne "InProgress")

If ((Get-ClusterNode -Name "crusader" -Cluster warrior).DrainStatus -eq "Completed")

{

Write-Host (Get-ClusterNode -Name "crusader" -Cluster warrior).DrainStatus -ForegroundColor Green

}

Which outputs