Recently version 7.6.0.47 became available to us as DELL Compellent customers. I downloaded it and found some welcome new capabilities in the release notes.

- Support for vSphere 6

- 2048 bit public key support for SSL/TLS

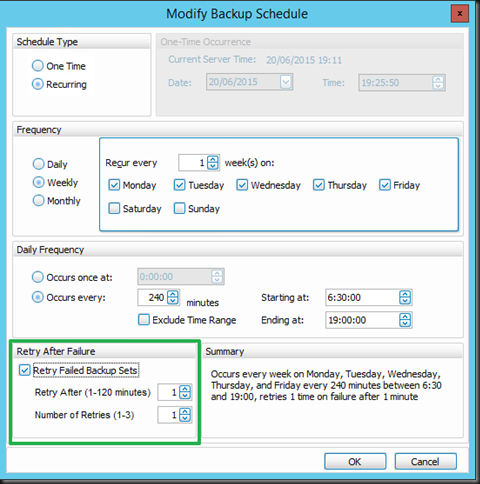

- The ability to retry failed jobs (Microsoft Extensions Only)

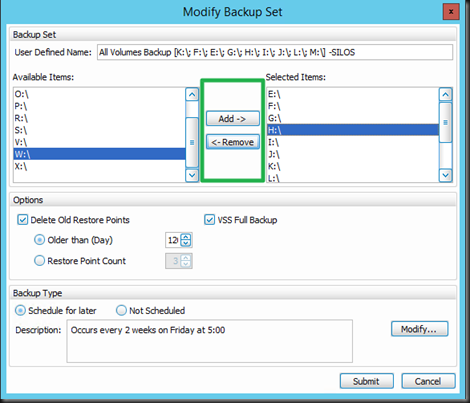

- The ability to modify a backup set (Microsoft Extensions Only)

The ability to retry failed jobs is handy. There might be a conflicting backup running via a 3rd party tool that leverages the hardware VSS provider, and the ability to retry can mitigate this. As we do multiple replays per day and have them scheduled recurrently, we had already mitigated the negative effects of this, but it gives us more options to deal with such situations. It’s good.

The ability to modify a backup set is one I love. It was just so annoying not to be able to do this before. A change in the environment meant having to create a new backup set. That also meant keeping the old job around for as long as you wanted to retain the replays associated with that job. Not the best way of handling change I’d say, so this made me happy when I saw it.

Now I’d like DELL to invest a bit more in making the restore of volume based replays of virtual machines easier. I actually like the volume based ones with Hyper-V, as it’s one snapshot per CSV for all VMs and it doesn’t require all the VMs to reside on the host where we originally defined the backup set. Optimally you do run all the VMs on the node that owns the CSV, but otherwise it has fewer restrictions. In my humble opinion anything that restricts VM mobility is bad and goes against the grain of virtualization and dynamic optimization. I wonder if this has more to do with older CSV/Hyper-V versions, current limitations in Windows Server Hyper-V or CSV, or a combination. This makes for a nice discussion, so if anyone from MSFT & the DELL Storage team responsible for Replay Manager wants to have one, just let me know.

Last but not least I’d love DELL to communicate in Q4 of 2015 on how they will integrate their data protection offering in Compellent/Replay Manager with the Windows Server 2016 backup changes and enhancements. That’s quite a change that’s happening for Hyper-V and it would be good for all to know what’s being done to leverage that. Another thing that is high on my priority list is to enable leveraging replays with Live Volumes. For me that’s the biggest drawback to Live Volumes: having to choose between high/continuous availability and application consistent replays for data protection and other use cases.

I have some more things on my wish list, but these are out of scope for this blog post.