Introduction

There are pundits out there who claim that you cannot create a fully provisioned LUN on a Compellent SAN. Now that's what I call unsubstantiated rumors, better known as bull shit.

Sure, the magic sauce of many modern storage arrays lies in thin provisioning. Let there be no mistake about that. But there are scenarios where you might want to leverage a fully provisioned volume, also known as a thick provisioned LUN. You can read about one such scenario where they make perfect sense in this blog post: Mind the UNMAP Impact On Performance In Certain Scenarios.

Create a Full or Thick Provisioned Volume on Compellent

First of all you create a brand new volume in the Storage Center System Explorer. That’s as standard as it gets.

You then map this volume to a server.

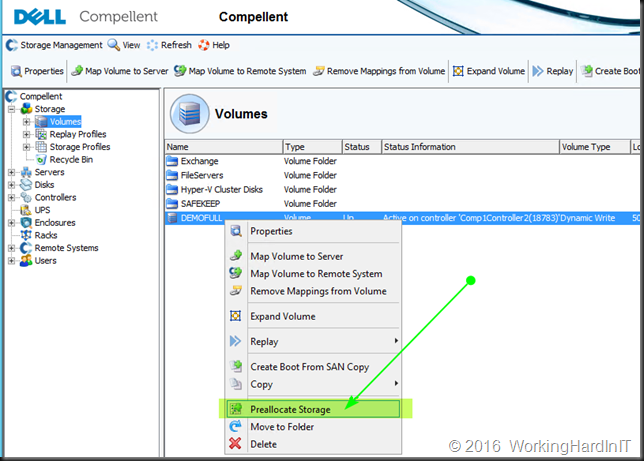

At that moment, before you even mount that volume on your server, let alone do anything else such as bringing it online or formatting it, you “Preallocate Storage” for that volume in Storage Center.

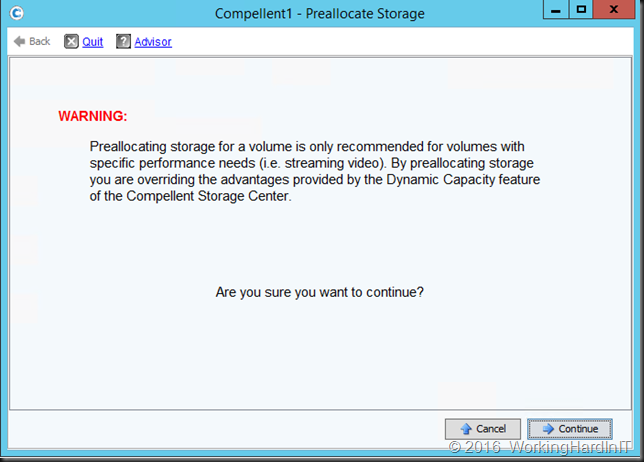

You’ll get a warning, as this is not a default action and you should only do it when the I/O conditions warrant it.

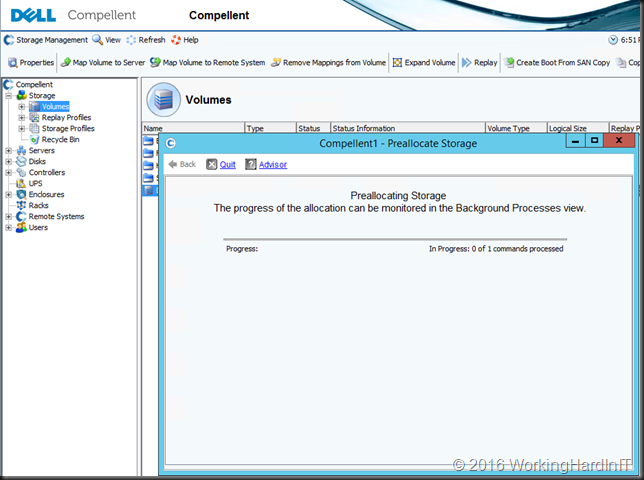

When you continue you’ll get some feedback. This can take quite some time depending on the size of the volume.

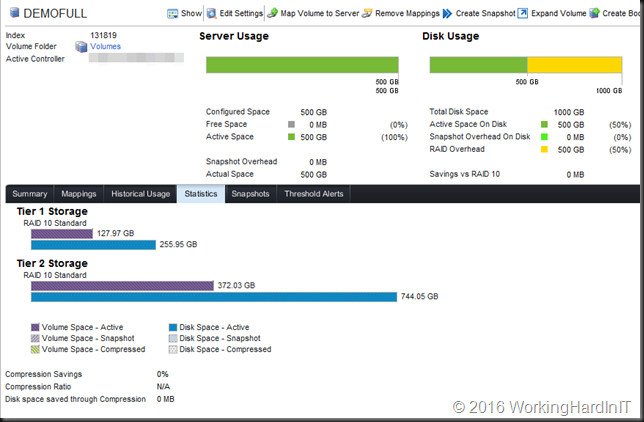

When it’s done, peek at the statistics of that full or thick provisioned volume on the Compellent. This is what it looks like right after preallocation finished, so before we have even formatted the volume on a server or written any data to it. It’s using all the space on the SAN from the start.

Due to data protection it’s even more. It’s clear from the image that a 500GB disk in RAID 10 fully provisioned is using 1TB of space, as it’s all still in RAID 10 (no tiering down has occurred yet). RAID 10 has an overhead factor of 2. The volume is for a large part in Tier 2 because my Tier 1 is full, so writing spilled over into Tier 2.
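If you want to sanity check that arithmetic, here is a minimal sketch in Python. It is not anything Storage Center runs or exposes; the function name `raw_space_gb` and the constant `RAID10_OVERHEAD` are made up for illustration, and the only inputs are the 500GB volume size and the RAID 10 overhead factor of 2 mentioned above.

```python
# Hypothetical helper, purely illustrative: raw capacity consumed on the
# array for a given amount of allocated (logical) space.
def raw_space_gb(allocated_gb: float, overhead_factor: float) -> float:
    return allocated_gb * overhead_factor


# RAID 10 mirrors every block, hence the overhead factor of 2 from the text.
RAID10_OVERHEAD = 2.0

# Thick volume: all 500 GB are allocated up front by "Preallocate Storage".
thick = raw_space_gb(500, RAID10_OVERHEAD)

# Thin volume: nothing has been written yet, so nothing is allocated.
thin = raw_space_gb(0, RAID10_OVERHEAD)

print(f"thick: {thick:.0f} GB raw, thin: {thin:.0f} GB raw")
```

The thick volume comes out at 1000 GB of raw space, which is the 1TB shown in the statistics, while the untouched thin volume consumes nothing.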

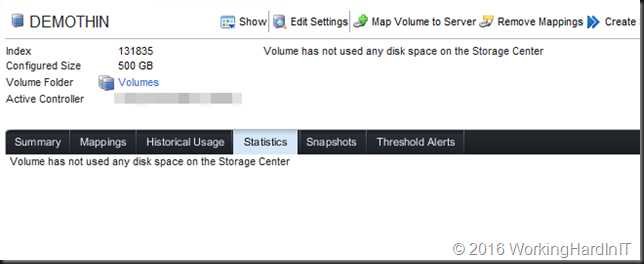

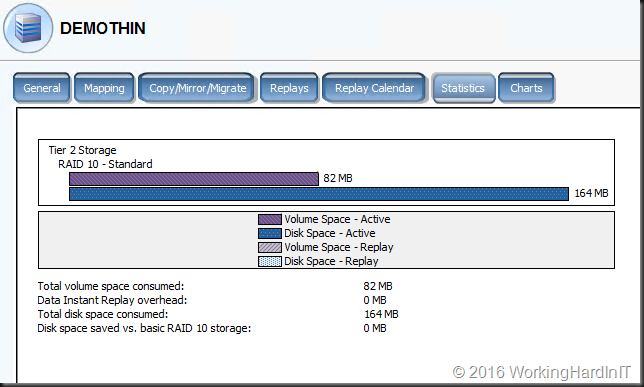

Now compare this to a thinly provisioned volume that we just created and, again, haven’t touched in any other way.

Yup, until we actually write data to the volume it’s highly space efficient. There is absolutely no space in use, and we’ll see only a little when we mount it, initialize the disk in Windows, create a simple volume and format it.

This is completely in Tier 2, as my Tier 1 is full. I accept donations of SANs and SSDs for my lab if this bothers you. When we write data to it you’ll see this rise, and over time you’ll see it tier down and up as well.
