Bug when changing the “Store this conditional forwarder in Active Directory” setting

Recently I encountered a bug when changing the “Store this conditional forwarder in Active Directory” setting. I have been extending quite a few Active Directory environments to Azure lately. Part of the follow-up work is making sure that DNS name resolution from on-premises to Azure and vice versa works optimally. To resolve Azure private endpoints and other private DNS zones from on-premises, we need to add conditional forwarders for the respective Azure DNS zones.

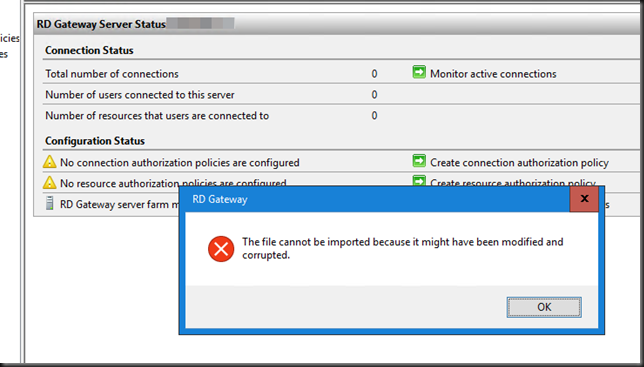

As we have different needs for this configuration on-premises versus in Azure, we disable “Store this conditional forwarder in Active Directory, and replicate as follows” for all zones. This is the default when you add a conditional forwarder.

However, in certain cases you will also need to do this for other conditional forwarders, depending on the DNS infrastructure between Azure and on-premises. I tend to change those non-Azure conditional forwarders before I add the one needed for Azure.
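Before changing anything, it can help to list which conditional forwarders exist on a DNS server and whether they are stored in Active Directory. The following is a minimal sketch, assuming it runs on the DNS server itself with the DnsServer PowerShell module available:

```powershell
# Requires the DnsServer module (DNS Server role or the RSAT DNS tools).
# Lists every conditional forwarder on the local DNS server and shows
# whether it is stored in Active Directory (IsDsIntegrated).
Get-DnsServerZone |
    Where-Object { $_.ZoneType -eq 'Forwarder' } |
    Select-Object ZoneName, IsDsIntegrated, ReplicationScope, MasterServers
```

Keeping a copy of this output gives you the zone names and forwarder IP addresses you need if you have to recreate the forwarders later.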

While that sounds easy enough, you can easily get into a pickle. After you change the setting, the configuration still looks perfectly fine, but name resolution stops working for the zones you changed. That is bad. No bueno!

That can break a lot of services and applications, leading to support calls, upset application owners, and lost revenue, while leaving you scrambling to find a fix.

So how do we fix this?

Well, the only solution I have found is to remove each and every conditional forwarder involved and add them again. While re-adding one, you might get an “unknown error” in the GUI, but ignore it. Just go ahead. When your reverse lookup zones are in order, the forwarder's IP address will resolve to its FQDN, and name resolution will start working again. You can also use PowerShell or the command line. It is worth checking whether changing the setting via PowerShell or the command line triggers the bug or not.
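As a rough sketch, removing and re-adding a single conditional forwarder with PowerShell could look like the following. The zone name, forwarder IP address, and test host name are hypothetical placeholders, and I am assuming the forwarder should end up not stored in Active Directory, which is what you get when `-ReplicationScope` is omitted:

```powershell
# Hypothetical example values; replace them with your own.
$zoneName   = 'privatelink.blob.core.windows.net'  # example zone to forward
$forwarders = '10.0.0.4'                            # assumed IP of the DNS server in Azure

# Record the current configuration before removing anything.
Get-DnsServerZone -Name $zoneName |
    Format-List ZoneName, ZoneType, IsDsIntegrated, MasterServers

# Remove the conditional forwarder, then add it again.
# Without -ReplicationScope the forwarder is not stored in Active Directory.
Remove-DnsServerZone -Name $zoneName -Force
Add-DnsServerConditionalForwarderZone -Name $zoneName -MasterServers $forwarders

# Verify that this DNS server resolves a name in the zone again.
# The host name below is a made-up example.
Resolve-DnsName -Name 'mystorageaccount.privatelink.blob.core.windows.net' -Server 127.0.0.1
```

Note that removing a forwarder that is stored in Active Directory removes it from the directory, so it also disappears from the other DNS servers it was replicated to.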

Please note that, as you are not replicating the conditional forwarders in Active Directory, you must do this on every on-premises DNS server involved in resolving Azure resources.
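To avoid repeating this by hand on every server, the same remove and re-add can be looped over the DNS servers with the `-ComputerName` parameter. This is only a sketch: the server names, zone name, and forwarder IP address are hypothetical, and it assumes your account has DNS administration rights on each server.

```powershell
# Hypothetical example values; replace them with your own.
$dnsServers = 'DC01', 'DC02'                        # assumed on-premises DNS servers
$zoneName   = 'privatelink.blob.core.windows.net'  # example zone to forward
$forwarders = '10.0.0.4'                            # assumed IP of the DNS server in Azure

foreach ($server in $dnsServers) {
    # Remove the forwarder only if it exists on this server.
    $existing = Get-DnsServerZone -ComputerName $server -Name $zoneName -ErrorAction SilentlyContinue
    if ($existing) {
        Remove-DnsServerZone -ComputerName $server -Name $zoneName -Force
    }

    # Re-add it as a forwarder that is not stored in Active Directory.
    Add-DnsServerConditionalForwarderZone -ComputerName $server -Name $zoneName -MasterServers $forwarders
}
```

Test name resolution against each server afterwards, for example with `Resolve-DnsName` and its `-Server` parameter.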

Is this a known bug?

Well, it looks like it, but I have yet to find a knowledge base article about it. There are mentions of other people running into the issue, and it is not Azure-related per se. Take a look at “DNS Conditional Forwarder stops working as soon as it’s Domain Replicated” (Microsoft Q&A) and “AD Integrating conditional DNS forwarders stops them working” (microsoft.com).

Note that this bug when changing the “Store this conditional forwarder in Active Directory” setting appears both when you enable the setting and when you disable it.

This bug has existed for many years and across many versions of Windows DNS. My most recent encounters were with Windows Server 2019 and 2022, but beware of it on Windows Server 2016 and 2012 (R2) as well.