Symbolic link to an Azure file share

We recently used a symbolic link to an Azure file share to transparently replace a local folder in which data sets are cached for download. The existing service now copies the data sets to the Azure file share without any code change for that step. With a small adaptation of the code, we can also provide download links to the data in the Azure file share, so the process is transparent for the clients downloading the data sets as well.

You can probably guess the reason for this exercise. We did it to fix an on-premises bandwidth issue with an easy workaround and minimal code changes. As more and more clients download more and more data sets, the service consumes too much bandwidth. That means we have to throttle the service and/or apply QoS to it. While this helps the other services using that internet connection, it does nothing to improve download speeds for the clients. This is just an example and is not meant as architectural or design advice. It is an interim fix for an existing problem. The same trick is used with AKS as well, for example.

How to add a symbolic link to an Azure file share

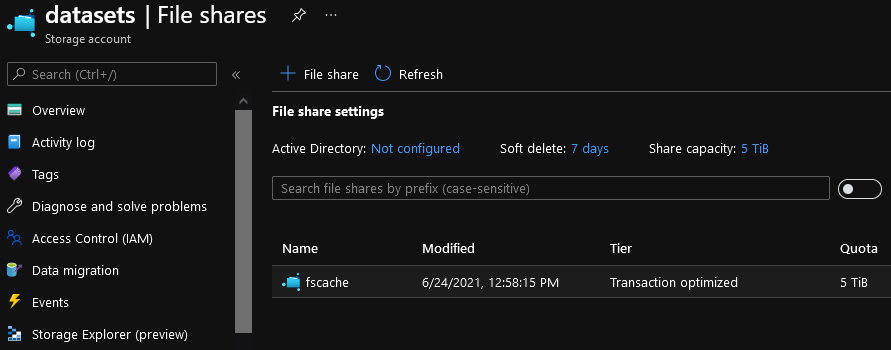

Create an Azure file share

Create a storage account and create a file share.

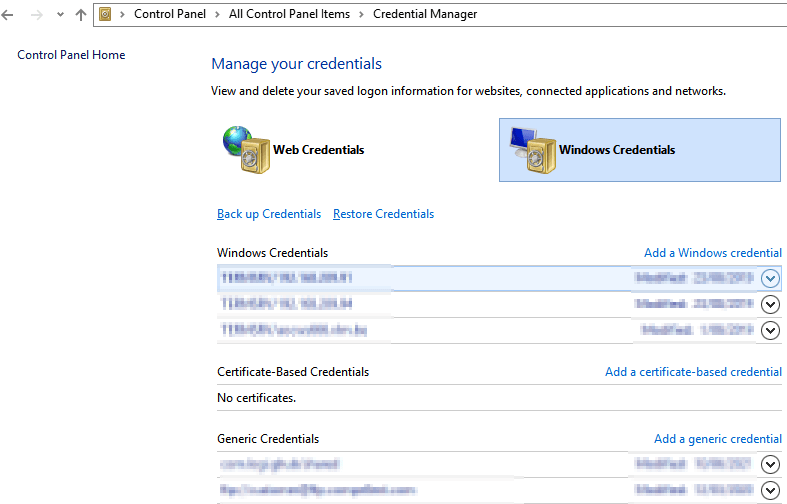

Handling credentials

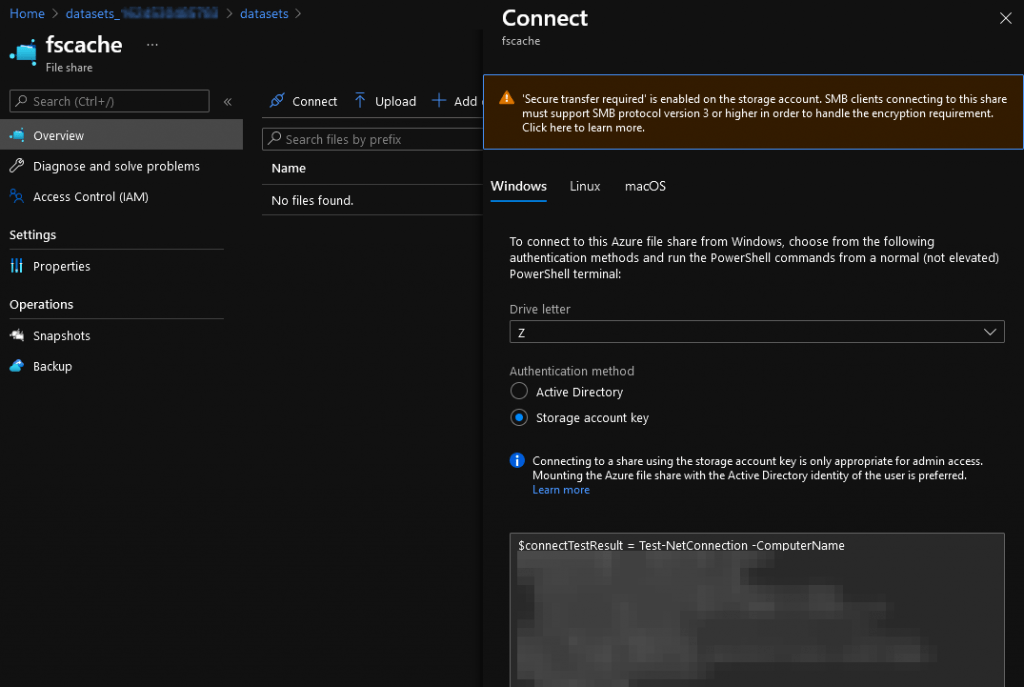

Dealing with the credentials needed for this is easy. All we need to do is add the information to Credential Manager as a Windows credential. That is the user name, the password, and the file share UNC path. Note that the password here is our storage account key.

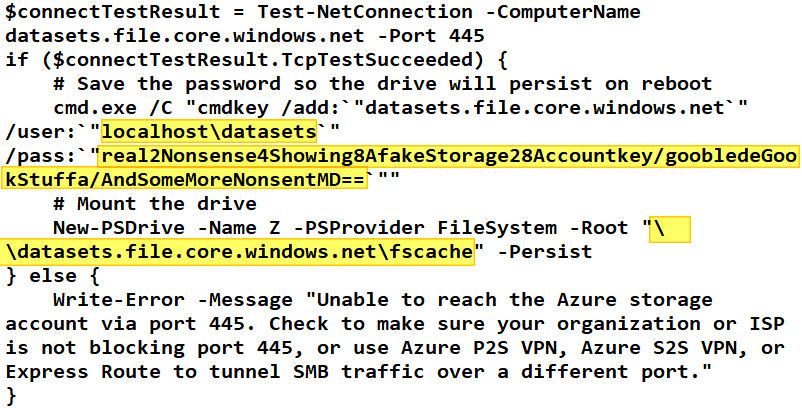

Grab the info you need from the “connect” settings for your Azure file share. We will not map the file share to a drive, so there is no need to run this PowerShell script.

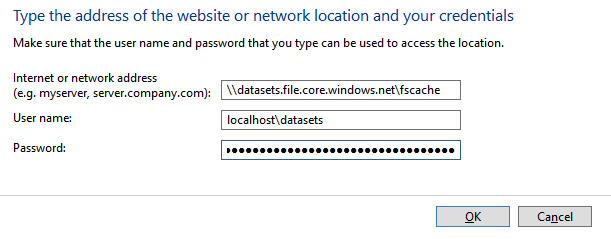

So in this example that is:

Internet or network address: \\datasets.file.core.windows.net\fscache

User name: localhost\datasets

Password: real2Nonsense4Showing8AfakeStorage28Accountkey/goobledeGookStuffa/AndSomeMoreNonsentMD==

We will add these credentials to the Credential Manager as Windows Credentials.

That is it. If you entered everything correctly, this will work.
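If you prefer to script this step instead of clicking through Credential Manager, the following is a minimal sketch, not the method used in this article. It builds a call to the Windows `cmdkey` tool, which stores the same kind of Windows credential. The function names are hypothetical, and targeting the host name rather than the full UNC path is an assumption borrowed from the way the portal's connect script calls `cmdkey`.

```python
import subprocess
import sys


def build_cmdkey_args(storage_account: str, storage_key: str) -> list[str]:
    """Build the cmdkey call that stores a Windows credential for the share host."""
    # Assumption: the credential targets the storage account's file endpoint.
    host = f"{storage_account}.file.core.windows.net"
    return [
        "cmdkey",
        f"/add:{host}",
        f"/user:localhost\\{storage_account}",
        f"/pass:{storage_key}",  # the storage account key acts as the password
    ]


def store_share_credential(storage_account: str, storage_key: str) -> None:
    """Run cmdkey. This only makes sense on Windows."""
    if sys.platform != "win32":
        raise OSError("cmdkey is only available on Windows")
    subprocess.run(build_cmdkey_args(storage_account, storage_key), check=True)
```

Windows stores credentials per user, so this would have to run under the account the service uses. Calling `store_share_credential("datasets", "<storage account key>")` would match the example values above.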

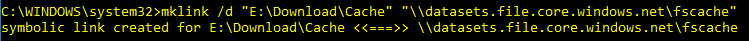

Creating the symbolic link

Once you have added the credentials, creating the symbolic link is very easy.

mklink /d "E:\Download\Cache" "\\datasets.file.core.windows.net\fscache"

You do need to take care to create the symbolic link in the right place in your folder structure. Other than that, this is all you need to do.

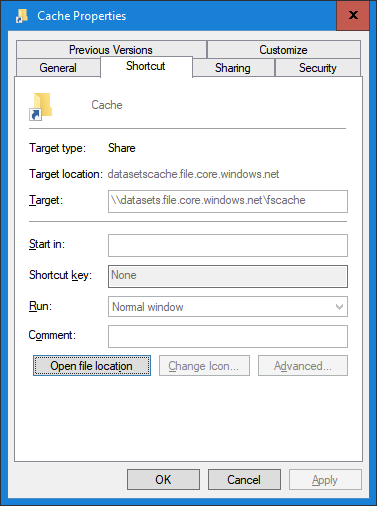

The symbolic link is available and can be used transparently by the service/application.
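For a deployment script, the same link could be created from code. The sketch below is an illustration under assumptions rather than what we ran: `link_cache_folder` is a hypothetical helper, and it uses Python's `os.symlink`. On Windows, creating symbolic links normally requires an elevated prompt or Developer Mode.

```python
import os


def link_cache_folder(link_path: str, target: str) -> bool:
    """Create a directory symbolic link at link_path that points to target.

    Returns True when the link was created and False when a symbolic link
    already exists at link_path. A real folder in that spot is an error.
    """
    if os.path.islink(link_path):
        return False  # already linked, nothing to do
    if os.path.exists(link_path):
        # Do not silently replace a real folder that may still hold cached data.
        raise FileExistsError(f"{link_path} exists and is not a symbolic link")
    os.symlink(target, link_path, target_is_directory=True)
    return True
```

With the paths from this article, the call would be `link_cache_folder(r"E:\Download\Cache", r"\\datasets.file.core.windows.net\fscache")`. The check for an existing real folder is there because the original cache folder has to be moved or emptied before the link can take its place.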

To test the file share in Azure, you can upload or download data via azcopy or Azure Storage Explorer. In our case, the download functionality is handled in the code, but here is a quick example of how to download a file via azcopy using a shared access signature (SAS).

azcopy copy "https://datasets.file.core.windows.net/fscache/DataSetSatNavSouthernUtah.zip?sv=2020-02-10&ss=bfqt&srt=sco&sp=rwdlacuptfx&se=2021-06-25T06:06:02Z&st=2021-06-24T22:06:02Z&spr=https&sig=%2FA%9SOrrY4KFAKEikPKeysOycLb4neBLogpPostpAQ624%3D" "E:\MyDataSetDownloads" --recursive
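The download links the service hands out follow the same URL pattern as the azcopy source above. As a rough sketch of how such a link could be composed, assuming a SAS token has already been generated elsewhere, the hypothetical helper below joins the account, share, and file path. It is not our production code.

```python
from urllib.parse import quote


def build_download_url(account: str, share: str, file_path: str, sas_token: str) -> str:
    """Compose an HTTPS URL for a file in an Azure file share, with a SAS token appended."""
    # Accept Windows-style separators and percent-encode each path segment.
    encoded_path = quote(file_path.replace("\\", "/").lstrip("/"), safe="/")
    # The token is assumed to be a ready-made query string, with or without "?".
    query = sas_token.lstrip("?")
    return f"https://{account}.file.core.windows.net/{share}/{encoded_path}?{query}"
```

For example, `build_download_url("datasets", "fscache", "DataSetSatNavSouthernUtah.zip", sas)` yields the kind of URL used in the azcopy command. In practice you would want a short-lived, read-only token for links given to clients.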

Pro tip: if you need to remove the symbolic link but keep the data, use rmdir "E:\Download\Cache" and not del "E:\Download\Cache". The del command deletes the files in the target folder, which is probably not what you want.
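The same precaution can be built into a cleanup script. This is a minimal sketch with a hypothetical helper name: it refuses to touch anything that is not a symbolic link, and it removes only the link itself. To my understanding, Python's `os.unlink` removes a directory symbolic link on Windows without following it, but try it on a test folder first.

```python
import os


def remove_link_keep_data(link_path: str) -> None:
    """Remove a symbolic link without touching the folder it points to."""
    if not os.path.islink(link_path):
        # A real folder here would mean deleting data, so stop instead.
        raise ValueError(f"{link_path} is not a symbolic link")
    os.unlink(link_path)  # removes the link entry only, not the target's contents
```

Calling it on a real directory raises an error instead of deleting anything, which is the safer failure mode for a cache folder.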

Next steps

Mind you, this was the quick and easy fix for a problem this service was facing. It is not a design or an architecture. We are considering replacing the symbolic link solution with Azure File Sync. With a bandwidth cap and QoS on-premises, we would offer the primary download link to the cloud, where clients can get all the bandwidth Azure can offer. Next to that, we would have an alternative link, marked as slow, that still points to the on-premises version of the data. This means the current implementation stays fully functional even when the Azure file share has an issue. Sure, the local copy comes with significantly reduced performance, but it provides a failsafe.

The future

Well, the future lies in turning this into a solution running 100% in the cloud. Due to a large number of dependencies on various on-premises data sources, this is a long-term effort. We decided not to let perfection be the enemy of the good and fixed their biggest pain point today.

Conclusion

For sure, the use of a symbolic link to access an Azure file share is not something that will amaze people who have been working in the cloud for a while. It is, however, a nice example of how Azure combined with on-premises services can result in a hybrid solution that solves real-world problems.

This particular scenario enables them to distribute their data sets without having to worry about bandwidth limitations on-premises. That means they do not have to invest in a bigger internet pipe and a firewall with more throughput, or port their service and all its dependencies to a full-blown Azure solution.

Sometimes successful and cost-effective solutions come in the form of little tweaks that allow us to fix pain points easily.