Windows Server 2012 (R2) has made many improvements to how storage optimization and maintenance is done. You can read a lot more about this in What’s New in Defrag for Windows Server 2012/2012R2. It boils down to a more intelligent approach depending on the capability of the underlying storage.

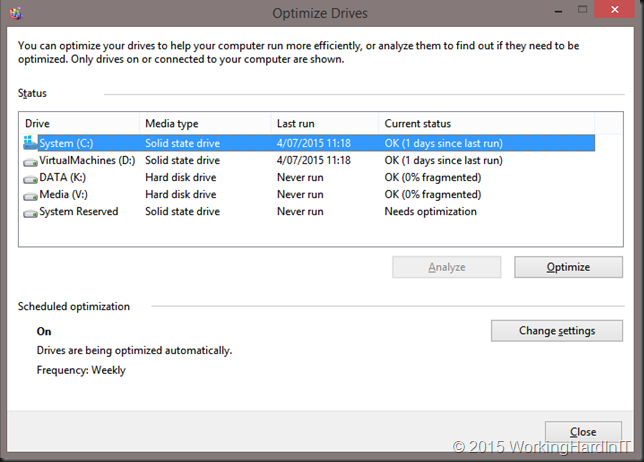

This is reflected in the Media type we see when we look at Optimize Drives.

This is my workstation … looks pretty correct a couple of SSDs and a couple of HDDs.

SSD are optimized intelligently by the way.When VSS is leveraged SSD do get fragmentation and so one in while they are “defragmented”. This has to do with keeping performance up to par. Read more about this in The real and complete story – Does Windows defragment your SSD? by Scott Hanselman.

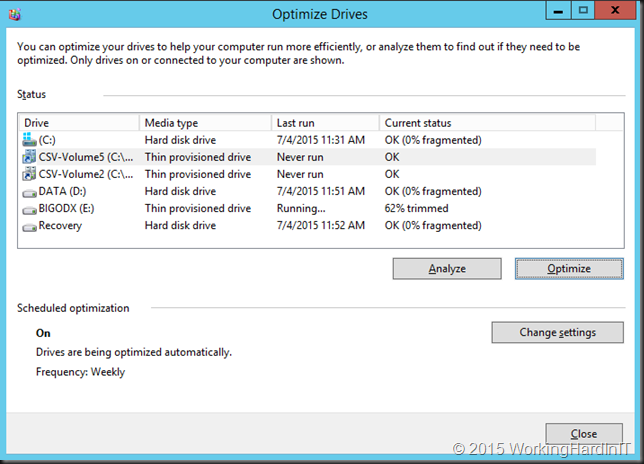

The next example is a Hyper-V Cluster. You can see the local disks identified as HDD and the CSV as Thin provisioned disks. Makes sense to me, the SAN I use supports thin provisioned disks.

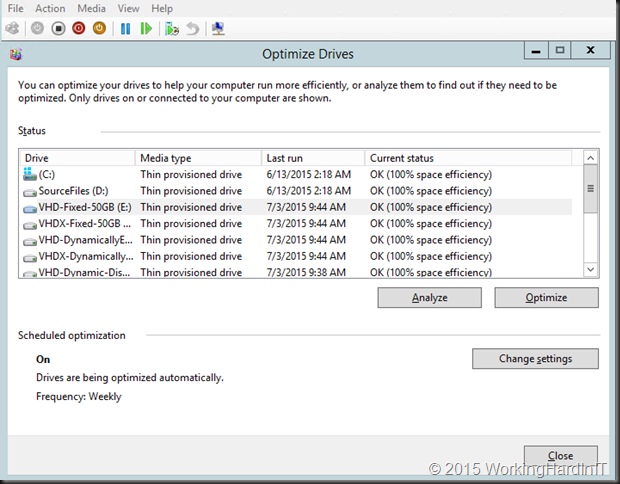

But now, let’s look at a Virtual Machine with virtual disks of every type known and on any type of storage we could find. All virtual disks are identified as “Thin provisioned disk”. How can that be?

What had me puzzled a little bit is that in a virtual machine each and every virtual disk is identified as thin provisioned disk. It doesn’t matter what type of virtual disk it is: fixed VHD/VHDX or dynamically expanding VHD/VHDX. It also doesn’t matter on what physical disk the virtual disk resides: SATA, SAS, SSD, SAN (iSCSI/FC) LUN or CSV, SMB Share …

So how does this work with a fixed VHD on a local SATA disk? A VHD doesn’t know about UNMAP, does it? And a SATA HHD? How does that compute? Well, my understanding on this is that all virtual disks, dynamically expanding or fixed, both VHDX/VHD are identified as thin provisioned disks, no matter what type of physical disk they reside on (CSV, SAS, SATA, SSD, shared/non shared). This is to allow for UNMAP (RETRIMs in Storage Optimizer speak, which is way of dealing with the TRIM limitations / imperfections, again see Scott Hanselman’s blog for this) command to be sent from the guest to the Hyper-V storage stack below. If it’s a VHD those UNMAP command are basically black holed just like they would never be passed down to a local SATA HHD (on the host) that has no idea what it is and used for.

But wait a minute ….what about SSD and defragmentation you say, my VHDX lives on an SSD.. Well they are for one not identified as SSD or HDD. The hypervisors deals with the storage optimization at the virtual layer. The host OS handles the physical layer as intelligent as it can to optimize the disks as best as it can. How that happens depends on the actual storage beneath in the case of a modern SAN you’ll notice it’s also identified as a Thin provisioned disk. SANs or hyper converged storage arrays provide you with storage that is also virtual with all kinds of features and are often based on tier storage which will be a mix of SSD/SAS/NL-SAS and in some cases even NVMe Flash. So what would an OS have to identify it as? The storage array must play its part in this.

So, if you ever wondered why that is, now you know. Hope you found this interesting!