Introduction

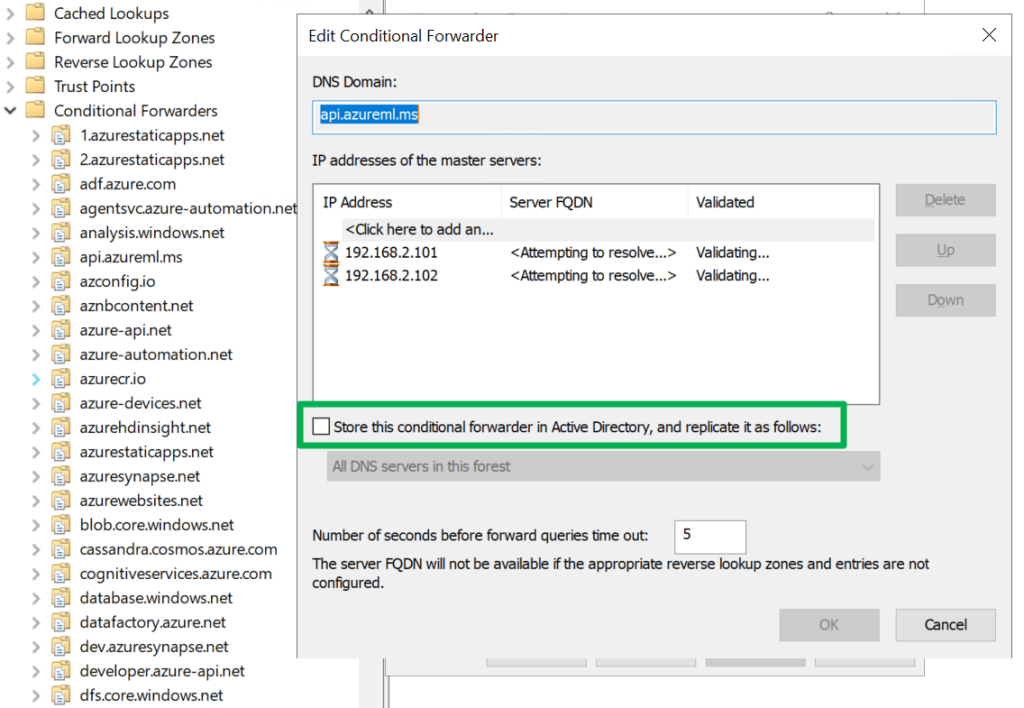

I recently wrote a PowerShell script to maintain Azure Public DNS zone conditional forwarders. If you look at the list, it is quite long. Adding these manually is tedious and error-prone. Sure, you might only need a few, but hey, I think and prepare for the long term.

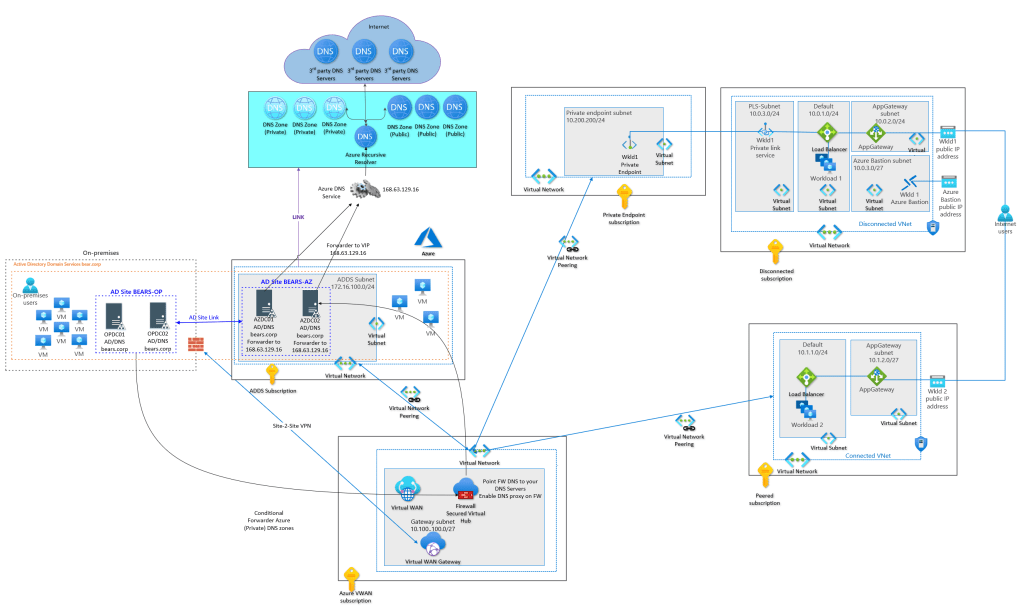

Some background on DNS and private endpoints

When using private endpoints in Azure, correct DNS name resolution is essential. While Azure can do a lot of things for you under the hood, it is important to wrap your head around name resolution in Azure for all your public, private, and custom DNS requirements. In the end, you need a DNS solution that is maintainable and works for current and future use cases. Your peaceful IT existence will fall apart fast without the ability to correctly resolve the fully qualified domain name (FQDN) of a resource to the IP address of its private endpoint.

That in itself is a big subject I will not dive into right now. I will say that hosts files (if applicable) are OK for testing but not a maintainable solution, except for the smallest environments. In Azure, you can link virtual networks to Private DNS zones to resolve DNS queries for private endpoints. As an alternative, you can use your own custom DNS server(s) with a forwarder to Azure’s VIP 168.63.129.16 and, at least on-premises, conditional forwarders.

The latter is a requirement to resolve DNS queries for Azure resources with private endpoints from on-premises, at least until Azure DNS Private Resolver becomes generally available. That will be the way forward if you otherwise have no need for custom DNS servers.

Please note that name resolution for private endpoints uses the public DNS zones. This allows existing Microsoft Azure services with DNS configurations for a public endpoint to keep functioning when accessed from the internet. To connect using your private endpoint, that public configuration must be overridden: Azure intercepts queries that originate from Azure or connected on-premises locations and replies with the private IP address of the private endpoint.

On-premises DNS Servers

Your custom DNS servers in Azure can forward queries for which they are not authoritative to the Azure VIP 168.63.129.16, but on-premises servers cannot reach that IP address. They need to send the DNS queries for private Azure resources to a custom DNS server in Azure via conditional forwarding. The Azure custom DNS server will then forward the query to 168.63.129.16.
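A quick way to check that this forwarding chain works from on-premises is to resolve a name and confirm the answer is a private address. The sketch below is only an illustration and not part of my script: `Test-IsPrivateIPv4` is a hypothetical helper that checks for RFC 1918 ranges, and the storage account name in the usage comment is a placeholder.

```powershell
# Hypothetical helper: returns $true when an IPv4 address is in an RFC 1918 private range.
function Test-IsPrivateIPv4 {
    param(
        [Parameter(Mandatory)]
        [string]$Address
    )

    $ip = $null
    # Anything that does not parse as an IP address is not a private IPv4 address.
    if (-not [System.Net.IPAddress]::TryParse($Address, [ref]$ip)) { return $false }
    if ($ip.AddressFamily -ne [System.Net.Sockets.AddressFamily]::InterNetwork) { return $false }

    $b = $ip.GetAddressBytes()
    return ($b[0] -eq 10) -or
           ($b[0] -eq 172 -and $b[1] -ge 16 -and $b[1] -le 31) -or
           ($b[0] -eq 192 -and $b[1] -eq 168)
}

# Usage from an on-premises machine (placeholder name, replace with your own resource):
# Resolve-DnsName -Name 'mystorageaccount.blob.core.windows.net' -Type A |
#     Where-Object { $_.IPAddress } |
#     ForEach-Object { '{0} private: {1}' -f $_.IPAddress, (Test-IsPrivateIPv4 $_.IPAddress) }
```

If the conditional forwarder is missing or points to the wrong server, you would typically see the public IP address of the service instead of the private endpoint address.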

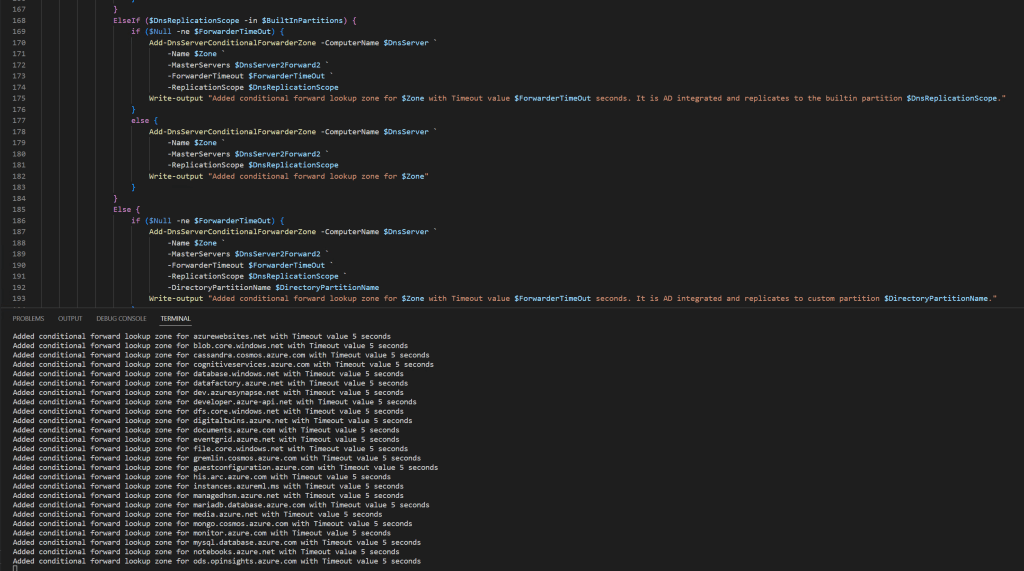

PowerShell script to maintain Azure Public DNS zone conditional forwarders

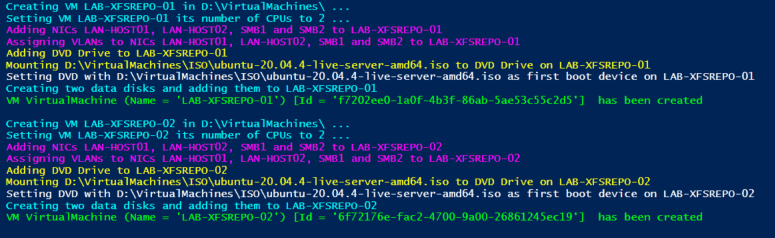

Below you will find the code for the script. I created a CSV file with all the Azure public DNS conditional forwarder zones. Zones with placeholders for regions, partitions, or SQL instances will be generated. For that, you need to provide the correct parameters. If not, these are ignored.

Another attention point is the fact that you can opt to store the zones in Active Directory or not. If so, you can specify which built-in or custom partition to use.
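To give an idea of how such placeholder zones can be generated, here is a minimal sketch. It is not the code from the script: the function name `Expand-ZoneTemplate`, the `{region}` and `{partition}` token names, and the sample templates are assumptions for illustration only. Templates whose placeholders get no value are skipped, which mirrors the "ignored" behavior described above.

```powershell
# Hypothetical helper: expands {token} placeholders in zone name templates.
function Expand-ZoneTemplate {
    param(
        [Parameter(Mandatory)]
        [string[]]$Template,

        # Keys are token names (without braces), values are one or more replacements.
        [hashtable]$Values = @{}
    )

    foreach ($zone in $Template) {
        # Start with the template itself, then fan out once per supplied value.
        $expanded = @($zone)
        foreach ($key in $Values.Keys) {
            $token = '{' + $key + '}'
            $expanded = @(foreach ($item in $expanded) {
                if ($item.Contains($token)) {
                    foreach ($value in $Values[$key]) { $item.Replace($token, $value) }
                }
                else {
                    $item
                }
            })
        }
        # Anything that still contains a placeholder had no value supplied and is skipped.
        $expanded | Where-Object { $_ -notmatch '[{}]' }
    }
}

# Example: only the region token is supplied, so the partition template is ignored.
$templates = 'blob.core.windows.net', '{region}.azmk8s.io', '{partition}.example.invalid'
Expand-ZoneTemplate -Template $templates -Values @{ region = 'westeurope', 'northeurope' }
```

With the values above, the call emits the plain zone unchanged plus one zone per region.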
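As a rough sketch of what that choice looks like with the DnsServer module: to my understanding, `Add-DnsServerConditionalForwarderZone` stores the forwarder in Active Directory when you pass `-ReplicationScope`, and needs `-DirectoryPartitionName` for a custom partition. The helper name `New-ForwarderZoneParameter`, the IP address, and the partition name below are hypothetical. It only builds the parameter set, so you can inspect it before touching a DNS server.

```powershell
# Hypothetical helper: builds a splatting hashtable for Add-DnsServerConditionalForwarderZone.
function New-ForwarderZoneParameter {
    param(
        [Parameter(Mandatory)]
        [string]$ZoneName,

        [Parameter(Mandatory)]
        [string[]]$MasterServers,

        # Leave empty to keep the forwarder out of Active Directory.
        [ValidateSet('Forest', 'Domain', 'Legacy', 'Custom')]
        [string]$ReplicationScope,

        [string]$DirectoryPartitionName
    )

    $params = @{ Name = $ZoneName; MasterServers = $MasterServers }

    if ($ReplicationScope) {
        # Assumption: supplying a replication scope makes the forwarder AD-integrated.
        $params.ReplicationScope = $ReplicationScope
        if ($ReplicationScope -eq 'Custom') {
            if (-not $DirectoryPartitionName) {
                throw 'A custom replication scope needs a directory partition name.'
            }
            $params.DirectoryPartitionName = $DirectoryPartitionName
        }
    }

    return $params
}

# Usage against a test DNS server (placeholder values):
# $p = New-ForwarderZoneParameter -ZoneName 'blob.core.windows.net' -MasterServers '10.0.0.4' -ReplicationScope Forest
# Add-DnsServerConditionalForwarderZone @p -ComputerName 'dns01'
```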

There are examples in the script TestAzurePublicDNSZoneForwardersScript.ps1 on how to use it. You will need at least one playground DNS server or, better, two AD-integrated DC/DNS servers for testing.

You can find the script at WorkingHardInIT/AzurePublicDnsZoneForwarders (github.com)

Conclusion

That’s it. I can extend the PowerShell script to maintain Azure Public DNS zone conditional forwarders with extra options when adding or updating conditional forwarders. Right now, for its current role, it does what I need. I do not plan to add an option to update the “store this conditional forwarder in Active Directory” setting as this has a bug.

See Bug when changing the “store this conditional forwarder in active directory” setting for more info. The gist is that changing the setting causes DNS queries for the conditional forwarder to fail. We avoid that issue by removing and adding the conditional forwarders again. In many (most?) use cases so far, the default setting of not storing the conditional forwarder in Active Directory is what I need, so the script has no option to change that default setting until I might need it.
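The remove-and-add approach can be sketched as follows. This is a simplified illustration, not the code from the script: `Reset-ConditionalForwarder` is a hypothetical name, and the hashtable you pass in is assumed to hold at least `Name` and `MasterServers` (and must not contain `ComputerName`, which is added here).

```powershell
# Hypothetical helper: recreates a conditional forwarder instead of changing it in place.
function Reset-ConditionalForwarder {
    param(
        [Parameter(Mandatory)]
        [string]$ZoneName,

        # Parameters for Add-DnsServerConditionalForwarderZone, without ComputerName.
        [Parameter(Mandatory)]
        [hashtable]$AddParameters,

        [Parameter(Mandatory)]
        [string]$ComputerName
    )

    $existing = Get-DnsServerZone -Name $ZoneName -ComputerName $ComputerName -ErrorAction SilentlyContinue

    # Refuse to touch a zone that is not a conditional forwarder.
    if ($existing -and $existing.ZoneType -ne 'Forwarder') {
        throw "Zone '$ZoneName' exists but is not a conditional forwarder."
    }

    if ($existing) {
        Remove-DnsServerZone -Name $ZoneName -ComputerName $ComputerName -Force
    }

    Add-DnsServerConditionalForwarderZone @AddParameters -ComputerName $ComputerName
}

# Usage against a test DNS server (placeholder values):
# Reset-ConditionalForwarder -ZoneName 'blob.core.windows.net' -ComputerName 'dns01' `
#     -AddParameters @{ Name = 'blob.core.windows.net'; MasterServers = '10.0.0.4' }
```

Keep in mind that the zone does not resolve through the forwarder between the remove and the add, so try this on a playground DNS server first.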