There are 3 type of disk fragmentation you might need to deal with in regards to Hyper-V:

- Fragmentation of the file system on the host LUN where the VMs reside.

- Fragmentation of files system on the LUNs inside of the VM.

- Block fragmentation of the VHDX itself. This is potentially more of an issue with dynamic disks and differencing disks.

We deal with the first type by defragmenting the LUN, which might be a CSV, in which case you can take a look here for more information on this Defragmenting your CSV Windows 2012 R2 Style with Raxco Perfect Disk 13 SP2. For more information on fragmentation in general take a look here What’s New in Defrag for Windows Server 2012/2012R. The second type is business as usual and is similar to the first one except that it’s the file system inside a VM.

For the third type we need to create a new virtual disk using the fragmented one as the source. See Checking and Correcting Virtual Hard Disk Fragmentation. This easily done but it does cause down time unless you leverage storage live migration. So that’s my preferred method, especially as I leverage ODX when I do this, so it’s pretty fast. So always leave yourself some margin on storage to be able to perform maintenance operations. That has always been true and still is.

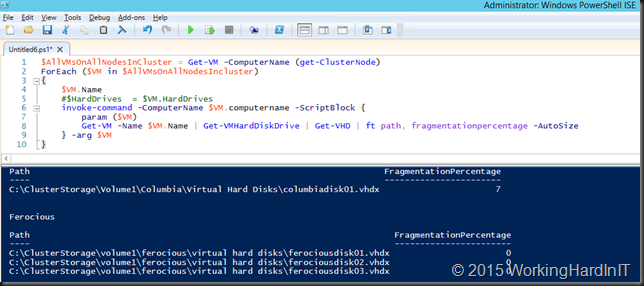

But how do you find out that you have this issue? PowerShell is your friend! Here’s a snippet to show you can check all VMs their vhdx files on a cluster:

$AllVMsOnAllNodesInCluster = Get-VM -ComputerName (get-ClusterNode)

ForEach ($VM in $AllVMsOnAllNodesIncluster)

{

$VM.Name

#$HardDrives = $VM.HardDrives

invoke-command -ComputerName $VM.computername -ScriptBlock {

param ($VM)

Get-VM -Name $VM.Name | Get-VMHardDiskDrive | Get-VHD | ft path, fragmentationpercentage -AutoSize

} -arg $VM

}

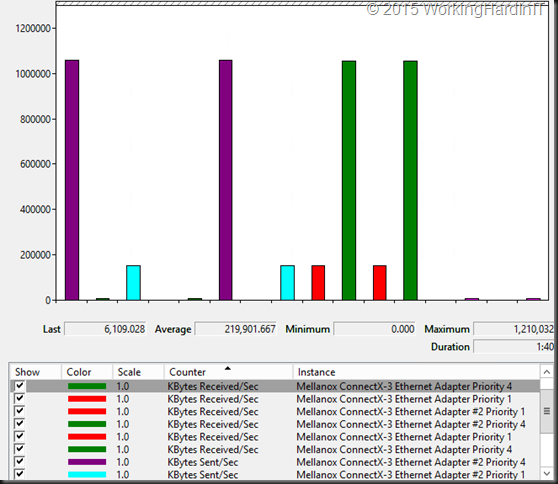

Here’s a screenshot of some output of this snippet

As said the best solution that does not incur down time is to storage (live) migrate the virtual disks affected. We can automate this and put in some logic to do this for all virtual hard disks that are more than X% fragmented. Do take care to also check for disk space or the migration will fail.

Hope this helps some of you!