It’s time to demonstrate ETS in action! There is a quick video on ETS on Vimeo that shows what it looks like.

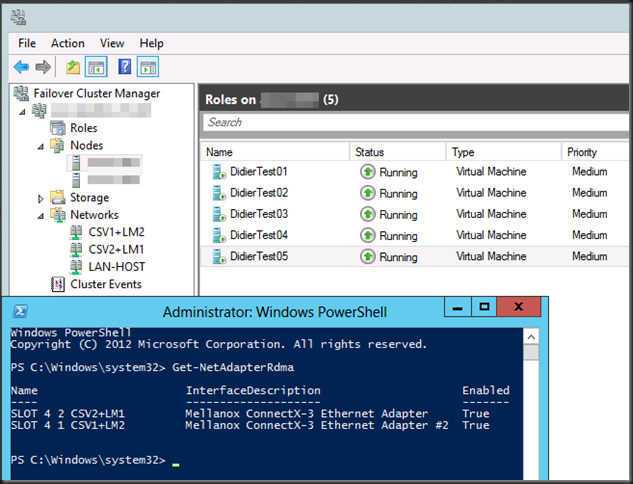

I’m using Mellanox ConnectX-3 Ethernet cards in a 2-node DELL PowerEdge R720 Hyper-V cluster lab. We’ve configured the two ports for SMB Direct and set live migration to leverage them both over SMB Direct. For the purpose of this demo we’ll generate non-RDMA (TCP/IP) traffic over these two RoCE-capable 10Gbps ports to simulate a problematic scenario where all bandwidth is already being used, and to see how Enhanced Transmission Selection (ETS) helps in that scenario. I have done this with DELL Force 10, PowerConnect 8100 and N4000 series switches, or a mix of them. This particular demo used PC8132Fs. I use what’s available to me in the lab at the time of writing.

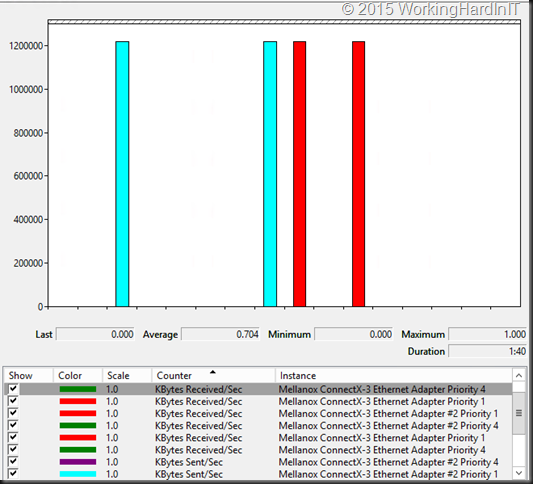

To generate this network load we leverage ntttcp.exe, which produces the non-RDMA TCP/IP traffic. We visualize it using the Mellanox QoS counters. In blue you see the traffic sent from node A, in red the traffic received on node B. Note that this traffic is tagged with priority 1. We tag SMB Direct traffic with priority 4.

You can see that both Mellanox cards are running at full bandwidth, 2 * 10Gbps from node A to node B, and it’s all non-RDMA traffic. Also note that I’m hitting all 16 physical cores (hyper-threading is enabled). By doing so I avoid being bottlenecked by a single core because, in contrast to RDMA traffic, there’s no huge CPU offload going on here.

As these are the cards I have assigned to live migration (and, depending on the setup, also CSV or SOFS traffic) over SMB Direct, the competition for bandwidth will be fierce if we don’t have a mechanism to guide it to a desired outcome. That’s exactly what we leverage DCB with PFC and ETS for.

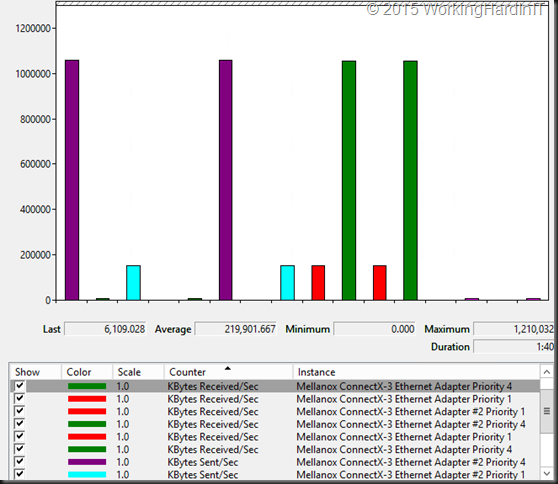

So let’s kick off a live migration of 4 virtual machines with 10GB of memory each. That should take about 20 seconds on 2 * 10Gbps cards. We first live migrate them from node B to node A. That’s the reverse direction of where we are sending TCP/IP traffic. You see 10Gbps being used all over, and this is expected.
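To see where the "about 20 seconds" comes from, here is a rough back-of-the-envelope sketch in Python. The function name and the `efficiency` parameter are my own illustration, not something taken from a tool, and the 0.8 efficiency figure is purely an assumption to account for protocol overhead and memory pages that get dirtied and copied again. It also treats 1GB as 10^9 bytes.

```python
def migration_seconds(vm_count, memory_gb_per_vm, link_gbps, link_count, efficiency=1.0):
    """Rough estimate of the time needed to copy VM memory over the network.

    efficiency is a hypothetical fudge factor (0..1) for overhead and
    re-copied dirty pages; it is an assumption, not a measured value.
    """
    total_gigabits = vm_count * memory_gb_per_vm * 8      # GB -> Gbit
    usable_gbps = link_gbps * link_count * efficiency     # aggregate usable throughput
    return total_gigabits / usable_gbps


# 4 VMs with 10GB each over 2 * 10Gbps ports
ideal = migration_seconds(4, 10, 10, 2)                       # theoretical best case
realistic = migration_seconds(4, 10, 10, 2, efficiency=0.8)   # assumed 80% efficiency

print(f"ideal: {ideal:.0f}s, with assumed overhead: {realistic:.0f}s")
```

The theoretical minimum is 16 seconds, so roughly 20 seconds in practice is a reasonable expectation when the ports are not contended.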

Remember that the network is full duplex. That means a port can send at 10Gbps and receive at 10Gbps at the same time (for example TCP/IP from node A to node B and RDMA from node B to node A, or vice versa). Actually, if the backplane of the switch is powerful enough, you can do so on all ports. So this is normal. Node A is sending TCP/IP traffic to node B at line speed and node B is sending SMB Direct traffic to node A (the live migration) at line speed.

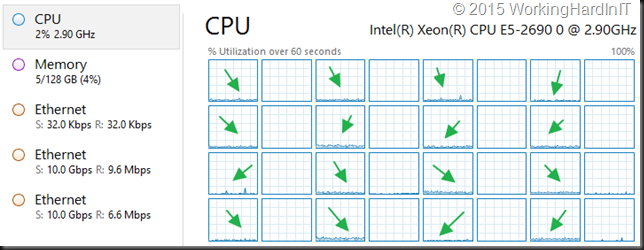

But what if we live migrate over SMB Direct in the same direction as the TCP/IP traffic is going, from node A to node B? Well, have a look. To me this looks awesome.

ETS kicks in immediately. We configured the minimum bandwidth for SMB Direct traffic to be 90%. Whatever is left after that (10%) is given to other traffic, in this demo the TCP/IP traffic we generated. As priority 4 tagged RoCE traffic is also configured to be lossless with PFC, you don’t have to worry about dropping packets under contention. Now think about this and how you can steer your traffic behavior at times when the resources need to be divided amongst competing workloads.
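To make the behavior a bit more tangible, here is a small Python sketch of how a minimum bandwidth guarantee plays out under contention. It is a simplified model of the outcome, not how the NIC or switch actually schedules frames. The function `ets_allocate` and the class names are hypothetical, and I assume every class has a share greater than zero and that unused bandwidth is handed to whoever still wants it.

```python
def ets_allocate(link_gbps, classes):
    """Divide link bandwidth over traffic classes.

    classes maps a name to (min_share_percent, demand_gbps).
    Each class is offered bandwidth in proportion to its share; anything
    a class does not need is redistributed to classes that still have
    unmet demand. Simplified model, assumes all shares are > 0.
    """
    alloc = {name: 0.0 for name in classes}
    active = {n for n, (share, demand) in classes.items() if share > 0 and demand > 0}
    remaining = float(link_gbps)

    while active and remaining > 1e-9:
        total_weight = sum(classes[n][0] for n in active)
        satisfied = set()
        distributed = 0.0
        for n in active:
            share, demand = classes[n]
            offer = remaining * share / total_weight
            grant = min(offer, demand - alloc[n])   # never give more than is asked for
            alloc[n] += grant
            distributed += grant
            if demand - alloc[n] <= 1e-9:
                satisfied.add(n)
        remaining -= distributed
        if not satisfied:
            break                                   # everyone is limited by their share
        active -= satisfied                         # leftovers go to the rest next round
    return alloc


# Only TCP/IP traffic on a 10Gbps port: it can take the whole link.
print(ets_allocate(10, {"smb_direct": (90, 0), "tcp_ip": (10, 10)}))

# Live migration kicks in and both want line speed: 90% / 10% split.
print(ets_allocate(10, {"smb_direct": (90, 10), "tcp_ip": (10, 10)}))
```

The point of the model is that the 90% is a minimum under contention, not a hard cap on the other traffic. When SMB Direct is idle, the TCP/IP traffic is free to use the full port, which is exactly what we saw before the live migration started.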

I hope you now have a better idea of why QoS is useful, how it works, and that it indeed does work. While I have taken the opportunity to demonstrate this with SMB Direct over RoCE, I’d like to stress that QoS is not just about RoCE, where it’s “mandatory” because RoCE requires at least PFC. It’s a much needed tool that’s very beneficial in any converged scenario, and the optional ETS might be a very good idea, depending on your environment.

Again, to give you a better idea, here’s a short, quick video on ETS on Vimeo.