I’m assuming most of you are at least familiar with the concepts of converged networking, SMB Multichannel and SMB Direct. This is not going to be a lesson on these subjects. We’re just setting the stage here for our simple demo configuration and its relation to real-world scenarios. This is to remind you of the why and where of what we’ll demo in our next blog posts on SMB Direct over RoCE with two DCB features: Priority Flow Control (PFC) and Enhanced Transmission Selection (ETS).

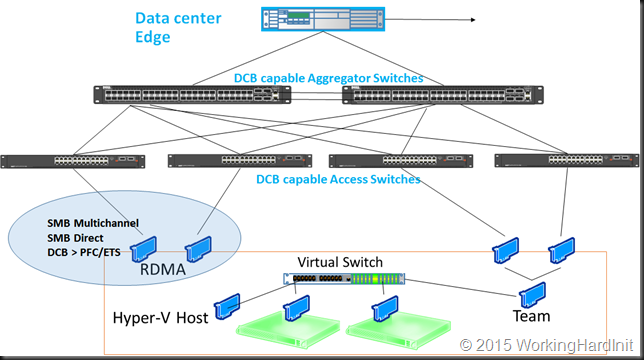

Generalized and simplified, a modern virtualized data center network looks a lot like this:

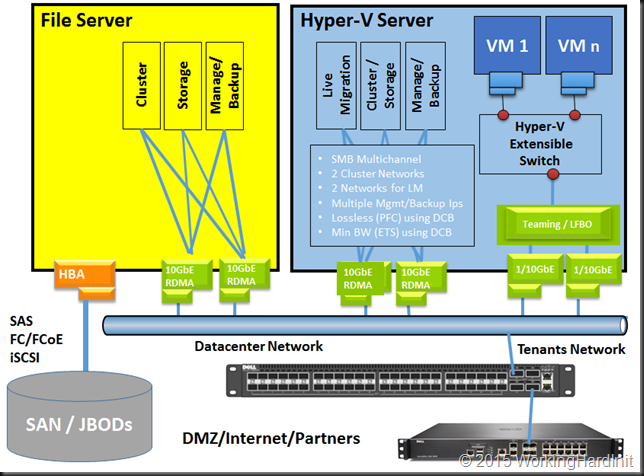

It’s more or less converged, which means all kinds of traffic move over the same infrastructure. That is great for standardization and your budget, unless you run into performance issues. That’s where QoS can help. As we’re doing SMB Direct over RoCE, we’ll use DCB to handle QoS. Mind you, QoS is an aid; it will not help if you try to do too much over too little bandwidth.

Let’s zoom in a bit on the Hyper-V & storage side of things. In general, the RDMA capable variant of a modern SOFS / Hyper-V environment network looks as shown below, in a bit more detail:

The RDMA capable traffic is SMB Direct over RoCE in this use case. This is used for Live Migration, CSV Traffic & storage traffic to the SOFS Server.

DCB cannot distinguish between these SMB traffic use cases. It’s all RDMA traffic over port 445, so the DCB configuration treats it as a single class. That’s why, on top of DCB, we leverage SMB Bandwidth Limit (see https://blog.workinghardinit.work/2013/09/03/preventing-live-migration-over-smb-starving-csv-traffic-in-windows-server-2012-r2-with-set-smbbandwidthlimit/). This prevents the live migration traffic from pushing aside the storage traffic. This is a feature configured in Windows and it does not rely on DCB or other forms of QoS.

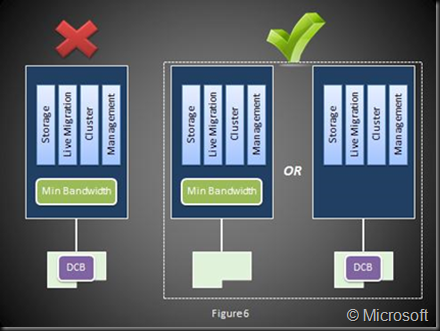

To make sure cluster traffic itself, backups, data copies, management etc. don’t starve each other, we implement QoS leveraging DCB (the ETS part). As we need DCB with RoCE in real-world scenarios to make it lossless (the PFC part), and as you do not mix different QoS approaches on the same network stack, we stick with DCB for the other workloads on that same network stack.

Mind you, this does not prevent scenarios where management and backups are done over vNICs on the Hyper-V switch and where we leverage Hyper-V QoS, as that’s on another network stack.

In our lab demos we’ll keep things simple: we’ll do live migration over SMB Direct (RoCE) and we’ll simulate intense backup traffic over the same pair of NICs. This illustrates a RoCE configuration that guarantees a minimum bandwidth for both and keeps the RDMA traffic lossless (PFC). To make it very clear, we’ll do a demo setup where we use two 10GbE NICs per host, allocate a minimum bandwidth of 90% to live migration and allocate the remaining 10% minimum bandwidth to all other traffic (which includes our intense backup traffic). Read more about the configuration in SMB Direct over RoCE Demo – Hosts & Switches Configuration Example.
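To get a feel for what a 90% / 10% minimum bandwidth split means on a single 10GbE port, here is a rough Python sketch. It is a simplified, idealized model and not how a NIC or switch scheduler is actually implemented (real hardware schedules per frame). The function name `ets_allocate` and the class names are made up for illustration. The sketch assumes ETS behaves as a work-conserving minimum guarantee: each class gets up to its share under contention, and bandwidth a class does not use is available to the others.

```python
def ets_allocate(link_gbps, min_share_pct, demand_gbps):
    """Idealized model of minimum bandwidth shares on one port.

    link_gbps:     port speed in Gbps (10 for a 10GbE NIC)
    min_share_pct: traffic class -> minimum bandwidth share in percent
    demand_gbps:   traffic class -> bandwidth the class wants to send
    Returns traffic class -> bandwidth it gets in this model.
    """
    if sum(min_share_pct.values()) != 100:
        raise ValueError("minimum shares must add up to 100%")
    if any(pct <= 0 for pct in min_share_pct.values()):
        raise ValueError("this sketch assumes every share is positive")

    # Step 1: every class gets its demand, capped at its guaranteed minimum.
    alloc = {
        cls: min(demand_gbps.get(cls, 0.0), link_gbps * pct / 100)
        for cls, pct in min_share_pct.items()
    }

    # Step 2: hand out unused bandwidth to classes that still want more,
    # weighted by their configured shares (work-conserving behavior).
    spare = link_gbps - sum(alloc.values())
    while spare > 1e-9:
        hungry = {
            cls: demand_gbps.get(cls, 0.0) - got
            for cls, got in alloc.items()
            if demand_gbps.get(cls, 0.0) - got > 1e-9
        }
        if not hungry:
            break
        total_weight = sum(min_share_pct[cls] for cls in hungry)
        given = 0.0
        for cls, want in hungry.items():
            extra = min(want, spare * min_share_pct[cls] / total_weight)
            alloc[cls] += extra
            given += extra
        spare -= given
    return alloc


shares = {"live_migration": 90, "other": 10}

# Both classes try to fill the 10GbE port at the same time.
print(ets_allocate(10, shares, {"live_migration": 10, "other": 10}))

# No live migration running: backups can use the whole port.
print(ets_allocate(10, shares, {"live_migration": 0, "other": 10}))
```

Under full contention the model gives live migration 9 Gbps and everything else 1 Gbps. When no live migration is running, the "other" class is not capped at 1 Gbps, because the share is a minimum and not a limit.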
