I’ve written before (see "Key Value Pair Exchange WMI Component Property GuestIntrinsicExchangeItems & Assumptions") on the need to & ways with PowerShell to determine the version of the integration services or integration components running in your guests. These need to be in sync with the one running on the hosts. Meaning that all the hosts in a cluster should be running the same version as well as the guests.

During an upgrade with a service pack this get the necessary attention and scripts (PowerShell) are written to check versions and create reports and normally you end up with a pretty consistent cluster. Over time virtual machines are imported, inherited from another cluster of created on a test/developer host and shipped to production. I know, I know, this isn’t something that should happen, but I don’t always have the luxury of working in a perfect world.

Enough said. This means you might end up with guests that are not running the most recent version of the integration tools. Apart from checking manually in the guest (which is tedious, see my blog "Upgrading a Hyper-V R2 Cluster to Windows 2008 R2 SP1" on how to do this) or running previously mentioned script you can also check the Hyper-V event log.

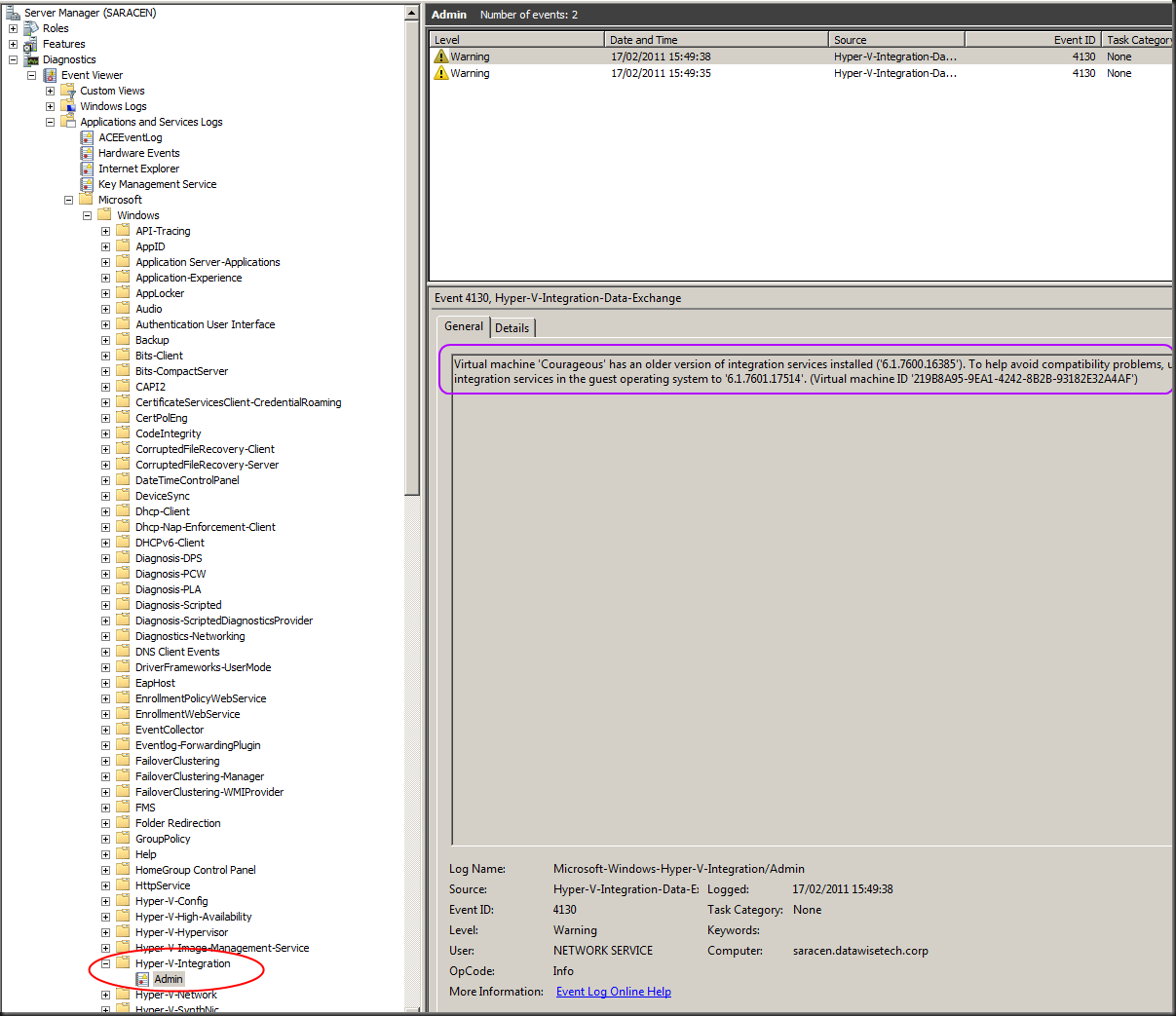

Another way to spot virtual machines that might not have the most recent version of the integration tools is via the Hyper-V logs. In Server Manager you drill down in the “Diagnostics” to, “Event Viewer” and than navigate your way through "Applications and Services Logs", "Microsoft", "Windows" until you hit “Hyper-V-Integration”

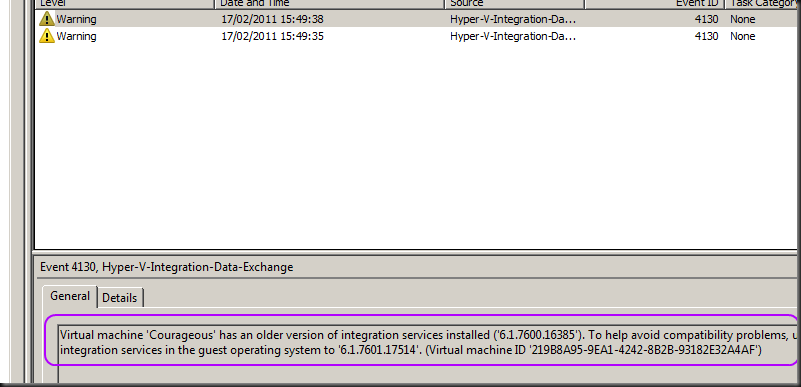

Take a closer look and you’ll see the warning about 2 guests having an older version of the integration tools installed.

As you can see it records a warning for every virtual machine whose integration services are older than the host running Hyper-V. This makes it easy to grab a list of guest needing some attention. The down side is that you need to check all hosts, not to bad for a small cluster but not very efficient on the larger ones.

So just remember this as another way to spot virtual machines that might not have the most recent version of the integration tools. It’s not a replacement for some cool PowerShell scripting or the BPA tools, but it is a handy quick way to check the version for all the guests on a host when you’re in a hurry.

It might be nice if integration services version management becomes easier in the future. Meaning a built-in way to report on the versions in the guests and an easier way to deploy these automatically if there not part of a service pack (this is the case when the guest OS and the host OS differ or when you can’t install the SP in the guest for some application compatibility reason). You can do this in bulk using SCVMM and of cause Scripting this with PowerShell comes to the rescue here again, especially when dealing with hundreds of virtual machines in multiple large clusters. Orchestration via System Center Orchestrator can also be used. Integration with WSUS would be another nice option, for those that don’t have Configuration Manager or Orchestrator but that’s not supported as far as I know for now.