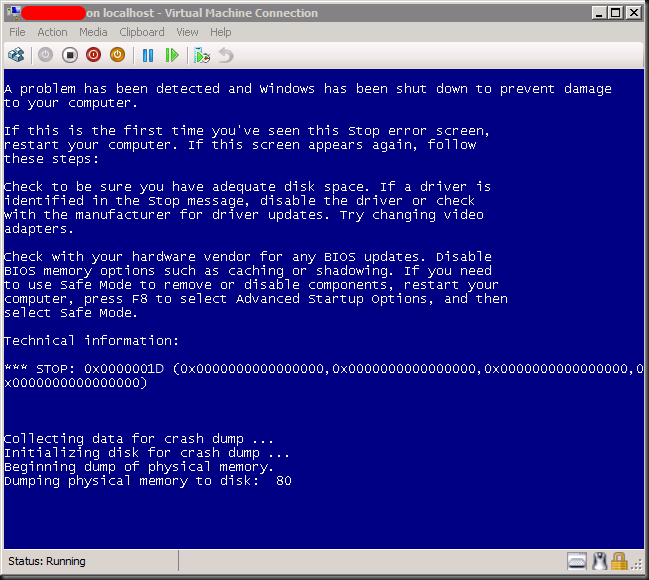

The BSOD

I helped hunt down this bug and tested the private fix. Some months ago, during the summer of 2011, I was putting some new Hyper-V clusters under stress tests. You know, letting them work very hard for a longer period of time to see if anything falls off or goes “boink”. It all looked pretty robust and, after some tweaking, also very fast. Just when you’re about to declare “we’re all set”, you see a BSOD on one of the guests that’s being live migrated, happily announcing: “DRIVER_IRQL_NOT_LESS_OR_EQUAL STOP: 0x000000D1 (0x0000000000000000, 0x0000000000000000, 0x0000000000000000, 0x0000000000000000)”

Now that doesn’t make ME very happy, however. So I investigate to see if there are any more VMs dropping dead during live migration, but we don’t see any. Known issues, like out-of-date versions of the integration tools, are not in play, nor are any other possible suspects.

We throw the MEMORY.DMP file in the debugger and we come up with the following culprit:

DRIVER_IRQL_NOT_LESS_OR_EQUAL (d1)

The driver probably at fault is storvsc.sys

Probably caused by : storvsc.sys ( storvsc!StorChannelVmbusCallback+2b8 )

Hmmmmmmm, we start searching the internet but we don’t find much. We also throw it on to Twitter to see if the community comes up with something. Meanwhile we keep looking and find this little blog post by a Microsoft support engineer Rob Scheepens:

We pinged Rob and opened a case with MS support. That evening Hans Vredevoort (www.hyper-v.nu), who saw my tweet, mails me with the details of a fellow MVP in the USA having this same issue. We get in contact and, via both Microsoft & the Hyper-V community, we start hunting the cause of this bug. The progress on this issue can be read at the Microsoft blog above. You’ll notice that the fix is in the works now.

Hunting down the STOP error

What did we establish:

- It only happens occasionally with a live migration and it is rather ad hoc: not every time, not after X amount of live migrations or X amount of up time.

- It sometimes seems to happen only with guests running dynamically expanding VHDs attached to SCSI controllers in Hyper-V. But that’s not really the case, as I remember one occurrence with a fixed VHD attached to a SCSI controller. In our case, the VMs with which we could reproduce the issue in a reasonable time were all SQL Server test and development guests running SQL Server 2008, where the dynamically expanding disks are used as “poor man’s thin provisioning”.

- I have not heard of this on Windows 2008 hosts, only R2, but I have not tested this.

So it’s reproducible, but it takes intensive live migration activity. Meanwhile we received private instrumentation to install on both guests & hosts to collect “enriched” memory dumps when a guest experiences a BSOD. With PowerShell we have continuous live migration running to reproduce the issue. The fact that we can live migrate over 10Gbps does help, because you can get lucky, but in reality it takes many hundreds of live migrations to reproduce it. On some machines, many thousands. No joke: in total we did 8000 live migrations to test the fix and about 12000 to reproduce the issue on several VMs with different configurations to send memory dumps to MSFT. So yes, you really need some PowerShell, and having a 10Gbps live migration network also helps.
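For readers who want a feel for what such a continuous live migration run looks like, here is a minimal sketch of the loop logic in Python. It is not the PowerShell script we used. The `migrate` and `guest_is_healthy` callables are hypothetical placeholders for whatever cluster tooling triggers a live migration and checks on the guest, and the VM and node names are made up.

```python
from typing import Callable, Sequence


def ping_pong_migrations(
    vm: str,
    nodes: Sequence[str],
    migrate: Callable[[str, str], None],
    guest_is_healthy: Callable[[str], bool],
    max_migrations: int,
) -> int:
    """Move `vm` round-robin across `nodes` until the guest fails or the cap is hit.

    Returns the number of migrations performed before stopping.
    Assumes the VM starts out on nodes[0].
    """
    if len(nodes) < 2:
        raise ValueError("need at least two cluster nodes to migrate between")

    completed = 0
    while completed < max_migrations:
        # Pick the next node in the ring as the migration target.
        target = nodes[(completed + 1) % len(nodes)]
        migrate(vm, target)  # hypothetical hook: trigger one live migration
        completed += 1
        if not guest_is_healthy(vm):
            # Guest crashed: stop here so the memory dump can be collected.
            break
    return completed


if __name__ == "__main__":
    # Dry run with fakes: the pretend guest "crashes" on the fifth migration.
    moves = []
    count = ping_pong_migrations(
        "sql-test-01",
        ["node-a", "node-b"],
        migrate=lambda vm, node: moves.append(node),
        guest_is_healthy=lambda vm: len(moves) < 5,
        max_migrations=1000,
    )
    print(count, moves)
```

Stopping at the first unhealthy guest matters more than raw throughput here: the whole point of the run is to capture a dump from the failing migration.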

All the collected MEMORY.DMP files from these live migration exercises were uploaded to Microsoft for analysis. That took a while, also because they had a boatload of live migrations to do and I don’t know if their test lab has 10 Gbps.

On Tuesday the 25th of October Microsoft contacts us with good news. They have root-caused the problem and a hotfix is in the works. You can download that here http://support.microsoft.com/kb/2636573

On Thursday 27th of October we get access to a private fix and after installing this one we’ve been running thousands of live migrations without seeing the issue.
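How much do thousands of clean live migrations actually tell you? A rough back-of-the-envelope sketch, assuming (and this is my simplification, not something Microsoft stated) that every live migration fails independently with the same small probability. In reality the odds depend on disk activity, so treat the numbers as an order of magnitude only. The function names are just illustrative.

```python
import math


def migrations_needed(p_fail: float, confidence: float = 0.95) -> int:
    """Migrations required to see at least one failure with the given confidence.

    Solves 1 - (1 - p_fail)**n >= confidence for the smallest integer n.
    """
    if not 0.0 < p_fail < 1.0 or not 0.0 < confidence < 1.0:
        raise ValueError("p_fail and confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_fail))


def failure_rate_upper_bound(clean_runs: int, confidence: float = 0.95) -> float:
    """Upper bound on the per-migration failure probability after
    `clean_runs` migrations with zero failures.

    Solves (1 - p)**clean_runs = 1 - confidence for p.
    """
    if clean_runs <= 0 or not 0.0 < confidence < 1.0:
        raise ValueError("clean_runs must be positive, confidence between 0 and 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / clean_runs)


if __name__ == "__main__":
    # Hypothetical failure rate of 1 in 1000 migrations.
    print(migrations_needed(0.001))
    # The 8000 clean migrations we ran against the fix.
    print(failure_rate_upper_bound(8000))
```

For small failure rates the second function comes out close to the well-known “rule of three”, so 8000 clean runs bound the remaining failure rate at roughly 3 in 8000 with 95% confidence, under the independence assumption above.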

The public release of this hotfix is currently planned (HTP11-12) under KB2636573.

The details for the curious

Root Cause?

The root cause can be summarized as follows: “StorVSP was modifying guest memory while the VM’s virtual motherboard was being powered off.” As a result, storvsc accesses a NULL pointer in a memory buffer that has already been freed. The result of this is a BSOD or STOP error in the virtual machine.

Only SCSI attached VHDs

OK, but why do we only see this with SCSI attached VHDs? The issue happens during power down of the virtual machine’s motherboard because there is a disk enumeration during the shutdown phase. And this enumeration only happens with SCSI disks.

Right! So the more VHDs we had attached to SCSI controllers in a virtual machine the higher the likelihood of this happening.

Why so much more likely with dynamically expanding VHDs

But still we saw this far more often with dynamically expanding disks. Why is that? Well, it’s not that dynamically expanding disks trigger disk enumeration more than fixed disks. However, it seems that any disk expansion, which causes write delays, can lead to a timing issue that will cause the disk enumeration to hit the issue described above. So this significantly increases the risk that the STOP error will happen, and it explains why the chance of this happening with fixed VHDs attached to SCSI controllers is significantly lower. This is in sync with what we saw. The virtual machines with a lot of dynamic disks attached to SCSI controllers that had a lot of activity (and thus potential for expanding) were the ones where we could reproduce this the fastest.

Conclusion

It can take some time to hunt down certain bugs, especially the rare ones that only happen every now and then, so occurrences are few and far between. But when you put in some effort, Microsoft helps out and works on a fix. And no, you don’t have to have the most expensive support contract for that to happen. As a matter of fact, this call was logged as a free support call under the TechNet Plus subscription. And as it was a bug, they returned it as unused.