Introduction

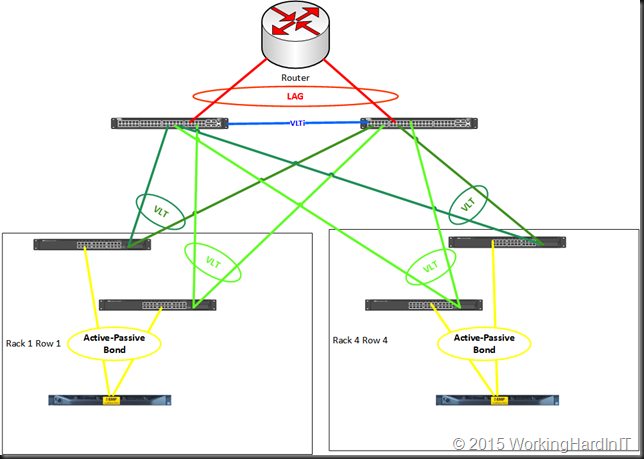

I encountered the Kemp LoadMaster template import issue "Command serverinit needs 1 parameter(s)" during a migration project I was involved in recently. The job was to migrate the virtual services of an aging Kemp LoadMaster HA solution (LM-2200, 32-bit) to newer and more capable versions (running 7.2.43, 64-bit). Even more important, the goal was to migrate to a version that is still in support. The LM-2200 series is, like many older ones, End of Life (EOL). Kemp still delivers important fixes for critical security issues, as it did this year (7.1.35.5 and 7.1.35.6), but that is all the long term support (LTS) covers. This is not bad, as these have been supported for a very long time, but the aging 32-bit hardware has reached the point where replacing it is the only right thing to do.

With Kemp we can select new hardware, virtual machines or appliances in the cloud. So on-premises, hybrid and public cloud needs can be taken care of with the same familiar load balancer / Application Delivery Controller (ADC).

One technique to migrate Kemp workloads is to export the virtual services to templates and use these to configure the new solution. It is during that process that we ran into an error: Command serverinit needs 1 parameter(s).

Kemp LoadMaster template import issue: Command serverinit needs 1 parameter(s)

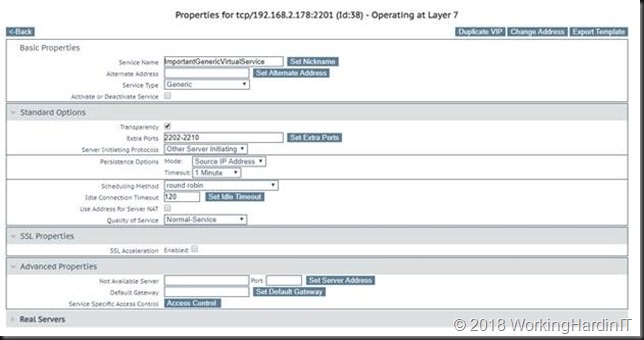

One of the applications is a mission critical one that has many different virtual services: FTP/FTPS/HTTP/HTTPS and also a bunch of TCP/UDP connectivity over ports that are very application/industry specific. That makes a dozen virtual services, all on the same IP address with different ports and configuration needs.

We exported all of them to templates, which we then used to recreate them on the replacement ADC. While doing so we used a different IP address for these newly created virtual services for testing purposes. This test IP address is changed to the original production IP when we are ready to make the move, after disabling the old virtual services. That also allows for a quick exit / roll back if needed.

Creating a new virtual service from a template still requires a little work, such as defining a gateway, adding SSL certificates and adding real servers, but the bulk of the work is done for you.

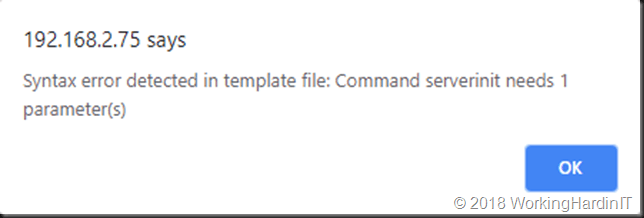

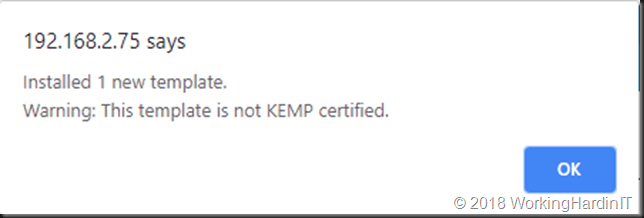

But with certain templates we got an error: Syntax error detected in template file: Command serverinit needs 1 parameter(s)

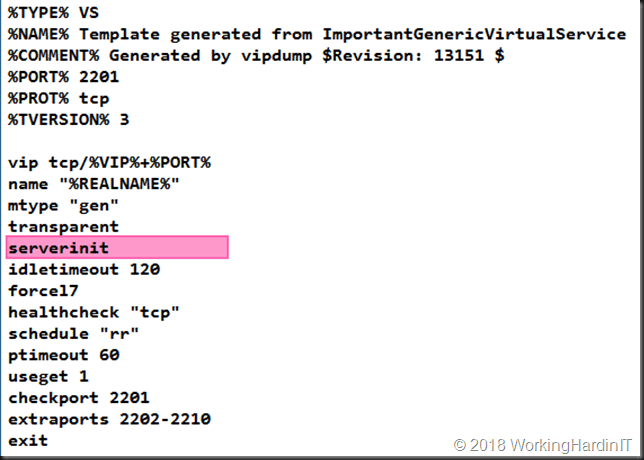

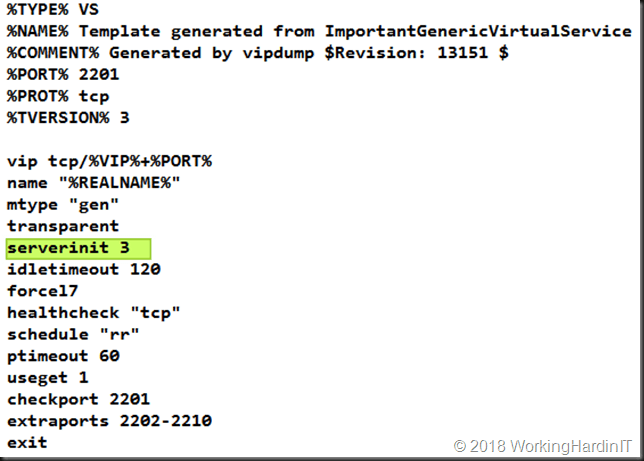

When looking at the template in a text editor we see the following:

Clearly, “serverinit” does not have a parameter set. So it probably needs one, to specify which type of Server Initiating Protocol needs to be set for our generic service.
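With a dozen exported templates, checking each one by eye gets tedious. Below is a minimal sketch that reports the lines where the command has no parameter. It assumes the template is plain text with one command per line and that the broken command appears as a bare `serverinit` on its own line. The function name and the sample text are hypothetical and only there for illustration; they are not real Kemp template content.

```python
import re

# Assumption: one command per line, and the broken line is just
# "serverinit" with optional surrounding spaces or tabs.
BARE_SERVERINIT = re.compile(r"^[ \t]*serverinit[ \t]*$")


def find_bare_serverinit(template_text: str) -> list[int]:
    """Return the 1-based line numbers where serverinit has no parameter."""
    return [
        number
        for number, line in enumerate(template_text.splitlines(), start=1)
        if BARE_SERVERINIT.match(line)
    ]


if __name__ == "__main__":
    # Hypothetical sample, not an actual exported template.
    sample = "some-command 1\nserverinit\nanother-command 0\n"
    for number in find_bare_serverinit(sample):
        print(f"line {number}: serverinit has no parameter")
```

A template that returns an empty list should not trigger this particular syntax error on import.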

Finding a solution

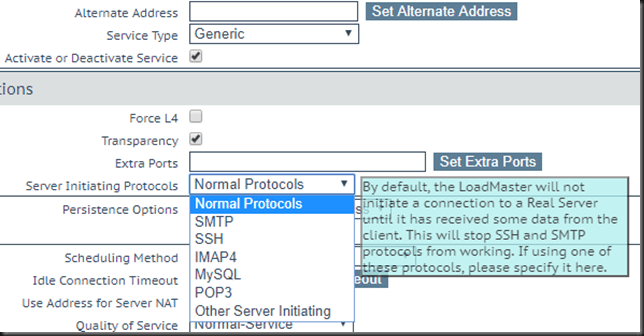

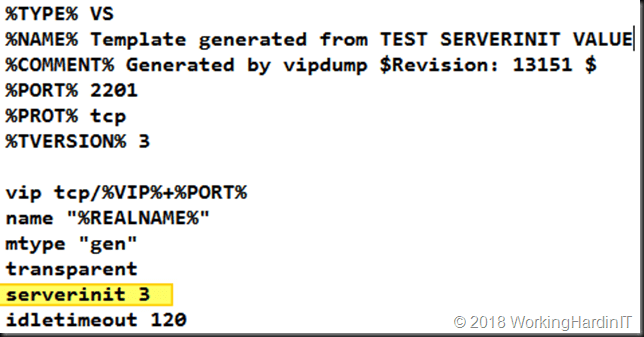

We need to find out what parameter goes with our setting and add that to the template. That value is easy enough to find via educated trial and error. On a test virtual service on the current version ADC we set the value for the Server Initiating Protocols to our value (other server initiating) and then export this virtual service to a template. We look at the template and note the number. That way we map which server initiating protocol corresponds to which value. In our case other server initiating is “3”.

We edit our exported templates to set the parameter value we found this way and try to import them again.
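We made this edit by hand in a text editor, but with many templates it can be scripted. The sketch below is one way to do it, under the same assumptions as before: the template is plain text, one command per line, and the broken command is a bare `serverinit`. The default value 3 is the one we found for other server initiating; use the value you mapped for your own setting. The function name and the file names in the usage note are hypothetical.

```python
def add_serverinit_value(template_text: str, value: int = 3) -> str:
    """Append a parameter to every bare serverinit line.

    Lines that already carry a parameter are left untouched, and the
    original line endings are preserved.
    """
    patched = []
    for line in template_text.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        ending = line[len(body):]
        # Assumption: a broken line consists of the command name only.
        if body.strip() == "serverinit":
            body = f"{body.rstrip()} {value}"
        patched.append(body + ending)
    return "".join(patched)
```

To use it, read the exported file, patch the text and write the result to a new file, for example `Path("patched.tmpl").write_text(add_serverinit_value(Path("exported.tmpl").read_text()))` with `from pathlib import Path`. Keeping the original export untouched makes it easy to compare the two before importing.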

That’s all that we needed to do to get these exported templates to be imported with no further issues.

All that’s left to do is to finish configuring the virtual service and continue our migration.

Conclusion

Don’t panic. Many problems you encounter have a solution, workaround or fix. Maybe this will help someone out there. Take care and until next time.