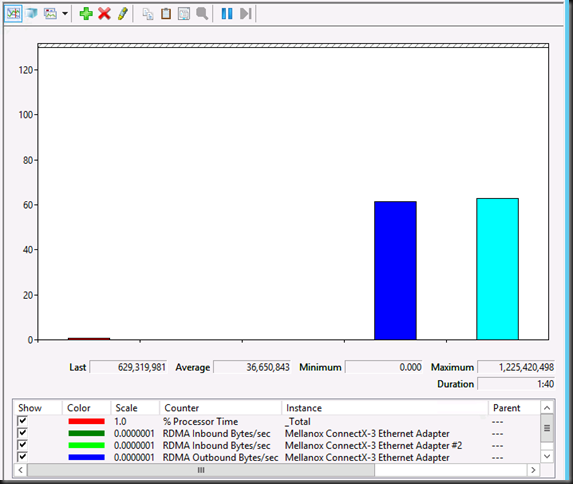

One of the big changes in Windows Server 2012 R2 is that all types of Live Migration can now leverage SMB 3.0 if the right conditions are met. That means that Multichannel & SMB Direct (RDMA) come into play more often and simultaneously. Shared Nothing Live Migration & certain forms of Storage Live Migration are, by their nature, usually planned, so one can mitigate the risk by planning. Good old standard Live Migration of virtual machines, however, is often less planned. It can be triggered by Cluster Aware Updating, by evacuating a host for hardware maintenance, or by Dynamic Optimization, which means it’s often automated as well. As we have demonstrated many times, Live Migration can (easily) fill 20Gbps of bandwidth. If you are sharing 2*10Gbps NICs for multiple purposes like CSV, LM, etc., Quality of Service (QoS) comes into play. There are many ways to achieve this, but in our example here I’ll be using DCB for SMB Direct with RoCE.
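As a minimal sketch of those "right conditions", assuming a Windows Server 2012 R2 Hyper-V host with the Hyper-V module loaded and RDMA-capable NICs, the following tells Hyper-V to use SMB as the Live Migration transport and then checks what SMB has to work with. Nothing here is specific to my lab; it only reads or sets host-wide settings.

```powershell
# Use SMB as the Live Migration transport (the other options are TCPIP and Compression).
Set-VMHost -VirtualMachineMigrationPerformanceOption SMB

# Confirm that Live Migration is enabled and which transport is configured.
Get-VMHost | Select-Object VirtualMachineMigrationEnabled, VirtualMachineMigrationPerformanceOption

# List the NICs that have RDMA enabled; SMB Direct can only use these.
Get-NetAdapterRdma | Where-Object { $_.Enabled }

# While SMB traffic is flowing, show the Multichannel connections in use.
Get-SmbMultichannelConnection
```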

New-NetQosPolicy "CSV" -NetDirectPortMatchCondition 445 -PriorityValue8021Action 4

Enable-NetQosFlowControl -Priority 4

New-NetQosTrafficClass "CSV" -Priority 4 -Algorithm ETS -BandwidthPercentage 40

Enable-NetAdapterQos -InterfaceAlias "SLOT41-CSV1+LM2"

Enable-NetAdapterQos -InterfaceAlias "SLOT42-LM1+CSV2"

Set-NetQosDcbxSetting -Willing $false
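In the spirit of "Trust but verify", the DCB settings above can be read back with the matching Get cmdlets. This is only a sketch; the adapter names are the ones from my example, so substitute your own.

```powershell
# The policy that tags SMB Direct (NetDirect port 445) traffic with priority 4.
Get-NetQosPolicy

# Priority Flow Control state per priority; priority 4 should show as enabled.
Get-NetQosFlowControl

# The ETS traffic class and its bandwidth percentage.
Get-NetQosTrafficClass

# DCB as actually applied on the two RDMA NICs (names taken from the example above).
Get-NetAdapterQos -Name "SLOT41-CSV1+LM2", "SLOT42-LM1+CSV2"

# Confirm the host no longer accepts DCB settings pushed from the switch.
Get-NetQosDcbxSetting
```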

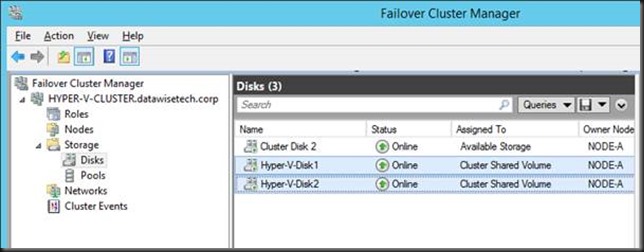

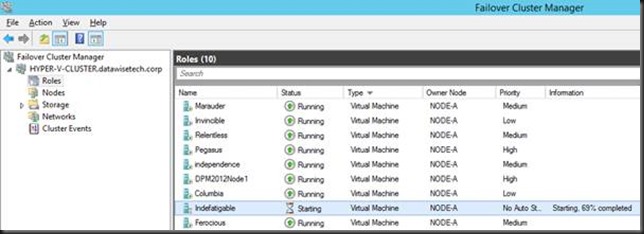

Now, as you can see, I leverage 2*10Gbps NICs, non-teamed because I want RDMA. I have failover/redundancy/bandwidth aggregation thanks to SMB 3.0. This works like a charm. But when leveraging Live Migration over SMB in Windows Server 2012 R2, we note that the LM traffic also goes over port 445 and as such is dealt with by the same QoS policy on the server & in the switches (DCB/PFC/ETS). So when both CSV & LM are going on, how does one prevent LM from starving CSV traffic, for example? Especially in Scale-Out File Server scenarios this could be a real issue.

The Solution

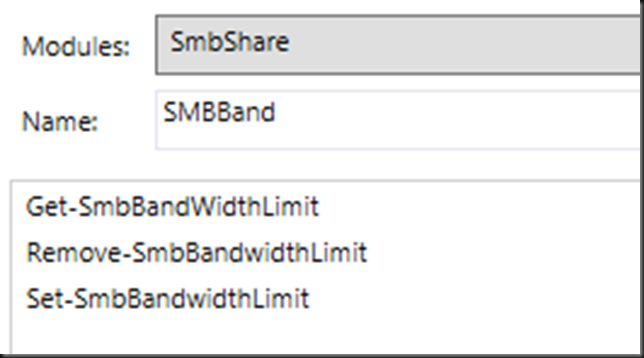

To prevent LM & CSV traffic from hogging all the SMB bandwidth and ruining the SOFS party, Microsoft introduced some new capabilities in Windows Server 2012 R2. In the SMBShare module you’ll find:

- Set-SmbBandwidthLimit

- Get-SmbBandwidthLimit

- Remove-SmbBandwidthLimit

To use these cmdlets you’ll need to install the feature called SMB Bandwidth Limit, either via Server Manager or using PowerShell: Add-WindowsFeature FS-SMBBW

You can limit SMB bandwidth for three categories: VirtualMachine (storage IO to a SOFS), LiveMigration & Default (all the rest). In the example below we cap Live Migration at roughly 8Gbps.

Set-SmbBandwidthLimit -Category LiveMigration -BytesPerSecond 1000MB
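Put together, the whole cycle might look like the sketch below, assuming an elevated PowerShell session on Windows Server 2012 R2. Install-WindowsFeature is the full name of the cmdlet; Add-WindowsFeature is its alias.

```powershell
# Install the SMB Bandwidth Limit feature only if it is not there yet.
if (-not (Get-WindowsFeature -Name FS-SMBBW).Installed) {
    Install-WindowsFeature -Name FS-SMBBW
}

# Cap Live Migration traffic over SMB (same value as in the example above).
Set-SmbBandwidthLimit -Category LiveMigration -BytesPerSecond 1000MB

# Show the limits currently in effect, one entry per category that has a limit.
Get-SmbBandwidthLimit

# Remove the cap again when it is no longer wanted.
Remove-SmbBandwidthLimit -Category LiveMigration
```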

So there you go, we can prevent Live Migration from hogging all the bandwidth. Jose Barreto mentions this capability in his recent blog post on Windows Server 2012 R2 Storage: Step-by-step with Storage Spaces, SMB Scale-Out and Shared VHDX (Virtual). But what about Fibre Channel or iSCSI environments? It might not be as much of a killer there as in a SOFS scenario, but still. As it turns out, Set-SmbBandwidthLimit also works in those scenarios. I was put on the wrong track by thinking it was only for SOFS scenarios, but my fellow MVP Carsten Rachfahl kindly reminded me of my own mantra “Trust but verify” and, as a result, I can confirm it even works to cap Live Migration traffic over SMB that leverages RDMA (RoCE). So don’t let the PowerShell module name (SMBShare) fool you, it’s about all SMB traffic within the categories.

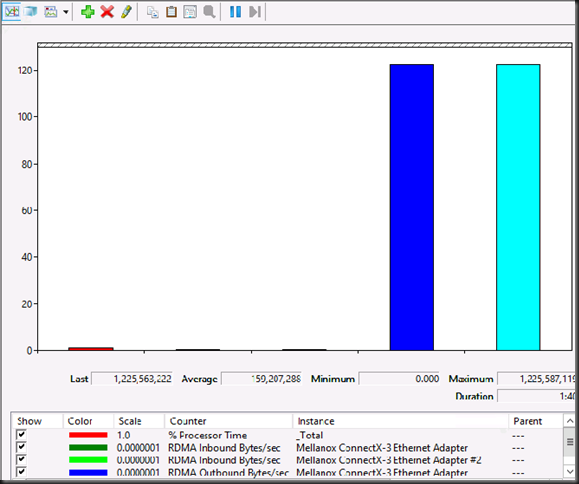

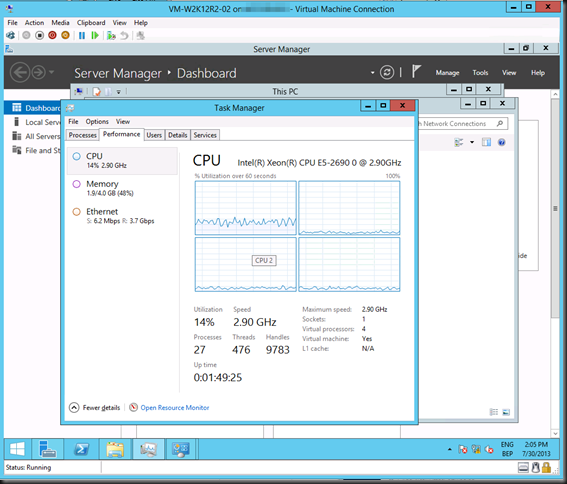

So without a limit, LM can use all the bandwidth (2*10Gbps).

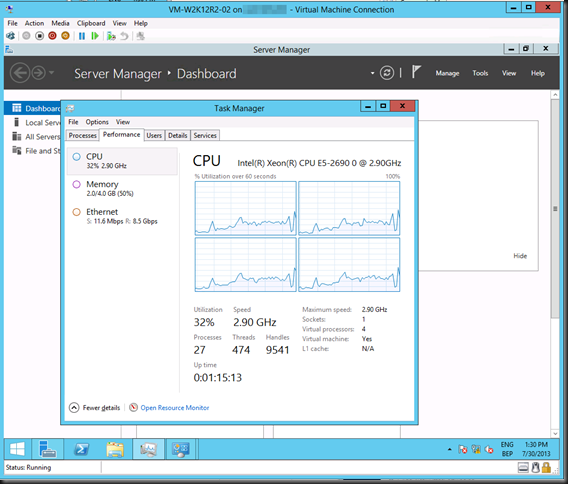

With Set-SmbBandwidthLimit -Category LiveMigration -BytesPerSecond 1250MB you can see we max out at 10Gbps (2*5Gbps).
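A note on the units: PowerShell's MB suffix is binary (1MB = 1,048,576 bytes), while link speeds are quoted in decimal gigabits, so 1250MB is slightly more than 10Gbps. The helper below is a hypothetical function of my own (it is not part of the SMBShare module) that converts a decimal Gbps figure into the bytes-per-second value the cmdlet expects.

```powershell
# Hypothetical helper: convert decimal gigabits per second to bytes per second.
function ConvertTo-BytesPerSecond {
    param([Parameter(Mandatory)][double]$Gbps)
    # 1 Gbps = 1e9 bits per second, and there are 8 bits in a byte.
    [uint64]($Gbps * 1e9 / 8)
}

ConvertTo-BytesPerSecond -Gbps 10   # 1250000000 bytes/s, exactly 10Gbps
1250MB                              # 1310720000 bytes/s, about 10.49Gbps

# Cap Live Migration at exactly 10Gbps using the helper.
Set-SmbBandwidthLimit -Category LiveMigration -BytesPerSecond (ConvertTo-BytesPerSecond -Gbps 10)
```

For a cap of this size the difference is about 5%, which is why the graph still reads as 10Gbps.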

Some Remarks

I’d love to see a minimum bandwidth implementation of this (one that could include a safety buffer for spikes in CSV traffic with SOFS). The hard cap might lead to some wasted bandwidth. In other scenarios you could still get into trouble. What if you have 2*10Gbps available, you capped Live Migration traffic at 16Gbps, and one of those NICs dies on you? With one NIC gone, you’re potentially in trouble until the NIC has been replaced. OK, this is not a daily occurrence & depending on your environment & setup this is more or less of a potential issue.
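One possible workaround for the dead NIC case, and it is only a sketch of an idea rather than anything Microsoft provides, is to recompute the cap from the NICs that are actually up. The 50% fraction and the NIC names are assumptions for illustration, and you would have to run this yourself, for example from a scheduled task.

```powershell
# Assumptions: these are the NIC names from my example; 0.5 is an arbitrary policy choice.
$nicNames   = "SLOT41-CSV1+LM2", "SLOT42-LM1+CSV2"
$lmFraction = 0.5

# Add up the link speed (in bits per second) of the NICs that are currently up.
$upNics        = @(Get-NetAdapter -Name $nicNames | Where-Object { $_.Status -eq "Up" })
$bitsPerSecond = ($upNics | Measure-Object -Property Speed -Sum).Sum

if ($bitsPerSecond -gt 0) {
    # Convert the allowed share from bits per second to bytes per second.
    $cap = [uint64]($bitsPerSecond * $lmFraction / 8)
    Set-SmbBandwidthLimit -Category LiveMigration -BytesPerSecond $cap
}
```

With both 10Gbps NICs up this caps Live Migration at 10Gbps; with one NIC down the next run lowers the cap to 5Gbps, so the remaining traffic keeps some headroom.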