Introduction

When you’re using Dell Compellent (SC Series) storage, you might be leveraging the Dell SC Series MPIO Registry Settings script that Dell provides to apply the recommended settings. It’s a nice little script that you can test, verify and adapt to integrate into your own setup scripts. You can find it in the *Dell EMC SC Series Storage and Microsoft Multipath I/O* document.

Dell SC Series MPIO Registry Settings script

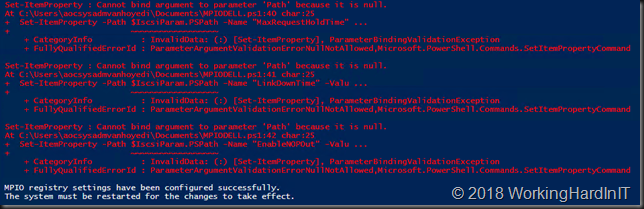

Recently I was working with a new deployment (7.2.40), testing and verifying it in a lab environment. The lab cluster nodes had a lot of NICs and FC HBAs to test all kinds of possible scenarios: Microsoft Windows clusters, S2D, Hyper-V, FC, iSCSI, etc. The script detected the iSCSI service but did not update any of the iSCSI settings. Instead, it threw errors.

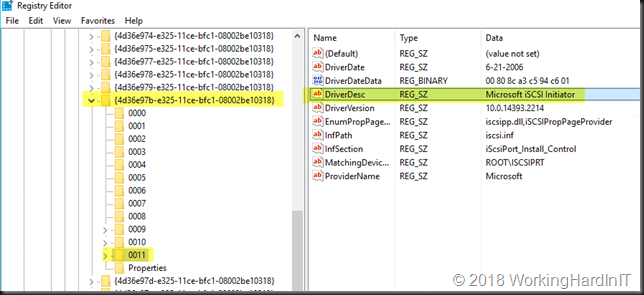

After checking the registry myself, it was clear that the Microsoft iSCSI Initiator entries the script looks for were there, but the script did not pick them up.
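If you want to do that check yourself, a read-only sketch like this one lists every subkey of the device class key the script searches (the GUID `{4d36e97b-e325-11ce-bfc1-08002be10318}` is the SCSI adapter, or storage controller, class, which is why a node full of FC HBAs pushes the iSCSI initiator to a higher number) together with its `DriverDesc` value. It assumes an elevated PowerShell session on the node; errors are silenced because the non-numbered `Properties` subkey may deny read access. The `$Adapters` variable name is just for illustration.

```powershell
# Read-only: show which numbered subkey holds which driver, including the
# "Microsoft iSCSI Initiator". Nothing is written to the registry.
$ClassKey = 'HKLM:\SYSTEM\CurrentControlSet\Control\Class\{4d36e97b-e325-11ce-bfc1-08002be10318}'

$Adapters = Get-ChildItem -Path $ClassKey -ErrorAction SilentlyContinue |
    ForEach-Object {
        [pscustomobject]@{
            Subkey     = $_.PSChildName
            DriverDesc = (Get-ItemProperty -Path $_.PSPath -ErrorAction SilentlyContinue).DriverDesc
        }
    }

$Adapters | Format-Table -AutoSize
```

If the Microsoft iSCSI Initiator shows up in a subkey such as 0010 or higher, the original `000*` pattern will not find it.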

Looking over the script, the issue quickly became clear. The variable `$IscsiRegPath = "HKLM:\SYSTEM\CurrentControlSet\Control\Class\{4d36e97b-e325-11ce-bfc1-08002be10318}\000*"` has three leading zeros in a subkey name that is only four characters long, so the wildcard only matches 0000 through 0009. This means that if the Microsoft iSCSI Initiator entry lives in subkey 0009 it gets picked up, but not when it lives in, for example, 0011.

So I changed that to only two leading zeros. This assumes you won’t exceed 0099, which is a safer assumption, but you could argue it should even be a single leading zero, as 0999 is an even safer assumption.

```powershell
$IscsiRegPath = "HKLM:\SYSTEM\CurrentControlSet\Control\Class\{4d36e97b-e325-11ce-bfc1-08002be10318}\00*"
```
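To see the difference between the patterns without touching the registry, this small sketch runs PowerShell’s `-like` wildcard operator over a list of made-up subkey names (the names are hypothetical, chosen to mimic a node with many adapters). It also shows a regular expression, `^\d{4}$`, as one way to match any four-digit subkey without a leading-zero assumption; that variant is my illustration, not part of Dell’s script.

```powershell
# Hypothetical subkey names under the device class key. "Properties" is the
# non-numbered subkey that none of the patterns should pick up.
$Subkeys = @('0000', '0001', '0009', '0010', '0011', '0099', '0100', 'Properties')

$ThreeZeros = $Subkeys | Where-Object { $_ -like '000*' }      # original pattern
$TwoZeros   = $Subkeys | Where-Object { $_ -like '00*' }       # adapted pattern
$OneZero    = $Subkeys | Where-Object { $_ -like '0*' }        # "even safer" variant
$AnyNumber  = $Subkeys | Where-Object { $_ -match '^\d{4}$' }  # any four-digit subkey

"000*   : $($ThreeZeros -join ', ')"   # 000*   : 0000, 0001, 0009
"00*    : $($TwoZeros -join ', ')"     # 00*    : 0000, 0001, 0009, 0010, 0011, 0099
"0*     : $($OneZero -join ', ')"      # 0*     : 0000, 0001, 0009, 0010, 0011, 0099, 0100
"^\d{4}$: $($AnyNumber -join ', ')"    # ^\d{4}$: 0000, 0001, 0009, 0010, 0011, 0099, 0100
```

With `000*`, subkeys 0010 and 0011 are silently skipped, which matches the behavior seen in the lab.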

I’m sharing the snippet with my adaptation here in case you want it. As always, I assume no responsibility for what you do with the script or the outcomes in your environment. Big boy rules apply.

```powershell
# MPIO Registry Settings script
# This script will apply recommended Dell Storage registry settings
# on Windows Server 2008 R2 or newer
#
# THIS CODE IS MADE AVAILABLE AS IS, WITHOUT WARRANTY OF ANY KIND.
# THE ENTIRE RISK OF THE USE OR THE RESULTS FROM THE USE OF THIS CODE
# REMAINS WITH THE USER.

# Assign variables
$MpioRegPath = "HKLM:\SYSTEM\CurrentControlSet\Services\mpio\Parameters"
$IscsiRegPath = "HKLM:\SYSTEM\CurrentControlSet\Control\Class\"
# DIDIER adaptation: 2 leading zeros instead of 3, as 0010 and 0011 would not be
# found otherwise. This assumes you won't exceed 0099, which is a safer
# assumption, but you could argue that this should even be only one leading
# zero, as 0999 is an even safer assumption.
$IscsiRegPath += "{4d36e97b-e325-11ce-bfc1-08002be10318}\00*"

# General settings
Set-ItemProperty -Path $MpioRegPath -Name "PDORemovePeriod" -Value 120
Set-ItemProperty -Path $MpioRegPath -Name "PathRecoveryInterval" -Value 25
Set-ItemProperty -Path $MpioRegPath -Name "UseCustomPathRecoveryInterval" -Value 1
Set-ItemProperty -Path $MpioRegPath -Name "PathVerifyEnabled" -Value 1

# Apply OS-specific general settings
$OsVersion = ( Get-WmiObject -Class Win32_OperatingSystem ).Caption
If ( $OsVersion -match "Windows Server 2008 R2" )
{
    New-ItemProperty -Path $MpioRegPath -Name "DiskPathCheckEnabled" -Value 1 -PropertyType DWORD -Force
    New-ItemProperty -Path $MpioRegPath -Name "DiskPathCheckInterval" -Value 25 -PropertyType DWORD -Force
}
Else
{
    Set-ItemProperty -Path $MpioRegPath -Name "DiskPathCheckInterval" -Value 25
}

# iSCSI settings
If ( ( Get-Service -Name "MSiSCSI" ).Status -eq "Running" )
{
    # Get the registry path for the Microsoft iSCSI initiator parameters
    $IscsiParam = Get-Item -Path $IscsiRegPath |
        Where-Object { ( Get-ItemProperty $_.PSPath ).DriverDesc -eq "Microsoft iSCSI Initiator" } |
        Get-ChildItem |
        Where-Object { $_.PSChildName -eq "Parameters" }

    # Set the Microsoft iSCSI initiator parameters
    Set-ItemProperty -Path $IscsiParam.PSPath -Name "MaxRequestHoldTime" -Value 90
    Set-ItemProperty -Path $IscsiParam.PSPath -Name "LinkDownTime" -Value 35
    Set-ItemProperty -Path $IscsiParam.PSPath -Name "EnableNOPOut" -Value 1
}
Else
{
    Write-Host "iSCSI Service is not running."
    Write-Host "iSCSI registry settings have NOT been configured."
}

Write-Host "MPIO registry settings have been configured successfully."
Write-Host "The system must be restarted for the changes to take effect."
```
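Any leading-zero pattern is still an assumption, and if nothing matches, `$IscsiParam` is `$null`, so `Set-ItemProperty -Path $IscsiParam.PSPath` fails on an empty path. As an alternative (neither Dell’s script nor my adaptation, just a sketch), the iSCSI part could look up the key by `DriverDesc` across all four-digit subkeys and refuse to write unless exactly one initiator `Parameters` key is found. Both function names, `Get-IscsiInitiatorParamKey` and `Set-IscsiInitiatorTimeouts`, are hypothetical, and the values are the ones from the script above.

```powershell
# HYPOTHETICAL helper, not part of Dell's script: return the "Parameters" key(s)
# of the Microsoft iSCSI Initiator, whatever the number of its subkey.
# Only four-digit subkeys are inspected, so "Properties" is skipped.
function Get-IscsiInitiatorParamKey {
    param(
        [string] $ClassKeyPath = 'HKLM:\SYSTEM\CurrentControlSet\Control\Class\{4d36e97b-e325-11ce-bfc1-08002be10318}'
    )
    Get-ChildItem -Path $ClassKeyPath -ErrorAction SilentlyContinue |
        Where-Object { $_.PSChildName -match '^\d{4}$' } |
        Where-Object { (Get-ItemProperty -Path $_.PSPath -ErrorAction SilentlyContinue).DriverDesc -eq 'Microsoft iSCSI Initiator' } |
        Get-ChildItem -ErrorAction SilentlyContinue |
        Where-Object { $_.PSChildName -eq 'Parameters' }
}

# HYPOTHETICAL helper: apply the iSCSI values only when exactly one key is found,
# then read them back so you can verify what was written.
function Set-IscsiInitiatorTimeouts {
    $IscsiParam = @(Get-IscsiInitiatorParamKey)
    if ($IscsiParam.Count -ne 1) {
        Write-Warning "Found $($IscsiParam.Count) Microsoft iSCSI Initiator Parameters keys; iSCSI registry settings have NOT been configured."
        return
    }
    $ParamPath = $IscsiParam[0].PSPath
    Set-ItemProperty -Path $ParamPath -Name 'MaxRequestHoldTime' -Value 90
    Set-ItemProperty -Path $ParamPath -Name 'LinkDownTime' -Value 35
    Set-ItemProperty -Path $ParamPath -Name 'EnableNOPOut' -Value 1
    Get-ItemProperty -Path $ParamPath | Select-Object MaxRequestHoldTime, LinkDownTime, EnableNOPOut
}
```

Defining the functions changes nothing on its own. On a node where the MSiSCSI service is running, you would call `Set-IscsiInitiatorTimeouts` from an elevated session in place of the iSCSI block, after testing it in the lab first.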