Introduction

As I mentioned in an introduction post Thinking About Windows 8 Server & Hyper-V 3.0 Network Performance there will be a lot of options and design decisions to be made in the networking area, especially with Hyper-V 3.0. When we’ll be discussing DVMQ (see DMVQ In Windows 8 Hyper-V), SR-IOV in Windows 8 (or VMQ/VMDq in Windows 2008 R2) and other network features with their benefits, drawbacks and requirements it helps to know what Receive Side Scaling (RSS) is. Chances are you know it better than the other mentioned optimizations. After all it’s been around longer than VMQ or SR-IOV and it’s beneficial to other workloads than virtualization. So even if you’re a “hardware only for my servers” die hard kind of person you can already be familiar with it. Perhaps you even "dislike” it because when the Scalable Networking Pack came out for Windows 2003 it wasn’t such a trouble free & happy experience. This was due to incompatibilities with a lot of the NIC drivers and it wasn’t fixed very fast. This means the internet is loaded with posts on how to disable RSS & the offload settings on which it depends. This was done to get stability or performance back for application servers like Exchange and others applications or services.

The Case for RSS

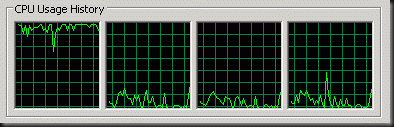

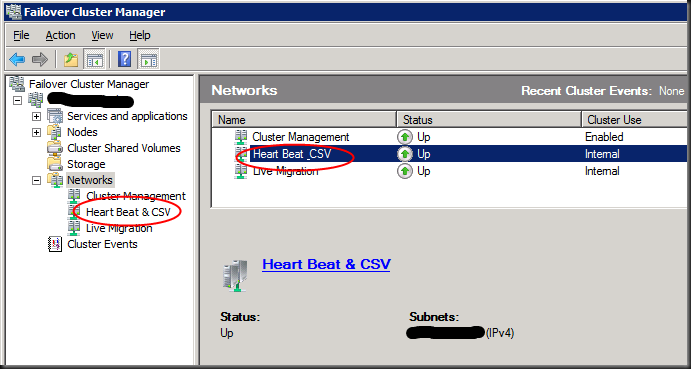

But since Windows 2008 these days are over. RSS is a great technology that gets you a lot better usage of out of your network bandwidth and your server. Not using RSS means that you’ll buy extra servers to handle the same workload. That wastes both energy and money. So how does RSS achieve this? Well without RSS all the interrupt from a NIC go to the same CPU/Core in multicore processors (Core 0). In Task Manager that looks not unlike the picture below:

Now for a while the increase in CPU power kept the negative effects at bay for a lot of us in the 1Gbps era. But now, with 10Gbps becoming more common every day, that’s no longer the case. That core will become the bottle neck as that poor logical CPU will be running at 100%, processing as much network interrupts in can handle, while the other logical CPU only have to deal with the other workloads. You might never see more than 3.5Gbps of bandwidth being used if you don’t use RSS. The CPU core just can’t keep up. When you use RSS the processing of those interrupts is distributed across al cores.

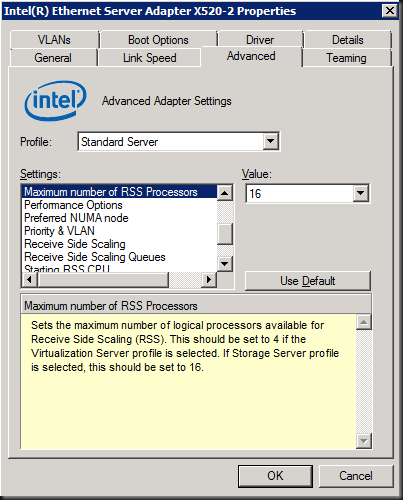

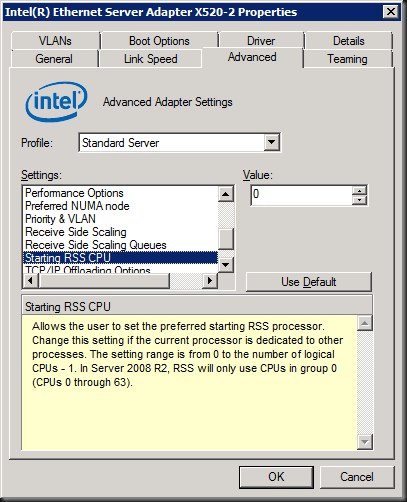

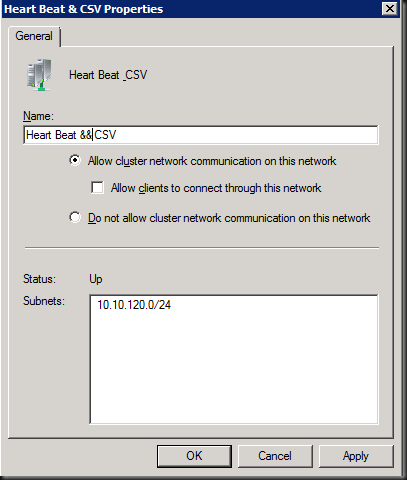

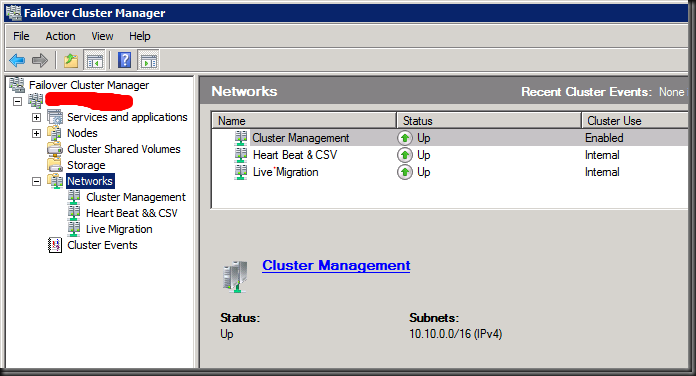

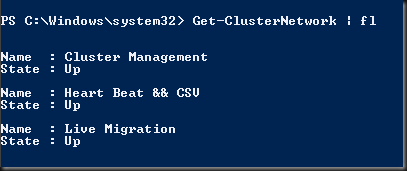

With Windows 2008 and Windows 2008 R2 and Windows 8 RSS is enabled by default in the operating system. Your NIC needs to support it and in that case you’ll be able to disable or enable it. Often you’ll get some advanced features (illustrated below) with the better NICs on the market. You’ll be able to set the base processor, the number of processors to use, the number of queues etc. That way you can divide the cores up amongst multiple NICs and/or tie NICs to specific cores.

So you can get fancy if needed and tweak the settings if needed for multi NIC systems. You can experiment with the best setting for your needs, follow the vendors defaults (Intel for example has different workload profiles for their NICs) or read up on what particular applications require for best performance.

Information On How To Make It Work

For more information on tweaking RSS you take a look at the following document http://msdn.microsoft.com/en-us/windows/hardware/gg463392. It holds a lot more information than just RSS in various scenarios so it’s a useful document for more than just this.

Another good guide is the "Networking Deployment Guide: Deploying High-Speed Networking Features". Those docs are about Windows 2008 R2 but they do have good information on RSS.

If you notice that RSS is correctly configured but it doesn’t seem to work for you it’ might be time to check up on the other adaptor offloads like TCP Checksum Offload, Large Send Offload etc. These also get turned of a lot when trouble shooting performance or reliability issues but RSS depends on them to work. If turned off, this could be the reason RSS is not working for you..