Introduction

Since Azure File Sync is now out in public preview I can chat a bit about why I am interested in it. My interest is big enough for me to have tested it in private previews even when managers weren’t really interested to do so yet. It was too early for them and it certainly didn’t help that, in many scenarios, it involves “hardware” involved in some way or another. In cloud first world that’s bad by default even if that’s only a hypervisor host and storage.

But part of my value is providing solutions that serve the clear and present needs of the business today and tomorrow. All this without burdening them with a mortgage on their future capabilities to address the needs they’ll have then. In that aspect of my role I can and have to disagree with managers every now and then. Call it a perk or a burden, but I do tell them what they need to hear, not what they want to hear. So yes, I was and I am testing Azure File Sync. You have to remember that what’s too early in technology today, is tomorrows old news. To paraphrase Ferris Bueller, technology moves pretty fast. If you don’t stop and look around once in a while, you could miss it.

If you need to get up to speed on Azure File Sync fast, take a look at https://azure.microsoft.com/nl-nl/resources/videos/azure-friday-hybrid-storage-with-azure-file-sync-langhout/.

I’m not going to discuss all of the current or announced capabilities of Azure File Sync here. There is plenty of info out there and the Program Managers are very responsive and passionate when it comes to answering you detailed questions. There’s plenty of more info in the Microsoft Ignite 2017 Sessions: https://myignite.microsoft.com/sessions/54963 and https://myignite.microsoft.com/sessions/54931.

What drove me to Testing Azure File Sync

I was in general unhappy with the StorSimple offering and other 3rd party offerings. There, I’ve said it. It was too much of a hit or miss, point solution and some of them are really odd ones out in an otherwise streamlined IT environment. I’ll keep it at that. While there are still other players in the market we have another concern. That is that at the cloud side of things we needed integration and capabilities a 3rd party just wouldn’t have the leverage for to achieve with Microsoft. The reality is that unless you are talking about cloud in combination with huge amounts of money you will not get their attention. It’s business, not personal. Let’s look at the major reasons for me to investigate Azure File Sync.

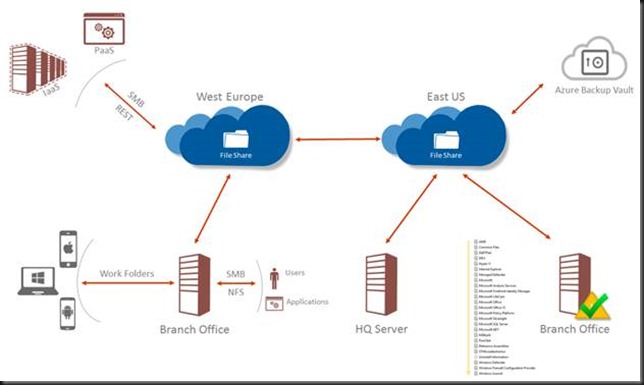

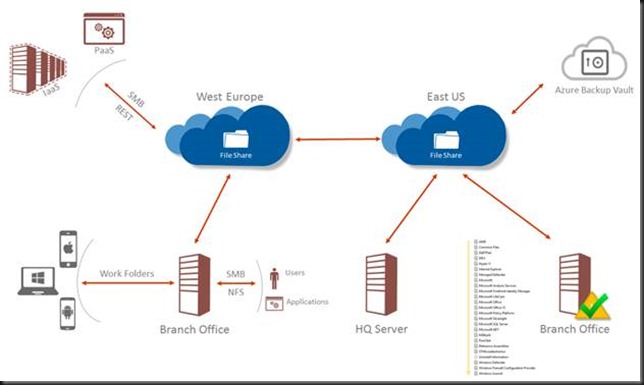

The need to access data from different locations in different ways

First of all, there is the need to access data from different locations in different ways. That is both on-premises and from Azure. In the cloud we access file share via REST APIs and that works fine for well controlled and secured application environments and services written in and for the modern workplace.

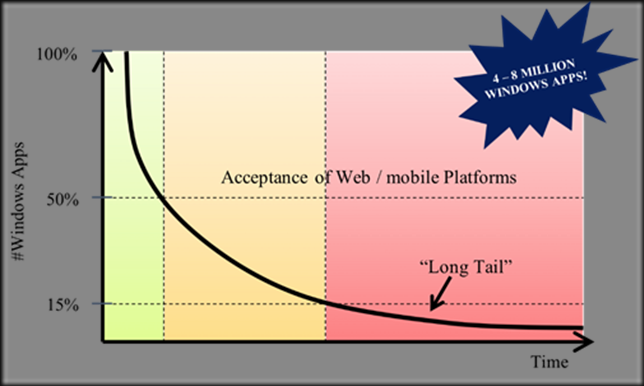

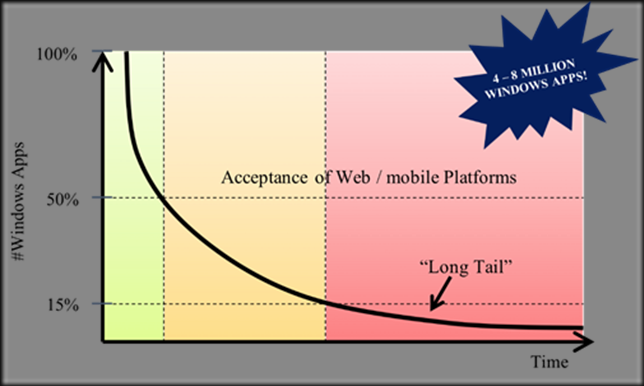

When we start involving human beings that falls apart. The security model with roles and responsibilities doesn’t map well into an access token you paste into a mapped drive of an Azure file share. Then there is the humongous amount of applications that cannot speak REST. The most often can speak NFTS and SMB which is ruled by ACLs. They are children of a client/server world and as such, when designed and written well, they shine on premises in a well-connected low latency world. Yes, that very long tail of classical applications …

Windows Applications and their long, long tail by TeamRGE.com as frequently found in RDS and Citrix VDI articles.

In cloud based RDS solutions and IAAS we also use SMB and NFTS/ACL to consume data, do don’t assume everything in the cloud is pure REST and claims based security.

I won’t even go into the need to have ACL on Azure File share, the options and need for extending AD to Azure over a VPN, ADFS, ADFS Proxies, Azure AD Pass-through, Azure AD Sync, … the whole nine yards to cover all kinds of scenarios. Sure, I hear you, AD will go away, it doesn’t matter anymore. On a long enough time scale such statements are always true. But looking at Office 365 success I’m not howling with the crowd e-mail is totally obsolete yet either. Long tails …

Anyway, when you need to consume the same data from different locations in different ways it often leads to partial or complete copies of data that might change in different places and needs to be protected in different place. That’s a lot of overhead to deal with and consistency can become a serious issue. But with this we have touched on our second driver.

Backups, data protection & recovery

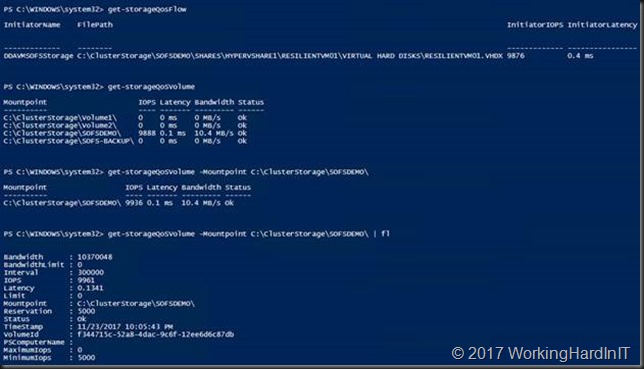

Yup, good old backups. You know the thing too many of the mediocre IT managers out there is nothing to it anymore as they solved that problem over a decade ago. But time doesn’t stand still. Plain and simple. I sometimes deal with largish file server clusters leveraging SMB3 (and ODX when available). The LUNs for those files shares are generally anything between 8 TB and 20 TB totaling 100TB and upwards per cluster. There are dozens of LUNs on any cluster with tens of thousands of folders and hundred million of files. DFS namespace helps us keep a unified namespace for the consumers. Since Windows Server 2012, that’s ages ago, we have the technology to make this a breeze. SMB 3 with continuous availability and leveraging SMB3/ODX wherever we can and makes sense. Thank you, Microsoft!

One of the selling points for Azure File Sync is dealing with storage capacity issues. That’s the least of my worries actually. We have never struggled with a lack of storage on file servers. For crying out loud, we solved that problem well over a decade ago. Yes, even on premises. So that’s not our main motivation here.

What is still not covered well enough for us is backups. That number of files, not just the data volume, is a challenge. Not just the backup target storage for it and the infrastructure. Basically, that can be built performant enough and reasonably cost effective. The big challenge with those numbers is the time it takes to deal with backups.

We have had to revert to application consistent storage snapshots and replicas of those on campus and between cities to make help deal with the fact that “traditional backup” doesn’t really scale well to those volumes. That’s great but when data is valuable the 3-2-1 rule is set in stone. So, you need more. A lot more actually perhaps as today you might want to supplement it with extra rules to include air gapping and encryption. Got ransomware anyone?

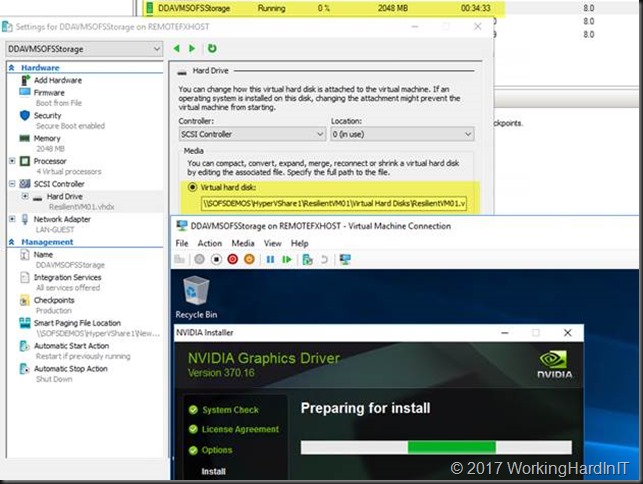

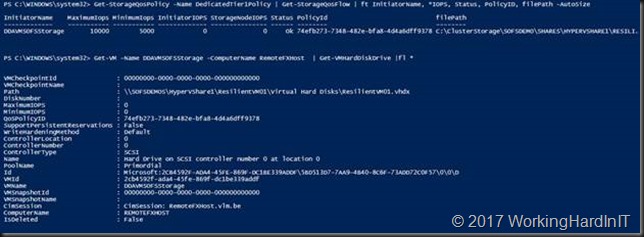

Traditional backups with agent on the physical host or in the guest are not cutting it. When you virtualize, you can leverage on host based backups of entire VMs or split it up in host bases backups of the OS, the data virtual disks etc. It’s a lot more effective to backup number of 5/15/25 TB sized VHDX files than it is to so with in guest or in server backups. Even more so when you combine this with modern change block tracking and synthetic full backups and health check/repair capabilities in backup products.

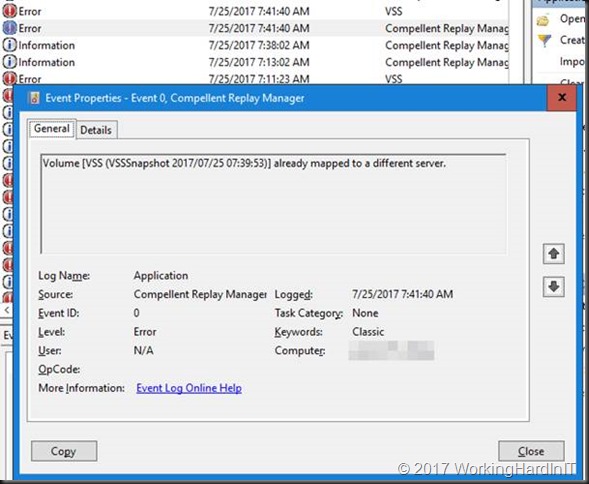

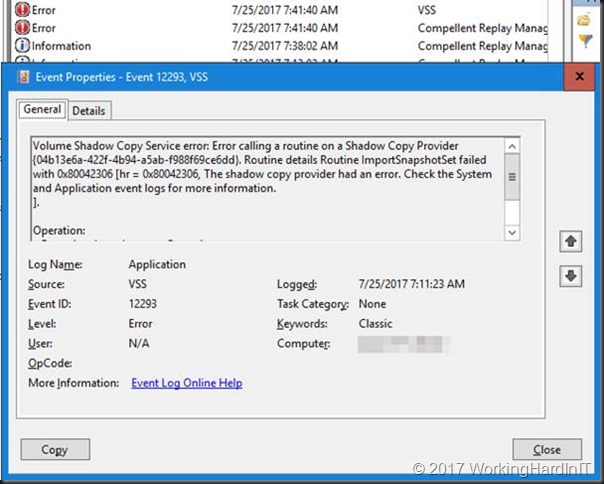

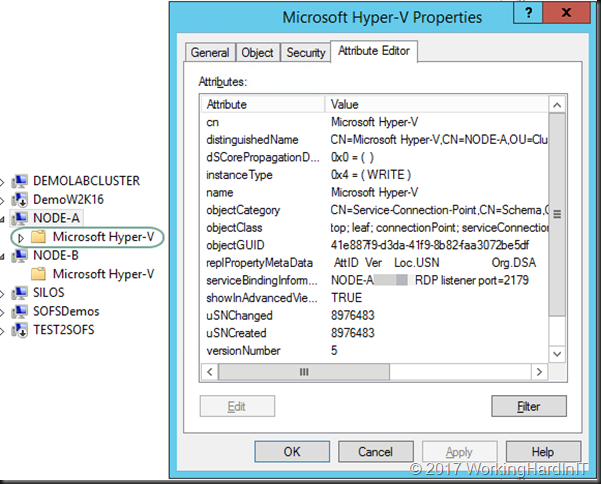

The challenge was that with true virtualized clusters, meaning shared VHDX, the backup story wasn’t a 1st class citizen. Which made people either not do it or use iSCSI, vFC to the guest and deal with the backups as if it were physical machines, losing any benefits associated with host based backups of VMs. Things have gotten better with Windows Server 2016 so we had two paths to investigate to deal with these challenges.

There are 2 approaches to data protection we are investigating

There are 2 approaches to data protection we are investigating and solutions might be a combination of them.

Virtualize it all and then backup the VM. In other words, leverage the power of host level backups with virtual machines. Now things have become a whole lot better for guest cluster backups with Windows Server 2016 but we are not quite there yet in order to call them 1st class citizens. The other concern is that the growth in data will still beat any progress we can make in speed and reliability with backups. Another concern is that people don’t want to deal with storage and are looking for storage as a service. The easiest and smartest way to achieve this is to look a public cloud offerings that delivers what’s needed and not take on the burden of managing 3rd parties to deliver this.

The first problem might be covered by Veeam Backup & Replication v10 that will allow for backing up file shares. As long as shared VHDX or VHD Sets are not full blown equal citizens in Hyper-V when it comes to backups we’ll look at another solution.

That’s fine, but what about pure volume and that 3-2-1 (multiple copies, different technologies, different locations) rule or better? The cost and overhead with a second site can be a challenge for some. Can Azure File Sync help there? It sure can. But the big bonus is that is also a big step forward into helping with the need to access data from different locations in different ways

The problem that we might still have is that we are dependent on the same type of solution if its geo-replicated. Application consistent snapshots, storage array or Windows VSS based, that are geo replicated are a very important part of our protection against data loss. That have become even more obvious with the ever-growing threat of ransomware. In this threat model you try to separate access to data and backups both logically and physically as well as technology and operations wise. Full “air gapping” is the Walhalla here. Lacking that you need at least serious delaying factors to prevent an attacker to gain access to both source data, snapshot on Windows or on storage arrays, backup targets and replicas of all the above.

We could actually use Azure File Sync as a primary of secondary backup target and combine the best of both worlds. Interesting huh!

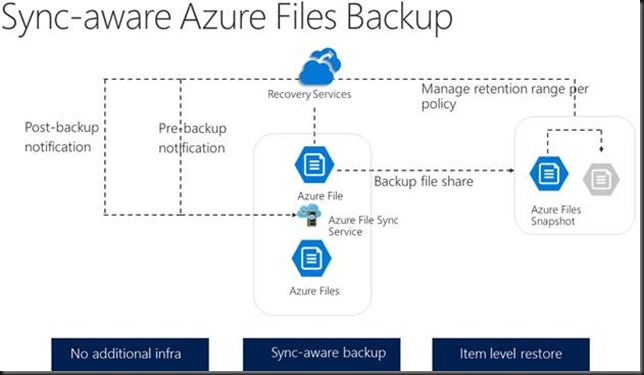

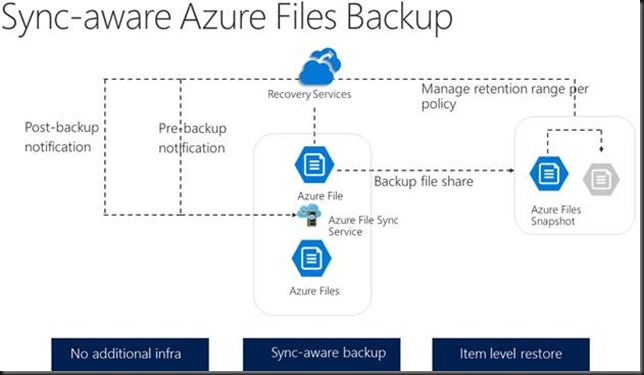

The fact that we can have snapshots of Azure File Shares is important to us for data recovery. It’s also important to have those replicated in case the Azure Datacenter West Europe goes south so to speak . The next question is whether our needs and wants are covered today in the preview?

Are all our needs and wants covered today in the preview?

The question we need to answer does it cover the needs you have well enough or better. Today Azure File Sync isn’t generally available and when it is it will be v1. So, things can and will improve.

Let’s forget of LUN size limitations (5TB) for the moment or some of our other future capability and functionality requests on the wish list. I don’t need 64TB or bigger per se but I’d like to see at least 20 to 30TB LUNs supported. Not sure if Azure File Sync snapshots will be limited by VSS to 64TB or that they break through that barrier like we can with certain hardware VSS providers.

Today we limit the size of LUNs for repair as well as data protection and recovery speeds or to minimize impact of a downed LUN. When the data is in the cloud and “only” a cache on premises these concerns might subside more and more. At Ignite 2017 Microsoft mentioned +/- 100TB sizes. So, there is an indication of that 64TB barrier being broken somehow.

The one thing we are not getting yet with Azure File Sync in preview is the multiple copies on two different technologies in two different locations (remember that 3-2-1 rule). You are and remain dependent on the Microsoft solution and maybe adherence to that rule is built into their technological design, maybe it isn’t. I don’t know right now. We have LRS for now, but not GRS. Do note that things change rather fast in the loud and what isn’t here today might be there tomorrow. GRS or ZRS is on the roadmap to be available when Azure File Sync goes into production.

Also, Microsoft realized that having snapshots in the same storage account was a risk. So, the backups will go to the recovery services vault in the future. It will also provide for long term data retention. This is important for archiving and authentic data that cannot be reproduced and as such has to be secured against altering.

Maybe you don’t trust MSFT or the cloud for 100%. If that’s the case and it’s a hard policy requirement of the business to have a protected data copy that is not dependent on the cloud provider we could propose a solution at a certain cost. You can use a file share cluster or, cheaper, a single file server in the datacenter (one location only) where everything is pinned locally (no cloud tiering) and which is used as a backup source to a backup target on different storage. The challenge there again is that you still have to deal with that massive volume of files yourself. If that’s worth the price/benefit versus the risks is not for me to decide. Yes, no, maybe so, that’s not. I deal in technological solutions and I buy and build results technological results for the needs of the organization. I do this in the context and in the landscape in which that business operates. I do not buy or build services, let alone journey’s. That’s the part I do and I do it very well . In my opinion Azure File Synch will play a major part in all this.

Other things on the wish list are:

- Global file lock mechanism and orchestration so with an error handling model (Try, Catch, Finally) so automation in different sites can deal with locked files in the application logic helping with the challenges of distributed access (read/write/modify) to data. I’m not asking to reinvent SharePoint here, don’t get me wrong but this area remains a challenge. Both for the user of data as well as for those trying to solve those challenges in code. There are just so many applications working in different ways that perfection in this arena will not be of this world and any solution will require some tweaking and flexibility to cater to differ needs. But I think that an effort here could make a real value proposition.

- Bringing ACL to Azure File Sync might enable new scenarios as well. It’s been on the wish list for a while and while MSFT is looking in to it we don’t have it yet.

- Support for data deduplication. Kind of speaks for itself and both customer & Microsoft would benefit.

- I’d love to see support for ReFSv3 as well in time because I’m still hoping Microsoft will bring the benefits of ReFSv3 too many more use cases than today. I think they got my feedback on that one loud and clear already.

So far for some of our musing that lead us to testing Azure File Sync. We’ll share more of our journey and become a bit more technical as we progress in our journey.