When implementing load balancing for RD Gateway we must take care not to forget load balancing the UDP traffic. Now your RDP Connection will still work over HTTPS alone if you forget this, but you’ll miss out on the benefits.

- Better experience of bad, unreliable network connections with high packet loss

- Better experience with high end graphics and in general a better graphical experience over WAN links.

As many people have load balanced their gateways since Windows Server 2008 (R2) when UDP was not into play yet and as things work without people might forget. The most important thing you need to know is that when leveraging UDP for RDP 8/8.1 the UDP session traffic has to leverage Direct Server Return (DSR) for the real servers configuration when we configure load balancing for a RD gateway farm with a KEMP Loadmaster. I’m focusing on the UDP part here, not the HTTPS part. That’s been done enough and the Kemp info on that is sufficient. The UDP part could do with some extra info.

The reason for this is that when UDP is leveraged for high end graphics we want to avoid sending all that graphical network traffic the load balancer. There is no real added value being performed there in this UDP use case but the load might get quite high. This is where DSR is leveraged wen configuring the Loadmaster. That means we also need to configure our real servers to uses Direct Return as the forwarding method. When you forget this you’ll lose UDP with RDP 8.1 but you might not notice immediately. If you’re not looking for it as the HTTP connection alone will let you connect and work, albeit with a reduced experience.

To read more on why it’s done this way (even if it seems complex and has drawbacks) see http://kemptechnologies.com/ca/white-papers/direct-server-return-it-you/ you’ll notice that for graphics it is great idea. By selecting Direct Server Return as the forwarding method (see later) changes the destination MAC address of the incoming packet on the fly (very fast) to the MAC address of one of the real servers. When the packet reaches the real server it must think it owns the VS IP address, which it doesn’t. So we use the loopback adapter to let the real server reply as if it does but we don’t respond to ARPs as that would cause issues with the load balancer who has the real IP of the virtual service. That’s where the 254 metric we configure in the demo below comes into play. Note that the real server responds over it normal NIC. Which is great and it helps with firewall rules not ruining the party. That’s why with DSR which leverages the the loopback adapter on the RD Gateway servers also requires you to configure the weak host / strong host behavior for the network configuration on those servers, it’s not answering itself! I’ll not go into details on this here but basically since Windows Vista and Windows Server 2008 the security model has change from weak host to strong host. This means that a system (that is not acting as a router) cannot send or receive any packets on a given interface unless the destination/source IP in the packet is assigned to the interface. In the “weak host” model, this restriction does not apply. Read more about this here. Let’s walk through this UDP/DSR/weak host setup & configuration.

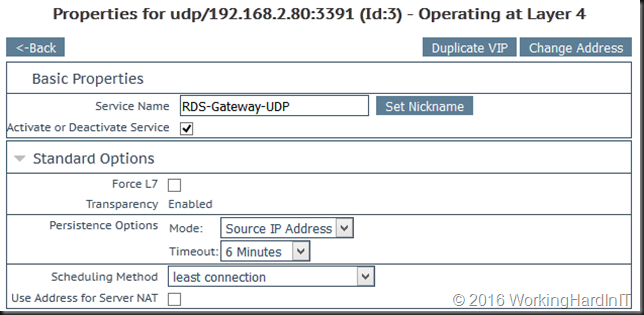

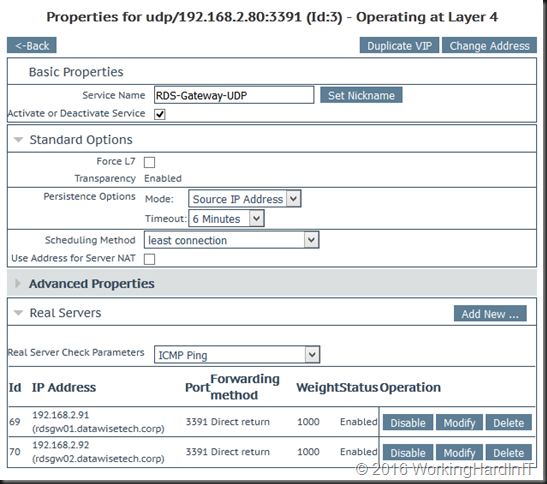

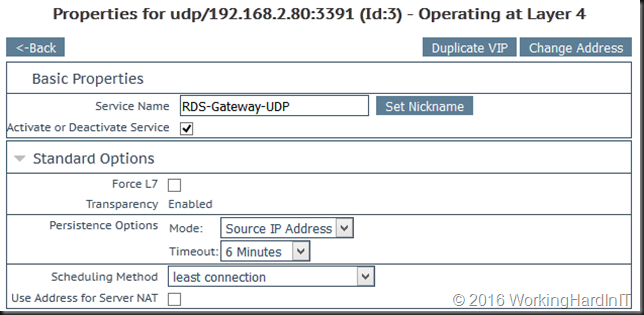

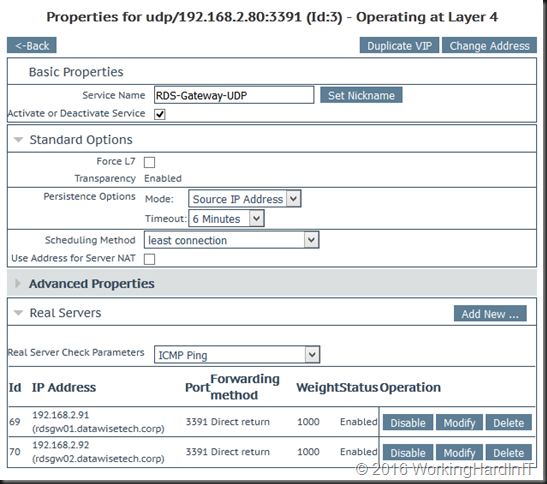

On your Loadmaster you’ll create a virtual service for UDP traffic.

- Select Virtual Services > Add New.

- Enter the IP address of your RD Gateway Farm

- Set 3391 as the Port.

- Select udp for the Protocol.

- Click Add this Virtual Service.

Open up the Standard Options to configure those

- We don’t need layer 7 as the UDP connections are tied to the HTPP connection and they will spawn and die with that one.

- We select Source IP Address as the Persistence Mode as the RD Gateway needs persistence to guarantee the connection stay together on the same RD Gateway server. Set the time out value no to high so it isn’t remembered to long.

- We select least connections as that’s the best option in most cases, let the farm node with the least load take on new connections. This is handy after down time for example.

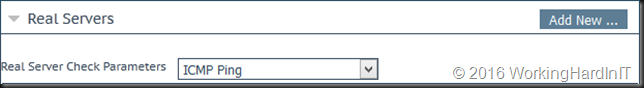

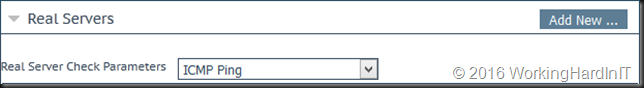

Now head over to the Real Servers section

- Make sure the Real Server Check parameters is set to ICMP ping, which is what the LoadMaster uses to check if the RD Gateway servers are alive.

- Click Add New to add an RD Gateway server, you’ll do this for each farm member.

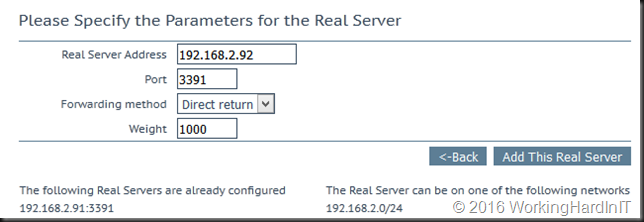

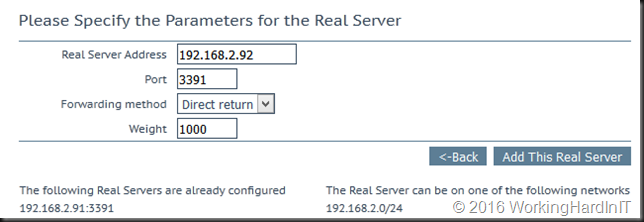

- Enter the Real Server Address for each RD Gateway.

- Enter 3391 as the Port.

- Select Direct return as the Forwarding method.

- Click Add This Real Server.

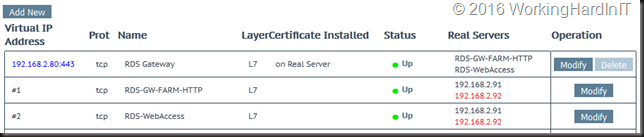

When you’re done it looks like this:

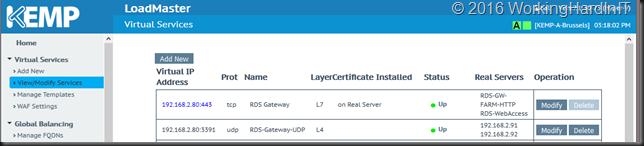

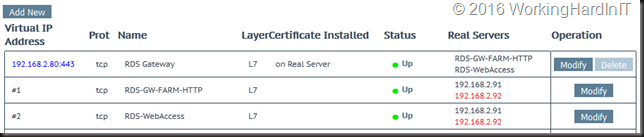

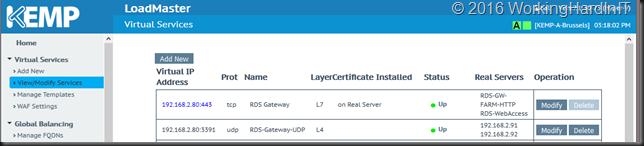

So now we need to check if the real servers are seen as on line and healthy …

If one RD Gateway server is down or has an issue you see this … no worries the LoadMaster sends all clients to the other farm member server.

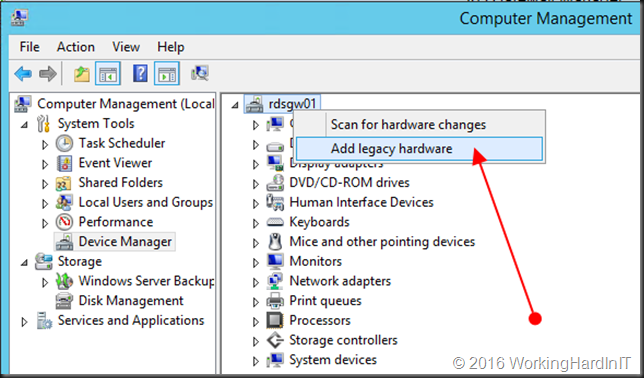

Configure the RD Gateway farm servers to work with DSR

We’re not done yet, we need to configure our RD Gateway servers in the farm to work with DSR.

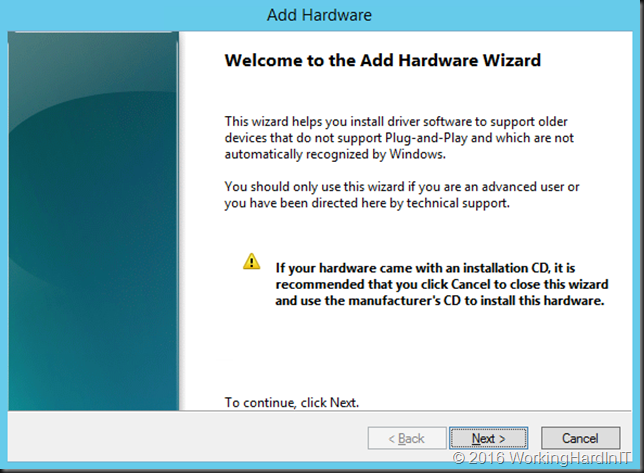

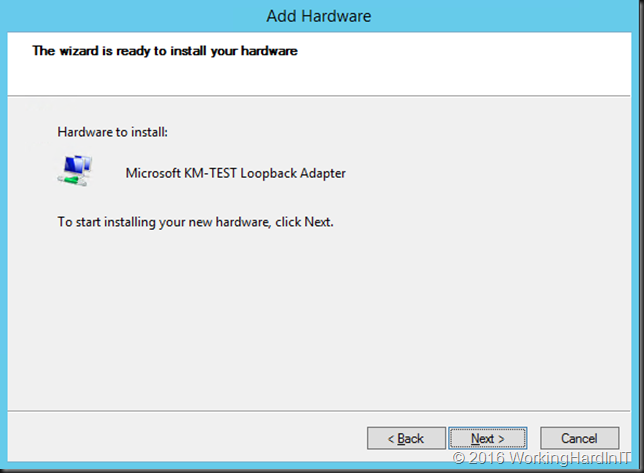

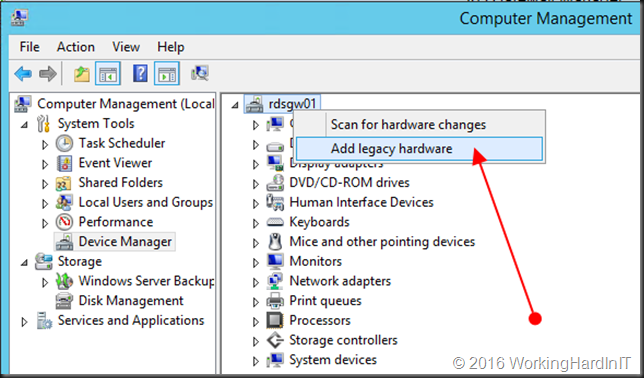

Go to Device Manager, right-click on the computer name and select Add legacy hardware

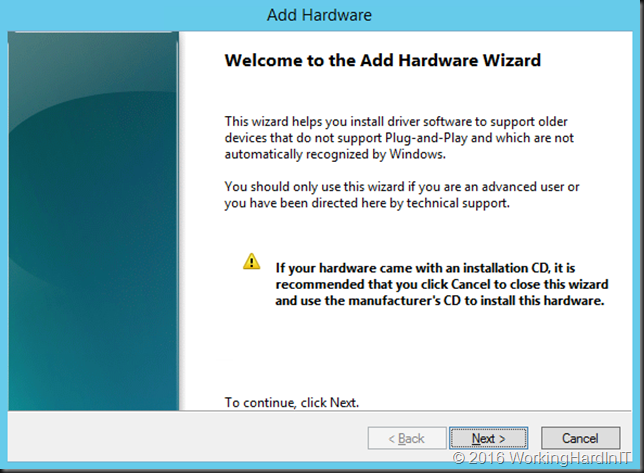

Click next on the welcome part of the wizard …

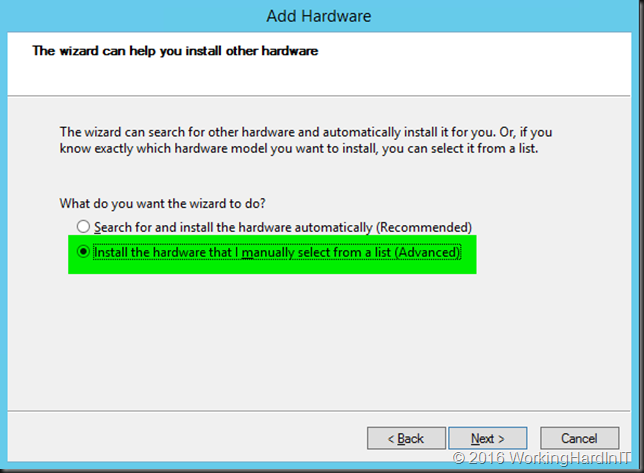

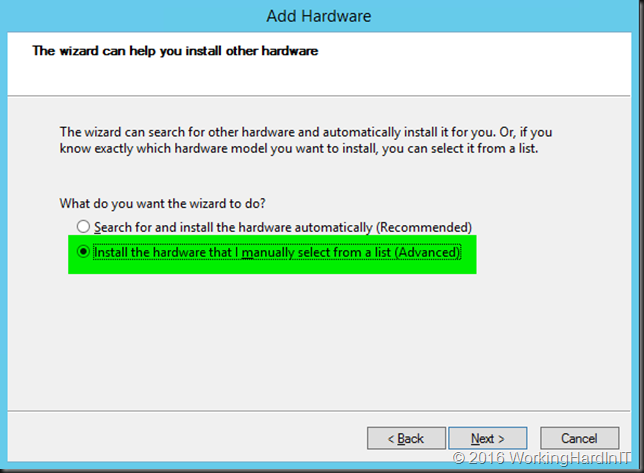

Select “Install the hardware that I manually select from a list (Advanced)” and click Next …

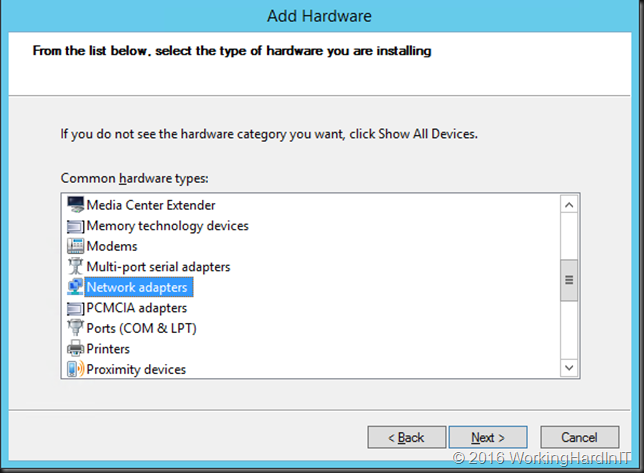

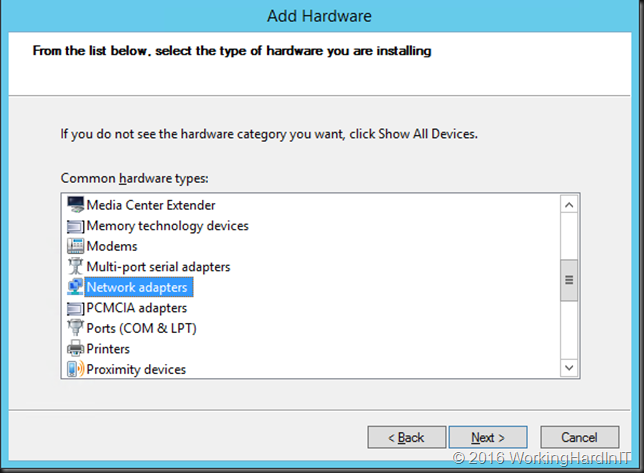

Scroll down to network adapters, select it and click Next …

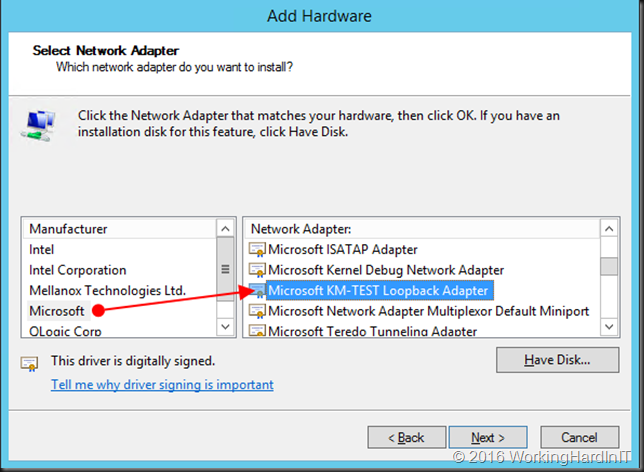

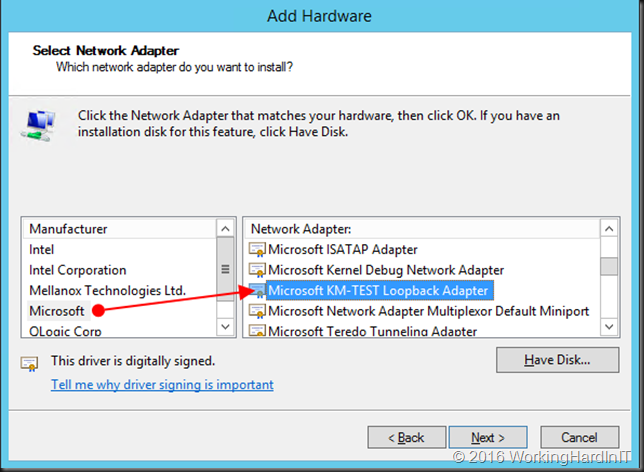

Under Manufacturer choose Microsoft and as Network Adapter scroll down to Microsoft KM-TEST Loopback Adapter, select it and click Next.

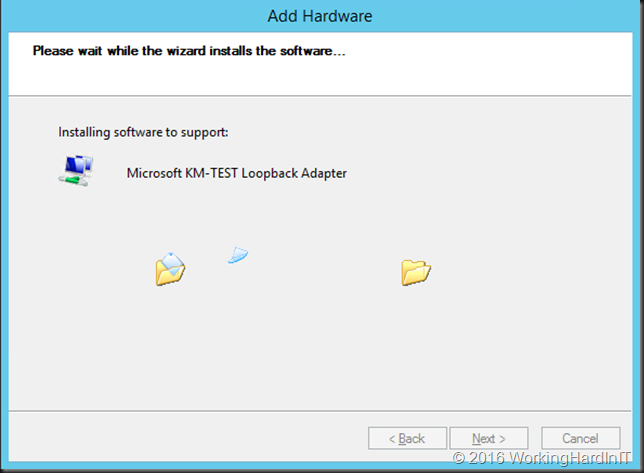

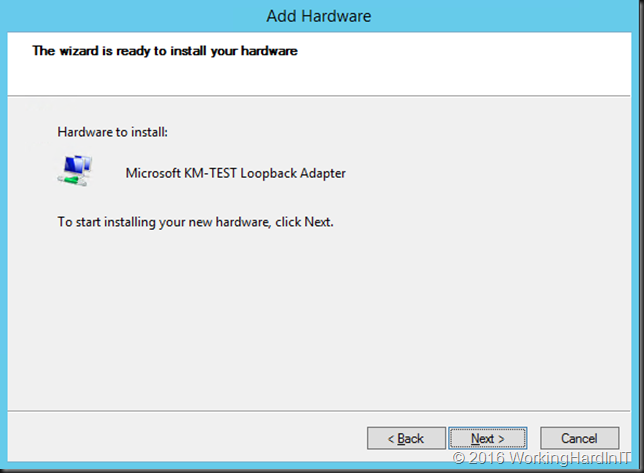

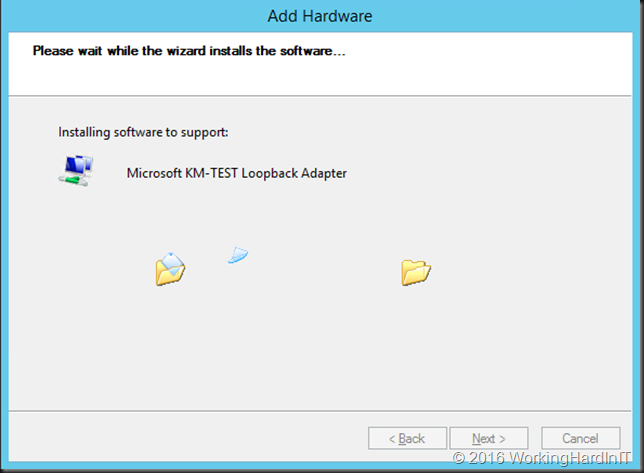

Click Next to install it …

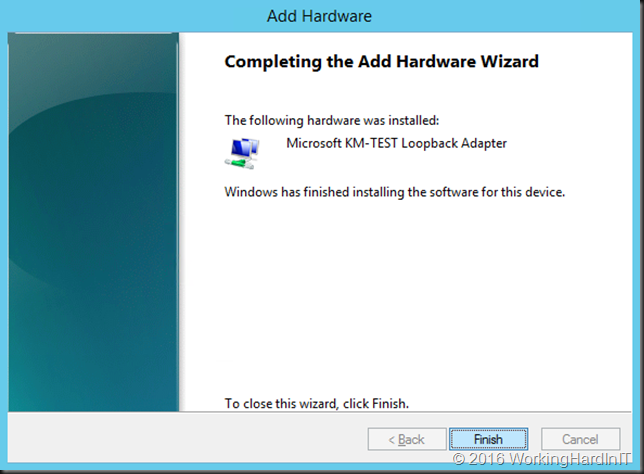

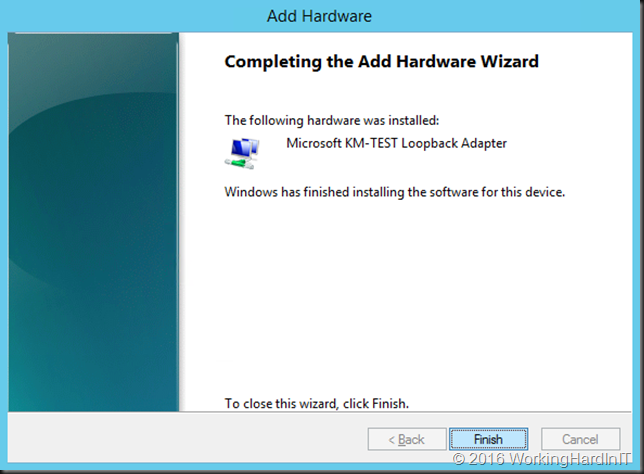

Click next to close the Wizard.

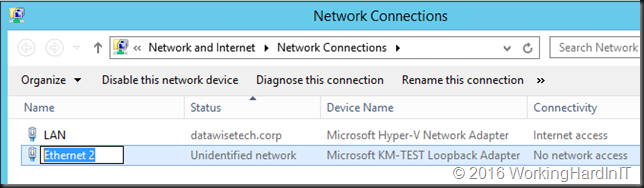

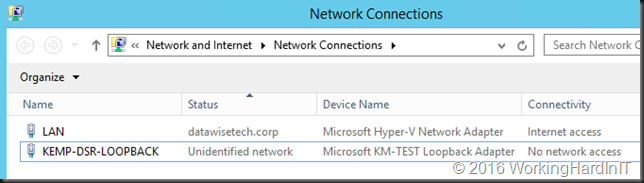

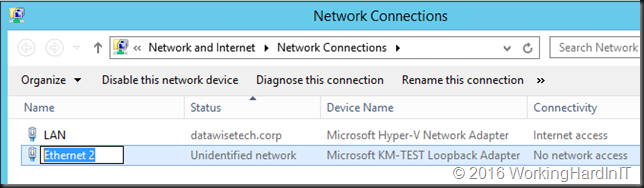

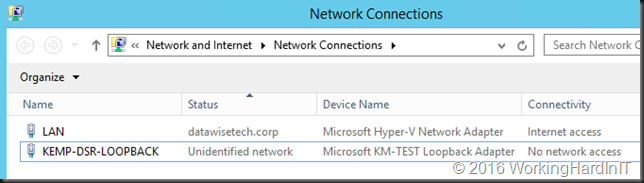

Now go to and change the name so you can easily identify the loopback adapter …

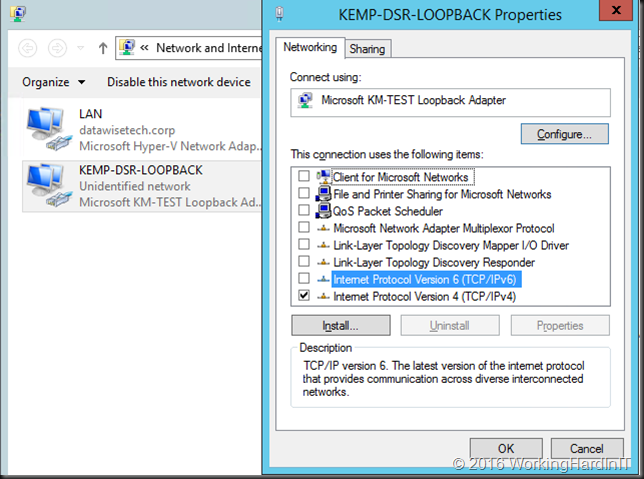

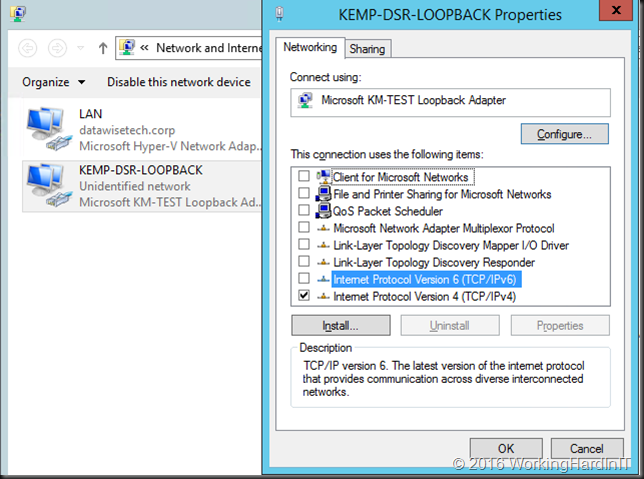

In the properties of the loopback adapter we disable everything we don’t need. In this case, we only need IPV4 and nothing else. We also need to configure the TCP/IP settings for the loopback adapter. So open up the TCP/IP v4 properties of that NIC …

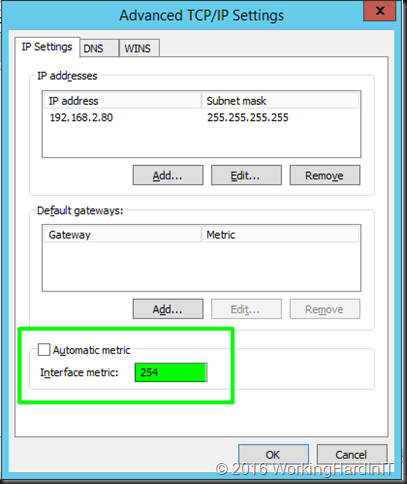

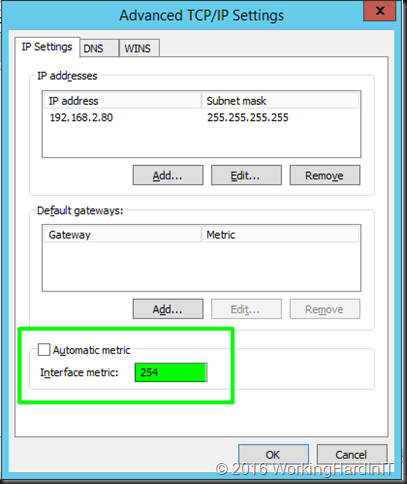

Enter the IP address of the Virtual Service for UDP on the load master and, very important enter a subnet mask of 255.255.255.255 for the loopback address. It’s a subnet of 1 host, the VIP IP address. Do not enter a gateway!

Now go to the advanced setting and deselect Automatic metric and fill out 254. This step prevents the server to respond to ARP requests for the MAC of the VIP with the MAC of the loop back adapter.

Also uncheck “Register this connection”s address in DNS” to avoid any name resolution problems for the real servers.

Finally disable NETBIOS over TCP/IP.

What we are doing with all the above is preventing any issues with normal network traffic to this real servers being affected by the loopback adapter who’s one and only function is to enable DSR and nothing else. It’s a bit “paranoid” but it pays to be and prevent problems.

Dealing with Strong Host / Weak Host setting in W2K8 and higher

We now still need to deal with the strong host security model and allow the LAN interface to receive traffic from the KEMP and allow the KEMP to receive and send traffic form/to the LAN interface. This is done by executing the following commands:

netsh interface ipv4 set interface LAN weakhostreceive=enabled

netsh interface ipv4 set interface KEMP-DSR-LOOPBACK weakhostreceive=enabled

netsh interface ipv4 set interface KEMP-DSR-LOOPBACK weakhostsend=enabled

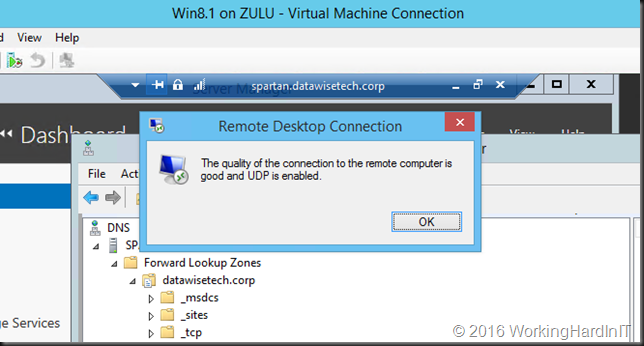

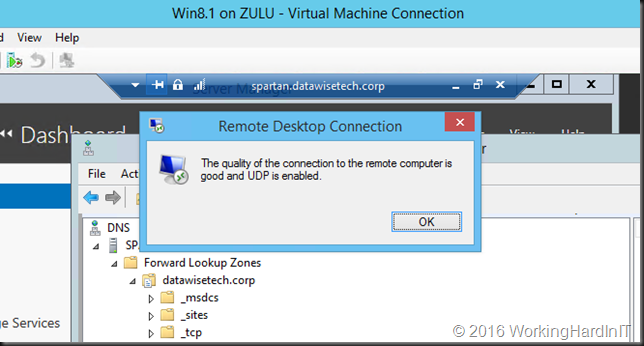

That’s it. You should now have HTTP/UDP connections in your RD Gateway monitoring when using a load balancer and set it up correctly. Remember if this isn’t configured correctly you’ll still connect but you lose the benefits the UDP connections offer.

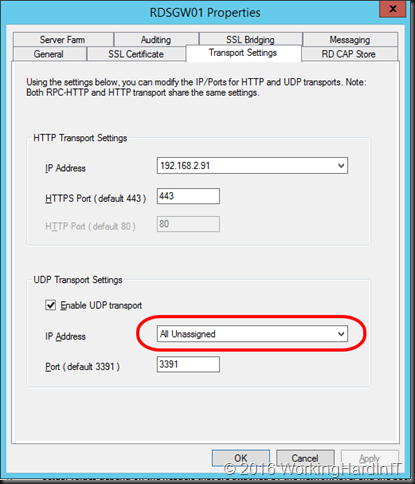

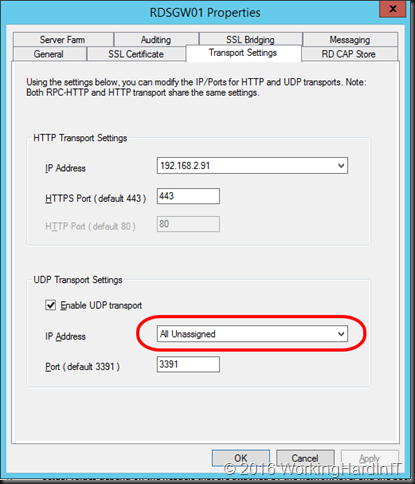

Now another thing you need to be aware of in your RD Gateway configuration is that for UDP to work with DSR is that the UDP Transport Settings need to be configured for “all unassigned” IP addresses. Other wise DRS won’t work and you’ll lose UDP. This make sense, you’ll receive traffic on the VIP on your real servers. It’s just like DSR with a web server where in IIS you’ll bind both the LAN and the loopback adapter to port 80 or 443 for the site.

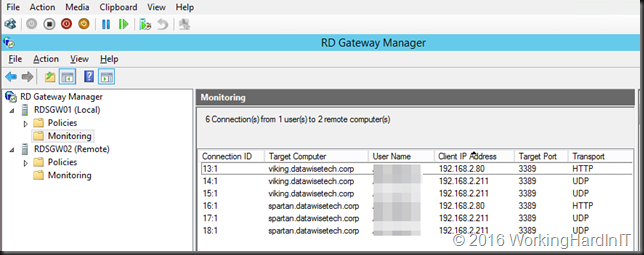

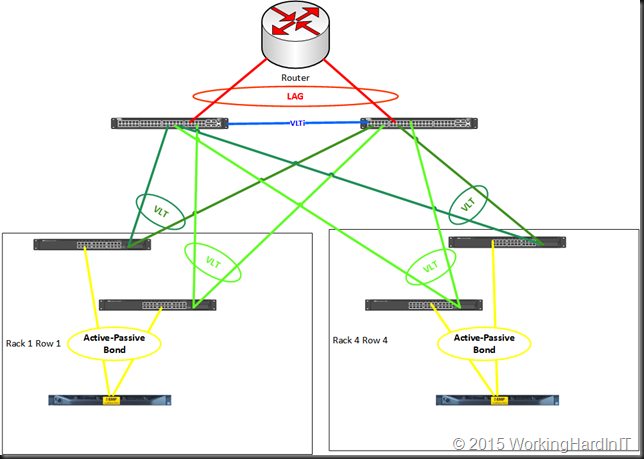

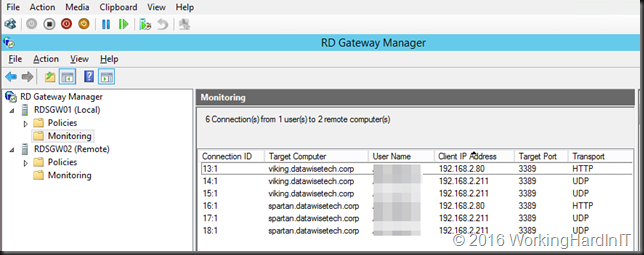

We can see that one client is connected via RDSGW01 to two servers (Viking and Spartan) leveraging HTTP and UDP. The load balancing is done via the KEMP Loadmasters in geo-redundant fashion.

Yes, my geo load balanced RD Gateway Server farms are providing UDP support for the servers and clients we RDP in to.

Combined with those servers and clients being spread amongst the sites provides for enough business continuity to keep the shop running when a site fails, so it’s more than just connectivity!