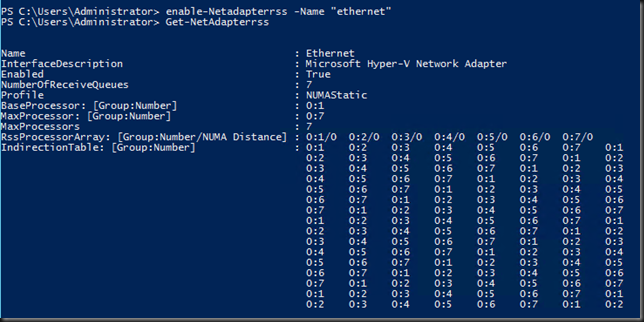

When optimizing your RSS/VMQ settings for maximum performance you’ll normally (I hope) do this in PowerShell. Save that script, with some comments on why you configured it that way, and make it part of your Hyper-V host deployment scripts.

Why? Automation is king, but you’ll also need that script again for sure. There is this “tendency” for NIC firmware/driver updates to reset your RSS/VMQ optimizations back to their defaults. That’s a bit of a bummer if you have to redo all the work instead of having a script ready to go. I have seen many a deployment where the configuration was missing after firmware/driver upgrades, so please, check!
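As a rough idea of what such a check could look like, here is a minimal sketch that compares the current VMQ settings against the values you expect. The adapter names (`NIC1`, `NIC2`) and the processor numbers are assumptions for illustration only; use the values from your own deployment script.

```powershell
# Hypothetical expected VMQ settings; replace with the values from your own deployment script.
$expected = @(
    [pscustomobject]@{ Name = 'NIC1'; BaseProcessorNumber = 8;  MaxProcessors = 4 }
    [pscustomobject]@{ Name = 'NIC2'; BaseProcessorNumber = 12; MaxProcessors = 4 }
)

foreach ($e in $expected) {
    # Read the current VMQ settings of the adapter.
    $vmq = Get-NetAdapterVmq -Name $e.Name

    if ($vmq.BaseProcessorNumber -ne $e.BaseProcessorNumber -or
        $vmq.MaxProcessors -ne $e.MaxProcessors) {
        Write-Warning "VMQ settings on $($e.Name) differ from the expected configuration."
    }
    else {
        Write-Output "VMQ settings on $($e.Name) are as expected."
    }
}
```

Run something like this after every firmware/driver upgrade, and re-run your configuration script when a warning shows up.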

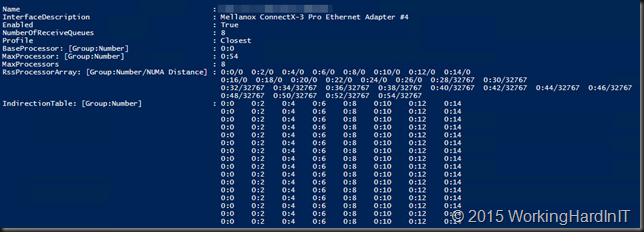

Figure: Where has my optimized configuration gone after a driver/firmware upgrade?

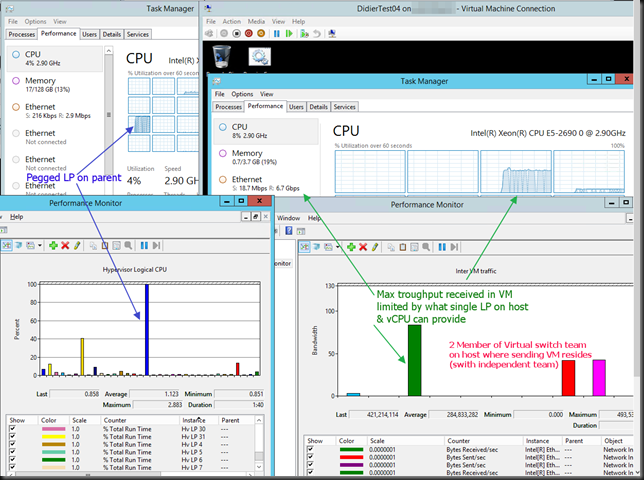

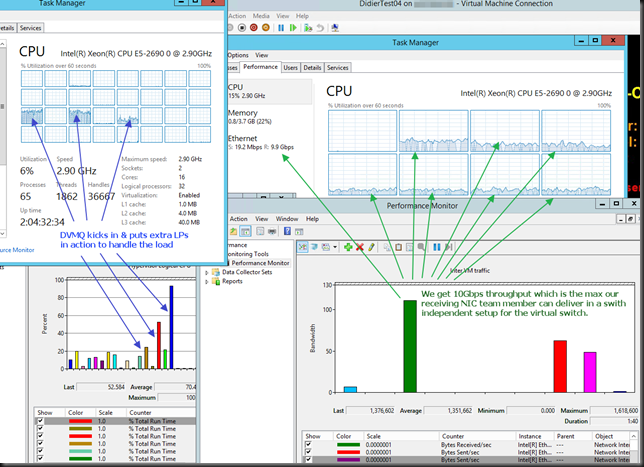

The good news is that this isn’t a showstopper: things will keep working, just without your optimizations. With VMQ, however, issues might occur depending on the NIC team setup for your vSwitch. When doing NIC teaming for your virtual switch it’s important to get it right. With switch dependent teaming (LACP/Static) the team runs in Min Queues mode and the NICs in the team need to use overlapping processor sets. With switch independent teaming (using Hyper-V Port or Dynamic load balancing) the team runs in Sum of Queues mode and the NICs in the team need to use non-overlapping processor sets, so you need to configure each NIC in your team to use different processors.
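To make the non-overlapping case concrete, here is a sketch for a switch independent team of two NICs. It assumes a hypothetical host with 16 cores and hyper-threading disabled, and adapters named `NIC1` and `NIC2`; none of these values come from a real deployment, so adjust them to your own hardware.

```powershell
# Assumption: 16 cores (0-15), hyper-threading disabled, team members named NIC1 and NIC2.
# Cores 0-7 are deliberately left alone for other work in this example.

# NIC1 gets cores 8-11.
Set-NetAdapterVmq -Name 'NIC1' -BaseProcessorNumber 8 -MaxProcessorNumber 11 -MaxProcessors 4

# NIC2 gets cores 12-15, so the two processor sets do not overlap.
Set-NetAdapterVmq -Name 'NIC2' -BaseProcessorNumber 12 -MaxProcessorNumber 15 -MaxProcessors 4

# Verify the result.
Get-NetAdapterVmq -Name 'NIC1', 'NIC2'
```

For a switch dependent team you would instead give both NICs the same base and maximum processor so their processor sets overlap.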

On top of that you might want to, or rather should, keep the cores used for RSS separate from the cores used for VMQ. Using SMB Direct for CSV/LM traffic also helps here, as RDMA offloads that network processing from the CPU to the NIC.
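A sketch of that separation, again on the assumed 16-core host without hyper-threading: the NICs that are not bound to the vSwitch (hypothetically named `SMB1` and `SMB2` here) get their RSS processors on cores 1 to 7, which leaves cores 8 to 15 free for VMQ on the vSwitch team members.

```powershell
# Assumption: SMB1 and SMB2 are non-vSwitch NICs that use RSS; names and core ranges are illustrative.
# RSS stays on cores 1-7 (core 0 is skipped), VMQ on the vSwitch NICs uses cores 8-15.
Set-NetAdapterRss -Name 'SMB1' -BaseProcessorNumber 1 -MaxProcessorNumber 7 -MaxProcessors 7
Set-NetAdapterRss -Name 'SMB2' -BaseProcessorNumber 1 -MaxProcessorNumber 7 -MaxProcessors 7

# Verify the result.
Get-NetAdapterRss -Name 'SMB1', 'SMB2'
```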