Introduction

Recently I had an interesting discussion on how to leverage Discrete Device Assignment (DDA) for GPU needs when it’s only needed for a certain number of virtual machines. Someone had read my blogs on leveraging DDA that made here optimistic and enthusiastic. But she noticed in the lab she could not leverage DDA on a VM running on a cluster and she could not use storage QoS policies on a stand-alone Hyper-V host with local storage. So, what could she do?

Well for one, her findings are correct. Microsoft did not enable DDA on clustered virtual machines. It doesn’t make sense as the GPU hardware is tied to the virtual machine and any high availability, both planned (live migration) or unplanned (failover) isn’t possible and available anyway. It just cannot be done. I hear you, when you say “but they pulled it off for SR-IOV for networking”. Sure, but please keep in mind that network cards with Ethernet and TCP/IP allows for different approaches than high end video.

My favorite deployment for VMs with Discrete Device Assignment for GPU

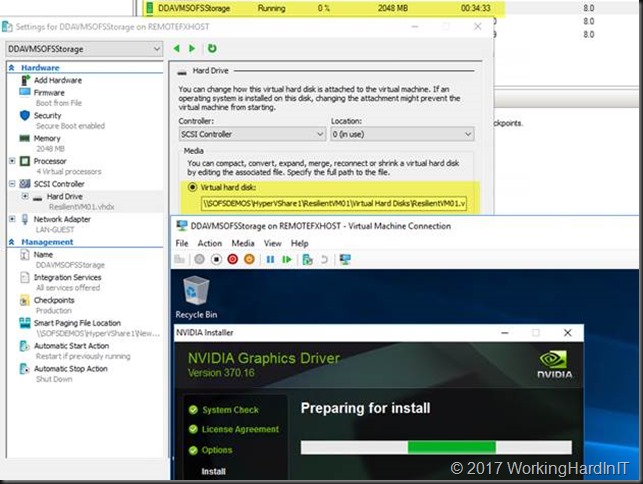

My favorite deployment for VMs with Discrete Device Assignment (DDA) for GPU leveraged SMB3 SOFS shares for the virtual hard disks and stand-alone Hyper-V hosts that are member servers in the domain. Let me explain why.

Based on what we discussed above we have some options. One work around is running the DDA virtual machines not high available on local storage on a cluster node. But that would mean you would have a few VMs on all the nodes and that all those nodes must have a DDA capable GPU. Or if you limit the number of nodes that have such a GPU you’ll have a few odd balls in your cluster. You’ll need to manage some extra complexity and must save guard against assigning a GPU via DDA that is already in use for RemoteFX. That cause all kinds of unpleasantness, nothing too deadly but not something you want to do if on your production VDI clusters for fun. It’s a bit like not running a domain controller on a CSV and not making it highly available. If that’s the only option you have you can do that, and I do when needed as Microsoft has improved a lot of things to make this a better and less risky experience. But I prefer to have either physical one or host it on a separate non-clustered Hyper-V host if that’s an option because not all storage solutions and environments have all capabilities needed to make that fool proof.

Also note that running other storage on a S2D node isn’t supported. You have your OS on the boot disks and the disks used in storage spaces. Odd ones out aren’t supposed to be there as S2D will try to recruit them. You can get do it when using traditional shared storage

What I also don’t like about that is that if the cluster storage is not SMB3 SOFS you don’t get the benefit of storage QoS policies in Windows Server 2016, that only works with CSV. So optionally you could leave the non-clustered VM on a CSV. But that’s perhaps a bit confusing and some people might think some forgot to make the machine high available etc.

My preferred setup to get high available storage for virtual machines with DDA needs that benefits from what storage QoS polies have to offer for VDI is to use standalone Hyper-V hosts that have DDA capable GPUs and leverage SMB3 SOFS shares for the virtual Machines.

The virtual machines cannot be high available anyway so you lose nothing there. The beauty is that in this case, as you leverage a Windows Server 2016 SOFS cluster for Hyper-V storage over SMB3 shares, you do get Storage QoS policies.

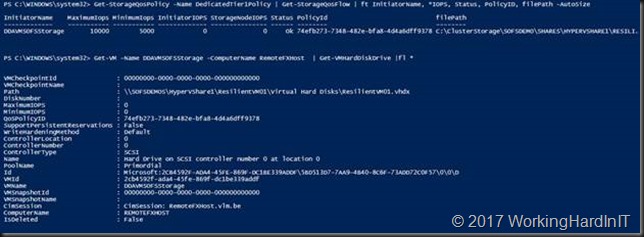

#On a SOFS node

Get-StorageQosPolicy -Name DedicatedTier1Policy | Get-StorageQosFlow | ft InitiatorName, *IOPS, Status, PolicyID, filePath -AutoSize

#Query for the VM disks on the Hyper-V node

Get-VM -Name DDAVMSOFSStorage -ComputerName RemoteFXHost | Get-VMHardDiskDrive |fl *

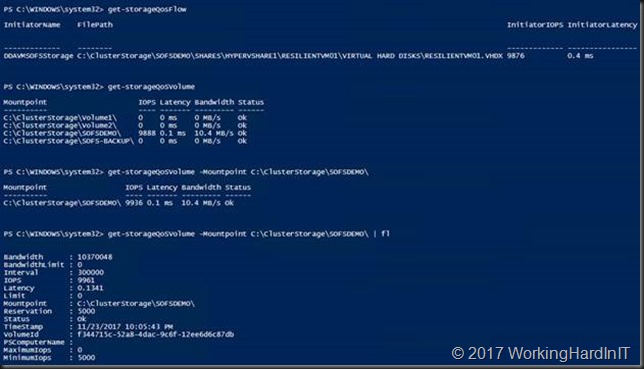

#We generate some IO and get some stats on a SOFS node

get-storageQosFlow

get-storageQoSVolume -Mountpoint C:\ClusterStorage\SOFSDEMO\

get-storageQoSVolume -Mountpoint C:\ClusterStorage\SOFSDEMO\ | fl

You can start out with one Hyper-V node and add more when needed, that scale out. Depending on the needs of the virtual machines and specs of the servers (Memory, CPU cores) and the capability and number of GPU in the video cards you get some scale up as well.

To learn more about DDA go here: https://blog.workinghardinit.work/?s=DDA&submit=Search

To learn more about storage QoS policies go here:

- Hyper-V Storage QoS in Windows Server 2016 Works on SOFS and on LUNs/CSV

- Maximum bandwidth in Hyper-V storage QoS policies

- Take a look at Storage QoS Policies in Windows Server 2016

Some more considerations

By going disaggregated. You can leverage a SOFS share for both virtual machines running on a Hyper-V cluster or on stand-alone (non-clustered) Hyper-V that are domain members. The SOFS cluster can be leveraging S2D, traditional storage spaces with shared SAS (JBODs) or even a FC, iSCSI or shared SAS SANS if that the only option you have. That’s all OK as long as it’s SOFS running on Windows Server 2016 and the Hyper-V hosts (stand alone or clustered) are a running 2016 as well (needed for Storage QoS policies and DDA). There is no need for the Hyper-V host to be part of a cluster to get the best results you need. If I use SOFS for both scenarios I can use the same storage array, but I don’t need to. I could also use separate storage arrays. If the Hyper-V cluster is leveraging CSV instead of SOFS I will need to use a separate one for SOFS as its ill advised to mix Hyper-V workloads with the SOFS role. Keep things easy, clear and supportable. I’ll borrow a picture I got from a Microsoft PM recently, do seek out the bad ideas.