An error occurred connecting to the cluster

This morning I woke up to a bunch of failed backup notifications of our trusted Veeam Backup & Replication v9.5 update 2 solution. After 3:30 AM the backups of one particular cluster started failing.

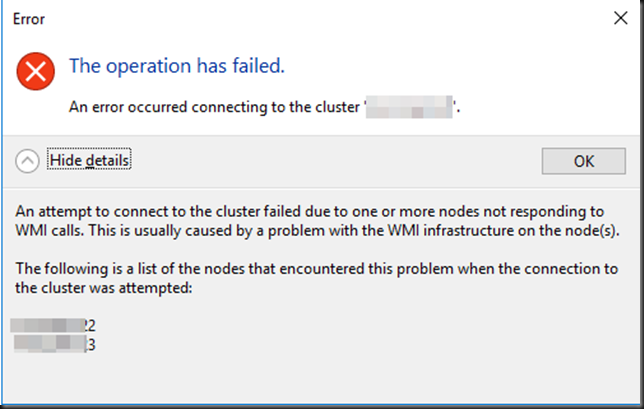

I went to have a look but I could not connect to the 3 node cluster.

I logged on to the cluster nodes themselves and did a quick verification of network connectivity, DNS etc. That was all fine. WMI services were running on all nodes but on node 2 and 3 they were not functional.

Cleary we have a WMI issue. And sure enough, no Hyper-V manager available on those 2 nodes but we did have it on the one properly functioning node.

We tested some PowerShell WMI queries (get-wmiobject mscluster_resourcegroup -computer NodeToTest -namespace “ROOT\MSCluster“) to the cluster and this confirmed that WMI was toast on those two nodes.

Fixing the issue

The good news was that all the VMs were all up and running – a few that had RHS.exe issues – but were still alive pure Hyper-V wise. That explains why they didn’t have any support calls come in. So if we can fix this without causing down time this would be great. To try this we decided to restart the WMI service.

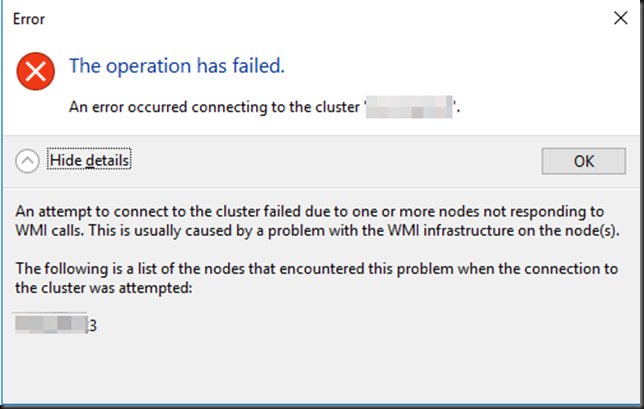

On problematic node 2 this worked. It restarted depending services as well such as Hyper-V Virtual Machine Management, User Access Logging Service, IP Helper and the Veeam Installer Service and the Veeam Hyper-V Integration Service. We got connectivity back via Hyper-V manager but the Failover Cluster manager GUI remained an issue but now only complained about node 3.

We wanted to avoid rebooting node 3 to avoid downtime to the VMs. So what we did there is stop the depending services that we could stop. It was vmms.exe that was stuck in shutdown we just killed the process manually with stop-Process -name “vmms” -force

That allowed the WMI service to be restarted. We then started the depending services manually and we got back the connectivity to Hyper-V Manager on node 3.

The Failover Cluster manager GUI could also connect again to the cluster. We checked the cluster for other issues. When done and found OK we live migrated the VMs node per node and did a reboot of every node one by one. This to have cleanly started nodes and to see if any trouble some event were logged during the startup. Normal operations were resumed.

Do note that there is a blog on TechNet about a similar issue but with a different error message. That was caused by missing cluswmi.mof file due to an ill advised use of run mofcomp.exe *.mof. This was not the case here. A reboot of the misbehaving nodes would have done the trick as well (as blogged here Trouble Connecting to Cluster Nodes? Check WMI! ) but we avoided as much downtime as possible here by going the route we did.