We’ll go back to basics today. Sometimes the obvious, no matter how evident it is to us technologists, is challenged. Recently we got the remark that we were wasting CPU cycles by assigning too many vCPUs to certain virtual machines on our Hyper-V cluster. So we had to explain that high availability has a price. On top of that, we had to explain that things are not as wasteful as they seem in a virtual environment.

The case

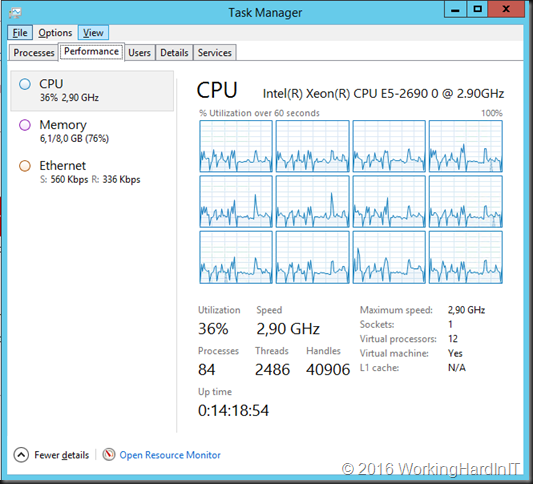

Here’s one of the “offending” virtual machines. They assumed that we were wasting at least 50% of its 12 vCPUs.

This is one node in a two-node, load-balanced (active-active), highly available solution. This provides zero downtime during scheduled maintenance and very little downtime during system failures.

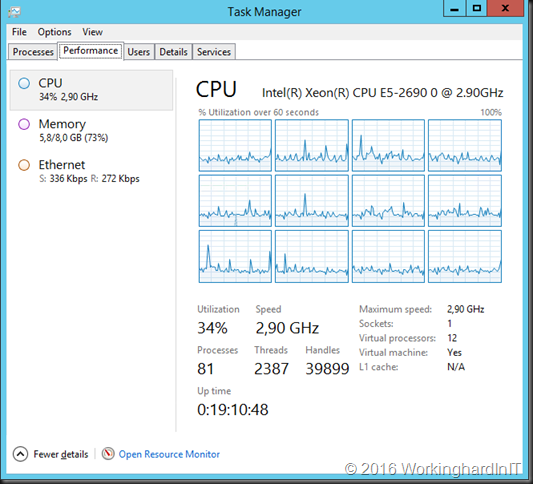

And here’s the second node (yes, the 1st node has been down for scheduled maintenance more recently than node 2).

In a 2 node HA solution you need to make sure that one node can handle the entire workload. This is the absolute minimum for an N+1 solution, which means you can lose 1 node. N is the number of nodes needed to guarantee the agreed-upon service level, and the number after the plus sign defines how many node failures can be tolerated before the service is affected.

In the above example each node needs the CPU resources to run the entire workload on its own without affecting the service. Therefore, when both nodes are up this might seem like a waste to the uninitiated. It is, however, required to achieve the high availability goal. In this case, sustained CPU usage above 75% will reduce service quality and can even compromise the usability of that service.
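To put numbers on this, here is a minimal sketch of the arithmetic in Python. It assumes the load is spread evenly over the nodes and that CPU usage scales linearly when a surviving node takes over, which real workloads only approximate. The function names are made up for illustration; the 75% ceiling is the one mentioned above.

```python
def utilization_after_failure(per_node_util: float, nodes: int, failed: int = 1) -> float:
    """CPU utilization on each surviving node once `failed` nodes are gone.

    Assumes an evenly balanced load and linear scaling (a simplification).
    """
    survivors = nodes - failed
    if survivors <= 0:
        raise ValueError("no surviving nodes left to carry the workload")
    return per_node_util * nodes / survivors


def max_safe_utilization(ceiling: float, nodes: int, failed: int = 1) -> float:
    """Highest per-node utilization with all nodes up that still keeps the
    survivors at or below `ceiling` after `failed` nodes are lost."""
    survivors = nodes - failed
    if survivors <= 0:
        raise ValueError("no surviving nodes left to carry the workload")
    return ceiling * survivors / nodes


# Two nodes, one may fail, and the survivor must stay at or below 75%.
print(f"max per-node usage with both nodes up: {max_safe_utilization(0.75, nodes=2):.1%}")
# Two nodes each running at 40% put the single survivor above the ceiling.
print(f"survivor usage after a failure: {utilization_after_failure(0.40, nodes=2):.0%}")
```

Under these assumptions each node in the 2 node setup should sit below roughly 37.5% while both are up, so a virtual machine that looks more than half idle is exactly what the design calls for.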

I did not even dive into the dangers of designing purely based on averages during this “explanation”. That was one step too much for the level of the discussion.

It’s also important to note that Hyper-V CPU scheduling is highly intelligent and is far less susceptible to wasting CPU cycles through over provisioning of vCPUs than some other solutions are or used to be. Knowing the capabilities and inner workings of the technology used is also important in all this. More nodes generally also make “over provisioning” less of an issue. When you have 10 nodes and you lose 1, you have only lost 10% of the capacity, not 33% like in a 3 node cluster.
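The effect of the node count is easy to check with a few lines of Python. This is only a sketch that assumes identical nodes, and `capacity_lost` is a name made up for this example.

```python
def capacity_lost(nodes: int, failed: int = 1) -> float:
    """Fraction of total cluster capacity that disappears when `failed`
    out of `nodes` identical nodes go down."""
    if nodes <= 0 or not 0 <= failed <= nodes:
        raise ValueError("need nodes > 0 and 0 <= failed <= nodes")
    return failed / nodes


for nodes in (2, 3, 10):
    print(f"{nodes} nodes, 1 failure: {capacity_lost(nodes):.0%} of capacity lost")
```

The same fraction is the share of every node you have to keep free to absorb that failure, which is why the “waste” per node shrinks as the cluster grows.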

Ideally you have 3 nodes so that even during an issue with one node you still maintain redundancy. However, if you want acceptable service during a 2 node failure you’ll need to go to N+2. For example, if you need 2 nodes to provide the services and want to handle losing 2 nodes gracefully, you’ll need 4 nodes, and so on. The larger the node count, the wiser it is to go to an N+2 model, and ideally you’ll distribute the nodes over separate failure domains. An example of this is a redundant geo-load balanced web farm of 32 virtual machine nodes spread over 2 locations and running on separate hardware failover clusters in each location. As you can see, the higher the stakes and demands, the faster the cost and potential complexity rise. You can offload some of the complexity by leveraging a public cloud like Azure, but the costs will still be there. There is no such thing as a free lunch, although some are quite easy and affordable for what you get.
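The N+M bookkeeping can be sketched in a few lines of Python. The helper names are hypothetical, and the 3 node case assumes, as in the 2 node example earlier, that a single node can carry the whole workload (N = 1).

```python
def total_nodes(needed: int, tolerated_failures: int) -> int:
    """Cluster size for an N+M design: N nodes to carry the load plus M spares."""
    if needed < 1 or tolerated_failures < 0:
        raise ValueError("need at least 1 working node and a non-negative spare count")
    return needed + tolerated_failures


def spare_after_failures(total: int, needed: int, failures: int) -> int:
    """Spare nodes left after `failures` nodes are lost.

    0 means the service still runs but without redundancy;
    a negative value means the service level can no longer be met.
    """
    return total - failures - needed


print(total_nodes(needed=2, tolerated_failures=2))           # N+2 with N = 2
print(spare_after_failures(total=3, needed=1, failures=1))   # 3 nodes, 1 down
print(spare_after_failures(total=2, needed=1, failures=1))   # 2 nodes, 1 down
```

With 3 nodes and N = 1 there is still one spare after a failure, while the 2 node cluster keeps running but has nothing left in reserve.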

Conclusion

High availability has a price. I did mention that already, right? To keep your services running at a level that is both workable and acceptable to your customers and stakeholders, you will need to over provision to a degree. There is no magic here. When your solutions are being scrutinized by people with no real background, experience or context in high availability, you might need to explain this.