Introduction

You might have noticed that I’m pretty impressed by what Microsoft is doing with ReFS v3 in Windows Server 2016. You can read some of my musings on it in ReFS vNext Block Cloning and ODX, and take a look at a comparison between ReFS & ODX speeds when creating VHDX files in Lightning Fast Fixed VHDX File Creation Speed With ReFS on Windows Server 2016.

Note that ReFS block cloning is also leveraged for accelerated checkpoint merges, VHDX resizing, etc.

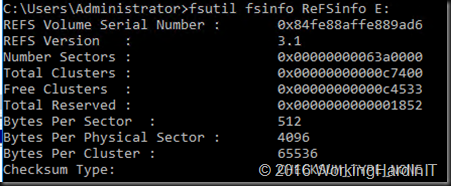

Now it goes without saying that Hyper-V (they’re the tip of the spear at MSFT) and other Microsoft products would take advantage of the capabilities of ReFS. But now we know that Veeam Backup & Replication 9.5 has made use of ReFS to help with the resilience of their backups, the speed of their Synthetic Full backups and the space required.

To a Hyper-V MVP and a Veeam vanguard it was obvious that these two combined just had to lead the way for others to follow.

Veeam leads the way by leveraging ReFS v3 capabilities

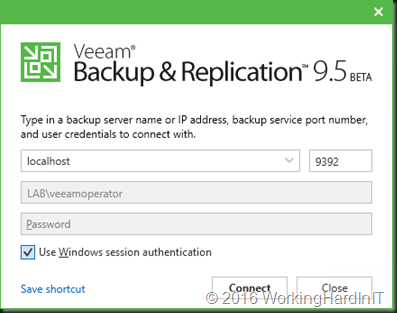

Veeam Backup & Replication 9.5 will leverage ReFS v3 …

and by doing so they deliver the following benefits:

- Shorter backup windows and a reduced backup storage load on the repository.

- Reduced backup target storage capacity, which reduces or eliminates the need for deduplication in many scenarios.

- Better backup data protection by leveraging the native ReFS capabilities to protect against bit rot, which was one of the prime goals for which Microsoft designed ReFS.

How is this done?

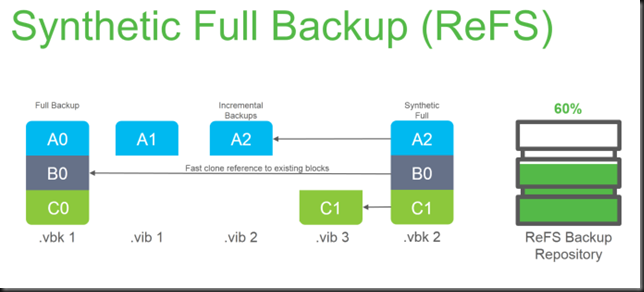

ReFS v3 has “fast cloning” technology which Veeam is leveraging. This results in up to 10 times faster creation and transformation of synthetic full backup files! ReFS fast cloning allows for creating new files without physically moving data blocks between files. This is what delivers even shorter backup windows and lower backup storage load on the repository or repositories.

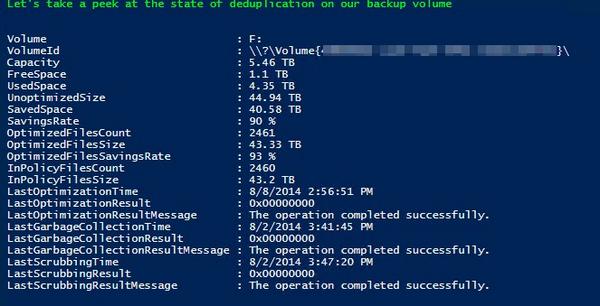

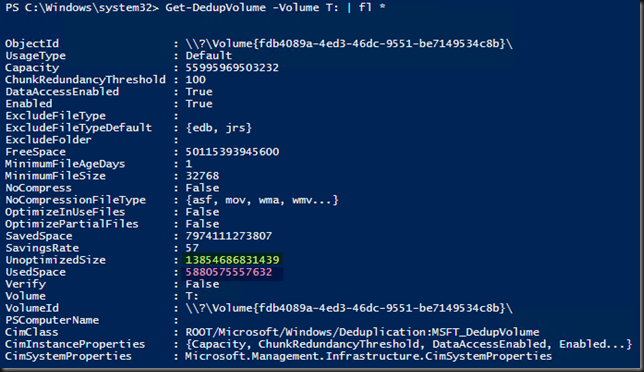

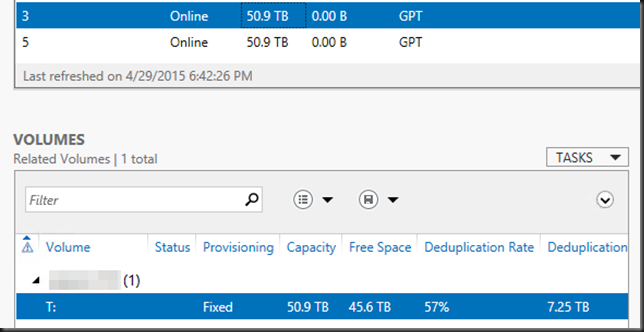

They use what they call “Spaceless full backup technology” which allows multiple full backup files to reside on the same ReFS volume that share the same physical data blocks. As a result they need less storage capacity which can reduce or eliminate the need (and cost) of deduplication appliances whilst leveraging commodity storage.
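To make the idea of files sharing physical blocks a bit more tangible, here is a minimal toy model in Python. It is only a sketch of the concept, not how ReFS or Veeam implement it: the `ToyVolume` class, its methods and the file names are all hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyVolume:
    """Toy model of a volume whose files are lists of block IDs (not real ReFS)."""
    blocks: dict = field(default_factory=dict)    # block ID -> data
    refcount: dict = field(default_factory=dict)  # block ID -> number of references
    files: dict = field(default_factory=dict)     # file name -> list of block IDs
    next_id: int = 0

    def write_file(self, name, chunks):
        """Store new data: every chunk gets its own physical block."""
        ids = []
        for chunk in chunks:
            block_id = self.next_id
            self.next_id += 1
            self.blocks[block_id] = chunk
            self.refcount[block_id] = 1
            ids.append(block_id)
        self.files[name] = ids

    def clone_file(self, name, source_block_ids):
        """Build a new file purely from references to existing blocks."""
        for block_id in source_block_ids:
            self.refcount[block_id] += 1
        self.files[name] = list(source_block_ids)

    def physical_blocks(self):
        return len(self.blocks)


volume = ToyVolume()
volume.write_file("full.vbk", [b"A", b"B", b"C"])
volume.write_file("inc1.vib", [b"B2"])  # the second block changed

# A synthetic full is the latest version of each block, referenced, not copied.
full_ids = volume.files["full.vbk"]
inc_ids = volume.files["inc1.vib"]
synthetic_ids = [full_ids[0], inc_ids[0], full_ids[2]]

before = volume.physical_blocks()
volume.clone_file("synthetic_full.vbk", synthetic_ids)
after = volume.physical_blocks()

print(before, after)  # 4 4
```

In this toy model the new full backup file shows up on the volume without a single extra block being written; only the reference counts go up.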

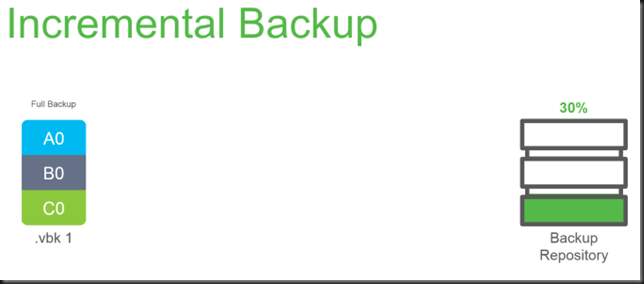

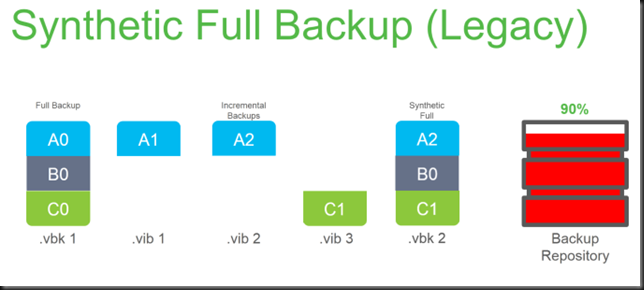

Let’s see how this is done. A “legacy” full backup is created and consumes 30% of the storage capacity. Then we make incremental backups.

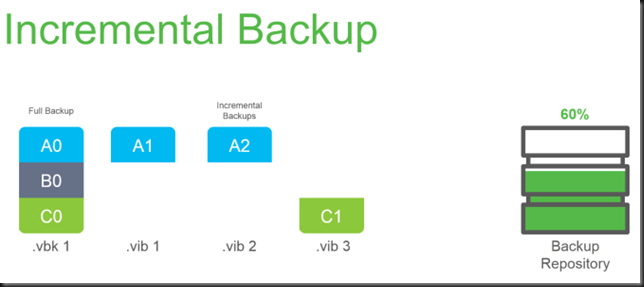

Three incremental backups each add a 10% delta, so the needed backup storage capacity grows to 30% + 3 * 10% = 60%.

We then create a synthetic full backup, and the copies of the data require another 30% of space, for a total of 90%.

Now let’s compare this to v9.5 leveraging a Windows Server 2016 ReFS formatted backup target repository. Instead of copying data, ReFS references already existing data blocks for the new file. This saves on IO, space and time!
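The arithmetic of this comparison fits in a few lines of Python. The percentages are the ones from the walkthrough; the ReFS total rests on my assumption that the synthetic full adds no new data blocks at all and that metadata overhead can be ignored, so treat it as a rough sketch rather than a sizing formula.

```python
FULL = 30        # percent of repository capacity used by one full backup
INCREMENT = 10   # percent added by each incremental backup
INCREMENTS = 3

# Full backup plus three incrementals, identical in both scenarios.
chain = FULL + INCREMENTS * INCREMENT

# Legacy: the synthetic full is a physical copy of the data.
legacy_total = chain + FULL

# ReFS: the synthetic full only references existing blocks
# (assumption: zero new data blocks, metadata ignored).
refs_total = chain + 0

print(chain, legacy_total, refs_total)  # 60 90 60
```

Under these assumptions the repository ends up at 60% instead of 90% after the same backup chain.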

Is this safe? What if those data blocks that are referenced multiple times get corrupted? Well, Veeam already has protection against that in place! But it goes the extra mile, as ReFS has the capabilities to protect against that itself. Without those, its power would also become its biggest weakness.

Veeam’s data integrity streams integration leverages the ReFS data integrity scanner and, when used in combination with Storage Spaces, even proactive error correction. This protects backup files from bit rot and allows for more reliable forever-incremental archiving. It helps make the spaceless full backup technology trustworthy & safe alongside the health checking & error fixing capabilities already available in Veeam Backup & Replication.
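The principle behind checksum based detection and repair from a redundant copy can be sketched in Python as well. This is a conceptual illustration only: `checksum` and `read_with_repair` are hypothetical helpers, CRC32 is just a stand-in and says nothing about the checksum ReFS actually uses, and the two-element list is a crude substitute for a Storage Spaces mirror.

```python
import zlib


def checksum(data: bytes) -> int:
    # CRC32 is only a stand-in; it is not a claim about what ReFS uses.
    return zlib.crc32(data)


def read_with_repair(copies: list, expected: int) -> bytes:
    """Return a copy that matches the stored checksum and heal the bad ones."""
    good = next((c for c in copies if checksum(c) == expected), None)
    if good is None:
        raise IOError("every copy failed its checksum, nothing to repair from")
    for index, data in enumerate(copies):
        if checksum(data) != expected:
            copies[index] = good  # overwrite the rotten copy with the good one
    return good


block = b"backup data block"
stored_checksum = checksum(block)

# Two mirrored copies; flip one bit in the first to simulate bit rot.
rotten = bytes([block[0] ^ 0x01]) + block[1:]
mirror = [rotten, block]

data = read_with_repair(mirror, stored_checksum)
print(data == block, mirror[0] == block)  # True True
```

The point of the sketch is that a shared block is only as dangerous as its weakest copy: with a checksum to detect the damage and a second copy to repair from, the read still returns good data.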

Conclusion

I’m impressed by Veeam’s forward-looking and fast adoption of the capabilities of ReFS v3, and I’m testing Backup & Replication v9.5 Beta today in the lab. They have more up their sleeve, by the way, as they are doing some interesting work with PowerShell Direct to make backups ever more resilient in ever more scenarios. More on that later.

Anyone who said Veeam would lose its edge in the world of Hyper-V backups when Microsoft introduced their own native changed block tracking (resilient change tracking) has clearly never dealt with Veeam seriously and professionally. I have, and I’m always happy to chat to them as they have serious technical skills combined with vision and business acumen that make sure they’re leaders in the business of backup. It makes me proud to be a Veeam vanguard and an MVP with a specialization in Hyper-V.