Introduction

The main purpose of this post is, as mentioned in the title, to think. This is not a design or a technical reference. When it comes to designing a virtualization solution for your private cloud there are a lot of components to consider. Storage, networking, CPU and memory all come into play and there is no one size fits all. It all depends on your needs and budget, combined with how good your insight into your future plans & requirements is. This is not an easy task. Virtualizing 40 web servers is very different from virtualizing SQL Server, Exchange or SharePoint. Server virtualization is different from VDI, and VDI itself comes in many different flavors.

- So what workloads are you hosting? Is it a homogeneous or a heterogeneous environment?

- What kind of applications are you supporting? Client-Server, SOA/Web Services, Cloud apps?

- What storage performance & features do you need to support all that?

- What kind of network traffic does this require?

- What does your business demand? Do you know, do they even know? Can you even know in a private cloud environment?

- Do you have one customer or many (multi-tenancy), and how are they alike or different in both IT needs and business requirements?

The needs of true public cloud builders are different from those of people running private clouds in their own data centers, or in a mix of those with infrastructure at a hosting provider. On top of that, an SMB environment is different from a large enterprise, and companies of the same size will differ enormously in their requirements due to the nature of their business.

I’ve written about virtualization and CPU considerations before (NUMA, power save settings for both the OS & 10Gbps network performance). I’ve also written a number of posts about 10Gbps networking and different approaches to introducing it without breaking the bank. In 2012 I intend to blog some more on networking and storage options with Windows 8 and Hyper-V 3.0. But I still need to get my hands on the betas and release candidates of Windows 8 to do so. You’ll notice I don’t talk about InfiniBand. Well, I just don’t circulate in the ecosystems where absolute top notch performance is so important that they can justify and get that kind of budget to throw at those needs.

To set the scene for these blog posts I’ll introduce some considerations around networking options with Hyper-V. There are many features and options in hardware, technologies, protocols and file systems. Even when everything is intended to make life simpler, people might get lost in all the options and choices available.

Windows Server 8 NIC features – The Alphabet Soup

- Data Center Bridging (DCB)

- Receive Segment Coalescing (RSC)

- Receive Side Scaling (RSS)

- Remote Direct Memory Access (RDMA)

- Single Root I/O Virtualization (SR-IOV)

- Virtual Machine Queue (VMQ)

- IPsec Task Offload (IPsecTO)

A lot of this stuff has to do with converged networks. These offer a lot of flexibility and the potential for cost savings along the way. But convergence & cost savings are not a goal. They are means to an end. Perhaps you can have better, cheaper and more effective solutions leveraging your existing network infrastructure by adding some 10Gbps switches & NICs where they provide the best bang for the buck. Chances are you don’t need to throw it all out and do a forklift replacement. Use what you need from the options and features. Be smart about it. Remember my post on A Fool With A Tool Is Still A Fool, don’t be that guy!

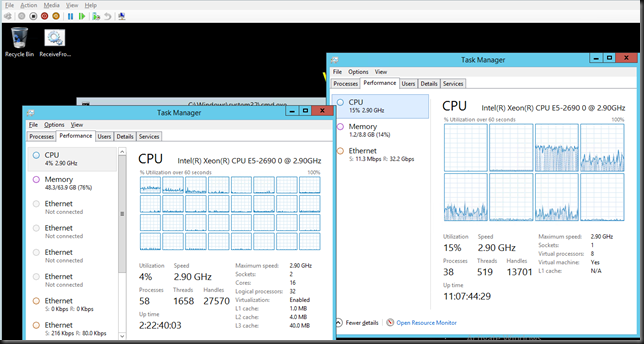

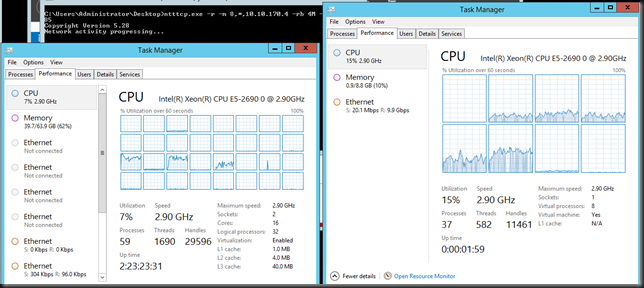

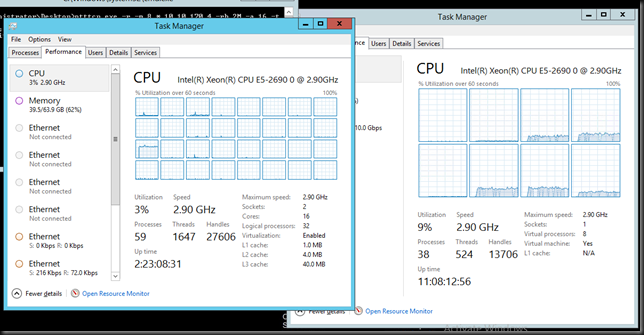

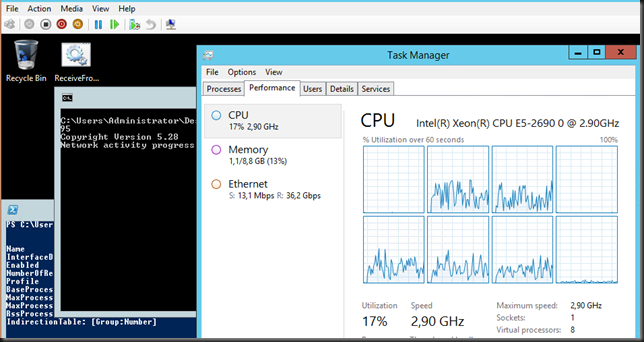

Now let’s focus on a couple of the features here that have to do with network I/O performance and not as much with convergence or QoS. As an example of this I like to use Live Migration of virtual machines over 10Gbps. Right now with one 10Gbps NIC I can use 75% of the bandwidth of a dedicated NIC for Live Migration. When running 20 or more virtual machines per host with 4GB to 8GB of memory each, and with Windows 8 giving me multiple concurrent Live Migrations, I can really use that bandwidth. Why would I want to cut it down to 2 or 3Gbps in that case? Remember the goal. All the features and concepts are just tools, means to an end. Think about what you need.
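To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It only divides memory size by usable bandwidth. It ignores dirty page re-copies, protocol overhead and anything else a real Live Migration does, and it assumes decimal gigabytes. The function names are made up for this illustration; the 20 VMs, 8GB, 75% and 2Gbps figures are the ones mentioned above.

```python
def transfer_seconds(memory_gb: float, link_gbps: float, usable_fraction: float) -> float:
    """Seconds to copy memory_gb gigabytes once over the link (no re-copies, no overhead)."""
    memory_gbit = memory_gb * 8  # gigabytes -> gigabits
    return memory_gbit / (link_gbps * usable_fraction)


def host_drain_seconds(vm_count: int, memory_gb: float,
                       link_gbps: float, usable_fraction: float) -> float:
    """Time to move every VM off a host. Concurrent migrations share the same
    link, so in this simple model the total equals the sequential total."""
    return vm_count * transfer_seconds(memory_gb, link_gbps, usable_fraction)


# 20 VMs of 8GB each over a dedicated 10Gbps NIC at 75% usable bandwidth
dedicated = host_drain_seconds(20, 8, 10, 0.75)
# The same VMs over a 2Gbps slice, optimistically assumed to be fully usable
sliced = host_drain_seconds(20, 8, 2, 1.0)

print(f"dedicated 10Gbps at 75%: {dedicated / 60:.1f} minutes")
print(f"2Gbps slice:             {sliced / 60:.1f} minutes")
```

In this simplified model, draining the host takes just under 3 minutes on the dedicated NIC and more than 10 minutes on the 2Gbps slice. Real numbers will differ, but the ratio is the point.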

But wait, in Windows 8 we have some new tricks up our sleeve. Let’s team two 10Gbps NICs, put all traffic over that team, then divide the bandwidth up and use QoS to assure Live Migration gets 10Gbps when needed, but without taking it away from other network I/O when it’s not needed. That’s nice! Sounds rather cool, doesn’t it, and I certainly see a use for it. It might not be right if you can’t afford to lose that bandwidth when Live Migration kicks in, but if you can … more power & cost savings to you. But there are other reasons not to put everything on one NIC or team.
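The idea of a guaranteed minimum that is only claimed when needed can be sketched as a toy model. This is not how the Windows QoS scheduler is implemented; it is a simplified allocator written for this post, and the traffic class names and guarantee values are assumptions chosen for illustration. Each class first gets its demand up to its guarantee, and any spare capacity is then shared among the classes that still want more, in proportion to their guarantees.

```python
def allocate(capacity, classes):
    """classes maps name -> (guaranteed_gbps, demand_gbps); returns name -> allocated Gbps.
    Assumes every guarantee is positive and the guarantees fit within capacity."""
    assert all(g > 0 for g, _ in classes.values())
    assert sum(g for g, _ in classes.values()) <= capacity

    # Step 1: every class gets what it asks for, up to its guaranteed minimum.
    alloc = {name: min(g, d) for name, (g, d) in classes.items()}
    spare = capacity - sum(alloc.values())

    # Step 2: share the spare among classes that still want more,
    # weighted by their guarantee, until nothing is left or nobody is hungry.
    while spare > 1e-9:
        hungry = {n: classes[n][0] for n in classes
                  if classes[n][1] - alloc[n] > 1e-9}
        if not hungry:
            break
        total_weight = sum(hungry.values())
        handed_out = 0.0
        for name, weight in hungry.items():
            share = spare * weight / total_weight
            give = min(share, classes[name][1] - alloc[name])
            alloc[name] += give
            handed_out += give
        spare -= handed_out
    return alloc


TEAM_GBPS = 20  # two teamed 10Gbps NICs

# No Live Migration running: VM traffic may use far more than its guarantee.
idle = allocate(TEAM_GBPS, {"live_migration": (10, 0), "vm": (8, 15), "mgmt": (2, 1)})
# Live Migration kicks in: it gets at least its 10Gbps, VM traffic is squeezed.
busy = allocate(TEAM_GBPS, {"live_migration": (10, 20), "vm": (8, 15), "mgmt": (2, 1)})

print(idle)
print(busy)
```

Comparing the two results shows the trade-off discussed above: the VM traffic enjoys the extra bandwidth while Live Migration is idle, and loses most of it the moment Live Migration needs its share.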

RSS, VMQ, SR-IOV

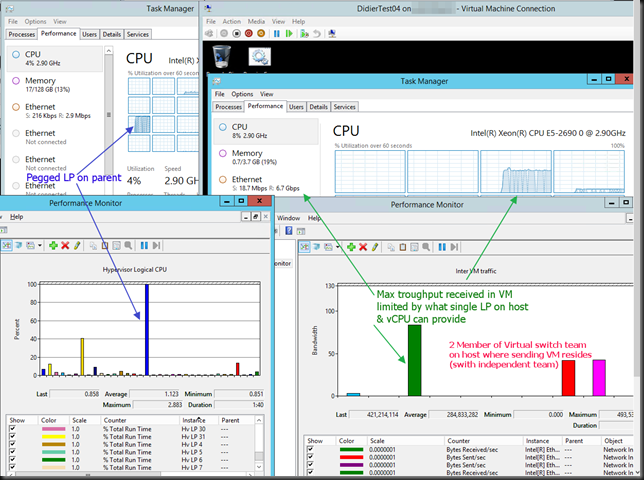

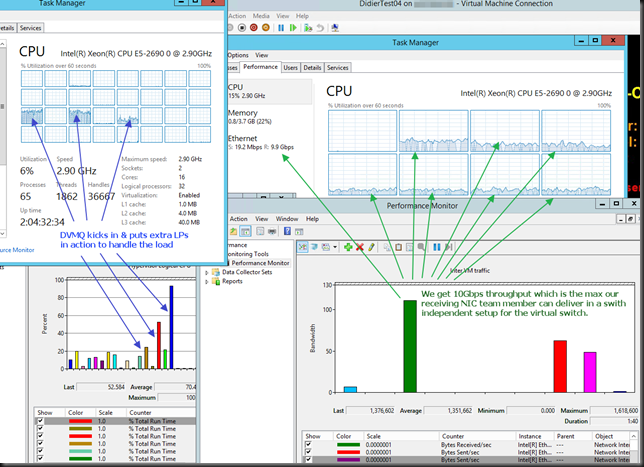

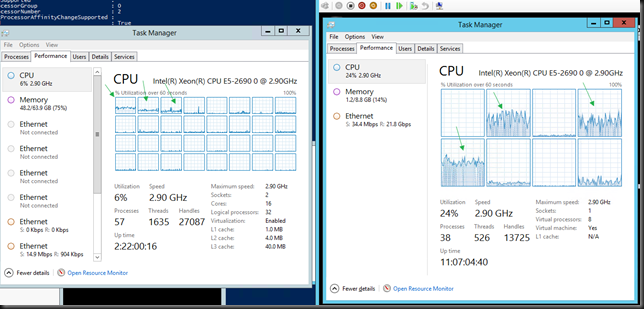

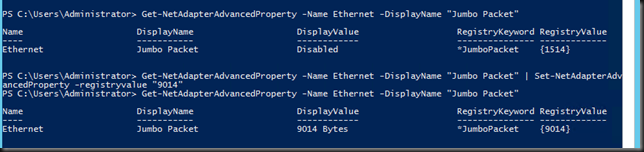

One thing all these have in common is that they are used to reduce the CPU load / bottleneck on the host and allow you to optimize the network I/O and bandwidth usage of your expensive 10Gbps NICs. Both avoiding a CPU bottleneck and optimizing the use of the available bandwidth mean you get more out of your servers. That translates into not having to buy more of them to get the same workload done.
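To make the CPU angle a bit more concrete, here is a rough Python sketch of the idea behind RSS: the NIC hashes each connection's addresses and ports and uses the result to look up a CPU core in an indirection table, so different connections are processed on different cores while packets of one connection stay on the same core. Real NICs typically use a Toeplitz hash in hardware; `zlib.crc32` is only a stand-in here, and the table size, core count and sample flows are assumptions for illustration.

```python
import zlib
from collections import Counter


def pick_core(flow, indirection_table):
    """Map a (src_ip, src_port, dst_ip, dst_port) flow to a CPU core.
    crc32 stands in for the hardware hash a real NIC would use."""
    src_ip, src_port, dst_ip, dst_port = flow
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    flow_hash = zlib.crc32(key)
    return indirection_table[flow_hash % len(indirection_table)]


# 16 table slots spread over 4 cores (hypothetical sizes)
table = [slot % 4 for slot in range(16)]

# 1000 made-up client connections to one file server port
flows = [(f"10.0.0.{i % 50 + 1}", 49152 + i, "10.0.1.10", 445) for i in range(1000)]

load = Counter(pick_core(flow, table) for flow in flows)
for core in sorted(load):
    print(f"core {core}: {load[core]} flows")
```

Without this kind of spreading, all receive processing for the NIC would land on a single core, which is exactly the bottleneck these features try to avoid.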

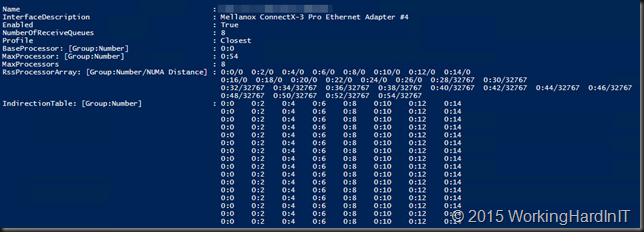

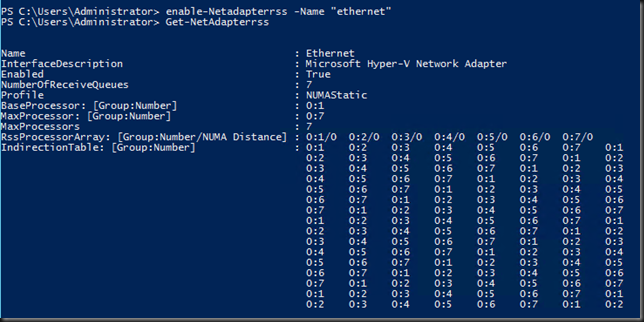

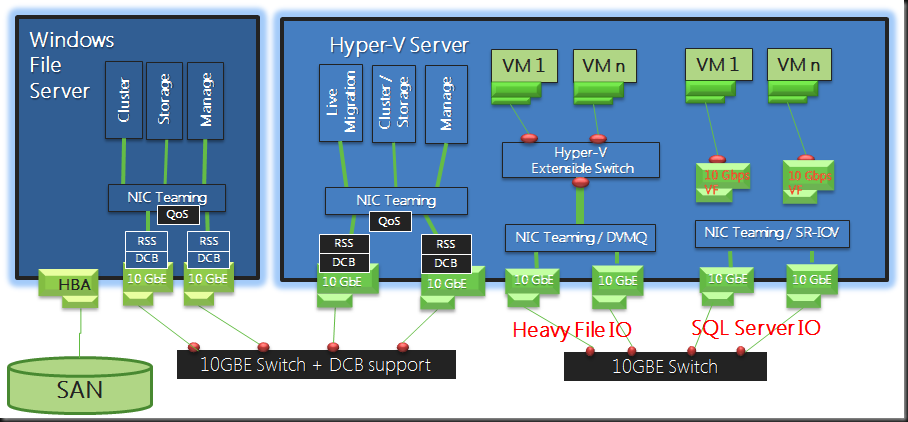

RSS is targeted at the host network traffic. VMQ and SR-IOV are targeted at the virtual machine network traffic, but in the end they both result in the same benefits as stated above. RSS & VMQ integrate well with other advanced Windows features. VMQ, for example, can be used with the extensible Hyper-V switch, while RSS can be combined with QoS & DCB in storage & cluster host networking scenarios. So these give you a lot of options and flexibility. SR-IOV and RDMA are more focused on raw performance and don’t integrate so well with the more advanced features for flexibility & scalability. I’ll talk some more about this in future blog posts.

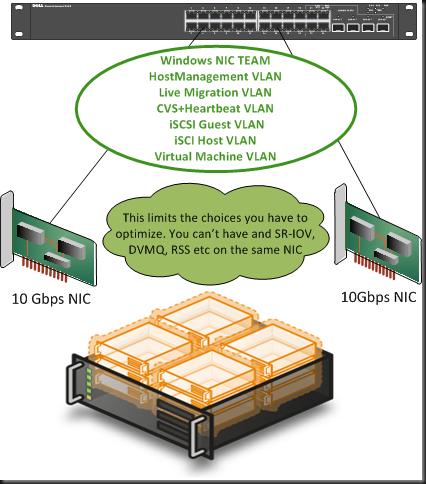

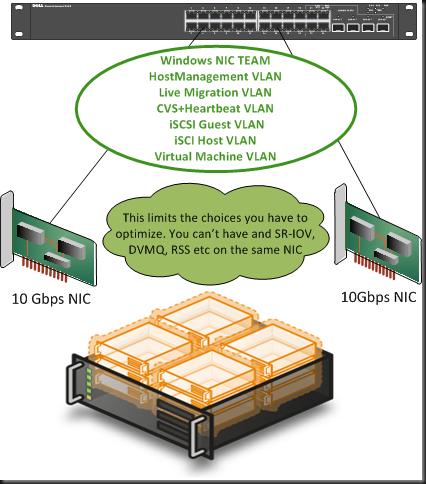

Now with all these features that have their own requirements and compatibilities you might want to reconsider putting all traffic over one pair of teamed NICs. You can’t optimize them all in such a scenario and that might hurt you. Perhaps you’ll be fine, perhaps you won’t.

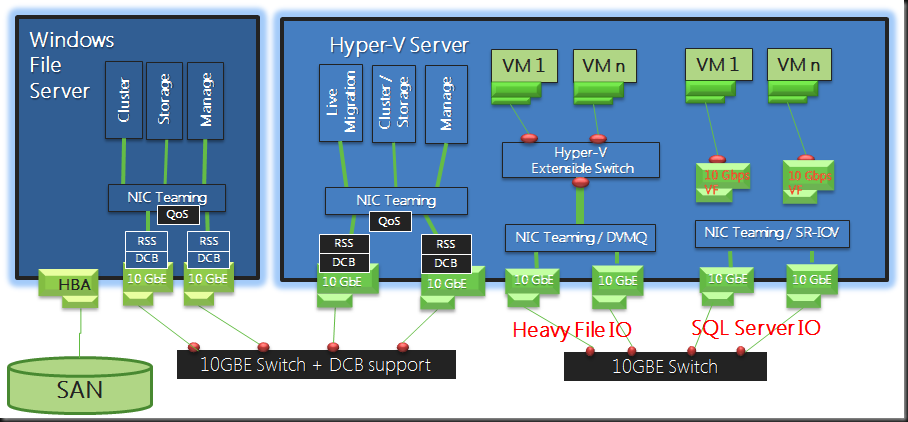

So what to use where and when depends on how many NICs you’ll use in your servers and for what purpose. For example, even in a private cloud for lightweight virtual machines running web services you might want to separate the host management & cluster traffic from the virtual machine network traffic. You see, RSS & VMQ are mutually exclusive on a given NIC, so with separate NICs you can use RSS for the host/cluster traffic and DVMQ (Dynamic VMQ) for the virtual machine network. Now if you need redundancy you might see that you’ll already use 2 x 2 NICs with Windows 8 NIC teaming in combination with two switches to avoid a single point of failure. Do you really need that bandwidth for the guest servers? Perhaps not, but you might find that it helps improve density because of better host & NIC performance, helping you avoid the cost of buying extra servers. If you virtualize SQL Servers you’d be even more interested in all this. The picture is just an illustration, meant to get you to think; it’s not a design.
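As a small thinking aid, the "what goes where" question can be written down as a plan and checked against the compatibility rules you know. The sketch below is only that, a sketch: the single rule in it is the RSS/VMQ exclusion mentioned above, and the team names and the checker itself are hypothetical, not part of any Windows tooling.

```python
from itertools import combinations

# Only the rule stated in this post; extend it as compatibility matrices appear.
MUTUALLY_EXCLUSIVE = {frozenset({"RSS", "VMQ"})}


def conflicts(plan):
    """plan maps a NIC or team name to the set of features enabled on it.
    Returns a list of (nic, feature_a, feature_b) for every rule violation."""
    found = []
    for nic, features in plan.items():
        for a, b in combinations(sorted(features), 2):
            if frozenset({a, b}) in MUTUALLY_EXCLUSIVE:
                found.append((nic, a, b))
    return found


# Separate teams for host/cluster traffic and for virtual machine traffic
separated = {"host-team": {"RSS", "QoS"}, "vm-team": {"VMQ"}}
# Everything converged onto one team
converged = {"converged-team": {"RSS", "VMQ", "QoS"}}

print(conflicts(separated))  # no conflicts expected
print(conflicts(converged))  # flags RSS together with VMQ
```

It is trivial, but writing the plan down like this forces you to decide per NIC what you are optimizing for.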

I’m sure a lot of matrices will be produced showing what features are compatible under what conditions, perhaps even with some decision charts to help you decide what to use where and when.