Let’s play a bit with a Windows Server 2012 R2 Hyper-V cluster with 2*10Gbps Intel X520 NICs, teamed in switch independent mode with dynamic load balancing, which is optimal for DVMQ. We enabled Jumbo frames on these NICs (and on the switches, of course). That team is used to create a virtual switch for consumption by the virtual machines. The switches are 2*DELL PowerConnect 8132F, so we have full fault tolerance. The important thing to note is that this is quality commodity hardware that we can leverage for great results.

Now we’ll compare 2 scenarios. In both tests a sending VM will try to saturate a receiving VM’s network bandwidth. Due to how this NIC teaming setup works, that’s about 10Gbps in a two-member 2*10Gbps team. We work around this by leaving both VMs on the same host, so the traffic doesn’t need to pass across the wire. The VMs have VMQ & vRSS enabled, and the host team members have VMQ enabled.

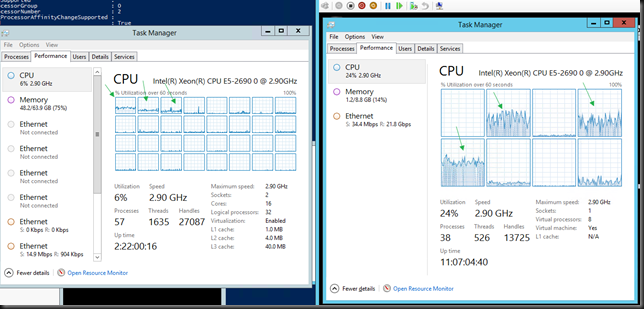

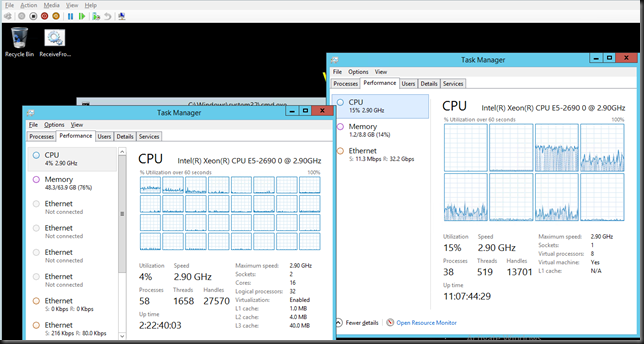

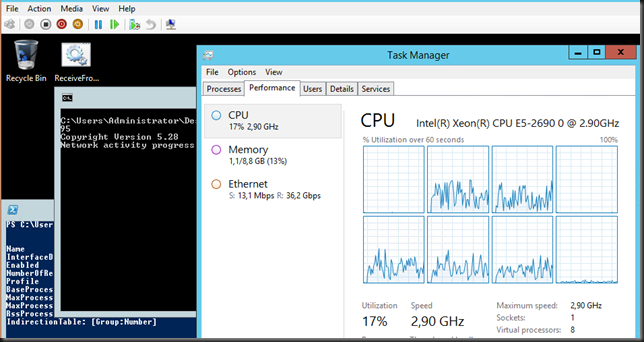

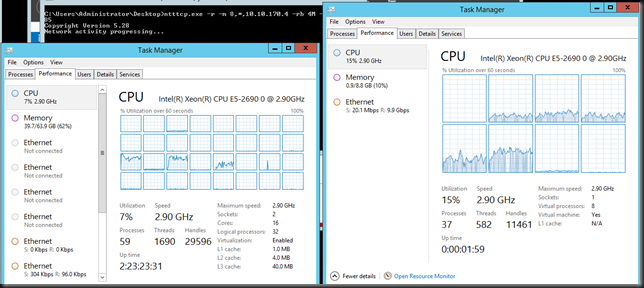

Jumbo frames disabled inside the VM (Both VMs on same host)

Without Jumbo frames enabled in the guest VM, and all other things being equal, the very best we can achieve non-sustained is 21Gbps (+/- 17Gbps on average) of receiving traffic in a VM. Not bad, not bad at all.

In the picture you can see the host with DVMQ doing its job on the left while vRSS is at work in the VM. Pretty clear.

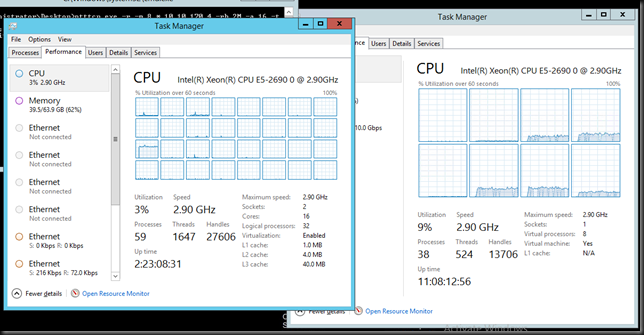

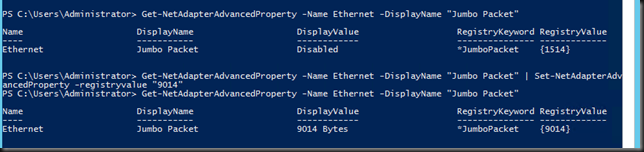

Jumbo frames enabled inside the VM

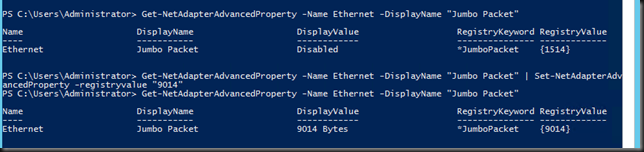

Now, let’s enable Jumbo frames in the VM. Fire up PowerShell or use the GUI.

Don’t forget to do this on both the sending and the receiving VM. Here we get +/- 30Gbps of receiving traffic inside of the VM. A nice improvement, isn’t it? Not just that, but we consume less CPU resources as well! Sweet.
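To get a feel for why larger frames cost fewer CPU cycles, here is a rough back-of-the-envelope sketch of how many full-sized TCP segments per second each scenario implies. The MTU values (1500 and 9000 bytes) and the 40 bytes of IPv4 + TCP headers are my assumptions, not measurements, and offloads such as LSO and RSC change what the stack really handles per packet, so treat this as an order-of-magnitude illustration only.

```python
# Assumed values, not taken from the measurements: a 1500-byte standard MTU,
# a 9000-byte jumbo MTU, and 40 bytes of IPv4 + TCP headers without options.
IP_TCP_HEADERS = 40


def packets_per_second(throughput_gbps: float, mtu: int) -> float:
    """Full-sized TCP segments per second needed to carry the given payload rate."""
    payload_bytes = mtu - IP_TCP_HEADERS
    return throughput_gbps * 1e9 / 8 / payload_bytes


standard = packets_per_second(17, 1500)  # average seen without Jumbo frames
jumbo = packets_per_second(30, 9000)     # average seen with Jumbo frames

print(f"MTU 1500 at 17Gbps: {standard:,.0f} packets/s")
print(f"MTU 9000 at 30Gbps: {jumbo:,.0f} packets/s")
print(f"Packet rate ratio:  {standard / jumbo:.1f}x")
```

Under these assumptions the VM moves more data while handling far fewer packets, which fits with the lower CPU usage.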

Useful Power at your fingertips or just showing off?

vRSS & DVMQ are among the many scalability & performance improvements in Windows Server 2012 R2. And yes, Jumbo frames inside the VM do make a difference, but in a 10Gbps environment it’s not that “in your face” visible. The 10Gbps limit of a single NIC team member is your bottleneck there. But it DOES help to reduce CPU cycles in that case. Just look at the two screenshots below.

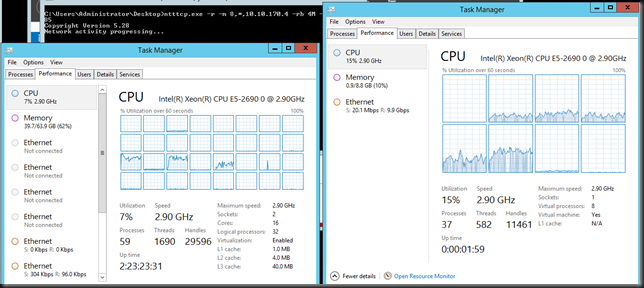

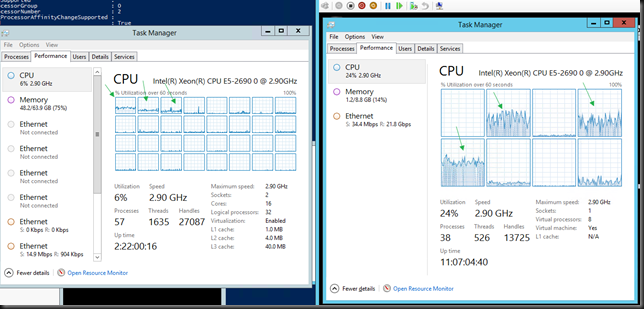

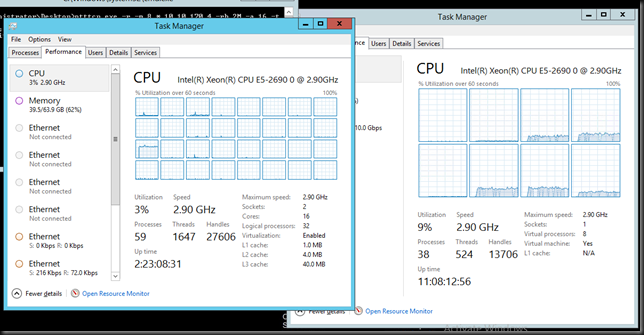

No Jumbo frames in VM (sending & receiving VMs on different hosts)

We’re consuming 7% CPU resources on the host and 15% in the VM.

Jumbo Frames in VM (sending & receiving VMs on different hosts)

We’ve dropped down to 3% on the host and to 9% inside the VM. All bits help I say!
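A quick sketch of the arithmetic behind this, again under my own assumptions (1500 vs 9000-byte MTU, 40 bytes of IPv4 + TCP headers, 38 bytes of Ethernet overhead per frame on the wire). It estimates the payload ceiling of a single 10Gbps team member for both frame sizes and works out the relative CPU drop from the percentages quoted above.

```python
# Assumptions (not measured here): 1500 vs 9000-byte MTU, IPv4 + TCP without
# options (40 bytes), and 38 bytes of Ethernet overhead per frame on the wire
# (preamble 8 + header 14 + FCS 4 + inter-frame gap 12).
LINK_GBPS = 10
IP_TCP_HEADERS = 40
ETHERNET_OVERHEAD = 38


def tcp_goodput_gbps(mtu: int) -> float:
    """Upper bound on TCP payload throughput over one 10Gbps team member."""
    return LINK_GBPS * (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


def relative_drop(before: float, after: float) -> float:
    """Relative reduction, in percent, between two CPU readings."""
    return (before - after) / before * 100


for mtu in (1500, 9000):
    print(f"MTU {mtu}: at most {tcp_goodput_gbps(mtu):.2f}Gbps of payload")

# CPU percentages quoted above for the cross-host test.
print(f"Host CPU: {relative_drop(7, 3):.0f}% lower")
print(f"VM CPU:   {relative_drop(15, 9):.0f}% lower")
```

So on the wire the throughput ceiling only moves by a few percent, while the CPU readings drop by roughly half on the host and by about 40% in the VM.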

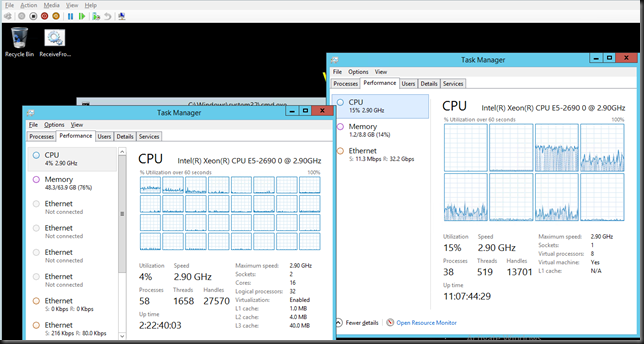

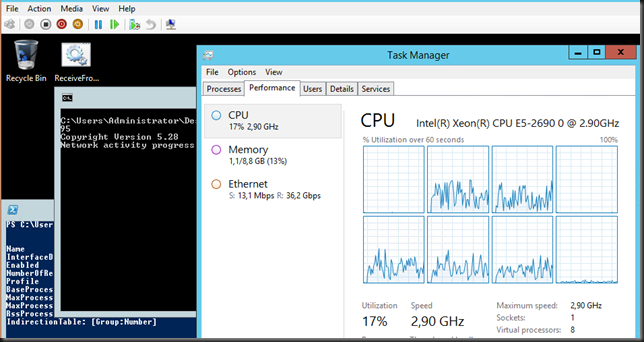

How far can we push this?

Again, if you take the NIC team bottleneck out of the way you can see some serious differences. Take a look at the screenshot below: that’s 36.2Gbps inside of a VM, courtesy of vRSS, during some other experiments. Tallyho!

So let’s face it, I guess we’ll need some faster memory (DDR4) and multiple 40Gbps/100Gbps cards to see what the limits of Windows Server 2012 R2 are, or to find out if we have reached them. Right now the operating system is giving hardware people a run for their money.

Also note that if you have a number of VMs doing a lot of network IO, you’ll be using quite a number of CPU cycles. While vRSS & DVMQ make this scale, you might want to consider leveraging SMB Direct for the various flavors of live migration. This will definitely help you out on that front, as the NIC will do the heavy lifting.

Reality Check

But perhaps we also need a little reality check. While 100Gbps and DDR4 are very nice, you might not need them for your current needs. When the environment is built right you’ll find that your apps are usually the limiting factor before the hardware, let alone Windows Server 2012 R2. So why is knowing this important? Well, first, I verify Microsoft’s claims so I can talk from experience and not just from what I read in a Microsoft presentation. Second, you can trust that your investment in Windows Server 2012 R2 is going to carry you long and far. It’s future proofed and that’s good for you, both when your needs grow exponentially and for the longevity of your environment. Third, we can leverage this to virtualize high-throughput environments and get the best possible results and ROI.

Also, please, please test & find out. Verify what settings suit your environment best and don’t just blindly enable stuff. Good luck!