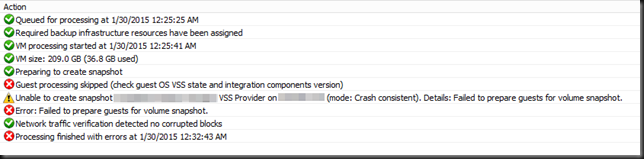

So you migrate over 200 VMs from a previous version of Hyper-V to a fully patched Windows Server 2012 R2 and life looks great, full of possibilities etc. However, one thing keeps coming back to your e-mail inbox: a couple of Windows Server 2003 R2 SP2 (x64) and Windows XP SP3 (x86) virtual machines. Their Veeam backups consistently fail. Digging into that, the cause is pretty obvious … the error tells you where the problem lies.

“Ah, they forgot to upgrade the IS components,” you might conclude. Let’s see if we can try an upgrade. Yes, the updates are offered and you run them … it looks to be going well too. But then you’re greeted by “An error has occurred: One of the update processes returned error code 1603”.

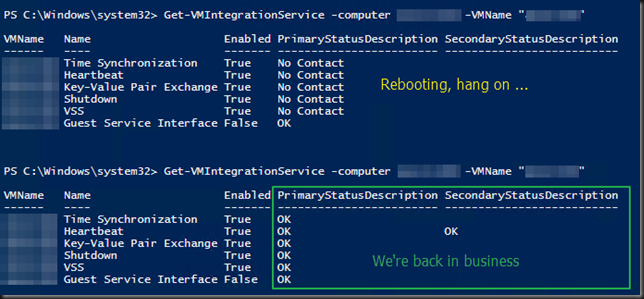

Darn! Now you can go and do all kinds of stuff (registry, Explorer, versions, security settings …) to find out what part of the integration services is messed up, since most day-to-day operations work fine. Or you can be smart and leverage the power of PowerShell. It’s easy to find out what is not right via a simple cmdlet: Get-VMIntegrationService.
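
For reference, here is a minimal sketch of that check, run from an elevated PowerShell session on the Hyper-V host. The VM name `W2K3-VM01` is a placeholder I made up, so substitute one of your own guests. The property names are the ones I expect the Hyper-V module on Windows Server 2012 R2 to expose; treat the exact status text as something to verify in your environment.

```powershell
# Hypothetical VM name, replace it with one of your affected guests
$vmName = 'W2K3-VM01'

# List every integration service of the VM with its enabled state
# and the status it reports back to the host
Get-VMIntegrationService -VMName $vmName |
    Format-Table Name, Enabled, PrimaryStatusDescription, SecondaryStatusDescription -AutoSize
```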

Well, that’s obvious. So how do we fix this? I uninstalled the IS components, rebooted the VM and reinstalled the IS components … which requires another reboot. While the VM is rebooting, you can take a peek at the integration services status with Get-VMIntegrationService.
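
If you don’t feel like retyping the cmdlet during the reboot, a small polling loop does the job. This is only a sketch under assumptions: the VM name is a made-up placeholder, and the 10 second interval and 30 iterations are arbitrary values I picked, not anything Hyper-V requires.

```powershell
# Hypothetical VM name, replace it with the guest you are repairing
$vmName = 'W2K3-VM01'

# Poll the integration services every 10 seconds, 30 times at most
# (roughly 5 minutes), so the loop always ends on its own
foreach ($i in 1..30) {
    Write-Host ("Check {0} at {1}" -f $i, (Get-Date -Format 'HH:mm:ss'))

    Get-VMIntegrationService -VMName $vmName |
        Format-Table Name, Enabled, PrimaryStatusDescription -AutoSize |
        Out-Host

    Start-Sleep -Seconds 10
}
```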

That’s it, all is well again and backups run just fine. The lesson learned here is that SCOM was completely happy with the bad situation … and that isn’t good.
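
Since the monitoring didn’t flag it, a quick host-wide sweep can help you spot other guests in the same state. The sketch below assumes that a healthy service reports `OK` in `PrimaryStatusDescription`, which is what I would expect to see but is worth confirming against a known good VM first. It only looks at running VMs, because a VM that is switched off has no status to report.

```powershell
# Look at running VMs only, a stopped VM reports no service status
Get-VM |
    Where-Object { $_.State -eq 'Running' } |
    Get-VMIntegrationService |
    # Keep enabled services whose status is anything other than 'OK'
    # (the 'OK' string is an assumption, verify it on a healthy VM)
    Where-Object { $_.Enabled -and $_.PrimaryStatusDescription -ne 'OK' } |
    Select-Object VMName, Name, PrimaryStatusDescription, SecondaryStatusDescription
```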


So there’s the solution for you, but it’s kind of an “omen” that it happened to three Windows 2003 virtual machines (both x64 and x86). You really need to get off these obsolete operating systems. Staying on them will never improve things, and I guarantee you they will get worse.

See you in the next blog post.