Introduction

What is the lure of having a ransomware fund all about? It’s the idea that just paying is the best way to deal with a ransomware incident. While preventing as many ransomware attacks as possible is great, it will never be 100% effective. Detecting an incident as early as possible is key to minimizing the effects. Yet even with successful and early detection, some data will have been compromised (encrypted). The nature and function of that data will determine the blast radius and the fallout. To recover, the attack needs to be stopped by finding and eliminating the points of infection. Next to that, the proven ability to restore data, and to do so fast, is a key capability when it comes to recovering from a ransomware attack. If you don’t have that ability, you’ll either need to eat the loss or try to pay up.

Dealing with Ransomware step by step

- Prevention is not 100% effective. Don’t bank on it.

- Early detection

- Swift & adequate response

- Quarantine and wipe (nuke from orbit) contaminated systems & data

- See if a free decryption solution is available via the security community or your police service’s cybercrime department

- Restore your data. You must have multiple options. You must have implemented the 3-2-1 rule. But beware, your off-site, air-gapped copy cannot be too old. You need fairly recent backups in there to have a decent RPO that is meaningful to the business.

- Bring data, systems and services back into production.
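The restore step above leans on two checks you can automate: the 3-2-1 rule and the age of the off-site, air-gapped copy compared to your RPO. Below is a minimal sketch of such a check, not a definitive implementation. The `BackupCopy` class, the `check_321` function and all field names are hypothetical and made up for illustration. It assumes the list holds every copy of the data, production copy included, and that you fill it from your own backup inventory.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class BackupCopy:
    """One copy of the data (hypothetical inventory record)."""
    name: str
    media: str          # e.g. "disk", "tape", "object-storage"
    offsite: bool
    air_gapped: bool
    taken_at: datetime


def check_321(copies, now, rpo):
    """Return a list of problems; an empty list means all checks pass."""
    problems = []
    # 3: at least three copies of the data in total
    if len(copies) < 3:
        problems.append("fewer than 3 copies")
    # 2: on at least two different media types
    if len({c.media for c in copies}) < 2:
        problems.append("fewer than 2 media types")
    # 1: at least one copy off-site (and, per the text, air-gapped)
    isolated = [c for c in copies if c.offsite and c.air_gapped]
    if not isolated:
        problems.append("no off-site, air-gapped copy")
    # The most recent isolated copy must still be within the RPO
    elif min(now - c.taken_at for c in isolated) > rpo:
        problems.append("off-site, air-gapped copy is older than the RPO")
    return problems


# Example with made-up data: the vault copy is 30 days old, the RPO is 1 day.
now = datetime(2024, 1, 10, 12, 0)
copies = [
    BackupCopy("production", "disk", False, False, now),
    BackupCopy("local-backup", "disk", False, False, now - timedelta(hours=4)),
    BackupCopy("vault", "tape", True, True, now - timedelta(days=30)),
]
print(check_321(copies, now, rpo=timedelta(days=1)))
# prints ['off-site, air-gapped copy is older than the RPO']
```

A check like this only tells you the copies exist and are recent enough. It does not prove you can actually restore from them, so regular restore tests remain necessary.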

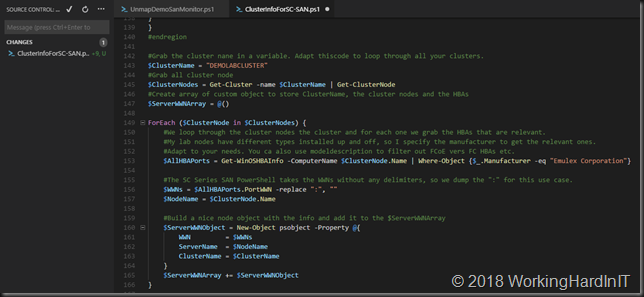

Now make sure you can do this for end user files and server data (images, VMs, databases, configuration files, backups), regardless of where the data lives (on-premises, private, hybrid & public cloud) and what delivery model it comes in (physical, virtual, IaaS, PaaS, SaaS, serverless).

The lure of having a Ransomware Fund (Isn’t it cheaper to pay?)

Now some bean counter might come up with the idea that paying is cheaper (and easier) than prevention, let alone backup & restore capabilities.

Some would even consider it a “cost of doing business”. This is the lure of having a ransomware fund. Ouch. I know many parts of the world are a lot less safe than mine, but this is a slippery slope so dangerous that you will fall down it sooner or later. Let’s look at why that is.

First, let’s not forget about the downtime an attack causes, no matter how you resolve it. So prevention and early detection are key. Your business might not survive even if you pay and get your data back.

Secondly, while I love the idea of prevention and early detection, this doesn’t mean that you can get rid of your backup and restore capabilities. Prevention is a mitigation strategy; it doesn’t eradicate the issue. Early detection minimizes the immediate and secondary damage in many cases, but not in all cases, and it is not perfect either.

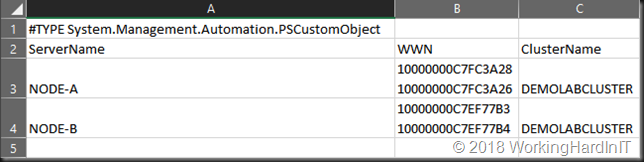

Third, when you pay your ransom, how sure are you that you’ll get your decryption key and be able to access your data? Well, it seems that happens in only about 50% of the cases. Now, some ransomware “businesses” have better customer service than many commercial companies and governments. But that doesn’t mean all of them do, and by definition they are not honest people. Unless you consider ransomware “Encryption As A Service” that helps you with GDPR. I think not. You might think that a smart ransomware player delivers so as not to ruin future revenue streams by acquiring a bad reputation. Probably true, but they too can make mistakes, you can make mistakes, and you can become roadkill for vandals or for criminals who desire, or are hired, to wreak havoc on a certain industry.
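To make that gamble concrete, here is a small back-of-the-envelope sketch. It takes the roughly 50% recovery chance mentioned above as the default. The function name `expected_cost_of_paying`, its parameters and every amount in the example are hypothetical and only there for illustration. It also deliberately ignores downtime, which you suffer either way.

```python
def expected_cost_of_paying(ransom, loss_if_data_stays_lost, p_recover=0.5):
    """Expected direct cost of paying up.

    The ransom is spent no matter what happens; with probability
    (1 - p_recover) the data stays lost anyway and that loss is added.
    """
    if not 0.0 <= p_recover <= 1.0:
        raise ValueError("p_recover must be between 0 and 1")
    return ransom + (1.0 - p_recover) * loss_if_data_stays_lost


# Made-up amounts: a 100,000 ransom and 1,000,000 in damage if the data is gone.
print(expected_cost_of_paying(100_000, 1_000_000))
# prints 600000.0
```

With these invented numbers, the "cheap" option costs six times the ransom on average, which is the figure to compare against what a tested backup and restore capability costs you.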

Finally, you might end up being a repeat victim as you have shown the willingness & ability to pay. Don’t forget that ransomware is not like mobster protection money. It will not protect you from others or the same ones doing it again.

Conclusion

Banking on having an emergency stash of Bitcoin (a ransomware fund) just to pay a ransom isn’t your best option. It might be a last resort when faced with the alternative of bankruptcy, but even then it remains a costly and risky gamble.

I know that for some people in IT, backups seem outdated and from a bygone era, a solution to a problem from yesterday. I kid you not. Well, I advise you to think again and act upon what you conclude.