Introduction

What is so important about my perspective on work and life? Well, nothing at all unless you’re me. As an IT expert I spend way too much time in front of screens. It’s an occupational hazard. It’s not that I don’t talk to other people. I do, quite a lot. I do so for my work but also, a lot of the time, outside of my day job. That’s essential to prevent tunnel vision and echo chambers. But a big part of my time is spent working on projects (design, architecture, implementation). The remainder goes to assisting others, learning, experimenting or troubleshooting. That’s a never-ending story, rinse and repeat. This never-ending cycle can lead to a loss of perspective. Not just the loss of your professional perspective, but of your perspective on work and life as a whole. The rat race goes fast, and in IT everything comes and goes faster than ever. You can work very hard and not get ahead. You might make lots of money but have no time to enjoy it. And it can all be over in a second. You can spend your whole life working for something, just to have it taken away by illness, accident, natural or man-made disaster, or crime. Sobering thoughts, to say the least.

My perspective on work and life

While I love the IT business from silicon to the clouds, I also adore the wonderful scenery that real clouds help create in the great outdoors. That’s why it’s good to take a break and go on a “walkabout”. When looking out over the Grand Canyon, hiking in the valleys of Yellowstone or in Great Basin with its bristlecone pines that are 5,000 years old and older, you can’t help but feel insignificant. Both in the big picture and over time. On a geological scale, what’s a couple of million years anyway, let alone less? So every now and then I get my proverbial behind out of the IT cloud, the data center and the mind-numbing open-plan offices. I go watch wildlife and hike through landscapes formed by many hundreds of millions of years of nature’s forces at work.

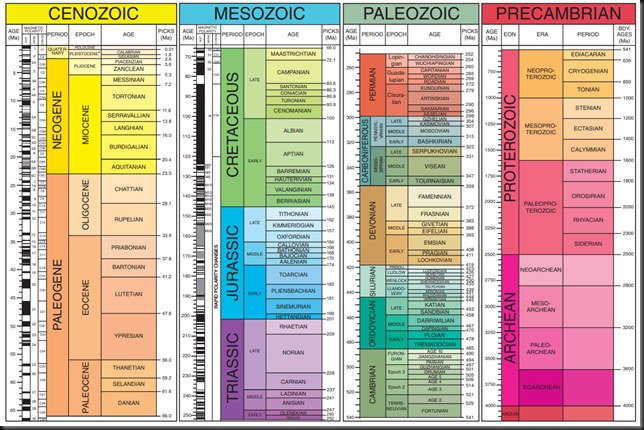

It’s a mindset where the little aid above, the GSA (Geological Society of America) geologic time scale, becomes relevant to appreciate and try to understand the natural beauty around me.

Some advice

Don’t take life and work too seriously. Step out of the “rat race” now and then. Changing my priorities and my perspective on work and life during time off is a good thing. Things sure are a lot different during vacations. I love it. Seeing the Rocky Mountains scenery as you drive to a hike in a comfy Ford Explorer is just magnificent.

From the majestic Rockies to the Pacific Northwest and the Southwest, the views during a road trip are stunning. The hikes are amazing and the serenity is soothing to the soul. I feel great when exploring them. Take a long weekend, go on a road trip, hike around and recharge your batteries. If you’re able to work remotely, do so and explore your local natural resources during your downtime or breaks.

Get over that fear of missing out and realize that “promotions” or work are less important than your own best interest. No one will pay you double when you work twice as hard, or give you back your time. It’s a typical example of diminishing returns. Remember that you don’t get a second life. Live this one. Don’t pointlessly rush through it from birth to death. You won’t be rich or famous (or infamous) enough to be remembered. You’ll probably be forgotten within one or two generations. So enjoy yourself a bit. Even when Rome does burn down during your absence, that’s where new empires can grow.

Image courtesy of @rawpixel at