Introduction

Have you considered when Windows data deduplication and cluster operating system rolling upgrades from Windows Server 2012 R2 to Windows Server 2016 Clusters are discussed we often hear people talk about Hyper-V or Scale Out File Server clusters, sometimes SQL but not very often for a General-Purpose File Share server with continuous availability. Which is the kind I’ve done quite a number of actually.

Being active in an industry that produces and consumes file data in large quantities and sizes we have been early implementers of Windows cluster for General-Purpose File Shares with continuous availability. This provides us the benefits of SMB 3, ODX for both the clients and IT Operations for workloads that are not suited for Scale Out File Server deployments.

As such we have dealt with a number of Windows 2012 R2 GPFS with GA cluster that we wanted to move to Windows Serer 2016. Partially to keep the environment up to date and partially because we want to leverage the new Windows deduplication capabilities that this OS version offers. The SOFS and Hyper clusters that I upgraded didn’t have data deduplication enabled.

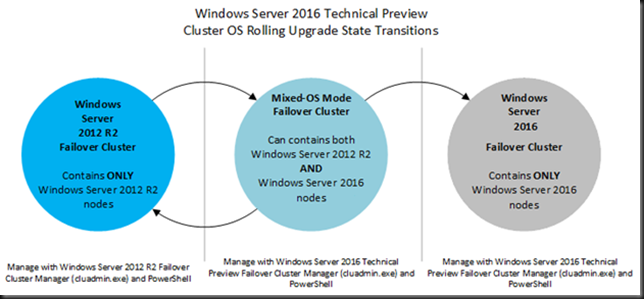

The process to perform this upgrade is straight forward and has been documented well by others as well as by me in regards to issues we saw in the field . We even dove behind the scenes a bit in Cluster Operating System Rolling Upgrade Leaves Traces. I have also presented on this topic in public at conferences around Europe (Ireland, Germany and Belgium) as part of our community contributions. No surprises there.

Test your assumptions

This is a scenario you can perform without any downtime for your clients when all things go well. And normally it should. I have upgraded a couple of Scale Out File Server (SOFS) and General-Purpose File Server (GPFS) cluster with Continuous availability now and those went very well. Just make sure your cluster is perfectly healthy at the start.

Naturally there are some check you need to make that are outside of Microsoft scope:

I’m pretty sure you have good backups for your file data and you should check this works with Windows Server 2016 and how it reacts during the upgrade while the server is in mixed mode. Perhaps you will or won’t be able to run backups or restore data. Check and know this.

Verify your storage solution supports and words with Windows Server 2016. It sounds obvious but I have seen people forget such details.

Another point of attention is any Anti-Virus you might have running on the file server cluster nodes. Verify that this is fully supported on Windows Server 2016. On top of that validate that the Anti-Virus still works well with ODX so you don’t run into surprises there. Don’t assume anything.

Check if the server and it components (HBA, NICs, BIOS, …) its firmware and drivers support Windows Server 2016. Sure, the rolling upgrade allows for some testing before committing but that doesn’t mean you should go ahead blindly into the unknown.

Make sure your nodes are fully patched before and after the upgrade of a cluster node.

As the file server cluster is already leveraging SMB 3 with continuous availability al the prerequisites to make that work are already take care of. If you are upgrading a File server cluster without continuous availability and are planning to start using this, that’s another matter and you’ll need to address any issues. You can do this before or after moving to Windows Server 2016. This means you’d move to a solution before you upgrade or after you have performed the upgrade to Windows Server 2016.

You can take a look at my blogs on this subject from the Windows 2012 R2 time frame such as More Tips On Dealing With Removing Short File Names When Migrating To a SMB3 Transparent Failover File Server Cluster, Migrate an old file server to a transparent failover file server with continuous availability and SMB 3, ODX, Windows Server 2012 R2 & Windows 8.1 perform magic in file sharing for both corporate & branch offices

Data deduplication takes some extra consideration

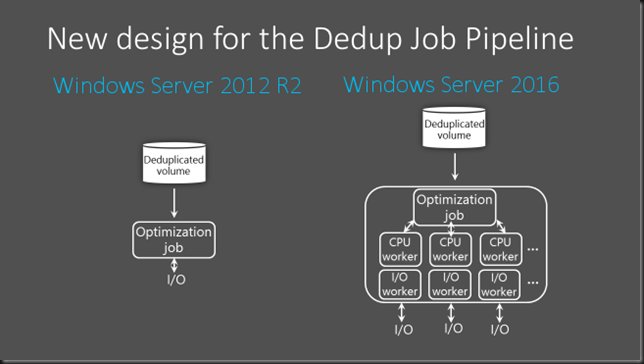

I have blogged before on how Windows Server 2016 Data Deduplication performs and scales better than it did it Windows Server 2012 R2. This also means that it works at least partially different than it did Windows Server 2012 R2. You can see this in some of the updates that came out in regards to a data corruption bug with data deduplication which only affected Windows Server 2016.

Given this difference, what would happen if you fail over a LUN with deduplication enable from Windows Server 2012 to Windows Server 2016 and vice versa? That’s the question I had to consider when combining Windows data deduplication and cluster operating system rolling upgrades for the first time.

Windows Server 2016 is backward compatible and will work just fine with a LUN that from and Windows Server 2012 Server that has Windows data deduplication enabled. The reverse is not the case. Windows Server 2012 R2 is not forward compatible. When dealing with data deduplication in an Operating System Rolling Upgrade scenario I’m extra careful as I cannot guarantee any LUN movement scenario will go well. With a standalone server

Once I have failed over a LUN to Windows Server 2016 node in a mixed cluster I avoid moving it back to a Windows Server 2012 R2 node in that cluster. I only move them between Windows Server 2016 nodes when needed.

I move through the rolling upgrade as fast as I can to minimize the time frame in which a LUN with data deduplication could end up moving from a Windows Server 2016 o a Windows Server 2012 cluster node.

Should I need to reverse the Operating System Rolling Upgrade to end up with a Windows Server 2012 R2 cluster again I’ll make absolutely sure I can restore the data from LUNs with data deduplication from backup and/or a snapshot from a SAN or such. You cannot guarantee that this will work out fine. So be prepared.

For “standard” non deduplicated NTFS LUNs you can fail back if needed. When data deduplication is enabled you should try to avoid that and be prepared to restore data if needed.

Final advise is always the same

Even when you have tested your upgrade scenario and made sure your assumptions are correct you must have a way out. And as always, “One is none, two is one”.

As always during such endeavors you need to make sure that you have a roll back scenario in things do not work out. You must also have a fail back plan for when things turn really bad. For most scenarios has the ability to return to the original situation built in. But things can go wrong badly and Murphy’s Law does apply. So also have the backups and restore verified just in case.

The last thing you need after a failed upgrade is telling your customer or employer “it almost worked” but unfortunately, they’ve lost that 200TB of continuous available data. Better next time doesn’t really cut it.