In the past 12 hours I’ve seen the first mentions of people no longer being able to connect over RDP via an RD Gateway to their clients or servers. I also got a call asking for help with such an issue. The moment I saw the error message it hit home that this was a known and documented issue with CredSSP encryption oracle remediation, one that is both preventable and fixable.

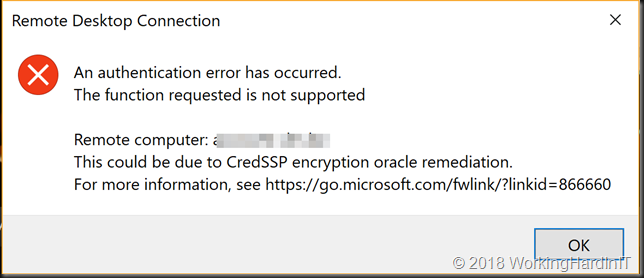

The person trying to connect over RD Gateway gets the following message:

[Window Title]

Remote Desktop Connection

[Content]

An authentication error has occurred.

The function requested is not supported

Remote computer: target.domain.com

This could be due to CredSSP encryption oracle remediation.

For more information, see https://go.microsoft.com/fwlink/?linkid=866660

[OK]

Follow that link and it will tell you all you need to know to fix it and how to avoid it.

A remote code execution vulnerability (CVE-2018-0886) exists in unpatched versions of CredSSP. This issue was addressed by correcting how CredSSP validates requests during the authentication process.

The initial March 13, 2018, release updates the CredSSP authentication protocol and the Remote Desktop clients for all affected platforms.

Mitigation consists of installing the update on all eligible client and server operating systems and then using included Group Policy settings or registry-based equivalents to manage the setting options on the client and server computers. We recommend that administrators apply the policy and set it to “Force updated clients” or “Mitigated” on client and server computers as soon as possible. These changes will require a reboot of the affected systems. Pay close attention to Group Policy or registry settings pairs that result in “Blocked” interactions between clients and servers in the compatibility table later in this article.

April 17, 2018:

The Remote Desktop Client (RDP) update in KB 4093120 will enhance the error message that is presented when an updated client fails to connect to a server that has not been updated.

May 8, 2018:

An update changes the default setting from Vulnerable to Mitigated (KB4103723 for W2K16 servers and KB4103727 for Windows 10 clients). Don’t forget the vulnerability also exists for W2K12(R2) and lower, as well as the equivalent clients.

The key here is that the May updates change the default for the new policy setting from Vulnerable to Mitigated.

Microsoft is releasing new Windows security updates to address this CVE on May 8, 2018. The updates released in March did not enforce the new version of the Credential Security Support Provider protocol. These security updates do make the new version mandatory. For more information see “CredSSP updates for CVE-2018-0886” located at https://support.microsoft.com/en-us/help/4093492.

This can result in mismatches between systems at different patch levels, which is why it’s now more of a widespread issue. Looking at the table in the article and the documented errors, it’s clear enough there was a mismatch. It was also clear how to fix it: patch all systems and make sure the settings are consistent. Use GPO or edit the registry settings to do so. Automation is key here. Uninstalling the patch works but is not a good idea. This vulnerability is serious.
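To make the mismatch concrete, here is a minimal Python sketch of how I read the compatibility table in the Microsoft article. The function name `connection_allowed` and the use of `None` for "update not installed" are my own conventions for illustration, not anything Microsoft ships, and the table in the article remains the authority if the two ever disagree.

```python
from typing import Optional

# AllowEncryptionOracle values as named in the Microsoft article.
# UNPATCHED (None) is a convention of this sketch for "update not installed".
UNPATCHED = None
FORCE_UPDATED_CLIENTS = 0
MITIGATED = 1
VULNERABLE = 2

_VALID = (UNPATCHED, FORCE_UPDATED_CLIENTS, MITIGATED, VULNERABLE)


def connection_allowed(client: Optional[int], server: Optional[int]) -> bool:
    """Return True if this client/server pairing is expected to connect."""
    if client not in _VALID or server not in _VALID:
        raise ValueError("setting must be None, 0, 1 or 2")
    # A patched client on 0 or 1 refuses to fall back to an unpatched server.
    if server is UNPATCHED and client in (FORCE_UPDATED_CLIENTS, MITIGATED):
        return False
    # A patched server on 0 rejects clients that lack the update.
    if client is UNPATCHED and server == FORCE_UPDATED_CLIENTS:
        return False
    # Every other pairing connects (securely or not, depending on the settings).
    return True
```

The case that bit people after the May updates is `connection_allowed(MITIGATED, UNPATCHED)`, which returns `False`: a client that picked up the new Mitigated default trying to reach a server that never got the March update.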

Now Microsoft did warn about this change. You can even read about it on the PFE blog https://blogs.technet.microsoft.com/askpfeplat/tag/encryption-oracle-remediation/. Nevertheless, many people seem to have been bitten by this one. I know it’s hard to keep up with everything that is moving at the speed of light in IT, but this is one I was on top of, because the fix is for a remote code execution vulnerability that affects RDS. That’s a big deal and not one I was willing to let slide. You need to roll out the updates, configure your policy and make sure you’re secured. The alternative (rolling back the updates, allowing vulnerable connections) is not acceptable: it leaves you vulnerable to a known and fixable exploit. TAKE YOUR MEDICINE! Read the links above for detailed guidance on how to do this. Set your policy on both sides to Mitigated. You don’t need to force updated clients to fix the issue this way, and you can patch your servers first, followed by your clients. Do note the tips given on doing this in the PFE blog:

Note: Ensure that you update the Group Policy Central Store (Or if not using a Central Store, use a device with the patch applied when editing Group Policy) with the latest CredSSP.admx and CredSSP.adml. These files will contain the latest copy of the edit configuration settings for these settings, as seen below.

Registry

Path: HKLM\Software\Microsoft\Windows\CurrentVersion\Policies\System\CredSSP\Parameters

Value: AllowEncryptionOracle

Data type: DWORD

Reboot required: Yes
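If you want to check what a machine is currently set to, a small Python sketch like the one below can read the value described above. It is only an illustration: the helper names `describe` and `read_allow_encryption_oracle` are hypothetical, it uses the standard library `winreg` module (Windows only), and it returns `None` both when the value is not configured and when it is not running on Windows. An unconfigured value means the default applies, which depends on the patch level as discussed above.

```python
import sys
from typing import Optional

# Path and value name as given in the registry details above.
KEY_PATH = r"Software\Microsoft\Windows\CurrentVersion\Policies\System\CredSSP\Parameters"
VALUE_NAME = "AllowEncryptionOracle"
SETTING_NAMES = {0: "Force updated clients", 1: "Mitigated", 2: "Vulnerable"}


def describe(value: Optional[int]) -> str:
    """Translate the DWORD into the policy setting name."""
    if value is None:
        return "not configured (default depends on patch level)"
    return SETTING_NAMES.get(value, f"unknown value {value}")


def read_allow_encryption_oracle() -> Optional[int]:
    """Return the configured DWORD, or None if unset or not on Windows."""
    if sys.platform != "win32":
        return None
    import winreg  # Windows-only standard library module

    # Ask for the 64-bit registry view so a 32-bit Python sees the same key.
    access = winreg.KEY_READ | winreg.KEY_WOW64_64KEY
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, KEY_PATH, 0, access) as key:
            value, _value_type = winreg.QueryValueEx(key, VALUE_NAME)
            return int(value)
    except FileNotFoundError:
        # Key or value does not exist: the policy was never set explicitly.
        return None
```

Running `print(describe(read_allow_encryption_oracle()))` on a client and on the server it cannot reach is a quick way to see whether the two sides disagree.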

Here are the registry values you need to make sure connectivity is restored:

Everything patched: 0 => when everything is patched, including 3rd party CredSSP clients, you can use “Force updated clients”.

Server patched but not all clients: 1 => use “Mitigated”; you’ll be as secure as possible without blocking people. Alternatively you can use 2 (“Vulnerable”), but that is riskier, so I would avoid it if possible.

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System\CredSSP]

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System\CredSSP\Parameters]

"AllowEncryptionOracle"=dword:00000001
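Since automation is key, here is a rough Python sketch that generates the text of a .reg file for whichever setting you pick. The function name `reg_file_for` is made up for this example, and the `Windows Registry Editor Version 5.00` header is the standard first line of a .reg file rather than something taken from the Microsoft article. Treat it as a starting point; GPO is still the better way to roll this out at scale.

```python
# Key path as used in the registry snippet above.
KEY = (
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows"
    r"\CurrentVersion\Policies\System\CredSSP\Parameters"
)


def reg_file_for(setting: int) -> str:
    """Build .reg file text that sets AllowEncryptionOracle to `setting`."""
    if setting not in (0, 1, 2):
        raise ValueError("setting must be 0, 1 or 2")
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{KEY}]",
        # .reg files store DWORDs as eight hexadecimal digits.
        f'"AllowEncryptionOracle"=dword:{setting:08x}',
        "",
    ]
    # .reg files conventionally use Windows line endings.
    return "\r\n".join(lines)
```

For example, `reg_file_for(1)` gives you the Mitigated setting. Write the result to a file, import it on the target machine, and remember that a reboot is required.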

So, check your clients and servers, both on-premises and in the cloud, to make sure you’re protected and have as few RDS connectivity issues as possible. Don’t forget about 3rd party clients that need updates too, if you have those! Don’t panic and carry on.