Introduction

As 25Gbps (SFP28) is on route to displace 10Gbps (SFP+) from its leading role as the work horse in the datacenter. That means that 10Gbps is slowly but surely becoming “the LOM option”. So it will be passing on to the role and place 1Gbps has held for many years. What extension slots are concerned we see 25Gbps cards rise tremendously in popularity. The same is happening on the switches where 25-100Gbps ports are readily available. As this transition takes place and we start working on acquiring 25Gbps or faster gear the question about SFP+ and SFP28 compatibility arises for anyone who’s involved in planning this.

Who needs 25Gbps?

When I got really deep into 10Gbps about 7 years ago I was considered a bit crazy and accused of over delivering. That was until they saw the speed of a live migration. From Windows Server 2012 and later versions that was driven home even more with shared nothing and storage live migration and SMB 3 Multichannel SMB Direct.

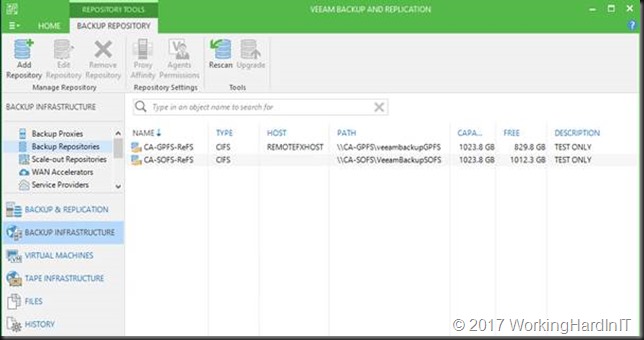

On top of that storage spaces and SOFS came onto the storage scene in the Microsoft Windows server ecosystem. This lead us to S2D and storage replica in Windows Server 2016 and later. This meant that the need for more bandwidth, higher throughput and low latency was ever more obvious and clear. Microsoft has a rather extensive collection of features & capabilities that leverage SMB 3 and as such can leverage RDMA.

In this time frame we also saw the strong rise of All Flash Array solutions with SSD and NVMe. Today we even see storage class memory come into the picture. All this means even bigger needs for high throughput at low latency, so the trend for ever faster Ethernet is not over yet.

What does this mean?

That means that 10Gbps is slowly but surely becoming the LOM option and is passing on to the role 1Gbps has held for many years. In our extension slots we see 25-100Gbps cards rise in popularity. The same is happening on the switches where we see 25, 50, 100Gbps or even higher. I’m not sure if 50Gbps is ever going to be as popular but 25Gbps is for sure. In any case I am not crazy but I do know how to avoid tech debt and get as much long term use out of hardware as possible.

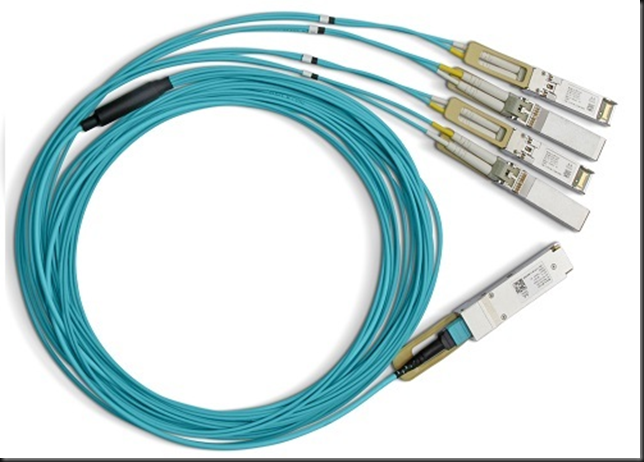

When it comes to the optic components SFP+ is commonly used for 10Gbps. This provides a path to 40Gbps and 100Gbps via QSFP. For 25Gbps we have SFP28 (1 channel or lane for 25Gbps). This give us a path to 50Gbps (2225Gbps – two lanes) and to 100Gbps (4*25Gbps – 4 lanes) via QSFP28. In the end this a lot more economical. But let’s look at SFP+ and SFP28 compatibility now.

SFP+ and SFP28 compatibility

When it comes to SFP+ and SFP28 compatibility we’re golden. SFP+ and SFP28 share the same form factor & are “compatible”. The moment I learned that SFP28 share the same form factor with SFP+ I was hopeful that they would only differ in speed. And indeed, that hope became a sigh of relief when I read and experimentally demonstrated to myself the following things I had read:

- I can plug in a SFP28 module into an SFP+ port

- I can plug in a SFP+ module into an SFP28 port

- Connectivity is established at the lowest common denominator, which is 10Gbps

- The connectivity is functional but you don’t gain the benefits SFP28 bring to the table.

Compatibility for migrations & future proofing

For a migration path that is phased over time this is great news as you don’t need to have everything in place right away from day one. I can order 25Gbps NIC in my servers now, knowing that they will work with my existing 10Gbps network. They’ll be ready to roll when I get my switches replaced 6 months or a year later. Older servers with 10Gbps SFP+ that are still in production when the new network gear arrives can keep working on new SFP28 network gear.

- SFP+: 10Gbps

- SFP28: 25Gbps but it can go up to 28 so the name is SFP28, not 25. Note that SFP28 can handle 25Gbps, 10Gbps and even 1Gbps.

- QSFP28: 100Gbps to 4*25Gbps or 2*50Gbps gives you flexibility and port density.

- 25Gbps / SFP28 is the new workhorse to deliver more bandwidth, better error control, less cross talk and an economical sound upgrade path.

Do note that SFP+ modules will work in SFP28 ports and vice versa but you have to be a bit careful:

- Fix the ports speed when you’re not running at the default speed

- On SFP28 modules you might need to disable options such as forward error correction.

- Make sure a 10Gbps switch is OK with a 25Gbps cables, it might not.

If you have all your gear from a vendor specializing in RDMA technology like Mellanox this detects this all this and takes care of everything for you. Between vendors and 3rd party cables pay extra attention to verifying all will be well.

SFP+ and SFP28 compatibility is also important for future proofing upgrade paths. When you buy and introduce new network gear it is nice to know what will work with what you already have and what will work with what you might or will have in the future. Some people will get all new network switches in at once while others might have to wait for a while before new servers with SFP28 arrive. Older servers might be around and will not force you to keep older switches around just for them.

SFP28 / QSFP28 provides flexibility

Compatibility is also important for purchase decision as you don’t need to match 25Gbps NIC ports to 25Gbps switch ports. You can use the QSFP28 cables and split them to 4 * 25Gbps SFP28.

The same goes for 50Gbps, which is 100Gbps QSFP to 2 * 50Gbps QSFP.

This means you can have switch port density and future proofing if you so desire. Some vendors offer modular switches where you can mix port types (Dell EMC Networking S6100-ON)

Conclusion

More bandwidth at less cost is a no brainer. It also makes your bean counters happy as this is achieved with less switches and cables. That also translates to less space in a datacenter, less consumption of power and less cooling. And the less material you have the less it cost in operational expenses (management and maintenance). This is only offset partially by our ever-growing need for more bandwidth. As converged networking matures and becomes better that also helps with the cost. Even where economies of scale don’t matter that much. The transition to 25Gbps and higher is facilitated by SFP+ and SFP28 compatibility and that is good news for all involved.