Introduction

While writing Bicep recently, I was stumped by a deployment that kept failing. I spent a lot of time chasing possible causes. As JSON is involved and I am far from a JSON syntax guru, I first focused on that. Later I moved on to how I use JSON in Bicep and PowerShell, before finally understanding that the problem was that the PSCustomObject returned by ConvertFrom-Json is not serializable.

Parameters with Bicep

When deploying resources in Azure with Bicep, I always need to consider who has to deliver or maintain the code and the parameters. It has to be somewhat structured, readable, and understandable. It can’t be one gigantic listing that confuses people to the point they are lost. Simplicity and ease of use rule my actions here. I know when it comes to IaC, this can be a challenge. So, when it comes to parameters, what are our options here?

- I avoid hard-coding parameters in Bicep. It’s OK for testing while writing the code, but beyond that, it is a bad idea for maintainability.

- You can use parameter files. That is a considerable improvement, but it has its limitations.

- I have chosen the path of leveraging PowerShell to create and maintain parameters and pass those via objects to the main Bicep file for deployment. That is a flexible and maintainable approach. Sure, it is not perfect either, but neither am I.

Regarding Bicep and PowerShell, we can also put parameters in separate files and read those to create parameters. Whether this is a good idea depends on the situation. My rule of thumb is that it is worth doing when things become easier to read and maintain while reducing the places where you have to edit your IaC files. In the case of Azure Firewall Policy Rule Collection Groups, Rule Collections, and Rules, it can make sense.

Bicep and JSON files

You can read file content in Bicep using the loadTextContent() function. By wrapping it in the json() function, you tell Bicep to parse that text as JSON. So far, so good. The code below is perfectly fine and works. We can loop through that variable in a resource deployment.

var firewallChildRGCs = [

json(loadTextContent('./AFW/Policies/RGSsAfwChild01.json'))

json(loadTextContent('./AFW/Policies/RGSsAfwChild02.json'))

json(loadTextContent('./AFW/Policies/RGSsAfwChild03.json'))

]

However, I am not entirely happy with this. While I like it in some aspects, it conflicts with my desire not to edit a working Bicep file once it is in use. So what do I like about it?

It keeps Bicep clean and concise and limits the looping to iterating over the Rule Collection Groups, thus avoiding the nested looping for Rule Collections and Rules. Why is that? Because I can do this:

@batchSize(1)

resource firewallChildPolicyWEUColGroups 'Microsoft.Network/firewallPolicies/ruleCollectionGroups@2022-07-01' = [for (childrcg, index) in firewallChildRGCs: {

parent: firewallChildPolicyWEU

name: childrcg.name

dependsOn: [firewallParentPolicyWEUColGroups]

properties: childrcg.properties

}]

As you can see, I loop through the variable and pass the JSON into the properties. That way, I create all Rule Collections and Rules without needing to do any nested looping via “helper” modules to get this done.

The drawback, however, is that the loadTextContent function in Bicep cannot use dynamic parameters or variables. As a result, the paths to the files need to be hard-coded into the Bicep file, which is something we want to avoid. For now, that is a hard restriction: parameters are evaluated at deployment time, whereas loadTextContent is resolved at compile time (bicep build), so its path has to be a compile-time constant. In the early previews of Bicep, you “transpiled” the Bicep manually; now that happens automatically before the deployment, but it is still a separate step that runs before any parameter values are known. You might think passing the path as a parameter can work, but it does not.

PowerShell and JSON files

As mentioned above, I chose to use PowerShell to create and maintain parameters, and I want to read my JSON files there. Doing so saves me from creating large, long, and hard-to-maintain PowerShell objects with nested arrays. Editing those is not straightforward for everyone. On top of that, they lead to the need for nested looping in Bicep via “helper” modules. While that works, and I use it, I find it more tedious with deeply nested structures and many parameters to supply. Hence I am splitting it out into easier-to-maintain separate JSON files.

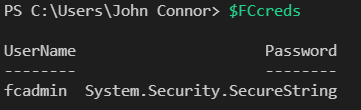

Here is what I do in PowerShell to build my array to pass via an Object parameter. First, I read the JSON files from my folder.

$ChildFilePath = "../bicep/nested/AfwChildPoliciesAndRules/*"

$Files = Get-ChildItem -File $ChildFilePath -Include '*.json' -exclude 'DONOTUSE*.json'

$Files

$AfwChildCollectionGroupsValidate = @() # We use this with ConvertFrom-Json to validate that the JSON file is OK, but cannot use this to pass as a param to Bicep

$AfwChildCollectionGroups = @()

Foreach ($File in $Files) {

try{

$AfwChildCollectionGroupsValidate += (Get-Content $File.FullName -Raw) | ConvertFrom-Json

# DO NOT PUT the ConvertFrom-Json output in here - the PSCustomObject is not serializable and passing this param to Bicep will then be empty!

$AfwChildCollectionGroups += (Get-Content $File.FullName -Raw) # A string is serializable!

}

Catch

{

write-host -ForegroundColor Red "ConvertFrom-Json threw an error. Check your JSON in the RCG/RC/R files"

Exit

}

}

I can then use this to roll out the resources, as in the below example.

// Roll out the child Rule Collection Group(s)

var ChildRCGs = [for (rulecol, index) in firewallChildpolicy.RuleCollectionGroups: {

name: json(rulecol).name

properties: json(rulecol).properties

}]
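For context, here is a minimal sketch of how the string array could be wrapped and handed to the deployment. It assumes the object parameter in the main Bicep file is called firewallChildpolicy (taken from the loop above); the resource group name, the template path, and the inline JSON are made up for illustration, and passing the values via -TemplateParameterObject is just one way to do it.

```powershell
# Stand-in for the array of raw JSON strings built in the loop above (hypothetical content).
$AfwChildCollectionGroups = @(
    '{"name":"rcg-child-01","properties":{"priority":200,"ruleCollections":[]}}'
)

# Object parameter for Bicep. The key matches what the Bicep loop reads:
# firewallChildpolicy.RuleCollectionGroups
$firewallChildpolicy = @{
    RuleCollectionGroups = $AfwChildCollectionGroups
}

# Splatting table for the deployment cmdlet. Names and paths are assumptions.
$params = @{
    ResourceGroupName       = 'rg-afw-demo'   # hypothetical resource group
    TemplateFile            = './main.bicep'  # hypothetical path
    TemplateParameterObject = @{ firewallChildpolicy = $firewallChildpolicy }
}

# Preview the parameter values before deploying.
$params.TemplateParameterObject | ConvertTo-Json -Depth 10

# Requires the Az module and a signed-in session, so it is left commented out here.
# New-AzResourceGroupDeployment @params
```

Because every element of the array is a plain string, Bicep receives text that it can turn into an object with json(), as in the loop above.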

Initially, the idea was that by using ConvertFrom-Json I would pass the JSON to Bicep as a parameter directly.

$AfwChildCollectionGroups += (Get-Content $File.FullName -Raw) | ConvertFrom-Json

So not only would I not need to load the files in Bicep with a hard-coded path, I would also not need to use the json() function in Bicep.

// Roll out the child Rule Collection Group(s)

var ChildRCGs = [for (rulecol, index) in firewallChildpolicy.RuleCollectionGroups: {

name: rulecol.name

properties: rulecol.properties

}]

However, this failed on me time and time again, with properties not being found and whatnot. Below is an example of such an error.

Line |

30 | New-AzResourceGroupDeployment @params -DeploymentDebugLogLeve …

| ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

| 2:46:20 PM - Error: Code=InvalidTemplate; Message=Deployment template validation failed: 'The template variable 'ChildRCGs' is not valid: The language expression property 'name' doesn't exist, available properties are ''.. Please see

| https://aka.ms/arm-functions for usage details.'.

It did not make sense at all. That was until a dev buddy asked if the object was serializable at all. And guess what? ConvertFrom-Json creates a PSCustomObject that is NOT serializable.

You can check this quickly yourself.

((Get-Content $File.FullName -Raw) | ConvertFrom-Json).gettype().IsSerializable

This prints False, while the raw string

((Get-Content $File.FullName -Raw)).gettype().IsSerializable

prints True.

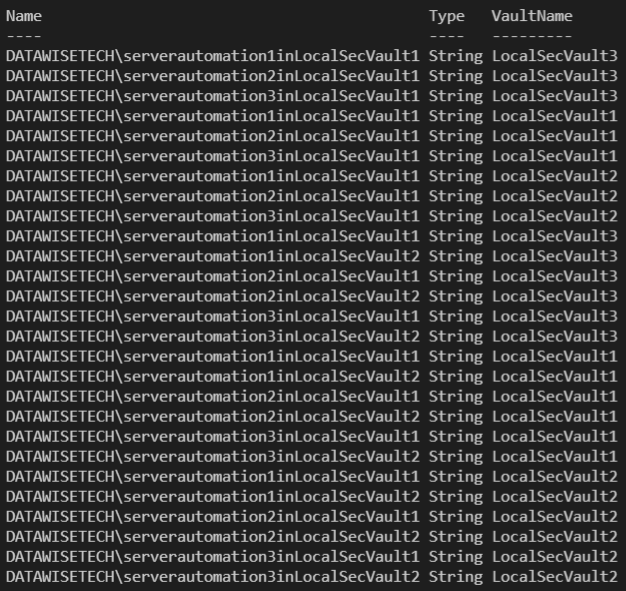

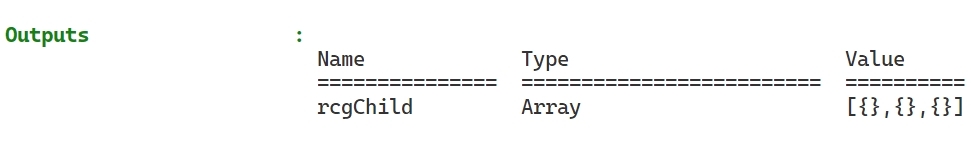
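The same check can be tried without any files on disk. The sketch below uses a made-up inline JSON string in place of one of the rule collection group files; the variable names are only for illustration.

```powershell
# Inline stand-in for the content of one JSON file (hypothetical content).
$raw = '{"name":"rcg-child-01","properties":{"priority":200}}'

# Parse it the way the validation step does.
$parsed = $raw | ConvertFrom-Json

# Show which .NET type each candidate parameter value has.
$parsed.GetType().FullName
$raw.GetType().FullName

# The serializability check from above, once per value.
$parsed.GetType().IsSerializable
$raw.GetType().IsSerializable

# Inside PowerShell the parsed object behaves fine, which is why the problem is easy to miss.
$parsed.name
```

The last line is the trap: the properties are all there in PowerShell, so nothing looks wrong until the value reaches Bicep.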

With some more testing and the use of outputs, I can even visualize that the parameter remained empty! The array contains three empty {} where I expected the JSON.

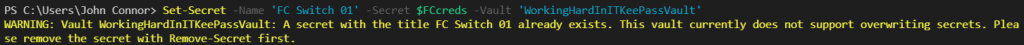

I usually do not have any issues with this in my pure PowerShell scripting. But here, I pass the object from PowerShell to Bicep, and guess what? For that to work, it has to be serializable. Now, when I do this, there are no warnings or errors. It just seems to work until you use the parameter and get errors that, at first, I did not understand. But the root cause is that in Bicep, the parameter remained empty. Needless to say, I wasted many hours trying to fix this before I finally understood the root cause!

As you can see in the code, I still use ConvertFrom-Json to test if my JSON files contain any errors, but I do not pass that JSON to Bicep as that will not work. So instead, I pass the string and still use the json() function in Bicep.
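The validate-then-pass-the-string pattern can also be wrapped in a small helper. This is only a sketch: the function name Get-ValidatedJsonText is hypothetical, and it throws instead of calling Exit so that the calling script decides how to react.

```powershell
function Get-ValidatedJsonText {
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )

    # Read the whole file as a single string.
    $text = Get-Content -LiteralPath $Path -Raw

    try {
        # Parse only to validate; the parsed object is discarded on purpose.
        $null = $text | ConvertFrom-Json -ErrorAction Stop
    }
    catch {
        throw "Invalid JSON in '$Path': $($_.Exception.Message)"
    }

    # Return the raw string, which is what gets passed on to Bicep.
    return $text
}

# Usage with a throwaway file (hypothetical content).
$tmp = Join-Path ([System.IO.Path]::GetTempPath()) 'rcg-demo.json'
Set-Content -LiteralPath $tmp -Value '{"name":"rcg-child-01","properties":{}}'

$AfwChildCollectionGroups = @(Get-ValidatedJsonText -Path $tmp)
$AfwChildCollectionGroups[0].GetType().Name

Remove-Item -LiteralPath $tmp
```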

Hence, this blog post is to help others avoid the mistake I made. It will also help me remember that the output of ConvertFrom-Json is not serializable.