The issue

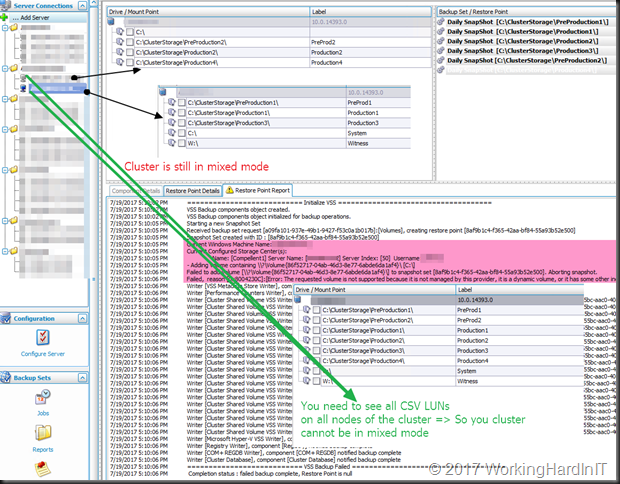

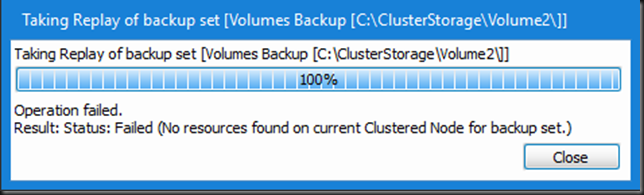

We have a largish Windows Server 2016 Hyper-V cluster (9 nodes) that is running a smooth as can be but for one issue. The off-host backups with Veeam Backup & Replication v9.5 (based on transportable hardware snapshots) are failing. They only fail for the LUNs that are currently residing on a few of the nodes on that cluster. So when a CSV is owned by node 1 it will work, when it owned by node 6 it will fail. In this case we had 3 node that had issues.

As said, everything else on these nodes, cluster wise or Hyper-V wise was working 100% perfectly. As a matter of fact, they were the perfect Hyper-V clusters we’d all sign for. Bar that one very annoying issue.

Finding the cause

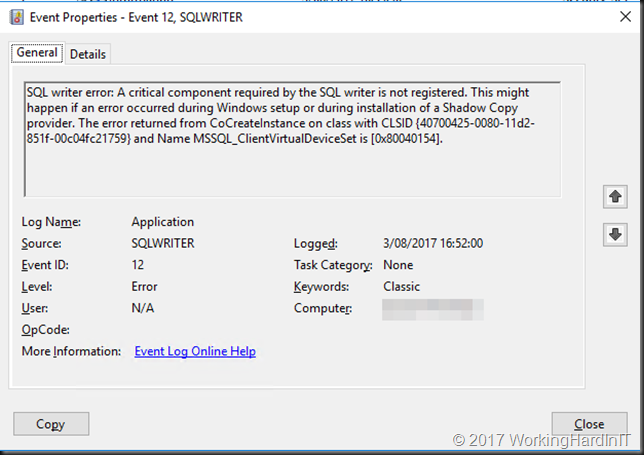

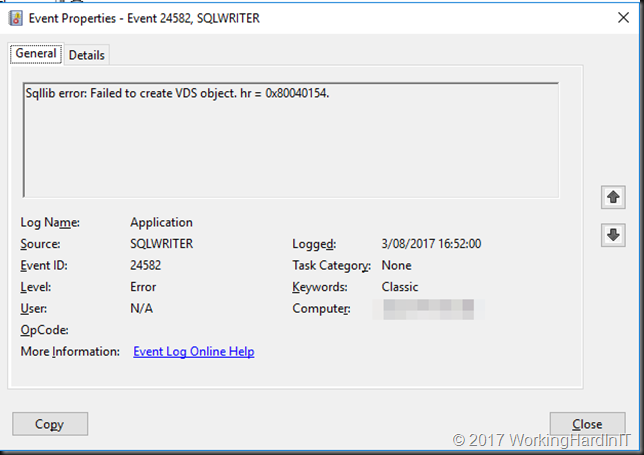

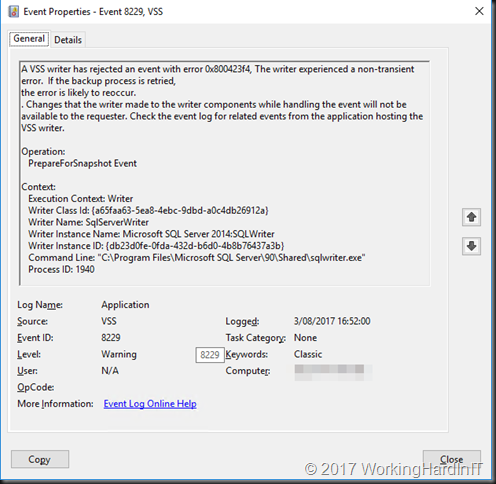

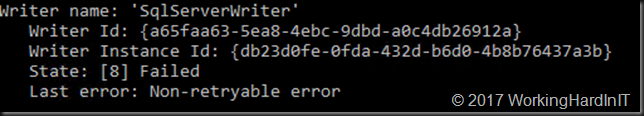

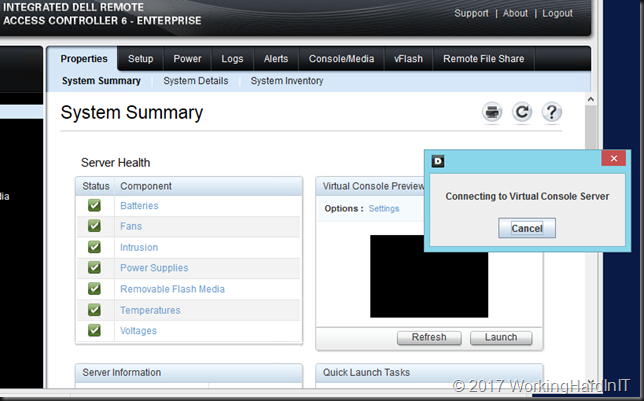

When looking at the application log on the off-host backup proxy it’s quite clear that there is an issue with the hardware VVS provider snapshots.

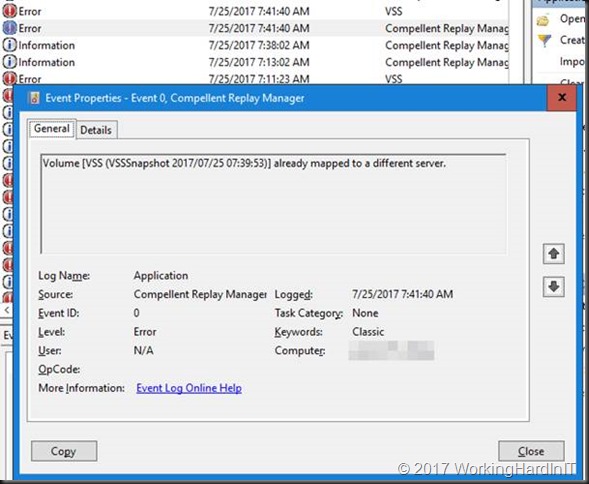

We get event id 0 stating the snapshot is already mounted to different server.

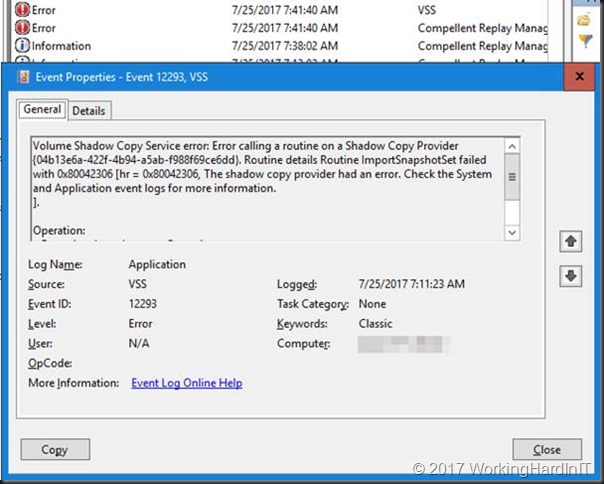

Followed by event id 12293 stating the import of the snapshot has failed

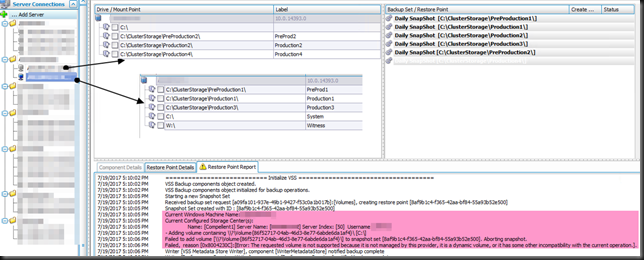

When we check the SAN, and monitor a problematic host in the cluster we see that the snapshot was taken just fine. what was failing was the transport to the backup repository server. It also seemed like an attempt was made to mount the snapshot on the Hyper-V host itself, which also failed.

What was causing this? We dove into the Hyper-V and cluster logs and found nothing that could help us explain the above. We did find the old very cryptic and almost undocumented error:

Event ID 12660 — Storage Initialization

Updated: April 7, 2009

Applies To: Windows Server 2008

This is preliminary documentation and subject to change.

This aspect refers events relevant to the storage of the virtual machine that are caused by storage configuration.

Event Details

|

Product: |

Windows Operating System |

|

ID: |

12660 |

|

Source: |

Microsoft-Windows-Hyper-V-VMMS |

|

Version: |

6.0 |

|

Symbolic Name: |

MSVM_VDEV_OPEN_STOR_VSP_FAILED |

|

Message: |

Cannot open handle to Hyper-V storage provider. |

Resolve

Reinstall Hyper-V

A possible security compromise has been created. Completely reimage the server (sometimes called a bare metal restoration), install a new operating system, and enable the Hyper-V role.

Verify

The virtual machine with the storage attached is able to launch successfully.

This doesn’t sound good, does it? Now you can web search this one and find very little information or people having serious issues with normal Hyper-V functions like starting a VM etc. Really bad stuff. But we could start, stop, restart, live migrate, storage live migrate, create checkpoints etc. at will without any issues or even so much as a hint of issues in the logs.

On top of this event id Event ID 12660 did not occur during the backups. It happens when you opened up Hyper-V manager and looked at the setting of Hyper-V or a virtual machine. Everything else on these nodes, cluster wise or Hyper-V wise was working 100% perfectly Again, this is the perfectly behaving Hyper-V cluster we’d all sign for. If it didn’t have that very annoying issue with a transportable snapshot on some of the nodes.

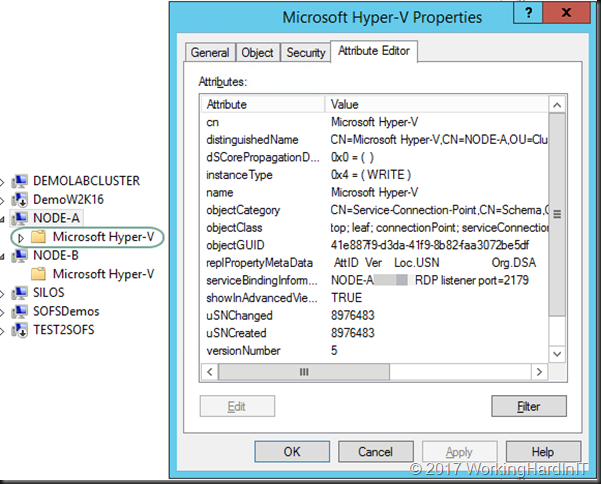

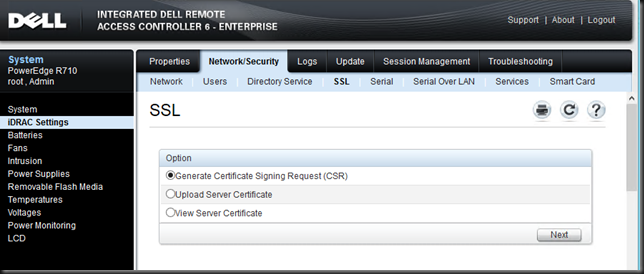

We extended our search outside if of the Hyper-V cluster nodes and then we hit clue. On the nodes that owns the LUN that was being backup and that did show the problematic transportable backup behavior noticed that the Hyper-V Service Connection Point (SCP) was missing.

We immediately checked the other nodes in the cluster having a backup issue. BINGO! That was the one and only common factor. The missing Hyper-V SCP.

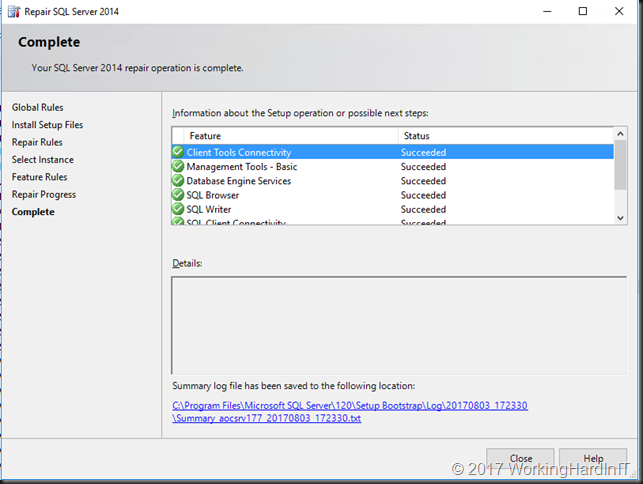

Fixing the issue

Now you can create one manually but that leaves you with missing security settings and you can’t set those manually. The Hyper-V SCP is created and attributes populates on the fly when the server boots. So, it’s normal not to see one when a server is shut down.

The fastest way to solve the issue was to evacuate the problematic hosts, evict them from the cluster and remove them from the domain. For good measure, we reset the computer account in AD for those hosts and if you want you can even remove the Hyper-V role. We then rejoined those node to the domain. If you removed the Hyper-V role, you now reinstall it. That already showed the SCP issue to be fixed in AD. We then added the hosts back to the cluster and they have been running smoothly ever since. The Event ID 12660 entries are gone as are the VSS errors. It’s a perfect Hyper-V cluster now.

Root Cause?

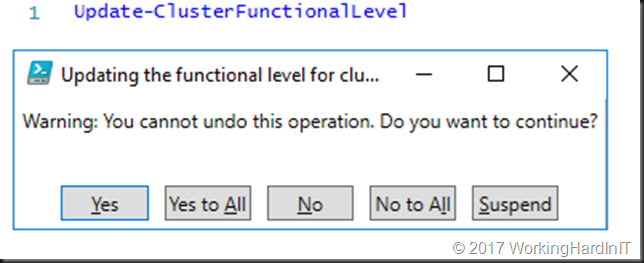

We’re think that somewhere during the life cycle of the hosts the servers have been renamed while still joined to the domain and with the Hyper-V role installed. This might have caused the issue. During a Cluster Operating System Rolling Upgrade, with an in-place upgrade, we also sometime see the need to remove and re-add the Hyper-V role. That might also have caused the issue. We are not 100% certain, but that’s the working theory and a point of attention for future operations.