I saw people team two 10GBps NICs for live migration and use TCP/IP. They leveraged LACP for this as per my blog Teamed NIC Live Migrations Between Two Hosts In Windows Server 2012 Do Use All Members . That was a nice post but not a commercial to use it. It was to prove a point that LACP/Static switch dependent teaming did allow for multiple VMs to be live migrated in the same direction between two node. But for speed, max throughput & low CPU usage teaming is not the way to go. This is not needed as you can achieve bandwidth aggregation and redundancy with SMB via Multichannel. This doesn’t require any LACP configuration at all and allows for switch independent aggregation and redundancy. Which is great, as it avoids stacking with switches that don’t do VLT, MLAG, …

Even when your team your NICs your better off using SMB. The bandwidth aggregation is often better. But again, you can have that without LACP NIC teaming so why bother? Perhaps one reason, with LACP failover is faster, but that’s of no big concern with live migration.

We’ll do some simple examples to show you why these choices matter. We’ll also demonstrate the importance of an optimize RSS configuration. Do not that the configuration we use here is not a production environment, it’s just a demo to show case results.

But there is yet another benefit to SMB. SMB Direct. That provides for maximum throughput, low latency and low CPU usage.

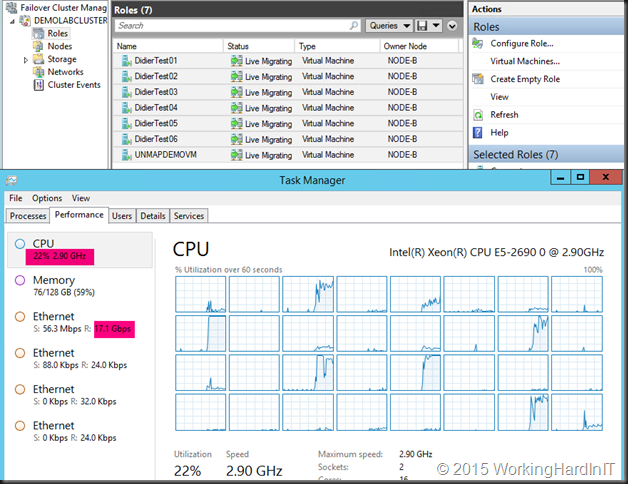

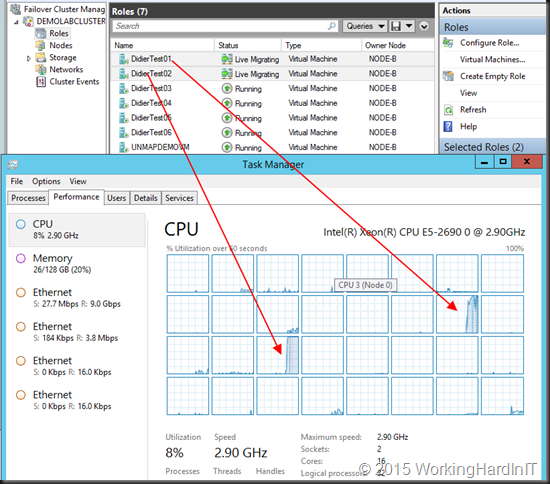

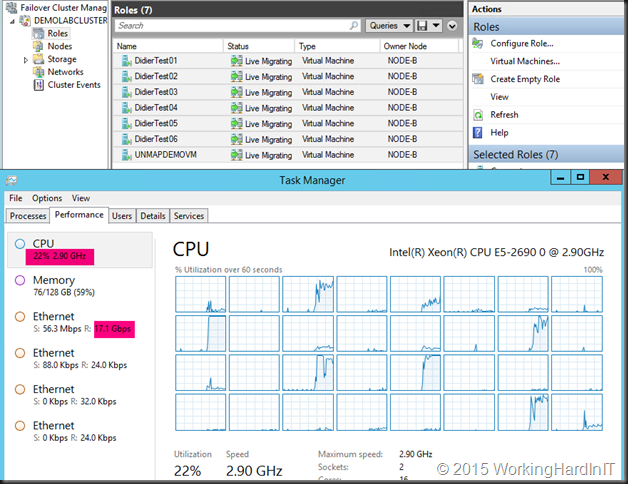

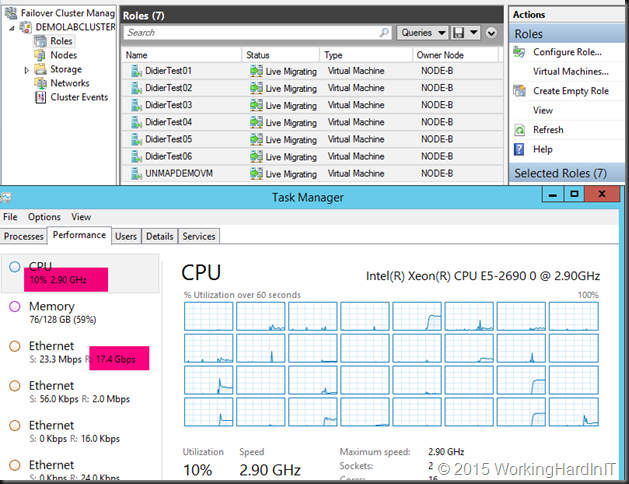

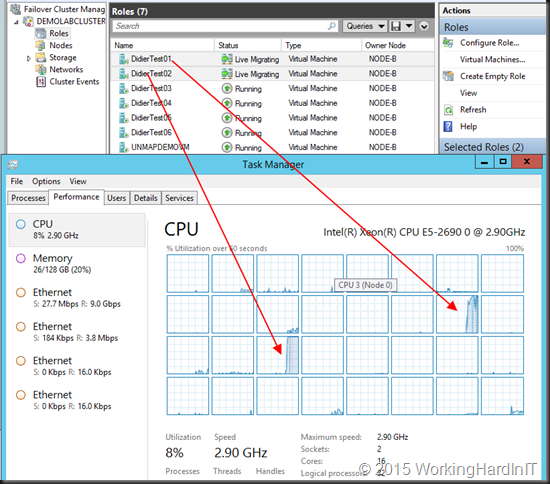

LACP NIC TEAM with 2*10Gbps with TCP

With RSS setting on the inbox default we have problems reaching the best possible throughput (17Gbps). But that’s not all. Look at the CPU at the time of live migration. As you can see it’s pretty taxing on the system at 22%.

If we optimize RSS with 8 RSS queues assigned to 8 physical cores per NIC on a different CPU (dual socket, 8 core system) we sometimes get better CPU overhead at +/- 12% but the throughput does not improve much and it’s not very consistent. It can get worse and look more like the above.

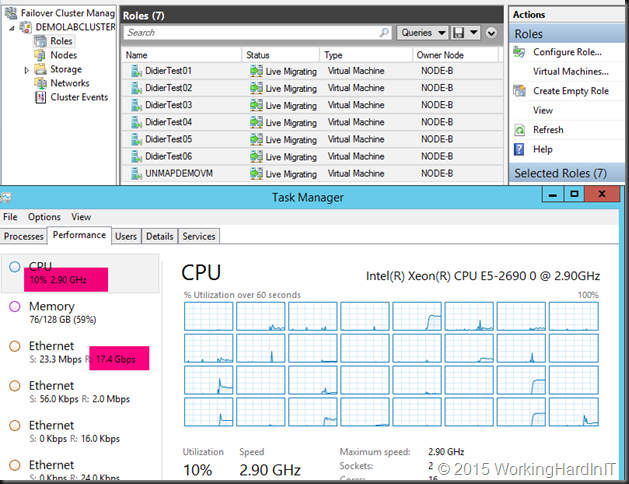

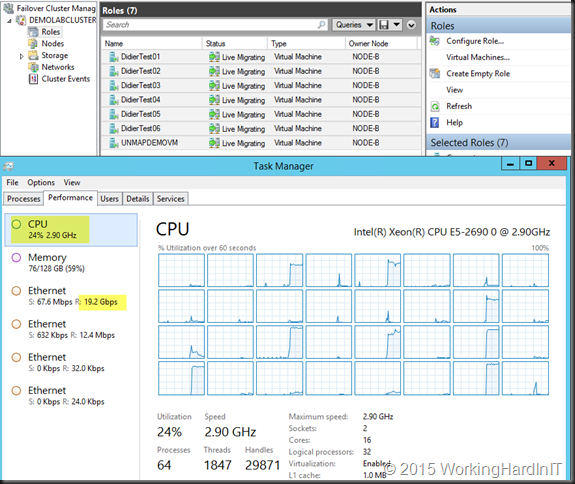

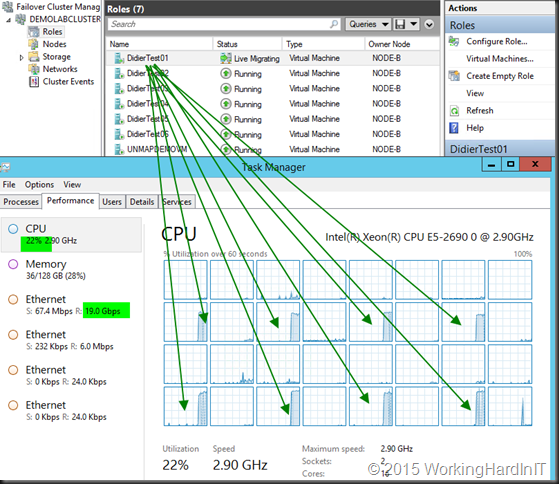

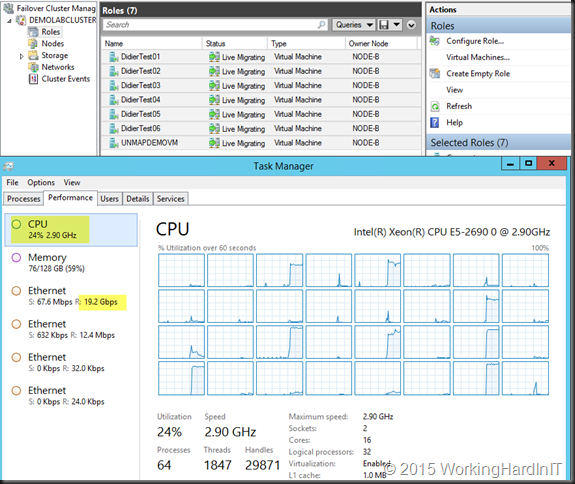

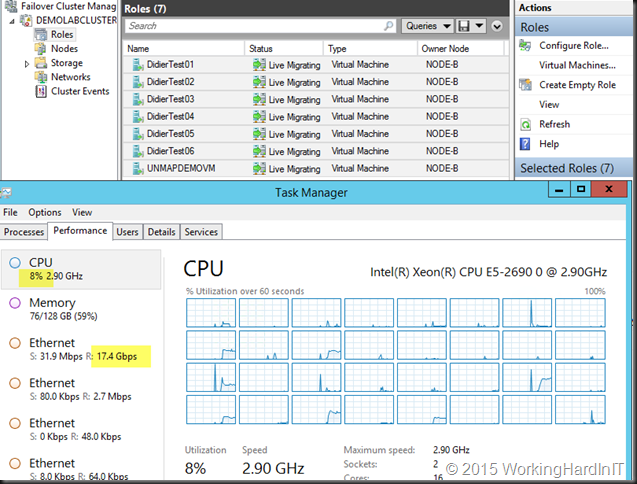

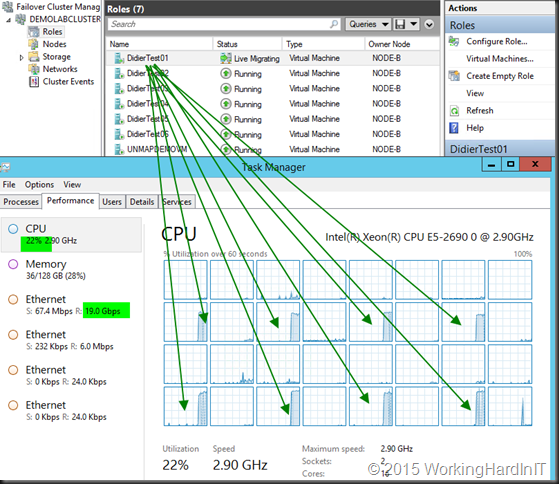

LACP NIC TEAM with 2*10Gbps with SMB (Multichannel)

With the default RSS Settings we still have problems reaching the best possible throughput but it’s better (19Gbps). CPU wise, it’s pretty taxing on the system at 24%.

If we optimize RSS with 8 RSS queues assigned to 8 physical cores per NIC on a different CPU (dual socket, 8 core system) we get better over CPU overhead at +/- 8% but the throughput actually declined (17.5 %). When we run the test again we were back to the results we saw with default RSS settings.

Is there any value in using SMB over TCP with LACP for live migration?

Yes there is. Below you see two VMs live migrate, RSS is optimized. One core per VM is used and the throughput isn’t great, is it. Depending on the speed of your CPU you get at best 4.5 to 5Gbps throughput per VM as that 1 core per VM is the limiting factor. Hence see about 9Gbps here, as there’s 2 VMs, each leveraging 1 core.

Now look at only one VM with RSS is optimized with SMB over an LACP NIC team. Even 1 large memory VM leverages 8 cores and achieves 19Gbps.

What about Switch Independent Teaming?

Ah well that consumes a lot less CPU cycles but it comes at the price of speed. It has less CPU overhead to deal with in regards to LACP. It can only receive on one team member. The good news is that even a single VM can achieve 10Gbps (better than LACP) at lower CPU overhead. With SMB you get better CPU distribution results but as the one member is a bottle neck, not faster. But … why bother when we have …better options!? Read on  !

!

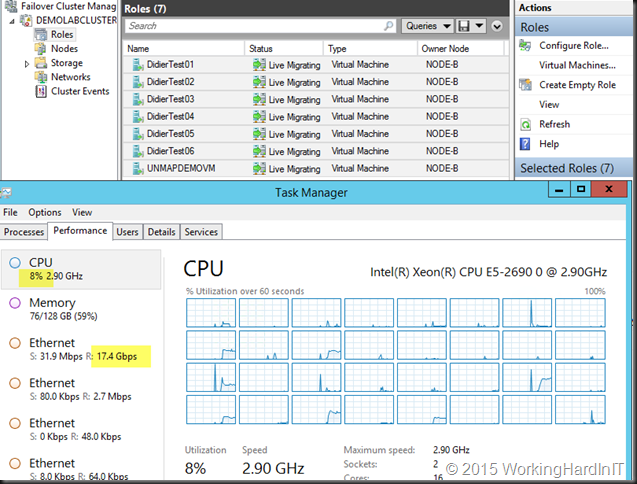

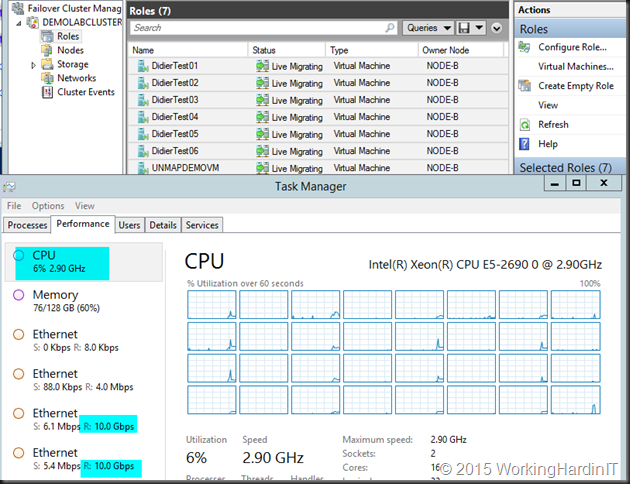

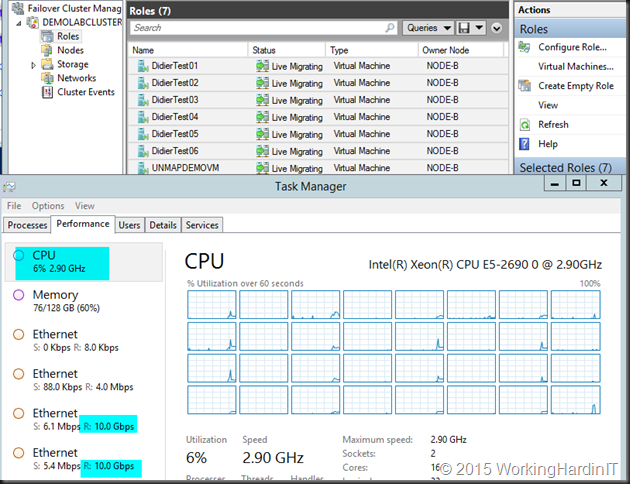

No Teaming – 2*10Gbps with SMB Multichannel, RSS Optimized

We are reaching very good throughput but it’s better (20Gbps) with 8 RSS queues assigned to 8 physical cores. The CPU at the time of live migration is pretty good at 6%-7%.

Important: This is what you want to use if you don’t have 10Gbps but you do have 4* 1Gbps NICs for live migration. You can test with compression and LACP teaming if you want/can to see if you get better results. Your mirage may vary  . If you have only one 1Gbps NIC => Compression is your sole & only savior.

. If you have only one 1Gbps NIC => Compression is your sole & only savior.

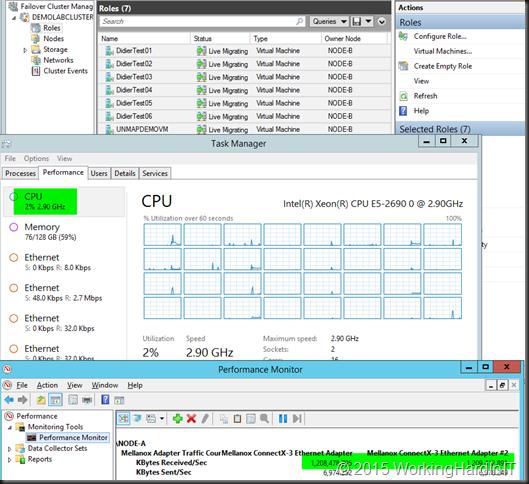

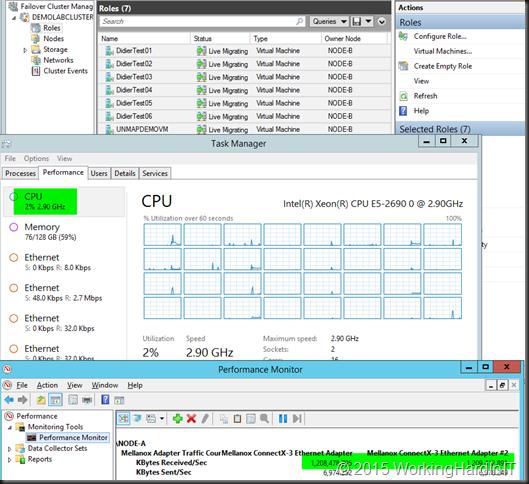

2*10Gbps with SMB Direct

We’re using perfmon here to see the used bandwidth as RDMA traffic does not show up in Task Manager.

We have no problems reaching the best possible throughput but it’s better (20Gbps, line speed). But now look at the CPU during live migration. How do you like them numbers?

Do not buy non RDMA capable NICs or Switches without DCB support!

These are real numbers, the only thing is that the type and quality of the NICs, firmware and drivers used also play a role an can skew the results a bit. The onboard LOM run of the mill NICs aren’t always the best choice. Do note that configuration matters as you have seen. But SMB Direct eats them all for breakfast, no matter what.

Convinced yet? People, one of my core highly valuable skillsets is getting commodity hardware to perform and I tend to give solid advice. You can read all my tips for fast live migrations here in Live Migration Speed Check List – Take It Easy To Speed It Up

Does all of this matter to you? I say yes , it does. It depends on your environment and usage patterns. Maybe you’re totally over provisioned and run only very small workloads in your virtual machines. But it’s save to say that if you want to use your hardware to its full potential under most circumstances you really want to leverage SMB Direct for live migrations. What about that Hyper-V cluster with compute and storage heavy applications, what about SQL Server virtualization? Would you not like to see this picture with SMB RDMA? The Mellanox RDMA cards are very good value for money. Great 10Gbps switches that support DCB (for PFC/ETS) can be bought a decent prices. You’re missing out and potentially making a huge mistake not leveraging SMB Direct for live migrations and many other workloads. Invest and design your solutions wisely!

The end result is a lab at home on PC hardware and an enterprise grade lab to work with in the datacenter. Busy times ahead.

The end result is a lab at home on PC hardware and an enterprise grade lab to work with in the datacenter. Busy times ahead.