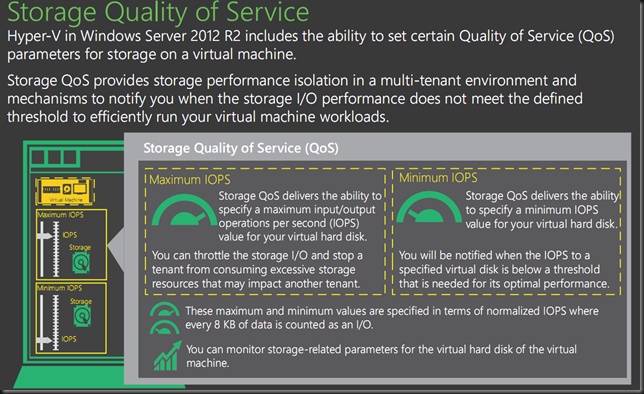

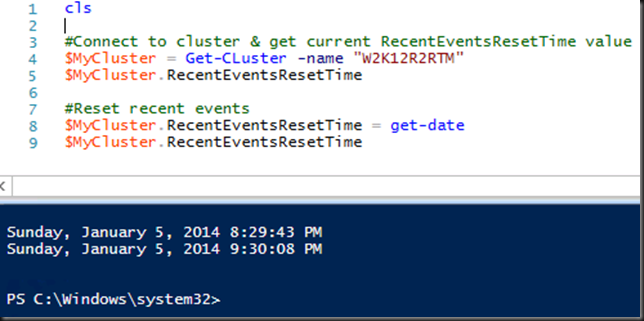

At TechEd 2013, John Matthew and Liang Yang presented session MDC-B345: Windows Server 2012 Hyper-V Storage Performance. It was a great one. During that session they demonstrated the use of WMI to monitor storage alerts related to Storage QoS in Windows Server 2012 R2. We’re going to dive in a bit deeper and show you how to identify the virtual hard disk and the virtual machine involved.

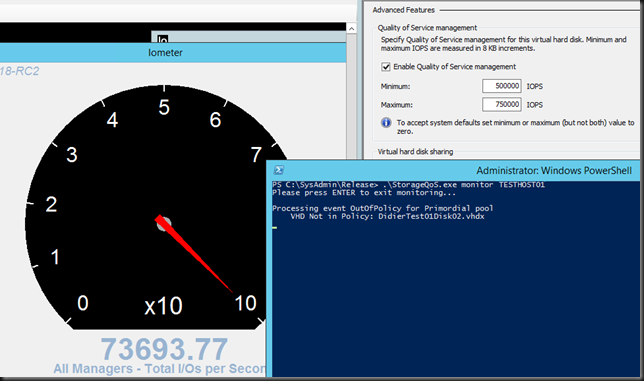

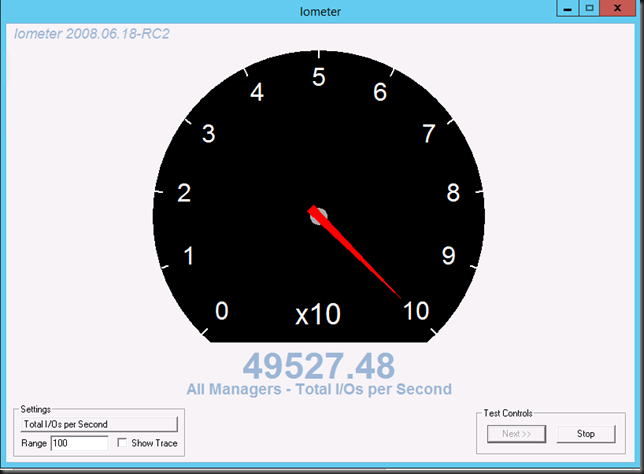

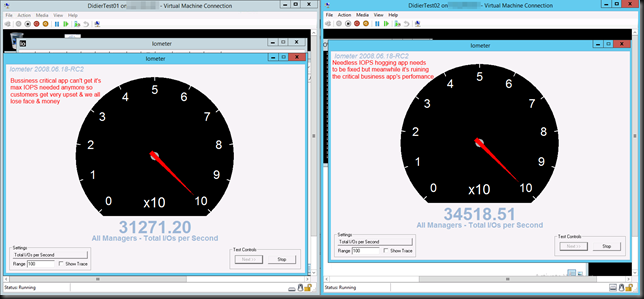

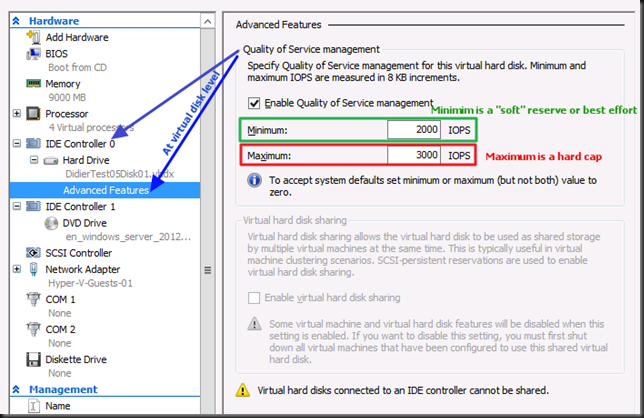

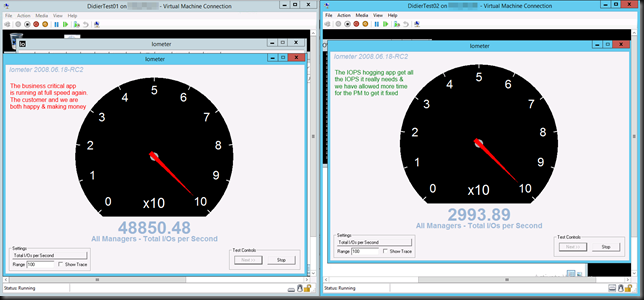

One important prerequisite in all this is that the reserve (minimum) IOPS must actually not be met, so we run IOMeter to make sure that’s the case. Only then will the events we need be generated. It’s a bit of a tedious exercise.

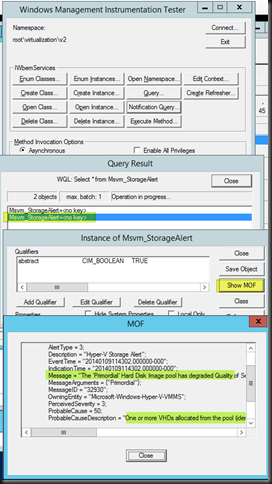

So we start with a WMI notification query, which demonstrates that notifications are sent when the minimum IOPS cannot be met. The query is simply:

select * from Msvm_StorageAlert

instance of Msvm_StorageAlert

{

AlertingManagedElement = "\\TESTHOST01\root\virtualization\v2:Msvm_ResourcePool.InstanceID="Microsoft:70BB60D2-A9D3-46AA-B654-3DE53004B4F8"";

AlertType = 3;

Description = "Hyper-V Storage Alert";

EventTime = "20140109114302.000000-000";

IndicationTime = "20140109114302.000000-000";

Message = "The ‘Primordial’ Hard Disk Image pool has degraded Quality of Service. One or more Virtual Hard Disks allocated from the pool is not reporting sufficient throughput as specified by the IOPSReservation property in its Resource Allocation Setting Data.";

MessageArguments = {"Primordial"};

MessageID = "32930";

OwningEntity = "Microsoft-Windows-Hyper-V-VMMS";

PerceivedSeverity = 3;

ProbableCause = 50;

ProbableCauseDescription = "One or more VHDs allocated from the pool (identified by value of AlertingManagedElement property) is experiencing insufficient throughput and is not able to meet its configured IOPSReservation.";

SystemCreationClassName = "Msvm_ComputerSystem";

SystemName = "TESTHOST01";

TIME_CREATED = "130337413826727692";

};
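To make that alert easier to work with in a script, here is a minimal Python sketch that pulls out the pieces we care about. It assumes the alert has already been retrieved and converted to a plain dictionary by whatever WMI client you use; the function names (`parse_cim_datetime`, `pool_instance_id`, `is_min_iops_alert`) are hypothetical helpers of mine, not part of any Microsoft API.

```python
import re
from datetime import datetime, timedelta, timezone


def parse_cim_datetime(value: str) -> datetime:
    """Convert a CIM datetime (yyyymmddHHMMSS.mmmmmm+UUU) to an aware datetime.

    The last three digits are the UTC offset in minutes.
    """
    match = re.fullmatch(r"(\d{14})\.(\d{6})([+-])(\d{3})", value)
    if match is None:
        raise ValueError(f"not a CIM datetime: {value!r}")
    stamp, micros, sign, offset = match.groups()
    minutes = int(offset) if sign == "+" else -int(offset)
    base = datetime.strptime(stamp, "%Y%m%d%H%M%S")
    return base.replace(microsecond=int(micros),
                        tzinfo=timezone(timedelta(minutes=minutes)))


def pool_instance_id(alerting_managed_element: str) -> str:
    """Extract the resource pool InstanceID from AlertingManagedElement."""
    match = re.search(r'InstanceID="([^"]+)"', alerting_managed_element)
    if match is None:
        raise ValueError("no InstanceID key in object path")
    return match.group(1)


def is_min_iops_alert(alert: dict) -> bool:
    """ProbableCause 50 is the value the alert above carries."""
    return alert.get("ProbableCause") == 50


# Values copied from the alert shown above.
alert = {
    "AlertingManagedElement": r'\\TESTHOST01\root\virtualization\v2:'
                              r'Msvm_ResourcePool.InstanceID='
                              r'"Microsoft:70BB60D2-A9D3-46AA-B654-3DE53004B4F8"',
    "EventTime": "20140109114302.000000-000",
    "ProbableCause": 50,
}
if is_min_iops_alert(alert):
    print(parse_cim_datetime(alert["EventTime"]),
          pool_instance_id(alert["AlertingManagedElement"]))
```

The pool InstanceID is the handle we need for the follow-up queries; the alert itself never names a disk or a VM.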

That’s great, but which virtual hard disk of which VM is causing this? That’s the question we’ll dive into in this blog post. Let’s go. In the MSDN documentation for the Msvm_StorageAlert class we read:

Remarks

The Hyper-V WMI provider won’t raise events for individual virtual disks to avoid flooding clients with events in case of large scale malfunctions of the underlying storage systems.

When a client receives an Msvm_StorageAlert event, if the value of the ProbableCause property is 50 (“Storage Capacity Problem”), the client can discover which virtual disks are operating outside their QoS policy by using one of these procedures:

Query all the Msvm_LogicalDisk instances that were allocated from the resource pool for which the event was generated. These Msvm_LogicalDisk instances are associated to the resource pool via the Msvm_ElementAllocatedFromPool association.

Filter the result list by selecting instances whose OperationalStatus contains “Insufficient Throughput”.
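As a rough sketch of that procedure in Python: the first helper builds a WQL `ASSOCIATORS OF` query for the pool, the second filters the returned disks. I assume the results have been turned into plain dictionaries by your WMI client of choice, and that the pool is addressed as `Msvm_ResourcePool` as in the alert’s AlertingManagedElement. The value 32788 for insufficient throughput is the one we see reported further down in this post. Both function names are hypothetical.

```python
INSUFFICIENT_THROUGHPUT = 32788  # OperationalStatus value observed in this post


def disks_in_pool_query(pool_instance_id: str) -> str:
    """Build a WQL query for the logical disks allocated from a resource pool."""
    return (
        'ASSOCIATORS OF {Msvm_ResourcePool.InstanceID="' + pool_instance_id + '"} '
        "WHERE AssocClass = Msvm_ElementAllocatedFromPool "
        "ResultClass = Msvm_LogicalDisk"
    )


def throttled_disks(disks: list) -> list:
    """Keep only the disks whose OperationalStatus array flags insufficient throughput."""
    return [
        disk for disk in disks
        if INSUFFICIENT_THROUGHPUT in (disk.get("OperationalStatus") or [])
    ]


print(disks_in_pool_query("Microsoft:70BB60D2-A9D3-46AA-B654-3DE53004B4F8"))
```

OperationalStatus is an array, so the filter checks for membership rather than equality.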

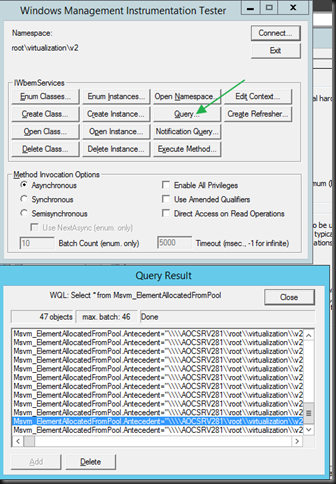

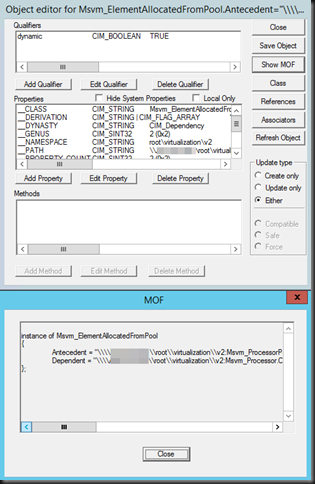

So I run a query (NOT a notification query!) against the Msvm_ElementAllocatedFromPool class, click through on a result and select Show MOF.

Let’s look at that MOF. The SystemName value (highlighted in yellow in the screenshot) is the GUID of our VM. Hey, cool!

instance of Msvm_ElementAllocatedFromPool

{

Antecedent = "\\TESTHOST01\root\virtualization\v2:Msvm_ProcessorPool.InstanceID="Microsoft:B637F347-6A0E-4DEC-AF52-BD70CB80A21D"";

Dependent = "\\TESTHOST01\root\virtualization\v2:Msvm_Processor.CreationClassName="Msvm_Processor",DeviceID="Microsoft:b637f346-6a0e-4dec-af52-bd70cb80a21d\\6",SystemCreationClassName="Msvm_ComputerSystem",SystemName="96CD7F7E-0C0A-42FE-96CB-B5550D937F27"";

};
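If you want to pull those keys out of the Dependent path in a script instead of eyeballing them, a small parser will do. This is a sketch that assumes the path looks exactly like the one displayed above (namespace, a colon, the class name, then comma-separated `Key="value"` pairs); `parse_object_path` is a hypothetical helper, not a WMI API.

```python
import re


def parse_object_path(path: str) -> dict:
    """Split a WMI object path into namespace, class name and key/value pairs."""
    namespace, _, rest = path.partition(":")      # first colon ends the namespace
    class_name, _, key_part = rest.partition(".")  # first dot ends the class name
    keys = dict(re.findall(r'(\w+)="([^"]*)"', key_part))
    return {"namespace": namespace, "class": class_name, "keys": keys}


# The Dependent value from the MOF above.
dependent = (
    r'\\TESTHOST01\root\virtualization\v2:Msvm_Processor.'
    r'CreationClassName="Msvm_Processor",'
    r'DeviceID="Microsoft:b637f346-6a0e-4dec-af52-bd70cb80a21d\\6",'
    r'SystemCreationClassName="Msvm_ComputerSystem",'
    r'SystemName="96CD7F7E-0C0A-42FE-96CB-B5550D937F27"'
)
parsed = parse_object_path(dependent)
print(parsed["class"], parsed["keys"]["SystemName"])
```

SystemName gives us the VM GUID and DeviceID the device, which are the two values we carry into the next queries.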

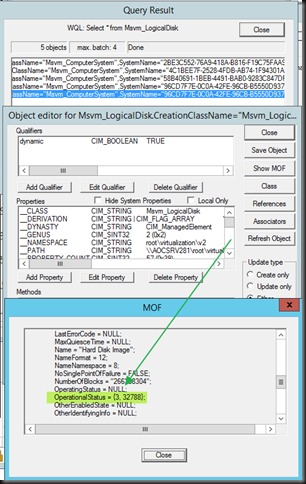

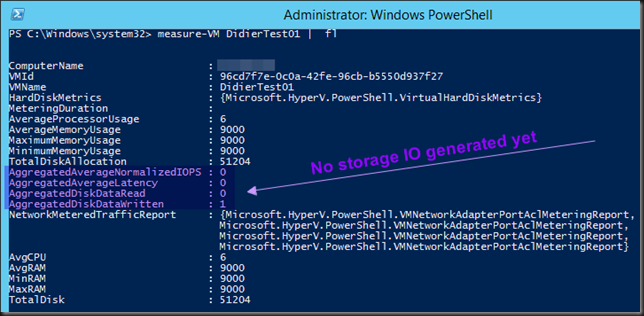

Now we want to find the virtual hard disk in question! So let’s do what the docs say and query Msvm_LogicalDisk. Based on the VM GUID we find the related results …

Look, we got OperationalStatus 32788, which means InsufficientThroughput. Cool, we’re on the right track. Now we need to find which virtual disk of our VM that is. Well, in that MOF we find the device ID: DeviceID = "Microsoft:5F6D764F-1BD4-4C5D-B473-32A974FB1CA2\\L"

If we then query Msvm_StorageAllocationSettingData we find two entries for our VM GUID (it has two disks). By looking for the InstanceID value that contains the above DeviceID, we find the virtual hard disk info we need to identify the disk that is not getting its minimum IOPS.

HostResource = {"C:\ClusterStorage\Volume5\DidierTest01\Virtual Hard Disks\DidierTest01Disk02.vhdx"};

HostResourceBlockSize = NULL;

InstanceID = "Microsoft:96CD7F7E-0C0A-42FE-96CB-B5550D937F27\5F6D764F-1BD4-4C5D-B473-32A974FB1CA2\\L";
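That last matching step is easy to script. Below is a minimal sketch, assuming the Msvm_StorageAllocationSettingData results are available as dictionaries with `InstanceID` and `HostResource` entries. It matches on the disk GUID, which is the last GUID in the InstanceID shown above. The helper names and the first sample entry (the VM’s other disk) are hypothetical.

```python
import re

GUID = re.compile(r"[0-9A-Fa-f]{8}(?:-[0-9A-Fa-f]{4}){3}-[0-9A-Fa-f]{12}")


def last_guid(text: str):
    """Return the last GUID found in text, upper-cased, or None."""
    found = GUID.findall(text)
    return found[-1].upper() if found else None


def find_vhd(device_id: str, settings: list):
    """Return the HostResource of the setting whose InstanceID ends in the disk GUID."""
    wanted = last_guid(device_id)
    if wanted is None:
        return None
    for setting in settings:
        if last_guid(setting["InstanceID"]) == wanted:
            return setting["HostResource"]
    return None


settings = [
    {   # hypothetical entry standing in for the VM's other disk
        "InstanceID": r"Microsoft:96CD7F7E-0C0A-42FE-96CB-B5550D937F27"
                      r"\00000000-0000-0000-0000-000000000001\\L",
        "HostResource": [r"C:\hypothetical\OtherDisk.vhdx"],
    },
    {   # values from the MOF above
        "InstanceID": r"Microsoft:96CD7F7E-0C0A-42FE-96CB-B5550D937F27"
                      r"\5F6D764F-1BD4-4C5D-B473-32A974FB1CA2\\L",
        "HostResource": [r"C:\ClusterStorage\Volume5\DidierTest01"
                         r"\Virtual Hard Disks\DidierTest01Disk02.vhdx"],
    },
]
print(find_vhd(r"Microsoft:5F6D764F-1BD4-4C5D-B473-32A974FB1CA2\\L", settings))
```

Comparing GUIDs rather than whole strings sidesteps the differences in backslash escaping between the DeviceID and the InstanceID.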

Are you tired yet? Do you realize you need to do this while the disk IOPS is not being met in order to see the events? This is no way to do it in production. Not on a dozen servers, let alone on a couple of hundred to thousands of hosts. All the above did was give us some insight into where and how. But using wbemtest.exe to dive deeper into WMI notifications/events isn’t really practical in real life. Tools will need to be developed to deal with this in larger deployments. They can be provided by your storage vendor, your VAR, your integrator, or by yourself if you’re a large enough shop to make a private cloud viable or if you are the cloud provider.

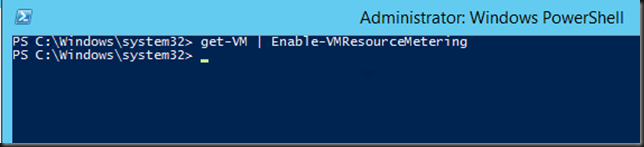

To give you an idea of how this can be done, there is some demo code on MSDN, and I have compiled it for demo purposes.

We have 4 VMs running on the host. One of them is being hammered by IOMeter while its minimum IOPS has been set to a number it cannot possibly reach. We launch StorageQos to monitor our Hyper-V host.

Just let it run. When the notification event arrives that the minimum IOPS cannot be delivered by the storage, this monitor queries WMI further to tell us which virtual disk or disks are involved. Cool, huh! A good naming convention can help identify the VM, and this tool works remotely against a node, so you can launch one for each node of the cluster. Time to fire up Visual Studio 2013, methinks, or go and chat with a good dev you know to take this somewhere; some prefer this sort of work to the modern-day version of CRUD apps any day. If you buy monitoring tools you might want them to have this capability.

While this is just demo code, it gives you an idea of how tools and solutions can be developed and built to monitor the minimum IOPS part of Storage QoS in Windows Server 2012 R2. Hope you found this useful!