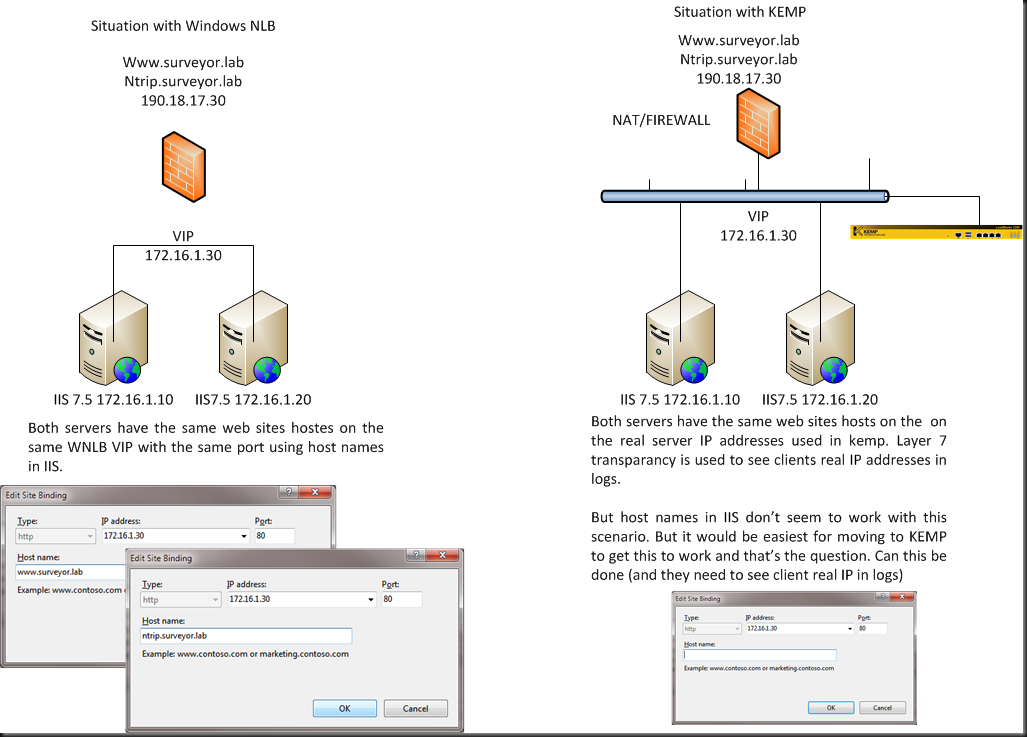

At a client the change over of a web site from old servers to new ones lead to the investigation of an issue with the hardware load balancer. Since that web site is related to an existing surveyors solutions suite that already had a KEMP LoadMaster 2200 in use the figured we’d also use it for the web site and no longer use WNLB.

Now the original web site had multiple DNS entries and host header names defined in IIS (see Configure a Host Header for a Web Site (IIS 7)) . Host header names in IIS allow you to host multiple web sites on an IIS server using the same IP address and port. A small added security benefit is that surfing on IP address fails which means we marginally disrupt some script kiddies & get an extra security checkbox marked during an audit ![]() .

.

In our example we needed:

- ntrip.surveyor.lab

- www.surveyor.lab

Note: The real names have been changed as well as the reasons why as this has some business & historical justifications that don’t matter here.

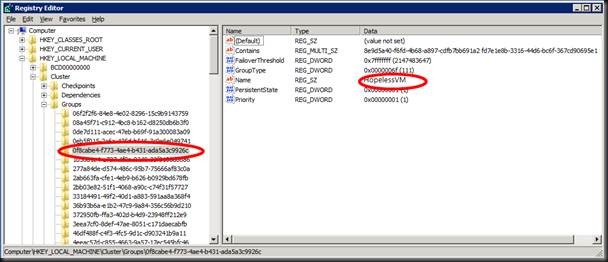

ntrip.surveyor.lab needs to be handled by the load balanced web servers in the solution. The www.surveyor.lab needs to be redirected to another web server to keep the business happy. However for political reasons we have to keep the DNS record for www.surveyor.lab pointing to the load balanced servers, i.e. the load master VIP.

Now without host names IIS al worked fine until we wanted to use HTTP redirect. As the web site is the same IP address for both names we either redirected them both or none. To fix this we needed two sites in IIS. The real one hosting ntrip.surveyor.lab and a “fake” one hosting the www.surveyor.lab that we want to redirect. Well as both are hosted on the same IP address and port on the IIS server we need to use host names. But then the sites became unavailable.

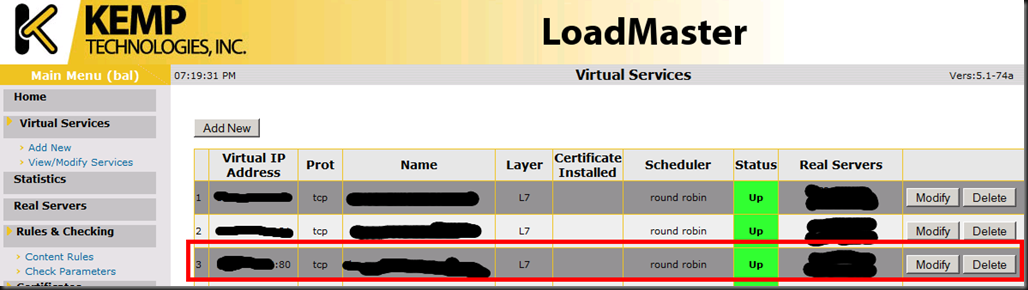

When checking the LoadMaster configuration, the virtual service for the web servers seemed well.

Is this a limitation of hardware load balancing or this specific Loadmaster? Some searching on the internet made it look like I was about the only on on the planet dealing with this issue so no help there.

Kemp Support Rocks

I already knew this but this experience reaffirms it. KEMP Technologies really does care about their customers and are very fast & responsive. I threw a quick question on twitter to @KempTech on Twitter and they responded very fast with some pointers. After that I replied with some more details, they offered to take it on via other means as twitter has it limits. OK, no problems. The next morning I got an e-mail from one of their engineers (Ekkehard) with more information and a request for more input from our side. I quickly made a VISIO diagram of the current and the desired situation. Based on this he let me know this should work.

He asked for a copy of the configuration and already pointed to the solution:

And what exactly happens – does the RS turn “red” in the “View/Modify Services” view? That might be caused by the health check settings…

(Remember that a 302 is considered NOT ok, so you had to enter the proper check URL and or / HTTP1.1 hostname)

But at that moment I did not realize this yet. I saw no error or the real server turning red indicating it was down. So we went through the configuration and decided to test without forcing layer 7 to see what happened. This didn’t make a difference and it wasn’t really a solution if it had as we needed layer 7 and layer 7 transparency.

Ekkehard also noticed my firmware was getting rather old (don’t fix what isn’t broken ![]() ) and suggest an upgrade (5.1-24 to 5.1.-74). So I did, reboot and tested some more settings. To make sure I didn’t miss anything I threw a network sniffer (WireShark) against the issue. And guess what? As soon as I added a host name to the IIS web site bindings I didn’t even get any request from my client on that server anymore. So it was definitely being stopped at the Loadmaster. Without it request from a client came through perfectly. That was not IIS doing as with a host name nothing came into the server. So why would the LoadMaster stop traffic to a real server? Because it’s down, that’s why, just like Ekkehard has indicated in one of his mails but we didn’t see it then.

) and suggest an upgrade (5.1-24 to 5.1.-74). So I did, reboot and tested some more settings. To make sure I didn’t miss anything I threw a network sniffer (WireShark) against the issue. And guess what? As soon as I added a host name to the IIS web site bindings I didn’t even get any request from my client on that server anymore. So it was definitely being stopped at the Loadmaster. Without it request from a client came through perfectly. That was not IIS doing as with a host name nothing came into the server. So why would the LoadMaster stop traffic to a real server? Because it’s down, that’s why, just like Ekkehard has indicated in one of his mails but we didn’t see it then.

Better check again and sure enough, the health service told me the real servers are down. Hey … that’s new. Did the previous firmware not show this, or just slower? I can’t say for sure. It’s either me being to impatient, a hiccup, the firmware or premature dementia ![]()

Root Cause

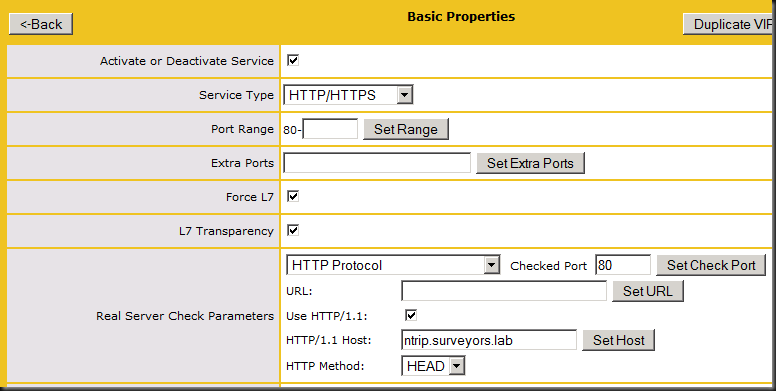

So what happens? The default health check uses HTTP 1.0. You can customize it with a path like /owa or such but in essence it uses the IP address of the real server and guess what. With a Host header name in IIS that isn’t allowed other wise it can’t figure out what website you want to go to if you’re using this feature to run multiple sites on the same IP address and port. So we need to check the health based on host name. Can the LoadMaster do that for us? Yes it can!

The fix

You need to enable HTTP 1.1 and fill out the host name you want to use for health checking. In our case that’s ntrip.surveyor.lab. That’s all there’s to it. Easy as can be if you know. And Ekkehard knew he indicated to this in his quoted mail above.

Lessons Learned

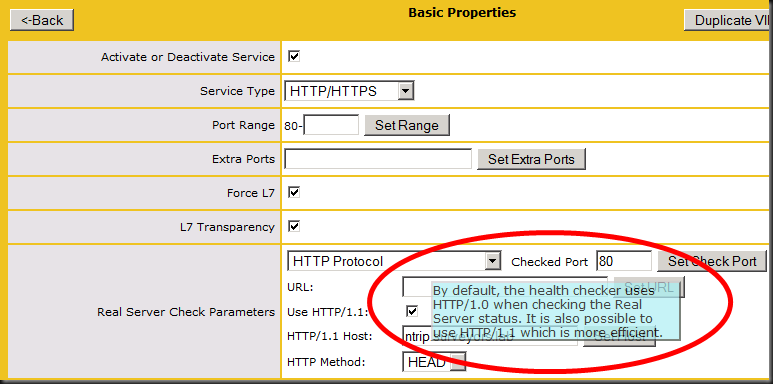

So how did I not know this? Isn’t this documented? Sure enough on page 56 of the LoadMaster manual it says the following:

7 HTTP The LoadMaster opens a TCP connection to the Real Server on the Service port (port80). The LoadMaster sends a HTTP/1.0 HEAD request the server, requesting the page ―/‖. If the server sends a HTTP response with a status code of 2 (200-299, 301, 302, 401) the LoadMaster closes the connection and marks the server as active. If the server fails to respond within the configured response time for the configured number of times or if it responds with a different status code, it is assumed dead. HTTP 1.0 and 1.1 support available, using HTTP 1.1 allows you to check host header enabled web servers.

Typical, you read the exact line of information you need AND understand it after having figured it out. Now linking that information (yes we always read all manuals completely ![]() ) to the situation at hand isn’t always that fast a process but I got there in the end with some help from KEMP Technologies.

) to the situation at hand isn’t always that fast a process but I got there in the end with some help from KEMP Technologies.

One hint is perhaps to mention this is in the handy tips that pop up when you hover over a setting in the LoadMaster console. I rely on this a lot and a mention of “HTTP 1.1 allows you to check host header enabled web servers” might have helped me out. But it’s not there. A very poor excuse I know … ![]()

Host Header Names & HTTP redirection

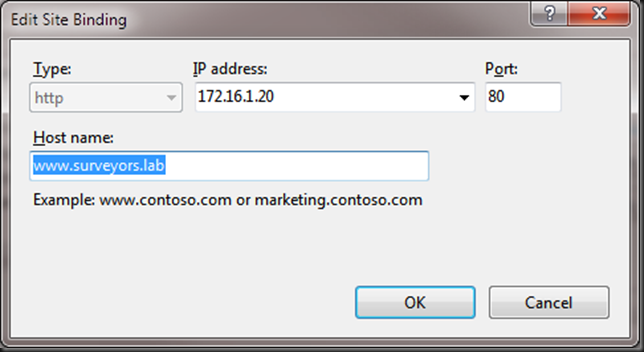

After having fix this issue I proceeded to configure HTTP redirect in IIS 7.5. For this is used two sites. One was just a fake site tied to the www.surveyors.lab hostname in IIS on port 80.

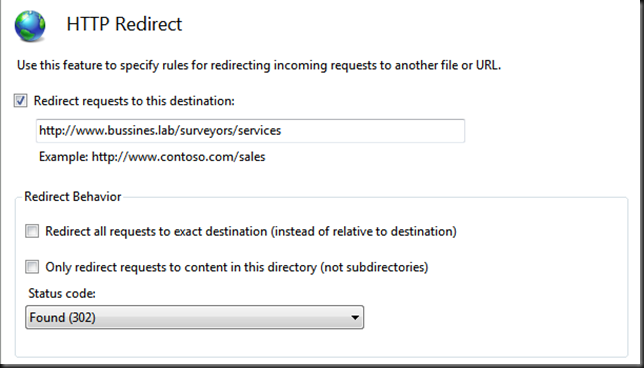

For this site I created a HTTP redirect to www.bussines.lab/surveyors/services. This works just fine as long as you don’t forget the http:// in the redirect URL.

So it has to be http://www.bussines.lab/surveyors/services or you’ll get a funky loop effect looking like this:

http://www.surveyors.lab/www.bussines.lab/surveyors/services/www.bussines.lab/surveyors/services/www.bussines.lab/surveyors/services

Firefox will tell you you have a loop that will never end but Internet Explorer doesn’t, it just fails. You do get that URL as a pointer to the cause of the issue. That is if you can relate it to that.

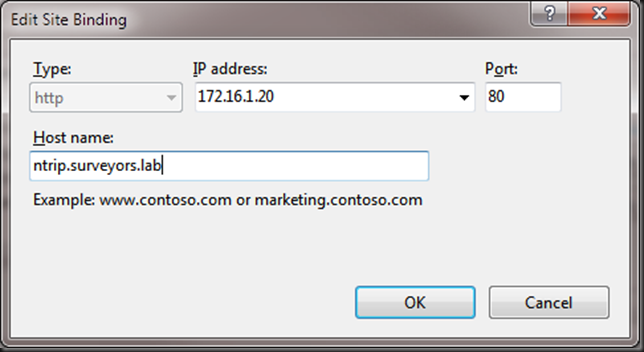

The other was the real site and was configured with following bindings and without redirection.

Don’t forget to do this on all real servers in the farm! The next thing I need to find out is how to health check two host names in the LoadMaster as I have two websites with the same IP address, port but different host names.