Most IT people don’t get a warm and fuzzy feeling when NTFS permissions & “ACLing” are being discussed. While you can do great & very functional things with them, in reality “stuff” happens to file servers over time. Some of it is technical, most of it is what I’ll call “real life”. When it comes to file servers, real life, especially in a business environment, has very little respect, let alone consideration, for NTFS/ACL best practices. So we all end up dealing with the fallout of this phenomenon. If you haven’t, I could state you’re not a real sys admin, but in reality I’m just envious of your avoidance skills.

You don’t want to fight NTFS/ACLs, but if it can’t be avoided you need the best possible knowledge about how it works and the best possible tools to get the job done (in that order).

If you have not heard of SetACL or DelProf2, you might also not have heard of uberAgent for Splunk, let alone of their creator, community rock star Helge Klein. If you’re new to the business I’ll forgive you, but if you’ve been around for a while you have to get to know these tools. His admin tools, both the free and the paid ones, are rock solid and come in extremely handy in day-to-day work. When the shit hits the fan they are priceless.

Helge is an extremely knowledgeable, experienced, talented and creative IT Professional and developer. I’ve met him a couple of times (at E2EVC, where he’s an appreciated speaker) and all I can say is that on top of all that, he’s a great guy with a heart for the community.

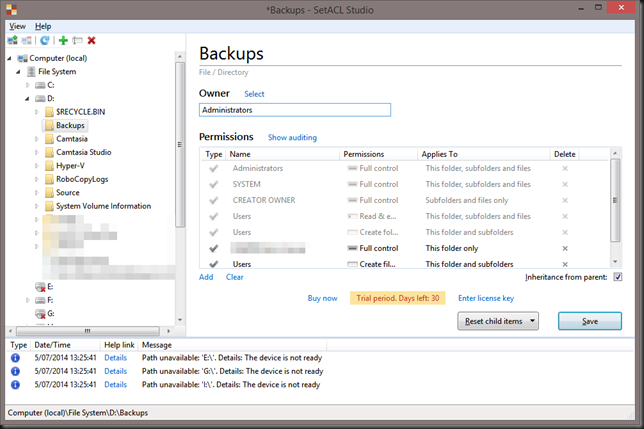

Having the free SetACL.exe available for scripting NTFS permissions is a luxury I cannot do without anymore. On top of that, for a very low price you can buy SetACL Studio. This must be the most efficient GUI tool for managing NTFS permissions / ACLs I have ever come across.

Not long ago I was faced with an MBR to GPT LUN migration on a very large file server. It’s the proverbial file server from hell. We’ve all been there too many times, and even after 15-plus years we still cannot get people to listen, follow some best practices and above all stick to the KISS principle. So you end up having to deal with the fallout of every political, organizational, process and technical mistake you can imagine when it comes to ACLs & NTFS permissions. So what did I reach for? SetACL.exe and SetACL Studio. These are my go-to tools for this.

Check out the web page to read up on what this tool can do for you. It’s very easy to use, intuitive and fast. It can manage ACLs on file systems, the registry, services, printers and even WMI. It gives you an easy way to grant ownership and rights without messing up the existing NTFS permissions. It works on both local and remote systems. Last but not least it has an undo function, how cool is that?! Yup, an admin tool that lets you change your mind. Quite unique.

As an MVP I can get a license for free from Helge Klein, but I recommend that any IT Pro or consultant buy this tool, as it makes a wonderful addition to anyone’s toolkit, saving countless hours, perhaps even days. It pays for itself within the first 15 minutes you use it.

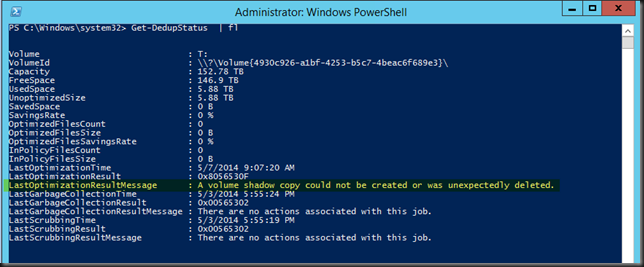

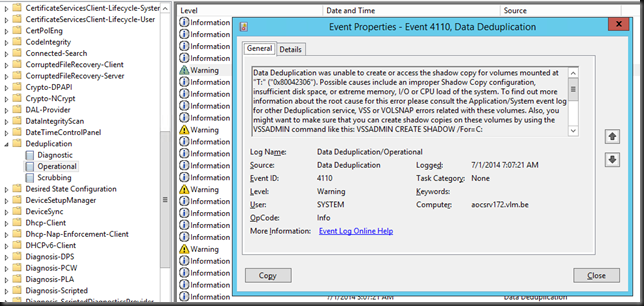

Other useful additions to your toolkit are EditPad Lite (http://www.editpadlite.com/), as it can handle the large (550-800 MB) log files RoboCopy can produce, and some PowerShell scripting skills to parse these files.
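To give an idea of what parsing such a log looks like, here is a minimal sketch. I would normally do this in PowerShell; it's written in Python here purely as an illustration. It streams the file line by line so an 800 MB log never has to fit in memory, and counts errors per Win32 error code. The error line layout in the regular expression (timestamp, `ERROR <code> (0x…)`, action, path) is an assumption based on typical RoboCopy logs, and the names `iter_errors` and `summarize` are made up for this example, so check them against your own log before trusting the numbers.

```python
import re
from collections import Counter
from typing import Iterable, Iterator, NamedTuple

# ASSUMED shape of a RoboCopy error line, for example:
# 2014/03/12 10:15:42 ERROR 5 (0x00000005) Copying File D:\Data\report.docx
ERROR_LINE = re.compile(
    r"^(?P<stamp>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) "
    r"ERROR (?P<code>\d+) \(0x[0-9A-Fa-f]{8}\) "
    r"(?P<action>.+?) (?P<path>(?:[A-Za-z]:\\|\\\\).*)$"
)


class RoboError(NamedTuple):
    stamp: str   # timestamp exactly as written in the log
    code: int    # Win32 error code, e.g. 5 = access denied
    action: str  # what RoboCopy was doing, e.g. "Copying File"
    path: str    # local (D:\...) or UNC (\\server\share\...) path


def iter_errors(lines: Iterable[str]) -> Iterator[RoboError]:
    """Yield one RoboError per matching line; everything else is skipped."""
    for line in lines:
        match = ERROR_LINE.match(line.strip())
        if match:
            yield RoboError(
                match["stamp"], int(match["code"]), match["action"], match["path"]
            )


def summarize(log_path: str, encoding: str = "utf-8") -> Counter:
    """Count errors per error code, reading the file lazily line by line."""
    # The encoding depends on how the log was written (ANSI vs. Unicode),
    # so it is a parameter here; undecodable bytes are replaced, not fatal.
    with open(log_path, encoding=encoding, errors="replace") as handle:
        return Counter(error.code for error in iter_errors(handle))
```

Calling `summarize(r"D:\Logs\migration.log").most_common(5)` (the path is hypothetical) would list the five most frequent error codes, which is usually enough to tell whether you are fighting access denied errors or locked files.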