Can it be done?

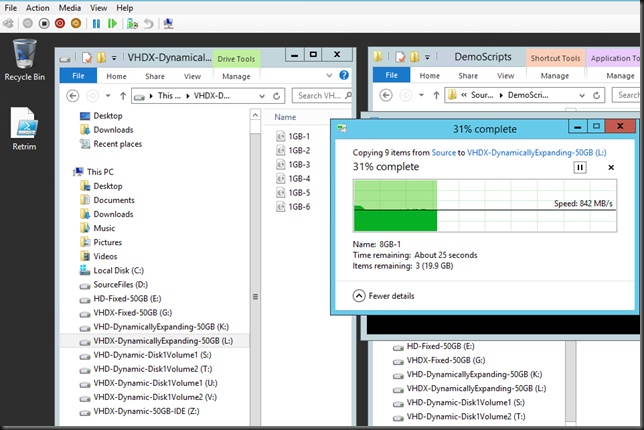

All I can say is that, yes, absolutely, you can virtualize resource intensive workloads. Done right you’ll gain all benefits associated with virtualization and you won’t lose your performance & scalability.

Now I have to stress done right. There are a couple of major causes of problems with virtualization. So let’s look at those and see how a few well placed torpedoes can sink your project fast & effective.

Common Sense

One of them is the lack of common sense. If you currently have 10 SQL Servers with 12 15K RPM SAS Disks in RAID 1 and RAID 10 for the OS, TempDB, Logs & Data files, 64 GB of Memory, dual Quad Core sockets and teamed 1Gbps for resilience and throughput and you want to virtualize them you should expect to deliver the same resources to the virtualized servers. It’s technology people. Hoping that a hypervisor will magically create resources out of thin air is setting yourself up for failure. You cannot imagine how often people use cheap controllers, less disk or slower disks, less bandwidth or CPU cycles and then dump their workload on it. Dynamic memory, NUMA awareness, Storage QoS, etc. cannot rescue a undersized, ill conceived solution. I realize you have read that most physical servers are sitting there idle and let their resources go to waste. If you don’t measure this you can get bitten. You can get ripped to pieces when you’re dealing with virtualizing intensive workloads on Hyper-V based on assumptions.

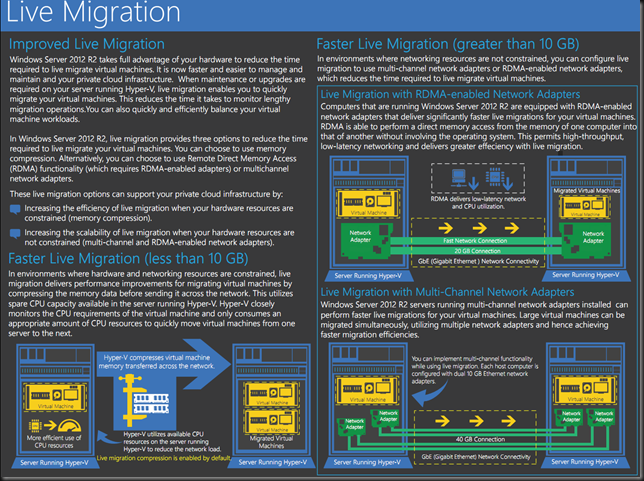

Consider the entire stack

The second torpedo is not understanding the technology stack. The integration part of things or the holistic approach in management consulting speak. The times one could think as a storage admin, network admin, server admin, virtualization admin, SQL DBA, Exchange Engineer is long gone. Really, long gone. You need to think about the entire stack. Know your bottle necks, SPOF, weaknesses, capabilities and how these interact. If you’re still on premise for 100% that means you have to be a datacenter admin, not forgetting you might have multiple of those. And you’d better communicate a bit through DevOps to make sure the developers know that all those resources are not magically super redundant, are not continuously available without any limitation and that these do not have infinite scalability.

Drivers, firmware & bugs can sink your project

Hardware, VAR & ISV support is also a frequent cause of problems. They’ll al tell you that everything is supported. You can learn very fast and very painfully that this is too often not the case or serious bugs are wreaking havoc on your beautiful design. So I live by one of my mantras: “Trust but verify”. However sad it may be, you cannot in good faith trust OEM, VAR and ISVs. I’m not saying they are willfully doing this, but their experience, knowledge isn’t perfect & complete either. You have to do your due diligence. There are too many large scale examples of this right now with Emulex NIC issues around DVMQ. This is a prime example of how you slow acknowledgement of a real issue can ruin your virtualization project for intensive workloads and has been doing so for 9 months and might very well take a year to resolve. Due diligence could have saved you here. A VAR should protect its customers from that, but in reality they often find out when it’s too late. Another example is bugs in storage vendors implementation of ODX causing corruption or extremely slow support for a new version of Windows effectively blocking the use of it in production when you need it for the performance & scalability. I have long learned that losing customers and as such revenue is the only real language vendors understand. So do not be afraid to make hard decisions when you need to.

Knowledge & Due Diligence

Know your hypervisor and core technologies well. Don’t think it’s the same a hardware based deployments, don’t think all options and features work everywhere for everything, don’t think all hypervisor work the same. They do not. Know about Exchange and the rules/limits around virtualization. The same goes for SQL Server and any resource intensive workload you virtualize. Don’t think that the same rules apply to all workloads. There is no substitute for knowledge, experience and hands on testing, the verification part of trust but verify, remember? It goes for you as well!

It can be done

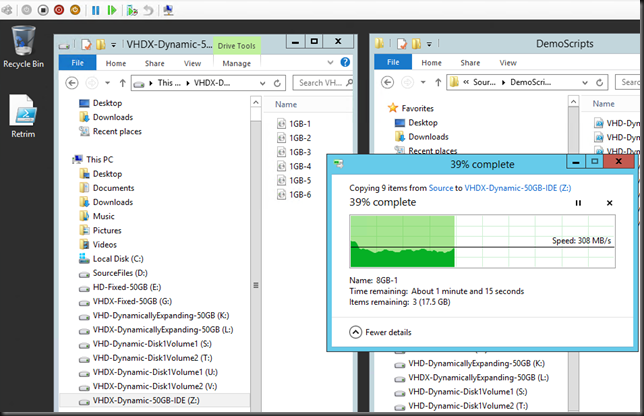

Yes, we can ![]() ! If you want to see some high level examples to simulate your appetite just browse my blog. Here are some pointers to get you started.

! If you want to see some high level examples to simulate your appetite just browse my blog. Here are some pointers to get you started.

Remember , don’t just say “Damn those torpedoes, full speed ahead” but figure out why, where, when and how you’ll get the job done.