When you are optimizing the number of snapshots to be taken for backups or are dealing with storage vendors software that leveraged their hardware VSS provider you some times encounter some requirements that are at odds with virtual machine mobility and dynamic optimization.

For example when backing up multiple virtual machines leveraging a single CSV snapshot you’ll find that:

- Some SAN vendor software requires that the virtual machines in that job are owned by the same host or the backup will fail.

- Backup software can also require that all virtual machines are running on the same node when you want them to be be protected using a single CSV snap shot. The better ones don’t let the backup job fail, they just create multiple snapshots when needed but that’s less efficient and potentially makes you run into issues with your hardware VSS provider.

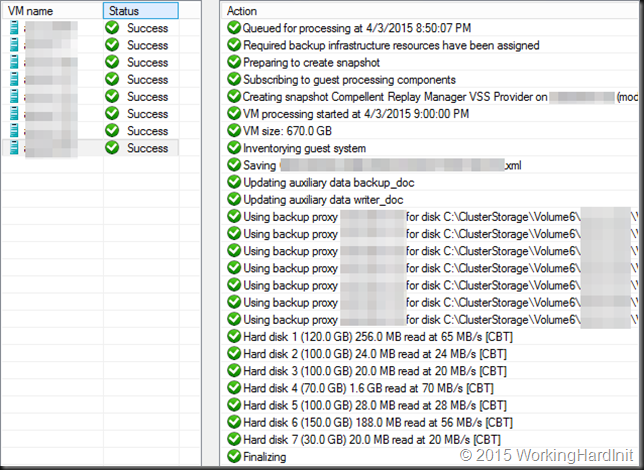

VEEAM B&R v8 in action … 8 SQL Server VMs with multiple disks on the same CSV being backed up by a single hardware VSS writer snapshot (DELL Compellent 6.5.20 / Replay Manager 7.5) and an off host proxy Organizing & orchestrating backups requires some effort, but can lead to great results.

Normally when designing your cluster you balance things out a well as you can. That helps out to reduce the needs for constant dynamic optimizations. You also make sure that if at all possible you keep all files related to a single VM together on the same CSV.

Naturally you’ll have drift. If not you have a very stable environment of are not leveraging the capabilities of your Hyper-V cluster. Mobility, dynamic optimization, high to continuous availability are what we want and we don’t block that to serve the backups. We try to help out to backups as much a possible however. A good design does this.

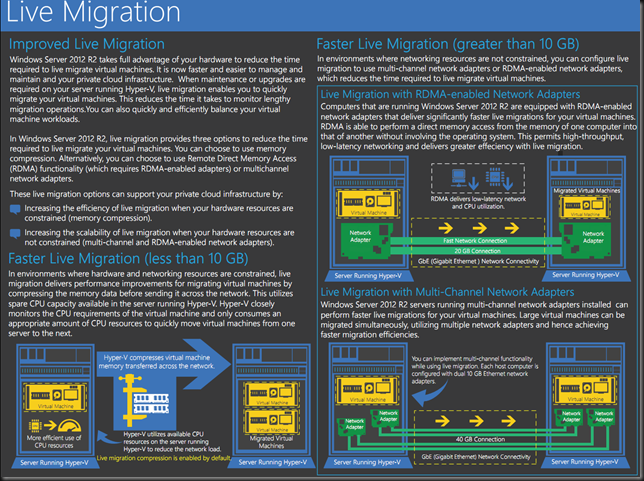

If you’re not running a backup every 15 minutes in a very dynamic environment you can deal with this by live migrating resources to where they need to be in order to optimize backups.

Here’s a little PowerShell snippet that will live migrate all virtual machines on the same CSV to the owner node of that CSV. You can run this as a script prior to the backups starting or you can run it as a weekly scheduled task to prevent the drift from the ideal situation for your backups becoming to huge requiring more VSS snapshots or even failing backups. The exact approach depends on the storage vendors and/or backup software you use in combination with the needs and capabilities of your environment.

cls

$Cluster = Get-Cluster

$AllCSV = Get-ClusterSharedVolume -Cluster $Cluster

ForEach ($CSV in $AllCSV)

{

write-output "$($CSV.Name) is owned by $($CSV.OWnernode.Name)"

#We grab the friendly name of the CSV

$CSVVolumeInfo = $CSV | Select -Expand SharedVolumeInfo

$CSVPath = ($CSVVolumeInfo).FriendlyVolumeName

#We deal with the \ being and escape character for string parsing.

$FixedCSVPath = $CSVPath -replace '\\', '\\'

#We grab all VMs that who's owner node is different from the CSV we're working with

#From those we grab the ones that are located on the CSV we're working with

$VMsToMove = Get-ClusterGroup | ? {($_.GroupType –eq 'VirtualMachine') -and ( $_.OwnerNode -ne $CSV.OWnernode.Name)} | Get-VM | Where-object {($_.path -match $FixedCSVPath)}

ForEach ($VM in $VMsToMove)

{

write-output "`tThe VM $($VM.Name) located on $CSVPath is not running on host $($CSV.OwnerNode.Name) who owns that CSV"

write-output "`tbut on $($VM.Computername). It will be live migrated."

#Live migrate that VM off to the Node that owns the CSV it resides on

Move-ClusterVirtualMachineRole -Name $VM.Name -MigrationType Live -Node $CSV.OWnernode.Name

}

}

Now there is a lot more to discuss, i.e. what and how to optimize for virtual machines that are clustered. For optimal redundancy you’ll have those running on different nodes and CSVs. But even beyond that, you might have the clustered VMs running on different cluster, which is the failure domain. But I get the remark my blogs are wordy and verbose so … that’s for another time