I recently had to do some lab work on a Windows Server 2012 R2 ADFS farm to prep for a migration to Windows Server 2016. Due to some storage shortage and some upgrades and migrations (all hardware in the lab runs Windows Server 2016) I had parked my Windows Server 2012 R2 ADFS farm offline.

So when I copied them back to my cluster and imported them I knew I had to make sure the domain was OK. This is easy enough, just run:

Reset-ComputerMachinePassword [-Credential Mydomain\bigadmin -Server MyDC01

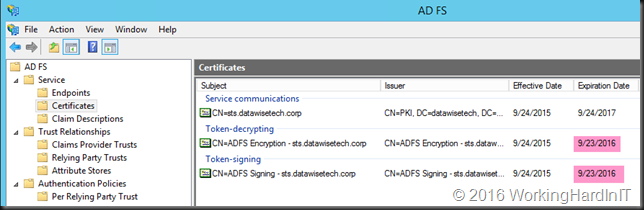

That worked like a charm and soon enough my 2 VMs where up an running happily in the domain. I did have some issues however. My AFDS servers had been of line long enough before the expiration of the token-decrypting and the token-signing certificates to not yet have generate the new certificates for auto renewal and long enough to have them expire already. Darn!

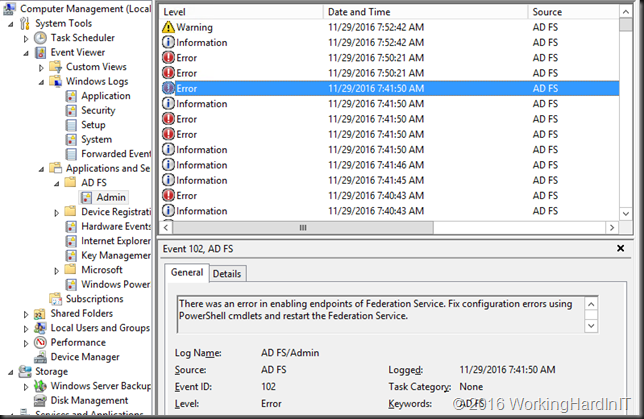

The result was a bunch of errors in the event log as you might expect and appreciate.

An error occurred during an attempt to build the certificate chain for configuration certificate identified by thumbprint ’26AFDC4A226D2605955BF6F844F0866C14B1E82B’. Possible causes are that the certificate has been revoked or certificate is not within its validity period.

The following errors occurred while building the certificate chain:

MSIS2013: A required certificate is not within its validity period when verifying against the current system clock.

But this also raised the question on how to get the ADFS servers back in a working condition. Normally these are generated automatically close to the expiration date of your existing certs (or at the critical threshold you configured). So I disabled / re-enabled auto certificate rollover but does actually does it even kick in if you have already expired? That I don’t know and I really had no time to wait hours or days to see what happens.

Luckily there is a command you can issue to renew the certificates immediately. This is the same command you can use when you have disabled auto rollover and need it re-enabled. That works normally after some patience.

Update-AdfsCertificate -Urgent

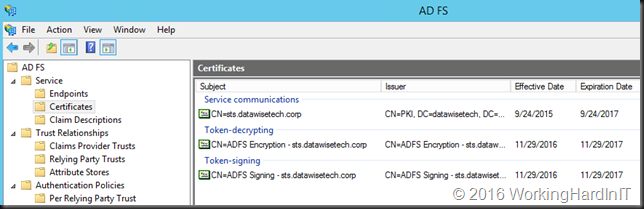

The result was immediate, the self signing certs were renewed.

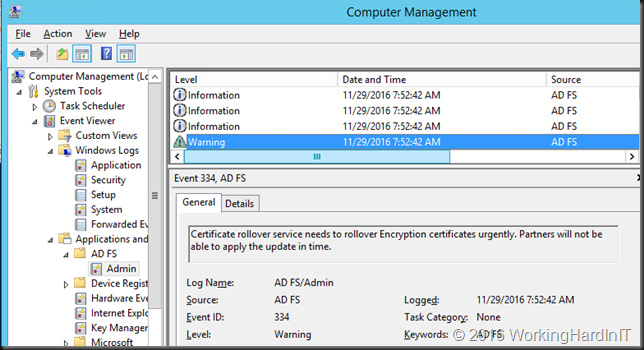

And we can see this in the various entries in the event log

Do note that this command will cause a disruption of the service with your partners until they have refreshed the information from your federation metadata – or in the case this isn’t or can’t be leveraged, manually updated. In my case I had a “service down” situation anyway, but in normal conditions you’d plan this and follow the normal procedure you have in place with any partner that need your ADFS Services.