Introduction

In the cloud it’s all about economies of scale, automation, wipe and (redeploy). Servers are cattle to be destroyed and rebuild when needed. And “needed” here is not like in the past. It has become the default way. But not every one or every workload can achieve that operational level.

There are use cases where I do use in place upgrades for my own infrastructure or for very well known environments where I know the history and health of the services. An example of this is my blog virtual machine. Currently that is running on Azure IAAS, Windows Server 2016, WordPress 4.9.1, MySQL 5.7.20, PHP. It has never been reinstalled.

I upgraded my WordPress versions many times for both small incremental as major releases. I did the same for my MySQL instance used for my blog. The same goes for PHP etc. The principal here is the same. Avoid risk of tech debt, security risks and major maintenance outages by maintaining a modern platform that is patched and up to date. That’s the basis of a well running and secure environment.

In-place upgrade of an Azure virtual machine

In this approach updating the operating system needs to be done as well so my blog went from Windows Server 2012 R2 to Windows Server 2016. That was also an in place upgrade. The normal way of doing in place upgrades, from inside the virtual machine is actually not supported by Azure and you can shoot yourself in the foot by doing so.

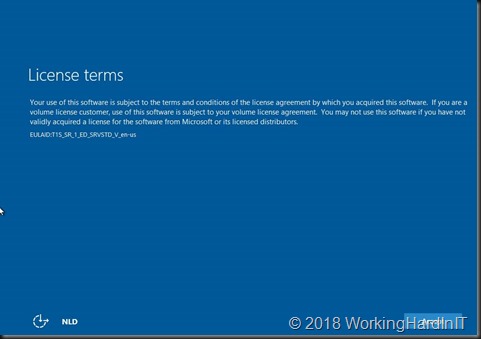

The reason for this is the risk. You do not have console access to an Azure IAAS virtual machine. This means that when things go wrong you cannot fix it. You will have to resort to restoring a backup or other means of disaster recovery. There is also no quick way of applying a checkpoint to the VM to return to a well known situation. Even when all goes well you might lose RDP access (didn’t have it happen yet). But even if all goes well and that normally is the case, you’ll be stuck at the normal OOBE screen where you need to accept the license terms that you get after and upgrade to Windows Server 2016.

The default upgrade will boot to that screen and you cannot confirm it as you have no console access. If you have boot diagnostics enabled for the VM you can see the screen but you cannot get console access. Bummer. So what can you do?

Supported way of doing an in-place upgrade of an Azure virtual machine

Microsoft gives you two supported options to upgrade an Azure IAAS virtual machine in

An in-place system upgrade is not supported on Windows-based Azure VMs. These approaches mitigate the risk. The first is actually a migration to a new virtual machine. The second one is doing the upgrade locally on the VHD disk you download from Azure and then upload to create a new IAAS virtual machine. All this avoids messing up the original virtual machine and VHD.

Unsupported way of doing an in-place upgrade of an Azure virtual machine

There is one way to do it, but if it goes wrong you’ll have to consider the VM as lost. I have tested this approach in a restored backup of the real virtual machine to confirm it works. But, it’s not supported and you assume all risks when you try this.

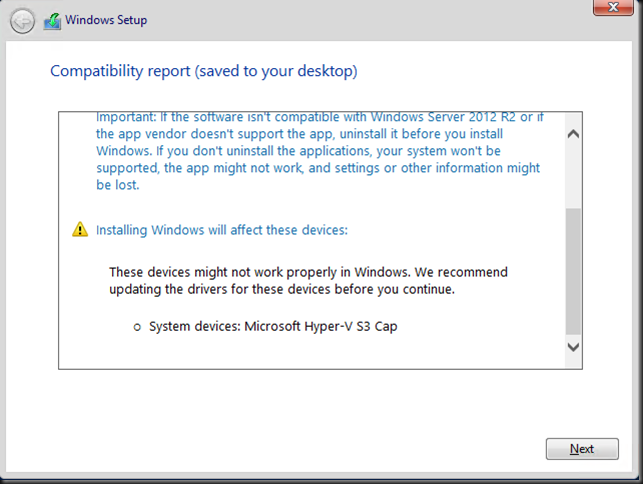

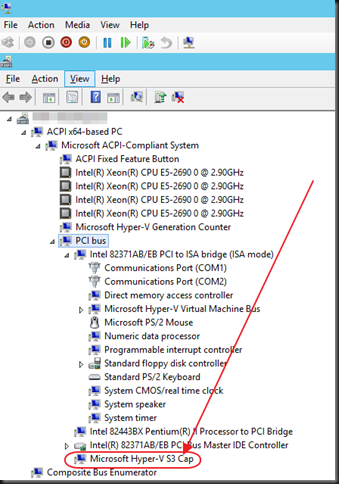

Mount your Windows Server 2016 ISO in the Windows Server 2012 R2 IAAS virtual machine. Open an administrative command prompt and navigate to the drive letter (mine was E![]() of the mounted ISO. From there you launch the upgrade as follows:

of the mounted ISO. From there you launch the upgrade as follows:

E:\setup.exe /auto upgrade /DynamicUpdate enable /pkey CB7KF-BWN84-R7R2Y-793K2-8XDDG /showoobe none

The key is the client KMS key so it can activate and the /showoobe none parameter is where the “magic” is at. This will let you manually navigate through the wizard and the upgrade process will look very familiar (and manual). But the big thing here is that you told the upgrade not to show the OOBE screen where you accept the license terms and as such you won’t get stuck there. So fare I have done this about 5 times and I have never lost RDP access due to the in-place upgrade. So this worked for me. But whatever you do, make sure you have a backup, a way out, ideally multiple ways out!

Note that you can use /Quiet to automate things completely. See Windows Setup Command-Line Options

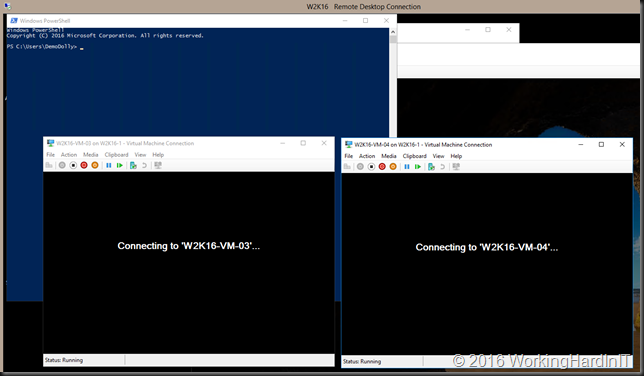

Nested virtualization can give us console access

Since we now have nested virtualization you have an option to fix a broken in-^lace upgrade but by getting console access to a nested VM using the VHD of the VM which upgrade failed. See:

- https://blogs.technet.microsoft.com/mckittrick/troubleshoot-broken-azure-vm-using-nested-virtualization-in-azure-arm/

- https://blogs.technet.microsoft.com/mckittrick/troubleshoot-a-broken-azure-vm-using-nested-virtualization-in-azure-managed-disk/

- https://blogs.technet.microsoft.com/mckittrick/troubleshoot-broken-azure-vm-using-nested-virtualization-in-azure-rdfe/

Conclusion

If Microsoft would give us virtual machine console access or DRAC or ILO capabilities that would take care of this issue. Having said all that, I known that in place upgrades of applications, services or operating systems isn’t the cloud way. I also realize that dogmatic purism doesn’t help in a lot of scenarios so if I can help people leverage Azure even when they have “pre cloud” needs, I will as long as it doesn’t expose them to unmanaged risk. So while I don’t recommend this, you can try it if that’s the only option you have available for your situation. Make sure you have a way out.

IAAS has progressed a tremendous amount over the last couple of years. It still has to get on par with capabilities we have not only become accustomed to but learned to appreciate over over the years. But it’s moving in the right direction making it a valid choice for more use cases. As always when doing cloud, don’t do copy paste, but seek the best way to handle your needs.